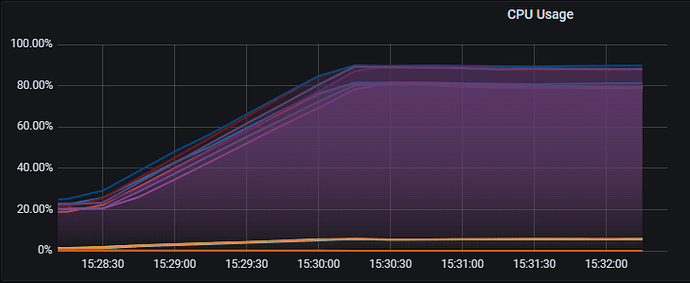

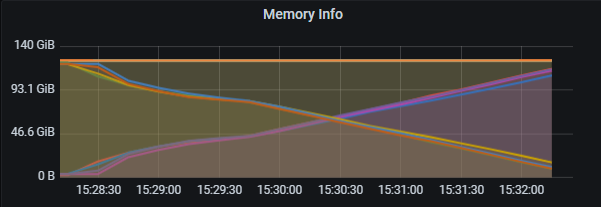

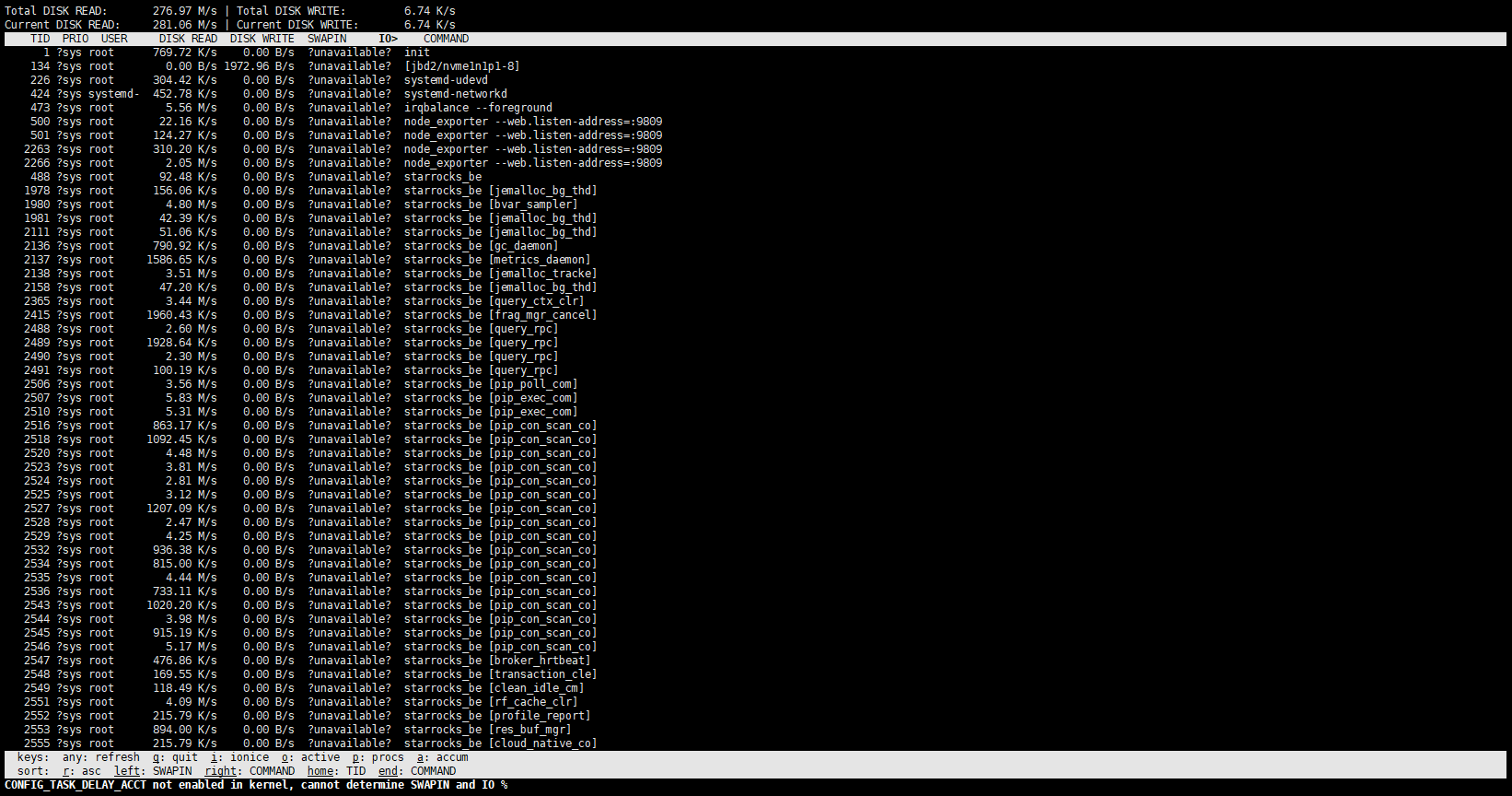

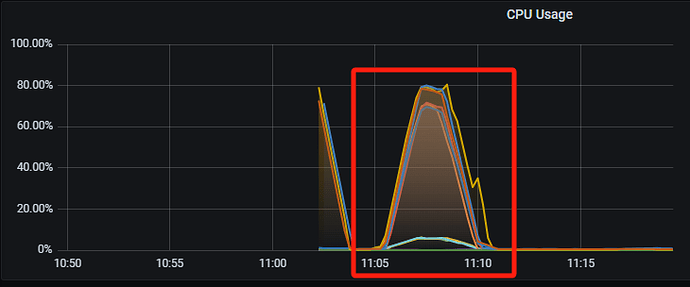

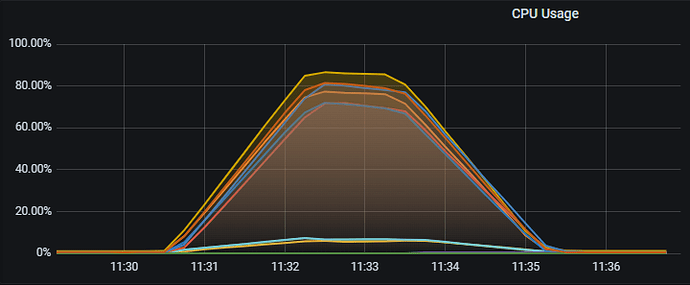

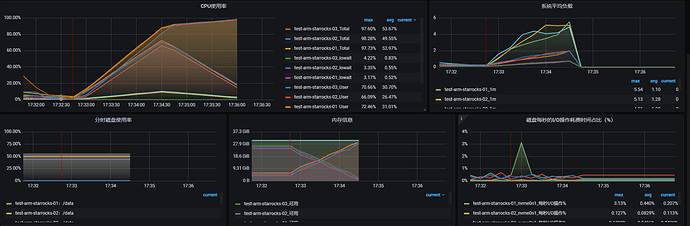

2-4分钟之后会降下去。

这是带 limit 的 explain:

mysql> explain WITH assigned_accounts AS ( SELECT * FROM pt_mid.dwd_spark_daily_option_exercise_assignment_record_df WHERE yyyymmdd = 20250115 and cash_trade_date is NULL LIMIT 1000 ), parsed_data AS ( SELECT yyyymmdd, account_no, account_type, security_subtype, SPLIT(symbol, ' ')[0] AS stock_symbol, business_type, quantity FROM assigned_accounts ) SELECT * FROM parsed_data p LEFT JOIN ice.ice_dwd.dwd_assets_position_sodhis_di a ON p.account_no = a.broker_account_id AND p.stock_symbol = a.symbol and p.yyyymmdd = a.yyyymmdd limit 1000\G

*************************** 1. row ***************************

Explain String: PLAN FRAGMENT 0

*************************** 2. row ***************************

Explain String: OUTPUT EXPRS:82: yyyymmdd | 60: account_no | 61: account_type | 62: security_subtype | 83: expr | 64: business_type | 69: quantity | 84: user_id | 85: broker_id | 86: broker_account_id | 87: process_date | 88: firm | 89: correspondentid | 90: correspondent_office_id | 91: office_code | 92: registered_rep_code | 93: account_type | 94: symbol | 95: cusip | 96: trade_quantity | 97: settle_quantity | 98: currency_code | 99: security_type_code | 100: ticker_type | 101: descp | 102: margin_eligible_code | 103: closing_price | 104: last_activty_date | 105: loc_location | 106: loc_memo | 107: option_amount | 108: conversion_factor | 109: underlying_cusip | 110: option_symbol_root | 111: option_contract_date | 112: strike_price | 113: callput | 114: expiration_delivery_date | 115: position_market_value | 116: customer_source | 117: origin_data_region | 118: yyyymmdd

*************************** 3. row ***************************

Explain String: PARTITION: UNPARTITIONED

*************************** 4. row ***************************

Explain String:

*************************** 5. row ***************************

Explain String: RESULT SINK

*************************** 6. row ***************************

Explain String:

*************************** 7. row ***************************

Explain String: 6:EXCHANGE

*************************** 8. row ***************************

Explain String: limit: 1000

*************************** 9. row ***************************

Explain String:

*************************** 10. row ***************************

Explain String: PLAN FRAGMENT 1

*************************** 11. row ***************************

Explain String: OUTPUT EXPRS:

*************************** 12. row ***************************

Explain String: PARTITION: HASH_PARTITIONED: 86: broker_account_id, 94: symbol, 118: yyyymmdd

*************************** 13. row ***************************

Explain String:

*************************** 14. row ***************************

Explain String: STREAM DATA SINK

*************************** 15. row ***************************

Explain String: EXCHANGE ID: 06

*************************** 16. row ***************************

Explain String: UNPARTITIONED

*************************** 17. row ***************************

Explain String:

*************************** 18. row ***************************

Explain String: 5:HASH JOIN

*************************** 19. row ***************************

Explain String: | join op: RIGHT OUTER JOIN (PARTITIONED)

*************************** 20. row ***************************

Explain String: | colocate: false, reason:

*************************** 21. row ***************************

Explain String: | equal join conjunct: 86: broker_account_id = 60: account_no

*************************** 22. row ***************************

Explain String: | equal join conjunct: 94: symbol = 83: expr

*************************** 23. row ***************************

Explain String: | equal join conjunct: 118: yyyymmdd = 82: yyyymmdd

*************************** 24. row ***************************

Explain String: | limit: 1000

*************************** 25. row ***************************

Explain String: |

*************************** 26. row ***************************

Explain String: |----4:EXCHANGE

*************************** 27. row ***************************

Explain String: | limit: 1000

*************************** 28. row ***************************

Explain String: |

*************************** 29. row ***************************

Explain String: 1:EXCHANGE

*************************** 30. row ***************************

Explain String:

*************************** 31. row ***************************

Explain String: PLAN FRAGMENT 2

*************************** 32. row ***************************

Explain String: OUTPUT EXPRS:

*************************** 33. row ***************************

Explain String: PARTITION: RANDOM

*************************** 34. row ***************************

Explain String:

*************************** 35. row ***************************

Explain String: STREAM DATA SINK

*************************** 36. row ***************************

Explain String: EXCHANGE ID: 04

*************************** 37. row ***************************

Explain String: HASH_PARTITIONED: 60: account_no, 83: expr, 82: yyyymmdd

*************************** 38. row ***************************

Explain String:

*************************** 39. row ***************************

Explain String: 3:Project

*************************** 40. row ***************************

Explain String: | <slot 60> : 60: account_no

*************************** 41. row ***************************

Explain String: | <slot 61> : 61: account_type

*************************** 42. row ***************************

Explain String: | <slot 62> : 62: security_subtype

*************************** 43. row ***************************

Explain String: | <slot 64> : 64: business_type

*************************** 44. row ***************************

Explain String: | <slot 69> : 69: quantity

*************************** 45. row ***************************

Explain String: | <slot 82> : 82: yyyymmdd

*************************** 46. row ***************************

Explain String: | <slot 83> : split(59: symbol, ' ')[0]

*************************** 47. row ***************************

Explain String: | limit: 1000

*************************** 48. row ***************************

Explain String: |

*************************** 49. row ***************************

Explain String: 2:HdfsScanNode

*************************** 50. row ***************************

Explain String: TABLE: dwd_spark_daily_option_exercise_assignment_record_df

*************************** 51. row ***************************

Explain String: PARTITION PREDICATES: 82: yyyymmdd = '20250115'

*************************** 52. row ***************************

Explain String: NON-PARTITION PREDICATES: 80: cash_trade_date IS NULL

*************************** 53. row ***************************

Explain String: partitions=1/1

*************************** 54. row ***************************

Explain String: cardinality=2

*************************** 55. row ***************************

Explain String: avgRowSize=9.0

*************************** 56. row ***************************

Explain String: numNodes=0

*************************** 57. row ***************************

Explain String: limit: 1000

*************************** 58. row ***************************

Explain String:

*************************** 59. row ***************************

Explain String: PLAN FRAGMENT 3

*************************** 60. row ***************************

Explain String: OUTPUT EXPRS:

*************************** 61. row ***************************

Explain String: PARTITION: RANDOM

*************************** 62. row ***************************

Explain String:

*************************** 63. row ***************************

Explain String: STREAM DATA SINK

*************************** 64. row ***************************

Explain String: EXCHANGE ID: 01

*************************** 65. row ***************************

Explain String: HASH_PARTITIONED: 86: broker_account_id, 94: symbol, 118: yyyymmdd

*************************** 66. row ***************************

Explain String:

*************************** 67. row ***************************

Explain String: 0:IcebergScanNode

*************************** 68. row ***************************

Explain String: TABLE: ice.ice_dwd.dwd_assets_position_sodhis_di

*************************** 69. row ***************************

Explain String: cardinality=13170616485

*************************** 70. row ***************************

Explain String: avgRowSize=35.0

*************************** 71. row ***************************

Explain String: numNodes=0

71 rows in set (1.53 sec)

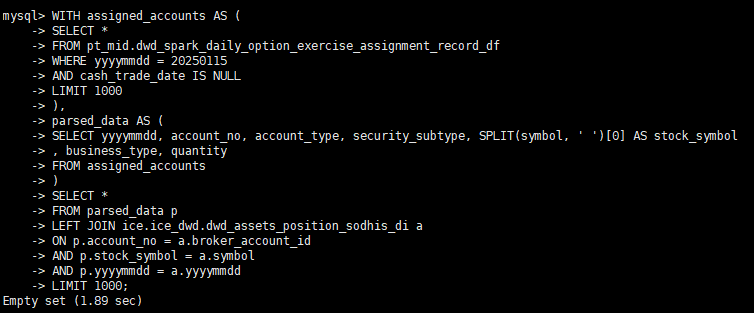

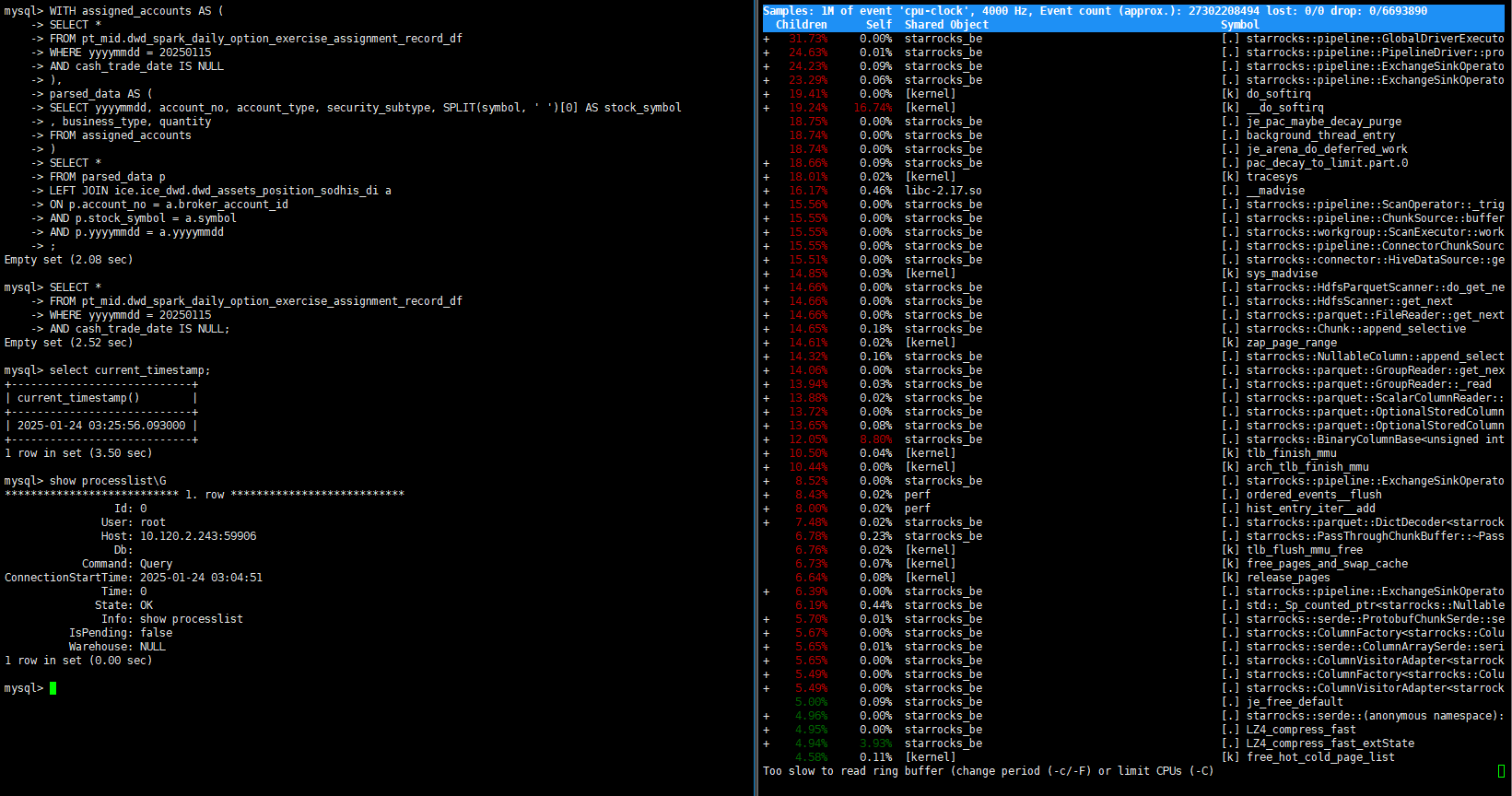

另外,还测试了不带 limit 的查询,现象都是一样的:

WITH assigned_accounts AS (

SELECT *

FROM pt_mid.dwd_spark_daily_option_exercise_assignment_record_df

WHERE yyyymmdd = 20250115

AND cash_trade_date IS NULL

),

parsed_data AS (

SELECT yyyymmdd, account_no, account_type, security_subtype, SPLIT(symbol, ' ')[0] AS stock_symbol

, business_type, quantity

FROM assigned_accounts

)

SELECT *

FROM parsed_data p

LEFT JOIN ice.ice_dwd.dwd_assets_position_sodhis_di a

ON p.account_no = a.broker_account_id

AND p.stock_symbol = a.symbol

AND p.yyyymmdd = a.yyyymmdd

;

SELECT *

FROM pt_mid.dwd_spark_daily_option_exercise_assignment_record_df

WHERE yyyymmdd = 20250115

AND cash_trade_date IS NULL;

这是不带 limit 的 explain:

mysql> explain WITH assigned_accounts AS ( SELECT * FROM pt_mid.dwd_spark_daily_option_exercise_assignment_record_df WHERE yyyymmdd = 20250115 AND cash_trade_date IS NULL ), parsed_data AS ( SELECT yyyymmdd, account_no, account_type, security_subtype, SPLIT(symbol, ' ')[0] AS stock_symbol , business_type, quantity FROM assigned_accounts ) SELECT * FROM parsed_data p LEFT JOIN ice.ice_dwd.dwd_assets_position_sodhis_di a ON p.account_no = a.broker_account_id AND p.stock_symbol = a.symbol AND p.yyyymmdd = a.yyyymmdd\G

*************************** 1. row ***************************

Explain String: PLAN FRAGMENT 0

*************************** 2. row ***************************

Explain String: OUTPUT EXPRS:82: yyyymmdd | 60: account_no | 61: account_type | 62: security_subtype | 83: expr |64: business_type | 69: quantity | 84: user_id | 85: broker_id | 86: broker_account_id | 87: process_date | 88: firm | 89: correspondentid | 90: correspondent_office_id | 91: office_code | 92: registered_rep_code | 93: account_type | 94: symbol | 95: cusip | 96: trade_quantity | 97: settle_quantity | 98: currency_code | 99: security_type_code| 100: ticker_type | 101: descp | 102: margin_eligible_code | 103: closing_price | 104: last_activty_date | 105: loc_location | 106: loc_memo | 107: option_amount | 108: conversion_factor | 109: underlying_cusip | 110: option_symbol_root | 111: option_contract_date | 112: strike_price | 113: callput | 114: expiration_delivery_date | 115: position_market_value | 116: customer_source | 117: origin_data_region | 118: yyyymmdd

*************************** 3. row ***************************

Explain String: PARTITION: UNPARTITIONED

*************************** 4. row ***************************

Explain String:

*************************** 5. row ***************************

Explain String: RESULT SINK

*************************** 6. row ***************************

Explain String:

*************************** 7. row ***************************

Explain String: 6:EXCHANGE

*************************** 8. row ***************************

Explain String:

*************************** 9. row ***************************

Explain String: PLAN FRAGMENT 1

*************************** 10. row ***************************

Explain String: OUTPUT EXPRS:

*************************** 11. row ***************************

Explain String: PARTITION: HASH_PARTITIONED: 86: broker_account_id, 94: symbol, 118: yyyymmdd

*************************** 12. row ***************************

Explain String:

*************************** 13. row ***************************

Explain String: STREAM DATA SINK

*************************** 14. row ***************************

Explain String: EXCHANGE ID: 06

*************************** 15. row ***************************

Explain String: UNPARTITIONED

*************************** 16. row ***************************

Explain String:

*************************** 17. row ***************************

Explain String: 5:HASH JOIN

*************************** 18. row ***************************

Explain String: | join op: RIGHT OUTER JOIN (PARTITIONED)

*************************** 19. row ***************************

Explain String: | colocate: false, reason:

*************************** 20. row ***************************

Explain String: | equal join conjunct: 86: broker_account_id = 60: account_no

*************************** 21. row ***************************

Explain String: | equal join conjunct: 94: symbol = 83: expr

*************************** 22. row ***************************

Explain String: | equal join conjunct: 118: yyyymmdd = 82: yyyymmdd

*************************** 23. row ***************************

Explain String: |

*************************** 24. row ***************************

Explain String: |----4:EXCHANGE

*************************** 25. row ***************************

Explain String: |

*************************** 26. row ***************************

Explain String: 1:EXCHANGE

*************************** 27. row ***************************

Explain String:

*************************** 28. row ***************************

Explain String: PLAN FRAGMENT 2

*************************** 29. row ***************************

Explain String: OUTPUT EXPRS:

*************************** 30. row ***************************

Explain String: PARTITION: RANDOM

*************************** 31. row ***************************

Explain String:

*************************** 32. row ***************************

Explain String: STREAM DATA SINK

*************************** 33. row ***************************

Explain String: EXCHANGE ID: 04

*************************** 34. row ***************************

Explain String: HASH_PARTITIONED: 60: account_no, 83: expr, 82: yyyymmdd

*************************** 35. row ***************************

Explain String:

*************************** 36. row ***************************

Explain String: 3:Project

*************************** 37. row ***************************

Explain String: | <slot 60> : 60: account_no

*************************** 38. row ***************************

Explain String: | <slot 61> : 61: account_type

*************************** 39. row ***************************

Explain String: | <slot 62> : 62: security_subtype

*************************** 40. row ***************************

Explain String: | <slot 64> : 64: business_type

*************************** 41. row ***************************

Explain String: | <slot 69> : 69: quantity

*************************** 42. row ***************************

Explain String: | <slot 82> : 82: yyyymmdd

*************************** 43. row ***************************

Explain String: | <slot 83> : split(59: symbol, ' ')[0]

*************************** 44. row ***************************

Explain String: |

*************************** 45. row ***************************

Explain String: 2:HdfsScanNode

*************************** 46. row ***************************

Explain String: TABLE: dwd_spark_daily_option_exercise_assignment_record_df

*************************** 47. row ***************************

Explain String: PARTITION PREDICATES: 82: yyyymmdd = '20250115'

*************************** 48. row ***************************

Explain String: NON-PARTITION PREDICATES: 80: cash_trade_date IS NULL

*************************** 49. row ***************************

Explain String: partitions=1/1

*************************** 50. row ***************************

Explain String: cardinality=2

*************************** 51. row ***************************

Explain String: avgRowSize=9.0

*************************** 52. row ***************************

Explain String: numNodes=0

*************************** 53. row ***************************

Explain String:

*************************** 54. row ***************************

Explain String: PLAN FRAGMENT 3

*************************** 55. row ***************************

Explain String: OUTPUT EXPRS:

*************************** 56. row ***************************

Explain String: PARTITION: RANDOM

*************************** 57. row ***************************

Explain String:

*************************** 58. row ***************************

Explain String: STREAM DATA SINK

*************************** 59. row ***************************

Explain String: EXCHANGE ID: 01

*************************** 60. row ***************************

Explain String: HASH_PARTITIONED: 86: broker_account_id, 94: symbol, 118: yyyymmdd

*************************** 61. row ***************************

Explain String:

*************************** 62. row ***************************

Explain String: 0:IcebergScanNode

*************************** 63. row ***************************

Explain String: TABLE: ice.ice_dwd.dwd_assets_position_sodhis_di

*************************** 64. row ***************************

Explain String: cardinality=13170616485

*************************** 65. row ***************************

Explain String: avgRowSize=35.0

*************************** 66. row ***************************

Explain String: numNodes=0

66 rows in set (1.48 sec)