fe.audit.log

{

"Timestamp": "1737877769532",

"Client": "10.60.22.0:45684",

"User": "root",

"AuthorizedUser": "'root'@'%'",

"ResourceGroup": "default_wg",

"Catalog": "unified_catalog",

"Db": "",

"State": "EOF",

"ErrorCode": "",

"Time": "4076",

"ScanBytes": "17020",

"ScanRows": "0",

"ReturnRows": "0",

"CpuCostNs": "15565590",

"MemCostBytes": "81460432",

"StmtId": "4",

"QueryId": "12aa3836-dbba-11ef-8af1-06ae1418ac3a",

"IsQuery": "true",

"feIp": "10.60.22.0",

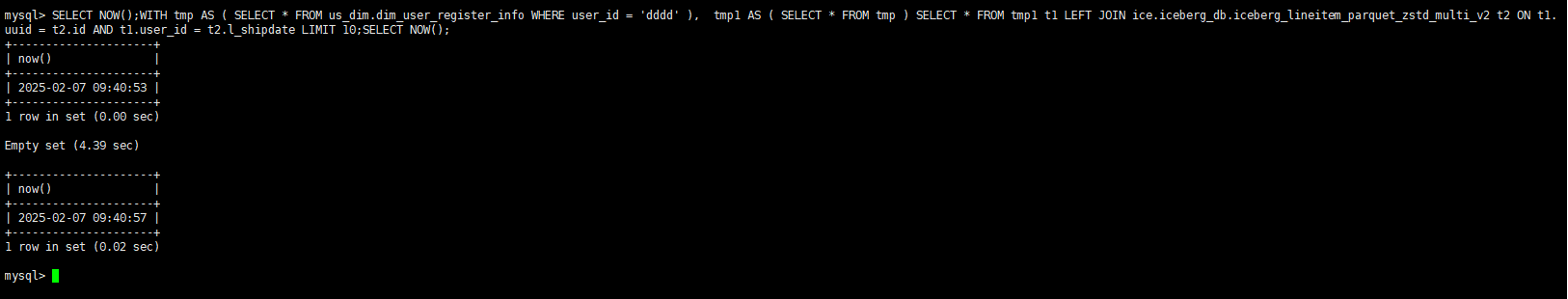

"Stmt": "with tmp as( select * from us_dim.dim_user_register_info where user_id ='dddd' ), tmp1 as(select * from tmp) select * from tmp1 t1 left join ice.iceberg_db.iceberg_lineitem_parquet_zstd_multi_v2 t2 on t1.uuid=t2.id and t1.user_id=t2.l_shipdate limit 10",

"Digest": "",

"PlanCpuCost": "1.6531322052E12",

"PlanMemCost": "896.0",

"PendingTimeMs": "43",

"BigQueryLogCPUSecondThreshold": "480",

"BigQueryLogScanBytesThreshold": "10737418240",

"BigQueryLogScanRowsThreshold": "1000000000",

"Warehouse": "default_warehouse",

"CandidateMVs": null,

"HitMvs": null,

"IsForwardToLeader": "false"

}

fe.internal.log

2025-01-26 07:49:32.681Z INFO (stats-cache-refresher-6|115) [StatisticExecutor.executeDQL():486] statistic execute query | QueryId [1487f8f9-dbba-11ef-8af1-06ae1418ac3a] | SQL: SELECT cast(7 as INT), column_name, cast(json_object("buckets", buckets, "mcv", mcv) as varchar) FROM external_histogram_statistics WHERE table_uuid = 'unified_catalog.us_dim.dim_user_register_info.1717579531' and column_name in ('channel_3_en', 'have_nick_name', 'channel_3', 'register_os', 'channel_4', 'source_channel', 'have_personal_signature', 'channel_1', 'channel_2', 'register_type', 'app_channel', 'channel_1_en', 'user_id', 'invite_source', 'mktb_id', 'channel_4_en', 'register_time', 'register_account_status', 'channel_2_en', 'uuid', 'update_time', 'register_address', 'register_device_id', 'register_platform', 'have_set_picture', 'final_channel', 'invite_user_id', 'register_version')

2025-01-26 07:49:32.897Z INFO (stats-cache-refresher-0|109) [StatisticExecutor.executeDQL():486] statistic execute query | QueryId [14a9d8df-dbba-11ef-8af1-06ae1418ac3a] | SQL: SELECT cast(7 as INT), column_name, cast(json_object("buckets", buckets, "mcv", mcv) as varchar) FROM external_histogram_statistics WHERE table_uuid = 'ice.iceberg_db.iceberg_lineitem_parquet_zstd_multi_v2.c9d1437b-2766-4e58-a1b7-863257f17e3e' and column_name in ('ext22', 'ext21', 'l_linestatus', 'ext24', 'ext23', 'ext20', 'ext29', 'ext26', 'ext25', 'ext28', 'ext27', 'l_returnflag', 'ext11', 'ext10', 'l_comment', 'ext13', 'ext12', 'l_extendedprice', 'l_quantity', 'ext50', 'ext19', 'ext18', 'ext15', 'ext14', 'ext17', 'ext16', 'l_suppkey', 'l_orderkey', 'l_linenumber', 'ext44', 'id', 'ext43', 'ext46', 'ext45', 'ext42', 'ext41', 'ext48', 'ext47', 'ext49', 'ext9', 'l_partkey', 'ext6', 'ext5', 'ext8', 'ext7', 'ext2', 'ext1', 'l_commitdate', 'ext4', 'ext3', 'l_discount', 'l_tax', 'ext30', 'l_shipmode', 'l_shipdate', 'l_receiptdate', 'l_shipinstruct')

2025-01-26 07:49:32.910Z INFO (stats-cache-refresher-8|117) [StatisticExecutor.executeDQL():486] statistic execute query | QueryId [14a9158a-dbba-11ef-8af1-06ae1418ac3a] | SQL: SELECT cast(6 as INT), column_name, sum(row_count),cast(sum(data_size) as bigint), hll_union_agg(ndv), sum(null_count), cast(max(cast(max as string)) as string), cast(min(cast(min as string)) as string) FROM external_column_statistics WHERE table_uuid = "unified_catalog.us_dim.dim_user_register_info.1717579531" and column_name in ("channel_3_en", "have_nick_name", "channel_3", "register_os", "channel_4", "source_channel", "have_personal_signature", "channel_1", "channel_2", "register_type", "app_channel", "channel_1_en", "user_id", "invite_source", "mktb_id", "channel_4_en", "register_time", "register_account_status", "channel_2_en", "uuid", "update_time", "register_address", "register_device_id", "register_platform", "have_set_picture", "final_channel", "invite_user_id", "register_version") GROUP BY table_uuid, column_name

2025-01-26 07:49:32.931Z INFO (stats-cache-refresher-3|112) [StatisticExecutor.executeDQL():486] statistic execute query | QueryId [14aa4e10-dbba-11ef-8af1-06ae1418ac3a] | SQL: SELECT cast(6 as INT), column_name, sum(row_count),cast(sum(data_size) as bigint), hll_union_agg(ndv), sum(null_count), cast(max(cast(max as string)) as string), cast(min(cast(min as string)) as string) FROM external_column_statistics WHERE table_uuid = "ice.iceberg_db.iceberg_lineitem_parquet_zstd_multi_v2.c9d1437b-2766-4e58-a1b7-863257f17e3e" and column_name in ("ext22", "ext21", "l_linestatus", "ext24", "ext23", "ext20", "ext29", "ext26", "ext25", "ext28", "ext27", "l_returnflag", "ext11", "ext10", "l_comment", "ext13", "ext12", "ext50", "ext19", "ext18", "ext15", "ext14", "ext17", "ext16", "l_orderkey", "ext44", "id", "ext43", "ext46", "ext45", "ext42", "ext41", "ext48", "ext47", "ext49", "ext9", "ext6", "ext5", "ext8", "ext7", "ext2", "ext1", "l_commitdate", "ext4", "ext3", "ext30", "l_shipmode", "l_shipdate", "l_receiptdate", "l_shipinstruct") GROUP BY table_uuid, column_name UNION ALL SELECT cast(6 as INT), column_name, sum(row_count), cast(sum(data_size) as bigint), hll_union_agg(ndv), sum(null_count), cast(max(cast(max as double)) as string), cast(min(cast(min as double)) as string) FROM external_column_statistics WHERE table_uuid = "ice.iceberg_db.iceberg_lineitem_parquet_zstd_multi_v2.c9d1437b-2766-4e58-a1b7-863257f17e3e" and column_name in ("l_extendedprice", "l_quantity", "l_discount", "l_tax") GROUP BY table_uuid, column_name UNION ALL SELECT cast(6 as INT), column_name, sum(row_count), cast(sum(data_size) as bigint), hll_union_agg(ndv), sum(null_count), cast(max(cast(max as bigint)) as string), cast(min(cast(min as bigint)) as string) FROM external_column_statistics WHERE table_uuid = "ice.iceberg_db.iceberg_lineitem_parquet_zstd_multi_v2.c9d1437b-2766-4e58-a1b7-863257f17e3e" and column_name in ("l_suppkey", "l_linenumber", "l_partkey") GROUP BY table_uuid, column_name
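
One way to line the two logs up is to convert the audit record's `Timestamp` into UTC and check whether the `fe.internal.log` entries fall inside the query's execution window. The sketch below is a minimal illustration, assuming `Timestamp` is the query start in epoch milliseconds and `Time` is the elapsed time in milliseconds. The helper names `query_window` and `parse_internal` are hypothetical and not part of any StarRocks tooling.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

# Fields copied from the fe.audit.log record above.
# Assumption: both values are expressed in milliseconds.
audit = {"Timestamp": "1737877769532", "Time": "4076"}

# Timestamps copied from the four fe.internal.log lines above.
internal_times = [
    "2025-01-26 07:49:32.681Z",
    "2025-01-26 07:49:32.897Z",
    "2025-01-26 07:49:32.910Z",
    "2025-01-26 07:49:32.931Z",
]


def query_window(rec):
    """Return (start, end) in UTC, assuming Timestamp marks the query start."""
    # Integer millisecond arithmetic avoids float rounding on the epoch value.
    start = EPOCH + timedelta(milliseconds=int(rec["Timestamp"]))
    end = start + timedelta(milliseconds=int(rec["Time"]))
    return start, end


def parse_internal(ts):
    """Parse an fe.internal.log timestamp such as '2025-01-26 07:49:32.681Z'."""
    return datetime.strptime(ts, "%Y-%m-%d %H:%M:%S.%fZ").replace(tzinfo=timezone.utc)


start, end = query_window(audit)
inside = [start <= parse_internal(ts) <= end for ts in internal_times]
print(start.isoformat(), end.isoformat(), inside)
```

Under these assumptions the window runs from 07:49:29.532Z to 07:49:33.608Z, and all four statistics queries fall inside it. Overlap in time alone does not show that the audited statement triggered them.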