Version: 3.3.0

Configuration: FE 16c 32g

CN 32c 128g

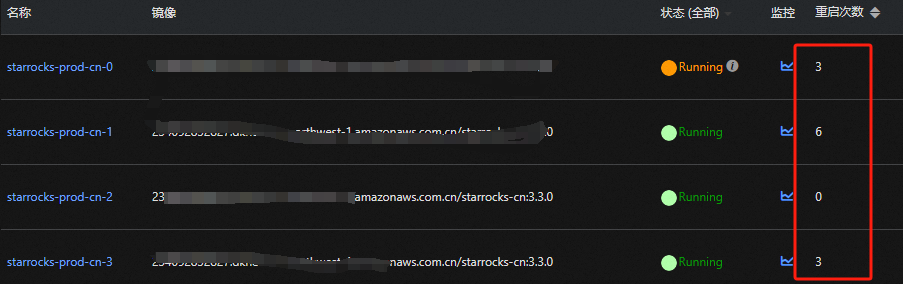

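I first paused all Routine Load jobs and then resumed them in bulk. After that, the CN nodes started restarting frequently, one after another, with errors saying the query exceeded the memory limit.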

I don't really understand why. It normally runs fine and has never had any problems before.

W0801 12:47:24.572686 532 fragment_executor.cpp:733] Prepare fragment failed: 2553dcf5-4fc1-11ef-aaba-d67f2872cc74 fragment_instance_id=2553dcf5-4fc1-11ef-aaba-d67f2872cc75 is_stream_pipeline=0 backend_num=0 fragment= TPlanFragment(plan=TPlan(nodes=[TPlanNode(node_id=25, node_type=AGGREGATION_NODE, num_children=1, limit=-1, row_tuples=[16], nullable_tuples=[0], conjuncts=, compact_data=0, hash_join_node=, agg_node=TAggregationNode(grouping_exprs=[], aggregate_functions=[TExpr(nodes=[TExprNode(node_type=AGG_EXPR, type=TTypeDesc(types=[TTypeNode(type=SCALAR, scalar_type=TScalarType(type=BIGINT, len=, precision=, scale=), struct_fields=, is_named=)]), opcode=, num_children=1, agg_expr=TAggregateExpr(is_merge_agg=0), bool_literal=, case_expr=, date_literal=, float_literal=, int_literal=, in_predicate=, is_null_pred=, like_pred=, literal_pred=, slot_ref=, string_literal=, tuple_is_null_pred=, info_func=, decimal_literal=, output_scale=-1, fn_call_expr=, large_int_literal=, output_column=, output_type=, vector_opcode=, fn=TFunction(name=TFunctionName(db_name=, function_name=count), binary_type=BUILTIN, arg_types=[TTypeDesc(types=[TTypeNode(type=SCALAR, scalar_type=TScalarType(type=TINYINT, len=, precision=, scale=), struct_fields=, is_named=)])], ret_type=TTypeDesc(types=[TTypeNode(type=SCALAR, scalar_type=TScalarType(type=BIGINT, len=, precision=, scale=), struct_fields=, is_named=)]), has_var_args=0, comment=, signature=, hdfs_location=, scalar_fn=, aggregate_fn=TAggregateFunction(intermediate_type=TTypeDesc(types=[TTypeNode(type=SCALAR, scalar_type=TScalarType(type=BIGINT, len=, precision=, scale=), struct_fields=, is_named=)]), update_fn_symbol=, init_fn_symbol=, serialize_fn_symbol=, merge_fn_symbol=, finalize_fn_symbol=, get_value_fn_symbol=, remove_fn_symbol=, is_analytic_only_fn=0, symbol=, is_asc_order=, nulls_first=, is_distinct=0), id=0, checksum=, fid=0, table_fn=, could_apply_dict_optimize=0, ignore_nulls=0, isolated=), vararg_start_idx=, child_type=, 
vslot_ref=, used_subfield_names=, binary_literal=, copy_flag=, check_is_out_of_bounds=, use_vectorized=, has_nullable_child=0, is_nullable=0, child_type_desc=, is_monotonic=0, dict_query_expr=, dictionary_get_expr=, is_index_only_filter=0), TExprNode(node_type=INT_LITERAL, type=TTypeDesc(types=[TTypeNode(type=SCALAR, scalar_type=TScalarType(type=TINYINT, len=, precision=, scale=), struct_fields=, is_named=)]), opcode=, num_children=0, agg_expr=, bool_literal=, case_expr=, date_literal=, float_literal=, int_literal=TIntLiteral(value=0), in_predicate=, is_null_pred=, like_pred=, literal_pred=, slot_ref=, string_literal=, tuple_is_null_pred=, info_func=, decimal_literal=, output_scale=-1, fn_call_expr=, large_int_literal=, output_column=, output_type=, vector_opcode=, fn=, vararg_start_idx=, child_type=, vslot_ref=, used_subfield_names=, binary_literal=, copy_flag=, check_is_out_of_bounds=, use_vectorized=, has_nullable_child=0, is_nullable=0, child_type_desc=, is_monotonic=1, dict_query_expr=, dictionary_get_expr=, is_index_only_filter=0)])], intermediate_tuple_id=16, output_tuple_id=16, need_finalize=1, use_streaming_preaggregation=0, has_outer_join_child=1, streaming_preaggregation_mode=AUTO, sql_grouping_keys=, sql_aggregate_functions=count(0), agg_func_set_version=3, intermediate_aggr_exprs=[TExpr(nodes=[TExprNode(node_type=SLOT_REF, type=TTypeDesc(types=[TTypeNode(type=SCALAR, scalar_type=TScalarType(type=BIGINT, len=, precision=, scale=), struct_fields=, is_named=)]), opcode=, num_children=0, agg_expr=, bool_literal=, case_expr=, date_literal=, float_literal=, int_literal=, in_predicate=, is_null_pred=, like_pred=, literal_pred=, slot_ref=TSlotRef(slot_id=255, tuple_id=16), string_literal=, tuple_is_null_pred=, info_func=, decimal_literal=, output_scale=-1, fn_call_expr=, large_int_literal=, output_column=-1, output_type=, vector_opcode=, fn=, vararg_start_idx=, child_type=, vslot_ref=, used_subfield_names=, binary_literal=, copy_flag=, check_is_out_of_bounds=, 
use_vectorized=, has_nullable_child=0, is_nullable=0, child_type_desc=, is_monotonic=1, dict_query_expr=, dictionary_get_expr=, is_index_only_filter=0)])], interpolate_passthrough=0, use_sort_agg=0, use_per_bucket_optimize=0, enable_pipeline_share_limit=1), sort_node=, merge_node=, exchange_node=, mysql_scan_node=, olap_scan_node=, file_scan_node=, schema_scan_node=, meta_scan_node=, analytic_node=, union_node=, resource_profile=, es_scan_node=, repeat_node=, assert_num_rows_node=, intersect_node=, except_node=, merge_join_node=, use_vectorized=, hdfs_scan_node=, project_node=, table_function_node=, probe_runtime_filters=, decode_node=, local_rf_waiting_set={}, filter_null_value_columns=, need_create_tuple_columns=0, jdbc_scan_node=, connector_scan_node=, cross_join_node=, lake_scan_node=, nestloop_join_node=, stream_scan_node=, stream_join_node=, stream_agg_node=), TPlanNode(node_id=24, node_type=EXCHANGE_NODE, num_children=0, limit=-1, row_tuples=[15], nullable_tuples=[0], conjuncts=, compact_data=0, hash_join_node=, agg_node=, sort_node=, merge_node=, exchange_node=TExchangeNode(input_row_tuples=[15], sort_info=, offset=, partition_type=UNPARTITIONED, enable_parallel_merge=1), mysql_scan_node=, olap_scan_node=, file_scan_node=, schema_scan_node=, meta_scan_node=, analytic_node=, union_node=, resource_profile=, es_scan_node=, repeat_node=, assert_num_rows_node=, intersect_node=, except_node=, merge_join_node=, use_vectorized=, hdfs_scan_node=, project_node=, table_function_node=, probe_runtime_filters=, decode_node=, local_rf_waiting_set={}, filter_null_value_columns=, need_create_tuple_columns=0, jdbc_scan_node=, connector_scan_node=, cross_join_node=, lake_scan_node=, nestloop_join_node=, stream_scan_node=, stream_join_node=, stream_agg_node=)]), output_exprs=[TExpr(nodes=[TExprNode(node_type=SLOT_REF, type=TTypeDesc(types=[TTypeNode(type=SCALAR, scalar_type=TScalarType(type=BIGINT, len=, precision=, scale=), struct_fields=, is_named=)]), opcode=, 
num_children=0, agg_expr=, bool_literal=, case_expr=, date_literal=, float_literal=, int_literal=, in_predicate=, is_null_pred=, like_pred=, literal_pred=, slot_ref=TSlotRef(slot_id=255, tuple_id=16), string_literal=, tuple_is_null_pred=, info_func=, decimal_literal=, output_scale=-1, fn_call_expr=, large_int_literal=, output_column=-1, output_type=, vector_opcode=, fn=, vararg_start_idx=, child_type=, vslot_ref=, used_subfield_names=, binary_literal=, copy_flag=, check_is_out_of_bounds=, use_vectorized=, has_nullable_child=0, is_nullable=0, child_type_desc=, is_monotonic=1, dict_query_expr=, dictionary_get_expr=, is_index_only_filter=0)])], output_sink=TDataSink(type=RESULT_SINK, stream_sink=, result_sink=TResultSink(type=MYSQL_PROTOCAL, file_options=, format=, is_binary_row=0), mysql_table_sink=, export_sink=, olap_table_sink=, memory_scratch_sink=, multi_cast_stream_sink=, schema_table_sink=, iceberg_table_sink=, hive_table_sink=, table_function_table_sink=, dictionary_cache_sink=), partition=TDataPartition(type=UNPARTITIONED, partition_exprs=[], partition_infos=), min_reservation_bytes=, initial_reservation_total_claims=, query_global_dicts=, load_global_dicts=, cache_param=, query_global_dict_exprs=, group_execution_param=)

W0801 12:47:24.572898 532 internal_service.cpp:308] exec plan fragment failed, errmsg=Memory of query_pool exceed limit. Start execute plan fragment. Used: 137436833392, Limit: 96307909409. Mem usage has exceed the limit of query pool
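To put the byte counts in the last warning into perspective, here is a quick conversion to GiB. This is just a small Python sketch: the two constants are copied from the `internal_service.cpp` log line above, and GiB is assumed to mean 1024³ bytes. It shows that the query pool reported roughly 128 GiB in use against a limit of about 89.7 GiB, which is about as much as the whole 128g CN node.

```python
# Byte counts copied from the internal_service.cpp warning above.
USED_BYTES = 137_436_833_392
LIMIT_BYTES = 96_307_909_409
GIB = 1024 ** 3  # assumption: binary gigabytes (GiB)


def to_gib(n_bytes: int) -> float:
    """Convert a byte count to GiB."""
    return n_bytes / GIB


used_gib = to_gib(USED_BYTES)
limit_gib = to_gib(LIMIT_BYTES)
over_gib = used_gib - limit_gib  # how far usage exceeded the query_pool limit

print(f"used  = {used_gib:.2f} GiB")
print(f"limit = {limit_gib:.2f} GiB")
print(f"over  = {over_gib:.2f} GiB ({USED_BYTES / LIMIT_BYTES:.0%} of limit)")
```

This prints `used = 128.00 GiB`, `limit = 89.69 GiB` and `over = 38.30 GiB (143% of limit)`.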