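[Details] I am loading data through Flink into a wide Primary Key table with about 150+ columns. Is the high `column_metadata` memory usage caused by the large number of columns, and how can it be optimized?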

[Background] Data loading

[Business impact] Memory usage is too high, and both queries and writes fail with errors.

[Shared-data (storage and compute separated) deployment?]

[StarRocks version] 3.2.4

[Cluster size] 3 FE (1 follower + 2 observers) + 3 BE (FE and BE co-located on the same machines)

[Machine specs] 8 cores / 32 GB RAM / 10 GbE

How much data is on a single BE?

Run `show backends;` and check how many tablets there are.

I'm hitting the same problem on StarRocks 3.1.11: only one table, with 200 tablets.

Loading 6 billion rows of TPC-H data used very little memory.

But when loading a 180-column wide table of about 500 million rows, `starrocks_be_column_metadata_mem_bytes` stayed very high throughout the load:

```text
starrocks_be_chunk_allocator_mem_bytes 250421296
starrocks_be_column_metadata_mem_bytes 10849786742
starrocks_be_column_pool_mem_bytes 1304515317
starrocks_be_column_zonemap_index_mem_bytes 3867071510
starrocks_be_compaction_mem_bytes 90834920
starrocks_be_load_mem_bytes 413168104
starrocks_be_metadata_mem_bytes 13322836466
starrocks_be_ordinal_index_mem_bytes 2056806848
starrocks_be_process_mem_bytes 19321655096
starrocks_be_query_mem_bytes 498469576
starrocks_be_rowset_metadata_mem_bytes 217563435
starrocks_be_segment_metadata_mem_bytes 2254897417
starrocks_be_segment_zonemap_mem_bytes 2151605346
starrocks_be_short_key_index_mem_bytes 3203
starrocks_be_storage_page_cache_mem_bytes 355668720
starrocks_be_tablet_metadata_mem_bytes 588872
starrocks_be_tablet_schema_mem_bytes 41864
starrocks_be_update_mem_bytes 265138
```
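To check whether `column_metadata` really is what dominates, the sketch below parses a subset of the metric lines above (one `name value` pair per line, Prometheus text format) and compares them. In this dump, `metadata_mem_bytes` equals exactly the sum of the tablet, rowset, segment and column metadata gauges, and `column_metadata` is roughly 81% of it. That relationship is only checked against these particular numbers, not against the StarRocks source; `parse_metrics` is a hypothetical helper name.

```python
# Minimal sketch: parse "name value" metric lines and break down metadata memory.
# Values are copied from the dump above (StarRocks 3.1.11, 180-column table).

METRICS_TEXT = """
starrocks_be_column_metadata_mem_bytes 10849786742
starrocks_be_metadata_mem_bytes 13322836466
starrocks_be_process_mem_bytes 19321655096
starrocks_be_rowset_metadata_mem_bytes 217563435
starrocks_be_segment_metadata_mem_bytes 2254897417
starrocks_be_tablet_metadata_mem_bytes 588872
"""


def parse_metrics(text):
    """Return {metric_name: value}, skipping blank lines and '#' comment lines."""
    result = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, value = line.rsplit(maxsplit=1)
        result[name] = int(float(value))
    return result


m = parse_metrics(METRICS_TEXT)
parts = ["tablet", "rowset", "segment", "column"]
sub_total = sum(m[f"starrocks_be_{p}_metadata_mem_bytes"] for p in parts)
metadata = m["starrocks_be_metadata_mem_bytes"]
column = m["starrocks_be_column_metadata_mem_bytes"]
process = m["starrocks_be_process_mem_bytes"]

print("sum of sub-metrics == metadata:", sub_total == metadata)  # True for this dump
print(f"column_metadata / metadata: {column / metadata:.1%}")   # 81.4%
print(f"metadata / process:         {metadata / process:.1%}")  # 69.0%
```

So on this BE, metadata is about two thirds of process memory, and most of that metadata is per-column metadata.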

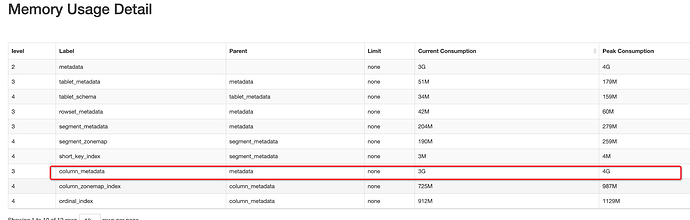

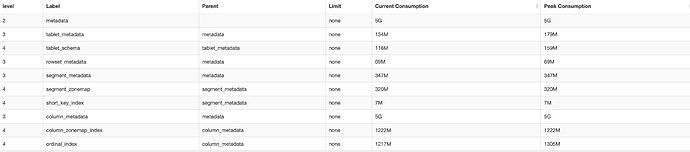

| Level | Label | Parent | Limit | Current Consumption | Peak Consumption |
|---|---|---|---|---|---|
| 1 | process | | 304G | 26G | 26G |
| 2 | query_pool | process | 273G | 247M | 1032M |
| 2 | load | process | 91G | 811M | 2G |
| 2 | compaction | process | 304G | 38M | 6G |
| 2 | schema_change | process | none | 0 | 0 |
| 2 | column_pool | process | none | 1244M | 1418M |
| 2 | page_cache | process | none | 374M | 374M |
| 2 | chunk_allocator | process | none | 238M | 238M |
| 2 | clone | process | none | 0 | 0 |
| 2 | consistency | process | 10G | 0 | 0 |
| 2 | datacache | process | none | 0 | 0 |
| 2 | replication | process | none | 0 | 0 |
| 2 | metadata | process | none | 19G | 19G |
| 2 | update | process | 182G | 375K | 37M |
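The mem tracker table points the same way: `metadata` (19G) is by far the largest child of `process` (26G). A small sketch that converts the table's human-readable sizes to bytes makes each tracker's share explicit. It assumes the K/M/G suffixes are 1024-based, which the table itself does not state; `to_bytes` is a hypothetical helper.

```python
# Sketch: convert the mem-tracker sizes in the table above ("19G", "1244M", "375K")
# to bytes and print each tracker's share of the process total.
# Assumption: suffixes are 1024-based; the table does not say.

UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3}


def to_bytes(size):
    """Parse '19G' / '1244M' / '375K' / '0' into a byte count."""
    size = size.strip()
    if size[-1] in UNITS:
        return int(float(size[:-1]) * UNITS[size[-1]])
    return int(size)


current = {  # "Current Consumption" column for the non-zero level-2 trackers
    "metadata": "19G",
    "column_pool": "1244M",
    "load": "811M",
    "page_cache": "374M",
    "query_pool": "247M",
    "chunk_allocator": "238M",
    "compaction": "38M",
    "update": "375K",
}
process = to_bytes("26G")

# Largest consumers first.
for name, size in sorted(current.items(), key=lambda kv: -to_bytes(kv[1])):
    print(f"{name:16s} {to_bytes(size) / process:6.1%}")
```

Here `metadata` alone is about 73% of current process memory, while `load` and `query_pool` are each only a few percent.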

Hi, I'm running into the same problem:

The BE machine has 32 cores and 256 GB of RAM, with very high disk I/O (350 MB/s).

Two tables:

Table 1: 75 columns, 800 million rows, avg_row_length 1836, split into 218 tablets

Table 2: 40 columns, 4.6 billion rows, avg_row_length 1200, split into 620 tablets
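For a sense of scale, multiplying the row count by `avg_row_length` gives an approximate size for each table and its average per tablet. This is back-of-the-envelope arithmetic on the numbers above only: it treats `avg_row_length` as bytes per row and does not account for how StarRocks computes that value (compressed or not). `estimate` is a hypothetical helper.

```python
# Back-of-the-envelope sizes for the two tables described above.
# Assumption: avg_row_length is bytes per row; compression and replicas are ignored.


def estimate(rows, avg_row_length, tablets):
    """Return (approx_total_bytes, approx_bytes_per_tablet)."""
    total = rows * avg_row_length
    return total, total / tablets


tables = {
    # name: (rows, avg_row_length, tablets)
    "table 1 (75 columns)": (800_000_000, 1836, 218),
    "table 2 (40 columns)": (4_600_000_000, 1200, 620),
}

for name, (rows, row_len, tablets) in tables.items():
    total, per_tablet = estimate(rows, row_len, tablets)
    print(f"{name}: ~{total / 1e12:.2f} TB, ~{per_tablet / 1e9:.1f} GB per tablet")

print("tablets in total:", sum(t for _, _, t in tables.values()))
```

This gives roughly 1.47 TB and 5.52 TB, or about 6.7 GB and 8.9 GB per tablet. Assuming the single-node deployment described later in the thread, all 838 tablets sit on one BE.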

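Once the first table finished loading, the BE ran out of memory and rejected the load for the second table. The data held in memory also barely decreases.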

Which version are you on, and what is the table schema?

Version 3.1.10

The schema of the 800-million-row table is roughly as follows:

```sql
CREATE TABLE task_info (
    id int(11) NOT NULL,
    /* remaining 74 columns, varchar and bigint types */
) ENGINE=OLAP
PRIMARY KEY(id)
DISTRIBUTED BY HASH(id) BUCKETS 218
PROPERTIES (
    "replication_num" = "1",
    "in_memory" = "false",
    "enable_persistent_index" = "true",
    "replicated_storage" = "true",
    "compression" = "LZ4"
);
```

Is it possible to actively release `column_metadata` after one table has finished loading?

Your screenshot above seems to be cut off. Aren't there a few more entries not shown?

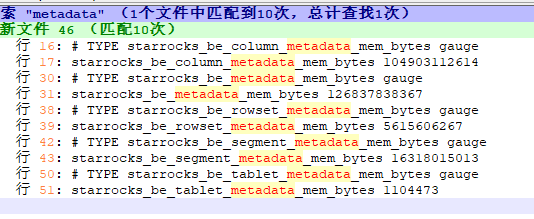

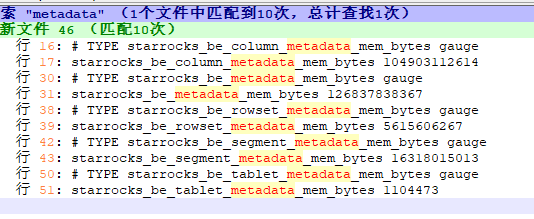

Please grep the metrics for `_mem_bytes`.

How much data is on your single BE?
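The BE serves these gauges on its HTTP metrics endpoint, so the usual command is `curl http://<be_host>:8040/metrics | grep _mem_bytes` (8040 is the default BE HTTP port; adjust it if `be_http_port` was changed). The same filter as a small Python sketch, which also drops the `# TYPE` comment lines; `be_host` and the sample lines are placeholders, and the helper names are hypothetical.

```python
# Sketch: fetch BE metrics and keep only the *_mem_bytes gauges (like `grep _mem_bytes`).
# "be_host" is a placeholder; 8040 is the default BE HTTP port.
import urllib.request


def fetch_metrics(host, port=8040):
    """Download the Prometheus-format metrics page from a BE."""
    with urllib.request.urlopen(f"http://{host}:{port}/metrics", timeout=10) as resp:
        return resp.read().decode("utf-8")


def mem_bytes_lines(text):
    """Return non-comment lines that contain '_mem_bytes'."""
    return [
        line for line in text.splitlines()
        if "_mem_bytes" in line and not line.startswith("#")
    ]


if __name__ == "__main__":
    # Made-up sample input; replace with fetch_metrics("be_host") on a real cluster.
    sample = (
        "# TYPE starrocks_be_update_mem_bytes gauge\n"
        "starrocks_be_update_mem_bytes 47417722549\n"
        "example_unrelated_metric 1\n"
    )
    print(mem_bytes_lines(sample))
```

Polling this every few minutes during a load is one way to see whether `column_metadata_mem_bytes` keeps climbing or levels off.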

```text
starrocks_be_bitmap_index_mem_bytes 0
# TYPE starrocks_be_bloom_filter_index_mem_bytes gauge
starrocks_be_bloom_filter_index_mem_bytes 0
# TYPE starrocks_be_chunk_allocator_mem_bytes gauge
starrocks_be_chunk_allocator_mem_bytes 360975136
# TYPE starrocks_be_clone_mem_bytes gauge
starrocks_be_clone_mem_bytes 0
# TYPE starrocks_be_column_metadata_mem_bytes gauge
starrocks_be_column_metadata_mem_bytes 104903112614
# TYPE starrocks_be_column_pool_mem_bytes gauge
starrocks_be_column_pool_mem_bytes 0
# TYPE starrocks_be_column_zonemap_index_mem_bytes gauge
starrocks_be_column_zonemap_index_mem_bytes 35749623398
# TYPE starrocks_be_compaction_mem_bytes gauge
starrocks_be_compaction_mem_bytes 16957568
# TYPE starrocks_be_consistency_mem_bytes gauge
starrocks_be_consistency_mem_bytes 0
# TYPE starrocks_be_datacache_mem_bytes gauge
starrocks_be_datacache_mem_bytes 0
# TYPE starrocks_be_load_mem_bytes gauge
starrocks_be_load_mem_bytes 1383332216
# TYPE starrocks_be_metadata_mem_bytes gauge
starrocks_be_metadata_mem_bytes 126837838367
# TYPE starrocks_be_ordinal_index_mem_bytes gauge
starrocks_be_ordinal_index_mem_bytes 19157805472
# TYPE starrocks_be_process_mem_bytes gauge
starrocks_be_process_mem_bytes 207517903856
# TYPE starrocks_be_query_mem_bytes gauge
starrocks_be_query_mem_bytes 121907544
# TYPE starrocks_be_rowset_metadata_mem_bytes gauge
starrocks_be_rowset_metadata_mem_bytes 5615606267
# TYPE starrocks_be_schema_change_mem_bytes gauge
starrocks_be_schema_change_mem_bytes 0
# TYPE starrocks_be_segment_metadata_mem_bytes gauge
starrocks_be_segment_metadata_mem_bytes 16318015013
# TYPE starrocks_be_segment_zonemap_mem_bytes gauge
starrocks_be_segment_zonemap_mem_bytes 13610421714
# TYPE starrocks_be_short_key_index_mem_bytes gauge
starrocks_be_short_key_index_mem_bytes 8050
# TYPE starrocks_be_storage_page_cache_mem_bytes gauge
starrocks_be_storage_page_cache_mem_bytes 458851232
# TYPE starrocks_be_tablet_metadata_mem_bytes gauge
starrocks_be_tablet_metadata_mem_bytes 1104473
# TYPE starrocks_be_tablet_schema_mem_bytes gauge
starrocks_be_tablet_schema_mem_bytes 11641
# TYPE starrocks_be_update_mem_bytes gauge
starrocks_be_update_mem_bytes 47417722549
```
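Converting this dump to GiB makes the proportions easier to read. On this 256 GB BE, `column_metadata` alone is close to 98 GiB and about half of process memory, with `update_mem_bytes` the next largest consumer. The metadata components here also add up exactly to `metadata_mem_bytes` once `tablet_metadata` (1104473) is included; that is checked only against these numbers.

```python
# Sketch: GiB breakdown of the second BE dump above (3.1.10, single 256 GB BE).
GIB = 1024**3

process = 207_517_903_856
gauges = {
    "metadata": 126_837_838_367,
    "column_metadata": 104_903_112_614,
    "segment_metadata": 16_318_015_013,
    "rowset_metadata": 5_615_606_267,
    "update": 47_417_722_549,
    "load": 1_383_332_216,
}

print(f"{'process':18s} {process / GIB:7.1f} GiB")
for name, value in gauges.items():
    print(f"{name:18s} {value / GIB:7.1f} GiB  {value / process:6.1%} of process")
```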

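BE spec: single-node deployment, 32 cores, 256 GB RAM, 15 TB disk. I want to load 30 TB of data.

How many TB of data does the BE already hold?

Try setting `data_page_size=1048576` in be.conf and re-importing the data.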
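A plausible reading of this suggestion (not explained in the thread) is that per-page structures such as ordinal index entries and page-level zone maps grow with the number of data pages, and a wide table multiplies that by its column count. The sketch below only shows the page-count arithmetic. The 64 KiB baseline page size and the 2 GiB per column are assumptions for comparison, not values from the thread; check your version's actual default.

```python
# Rough sketch: how page size changes the number of data pages (and hence
# per-page index entries) for a wide table. Baseline page size and column
# size are hypothetical.
import math


def pages_per_column(column_bytes, page_size):
    """Number of data pages needed to hold one column's data."""
    return math.ceil(column_bytes / page_size)


def total_pages(columns, column_bytes, page_size):
    """Pages across all columns, assuming every column has the same size."""
    return columns * pages_per_column(column_bytes, page_size)


COLUMNS = 180               # the 180-column table from this thread
COLUMN_BYTES = 2 * 1024**3  # hypothetical: 2 GiB of encoded data per column

small = total_pages(COLUMNS, COLUMN_BYTES, 64 * 1024)  # assumed baseline page size
large = total_pages(COLUMNS, COLUMN_BYTES, 1048576)    # value suggested above
print(small, large, small // large)
```

Under these assumptions a 1 MiB page yields 16 times fewer pages than a 64 KiB page. The reply asks for a re-import, which suggests the setting only affects newly written segments.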

OK, thanks. My current BE configuration is as follows:

```properties
tablet_max_versions = 5000
update_compaction_size_threshold=67108864
update_compaction_check_interval_seconds=2
update_compaction_num_threads_per_disk=16
update_compaction_per_tablet_min_interval_seconds=120
cumulative_compaction_num_threads_per_disk = 8
base_compaction_num_threads_per_disk = 8
cumulative_compaction_check_interval_seconds = 2
flush_thread_num_per_store = 16
enable_pindex_read_by_page=true
enable_parallel_get_and_bf=false
disable_column_pool = true
transaction_publish_version_worker_count=64
transaction_apply_worker_count=64
```

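Regarding your earlier question about how much data the BE already holds: I wipe all the data before each attempt and start loading from scratch, and the load keeps failing.

Why did you change these settings?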