【Details】BE node crashed

【Background】

【Business impact】

【Shared-data (storage-compute separation) deployment?】

【StarRocks version】3.1.4

【Cluster size】e.g. 3 FE (1 follower + 2 observers) + 3 BE (FE and BE co-located)

【Machine specs】vCPUs/memory/NIC, e.g. 16C/64G/10GbE

【Contact】xiaxiong2010@163.com

【Attachments】Fail to publish version: Internal error: primary key does not support concurrent log applying

This looks like a problem during publish version. Could you please post the be.out log from the crashed BE node?

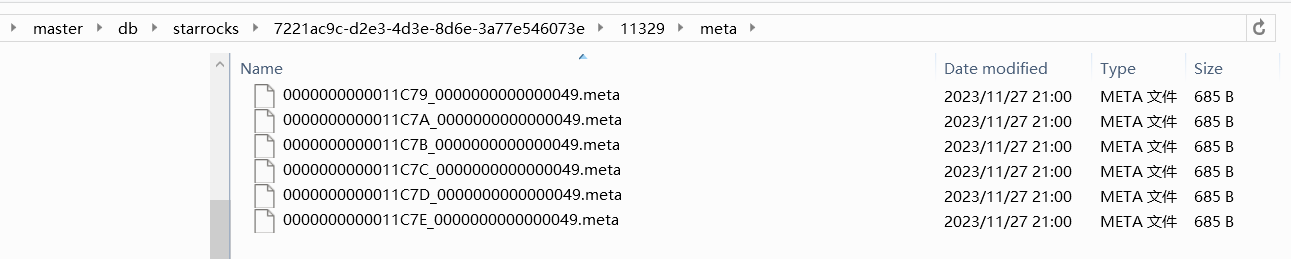

```
hdfsOpenFile(/db/starrocks/7221ac9c-d2e3-4d3e-8d6e-3a77e546073e/11329/meta/0000000000011C7C_0000000000000048.meta): FileSystem#open((Lorg/apache/hadoop/fs/Path;I)Lorg/apache/hadoop/fs/FSDataInputStream;) error:
RemoteException: File does not exist: /db/starrocks/7221ac9c-d2e3-4d3e-8d6e-3a77e546073e/11329/meta/0000000000011C7C_0000000000000048.meta
at org.apache.hadoop.hdfs.server.namenode.INodeFile.valueOf(INodeFile.java:86)
at org.apache.hadoop.hdfs.server.namenode.INodeFile.valueOf(INodeFile.java:76)
at org.apache.hadoop.hdfs.server.namenode.FSDirStatAndListingOp.getBlockLocations(FSDirStatAndListingOp.java:158)
at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getBlockLocations(FSNamesystem.java:1931)
at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getBlockLocations(NameNodeRpcServer.java:738)
at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getBlockLocations(ClientNamenodeProtocolServerSideTranslatorPB.java:426)
at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:524)
at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1025)
at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:876)
at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:822)
at java.security.AccessController.doPrivileged(Native Method)
at javax.security.auth.Subject.doAs(Subject.java:422)
at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)
at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2682)
java.io.FileNotFoundException: File does not exist: /db/starrocks/7221ac9c-d2e3-4d3e-8d6e-3a77e546073e/11329/meta/0000000000011C7C_0000000000000048.meta
at org.apache.hadoop.hdfs.server.namenode.INodeFile.valueOf(INodeFile.java:86)
at org.apache.hadoop.hdfs.server.namenode.INodeFile.valueOf(INodeFile.java:76)
at org.apache.hadoop.hdfs.server.namenode.FSDirStatAndListingOp.getBlockLocations(FSDirStatAndListingOp.java:158)
at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getBlockLocations(FSNamesystem.java:1931)
at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getBlockLocations(NameNodeRpcServer.java:738)
at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getBlockLocations(ClientNamenodeProtocolServerSideTranslatorPB.java:426)
at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:524)
at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1025)
at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:876)
at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:822)
at java.security.AccessController.doPrivileged(Native Method)
at javax.security.auth.Subject.doAs(Subject.java:422)
at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730)
at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2682)
at sun.reflect.GeneratedConstructorAccessor10.newInstance(Unknown Source)
at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
at org.apache.hadoop.ipc.RemoteException.instantiateException(RemoteException.java:121)
at org.apache.hadoop.ipc.RemoteException.unwrapRemoteException(RemoteException.java:88)
at org.apache.hadoop.hdfs.DFSClient.callGetBlockLocations(DFSClient.java:902)
at org.apache.hadoop.hdfs.DFSClient.getLocatedBlocks(DFSClient.java:889)
at org.apache.hadoop.hdfs.DFSClient.getLocatedBlocks(DFSClient.java:878)
at org.apache.hadoop.hdfs.DFSClient.open(DFSClient.java:1046)
at org.apache.hadoop.hdfs.DistributedFileSystem$4.doCall(DistributedFileSystem.java:343)
at org.apache.hadoop.hdfs.DistributedFileSystem$4.doCall(DistributedFileSystem.java:339)
at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)
at org.apache.hadoop.hdfs.DistributedFileSystem.open(DistributedFileSystem.java:356)
```

Has this issue been resolved? I'm running into the same problem.

Please post the complete be.out.