To help locate your issue faster, please provide the following information. Thanks.

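【Details】After Flink wrote data into a Primary Key table through flink-connector, the target table can no longer be queried, and restarting the BE nodes does not fix it. The table schema is as follows: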

CREATE TABLE dm_transfer_shop_size2 (
  id varchar(65533) NOT NULL COMMENT "",
  t_date varchar(65533) NULL COMMENT "",
  shop_name varchar(65533) NULL COMMENT "",
  goods_no varchar(65533) NULL COMMENT "",
  color varchar(65533) NULL COMMENT "",
  sku_no varchar(65533) NULL COMMENT "",
  size varchar(65533) NULL COMMENT "",
  size_name varchar(65533) NULL COMMENT "",
  size_nickname varchar(65533) NULL COMMENT "",
  size_mapping varchar(65533) NULL COMMENT "",
  is_key_size varchar(65533) NULL COMMENT "",
  barcode varchar(65533) NULL COMMENT "",
  class_key varchar(65533) NULL COMMENT "",
  class_id varchar(65533) NULL COMMENT "",
  serial varchar(65533) NULL COMMENT "",
  serial_name varchar(65533) NULL COMMENT "",
  original_serial varchar(65533) NULL COMMENT "",
  original_serial_name varchar(65533) NULL COMMENT "",
  business_series varchar(65533) NULL COMMENT "",
  style varchar(65533) NULL COMMENT "",
  style_name varchar(65533) NULL COMMENT "",
  goods_level varchar(65533) NULL COMMENT "",
  goods_level_name varchar(65533) NULL COMMENT "",
  class varchar(65533) NULL COMMENT "",
  class_name varchar(65533) NULL COMMENT "",
  subclass varchar(65533) NULL COMMENT "",
  subclass_name varchar(65533) NULL COMMENT "",
  is_basic varchar(65533) NULL COMMENT "",
  shop_market_date varchar(65533) NULL COMMENT "",
  shop_lifecycle_end_date varchar(65533) NULL COMMENT "",
  is_in_lifecycle_shop varchar(65533) NULL COMMENT "",
  is_break_size varchar(65533) NULL COMMENT "",
  is_bargain varchar(65533) NULL COMMENT "",
  sales_status varchar(65533) NULL COMMENT "",
  sale_qty varchar(65533) NULL COMMENT "",
  sale_tag_amt varchar(65533) NULL COMMENT "",
  sale_amt varchar(65533) NULL COMMENT "",
  end_stock_tag_amt varchar(65533) NULL COMMENT "",
  end_stock_qty varchar(65533) NULL COMMENT "",
  e_onway_stock_qty varchar(65533) NULL COMMENT "",
  e_onway_stock_amt varchar(65533) NULL COMMENT "",
  latest7_sale_qty varchar(65533) NULL COMMENT "",
  latest14_sale_qty varchar(65533) NULL COMMENT "",
  latest21_sale_qty varchar(65533) NULL COMMENT "",
  latest7_sale_tag_amt varchar(65533) NULL COMMENT "",
  latest7_sale_amt varchar(65533) NULL COMMENT "",
  nation_latest7_sale_qty varchar(65533) NULL COMMENT "",
  sales_ratio varchar(65533) NULL COMMENT "",
  avg_sale_qty varchar(65533) NULL COMMENT "",
  sale_days varchar(65533) NULL COMMENT "",
  effective_stock_qty varchar(65533) NULL COMMENT "",
  effective_stock_amt varchar(65533) NULL COMMENT "",
  total_sale_qty varchar(65533) NULL COMMENT "",
  total_sale_amt varchar(65533) NULL COMMENT "",
  max_received_date varchar(65533) NULL COMMENT "",
  max_received_days varchar(65533) NULL COMMENT "",
  latest30_sale_qty varchar(65533) NULL COMMENT "",
  latest30_sale_amt varchar(65533) NULL COMMENT "",
  shop_o2o_stock_qty varchar(65533) NULL COMMENT "",
  available_stock_qty varchar(65533) NULL COMMENT "",
  is_onway_break_size varchar(65533) NULL COMMENT "",
  shop_no varchar(65533) NULL COMMENT "",
  avg_latest14_sale_qty varchar(65533) NULL COMMENT "",
  temperature_zone varchar(65533) NULL COMMENT "",
  is_trans_seasonal_id varchar(65533) NULL COMMENT "",
  create_time varchar(65533) NULL COMMENT "",
  order_type varchar(65533) NULL COMMENT "",
  sale_level varchar(65533) NULL COMMENT "",
  is_scan_shoping_id varchar(65533) NULL COMMENT "",
  is_price_adjustment_now_day_id varchar(65533) NULL COMMENT "",
  is_price_adjustment_30_day_id varchar(65533) NULL COMMENT "",
  is_transfer_bills_tc_30_day_id varchar(65533) NULL COMMENT "",
  is_shop_standard_depth_id varchar(65533) NULL COMMENT "",
  shop_standard_depth_standard_value varchar(65533) NULL COMMENT "",
  shop_standard_depth_standard_max_value varchar(65533) NULL COMMENT "",
  shop_standard_depth_standard_min_value varchar(65533) NULL COMMENT "",
  is_deleted_type varchar(65533) NULL COMMENT "",
  original_design_no varchar(65533) NULL COMMENT "",
  original_design_no_and_color varchar(65533) NULL COMMENT "",
  shop_received_size_num varchar(65533) NULL COMMENT "",
  sales_season varchar(65533) NULL COMMENT "",
  unique_code varchar(65533) NULL COMMENT "",
  shop_stock_id varchar(65533) NULL COMMENT "",
  goods_year varchar(65533) NULL COMMENT "",
  goods_season varchar(65533) NULL COMMENT "",
  allocation_goods_attributes varchar(65533) NULL COMMENT "",
  is_config_shops_sku_standard_id varchar(65533) NULL COMMENT "",
  date_key varchar(65533) NULL COMMENT ""
) ENGINE=OLAP
PRIMARY KEY(id)
DISTRIBUTED BY HASH(id)
ORDER BY(shop_no, sku_no, size)
PROPERTIES (
  "replication_num" = "3",
  "in_memory" = "false",
  "enable_persistent_index" = "true",
  "replicated_storage" = "true",
  "compression" = "LZ4"
);

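【Background】Flink writes data into StarRocks.

【Business impact】Test environment only.

【Shared-data (storage-compute separated) deployment】No

【StarRocks version】StarRocks-3.2.0-rc01

【Cluster size】3 FE (1 follower + 2 observers) + 3 BE (FE and BE co-located on the same hosts)

【Machine spec】8 cores / 128 GB RAM

【Table type】Primary Key table

【Import/export method】Flink import

【Contact】None

【Attachments】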

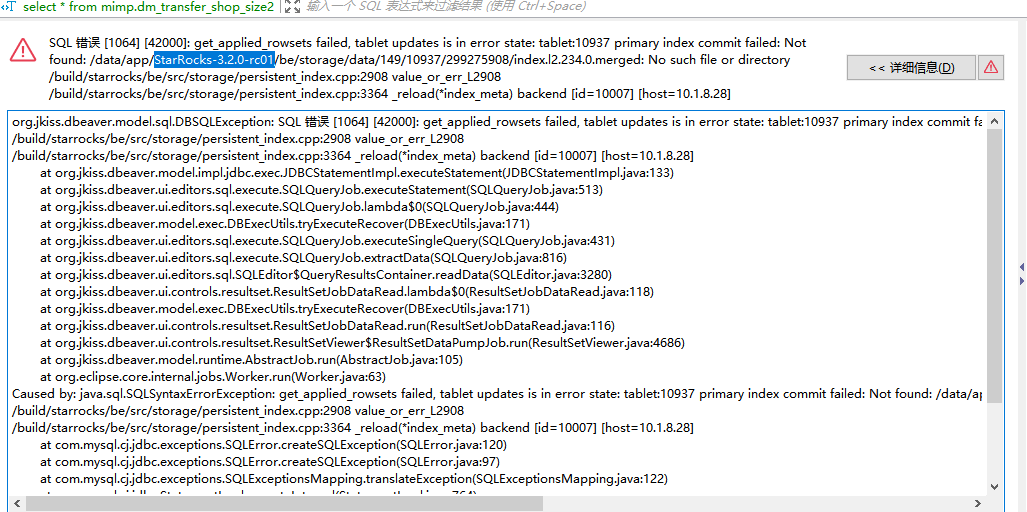

Exception:

org.jkiss.dbeaver.model.sql.DBSQLException: SQL Error [1064] [42000]: get_applied_rowsets failed, tablet updates is in error state: tablet:10937 primary index commit failed: Not found: /data/app/StarRocks-3.2.0-rc01/be/storage/data/149/10937/299275908/index.l2.234.0.merged: No such file or directory
/build/starrocks/be/src/storage/persistent_index.cpp:2908 value_or_err_L2908
/build/starrocks/be/src/storage/persistent_index.cpp:3364 _reload(*index_meta) backend [id=10007] [host=10.1.8.28]
at org.jkiss.dbeaver.model.impl.jdbc.exec.JDBCStatementImpl.executeStatement(JDBCStatementImpl.java:133)
at org.jkiss.dbeaver.ui.editors.sql.execute.SQLQueryJob.executeStatement(SQLQueryJob.java:513)
at org.jkiss.dbeaver.ui.editors.sql.execute.SQLQueryJob.lambda$0(SQLQueryJob.java:444)
at org.jkiss.dbeaver.model.exec.DBExecUtils.tryExecuteRecover(DBExecUtils.java:171)
at org.jkiss.dbeaver.ui.editors.sql.execute.SQLQueryJob.executeSingleQuery(SQLQueryJob.java:431)
at org.jkiss.dbeaver.ui.editors.sql.execute.SQLQueryJob.extractData(SQLQueryJob.java:816)
at org.jkiss.dbeaver.ui.editors.sql.SQLEditor$QueryResultsContainer.readData(SQLEditor.java:3280)
at org.jkiss.dbeaver.ui.controls.resultset.ResultSetJobDataRead.lambda$0(ResultSetJobDataRead.java:118)
at org.jkiss.dbeaver.model.exec.DBExecUtils.tryExecuteRecover(DBExecUtils.java:171)
at org.jkiss.dbeaver.ui.controls.resultset.ResultSetJobDataRead.run(ResultSetJobDataRead.java:116)
at org.jkiss.dbeaver.ui.controls.resultset.ResultSetViewer$ResultSetDataPumpJob.run(ResultSetViewer.java:4686)
at org.jkiss.dbeaver.model.runtime.AbstractJob.run(AbstractJob.java:105)
at org.eclipse.core.internal.jobs.Worker.run(Worker.java:63)
Caused by: java.sql.SQLSyntaxErrorException: get_applied_rowsets failed, tablet updates is in error state: tablet:10937 primary index commit failed: Not found: /data/app/StarRocks-3.2.0-rc01/be/storage/data/149/10937/299275908/index.l2.234.0.merged: No such file or directory
/build/starrocks/be/src/storage/persistent_index.cpp:2908 value_or_err_L2908
/build/starrocks/be/src/storage/persistent_index.cpp:3364 _reload(*index_meta) backend [id=10007] [host=10.1.8.28]
at com.mysql.cj.jdbc.exceptions.SQLError.createSQLException(SQLError.java:120)
at com.mysql.cj.jdbc.exceptions.SQLError.createSQLException(SQLError.java:97)
at com.mysql.cj.jdbc.exceptions.SQLExceptionsMapping.translateException(SQLExceptionsMapping.java:122)
at com.mysql.cj.jdbc.StatementImpl.executeInternal(StatementImpl.java:764)
at com.mysql.cj.jdbc.StatementImpl.execute(StatementImpl.java:648)
at org.jkiss.dbeaver.model.impl.jdbc.exec.JDBCStatementImpl.execute(JDBCStatementImpl.java:330)
at org.jkiss.dbeaver.model.impl.jdbc.exec.JDBCStatementImpl.executeStatement(JDBCStatementImpl.java:130)
... 12 more
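The error names one specific replica: tablet 10937 on backend 10007 (host 10.1.8.28), whose persistent primary index file `index.l2.234.0.merged` is missing. A minimal sketch of follow-up checks with standard StarRocks admin statements is below; it assumes the statements are run against the database that contains `dm_transfer_shop_size2`, and no output from them was collected for this report. Result columns differ between versions.

```sql
-- Show which database, table and partition tablet 10937 belongs to;
-- the DetailCmd column gives a follow-up statement that lists its replicas.
SHOW TABLET 10937;

-- List replicas of the affected table whose status is not OK.
ADMIN SHOW REPLICA STATUS FROM dm_transfer_shop_size2 WHERE STATUS != "OK";

-- Show how the table's replicas are spread across the three BEs.
ADMIN SHOW REPLICA DISTRIBUTION FROM dm_transfer_shop_size2;
```

Because the table uses `"replication_num" = "3"`, these results would show whether the other two replicas of tablet 10937 are healthy, which would suggest (but not prove) that the damage is confined to the copy on backend 10007.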