It died after I added -F to the command... Version 2.5.17, shared-nothing deployment, 3 FE + 5 BE. None of the three FEs can run that manual GC command.

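Can you confirm that your jmap comes from the same JDK that started the FE? If it does, the FE process was probably unresponsive at that moment, and you'll need to analyze it with jstack as well.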
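A minimal bash sketch of the first check, assuming a Linux host where `/proc/<pid>/exe` points at the `java` binary of the FE process. The helper names (`jdk_home_of_pid`, `jdk_home_of_jmap`, `same_jdk`) are hypothetical; the script only compares JDK directories and never runs jmap itself.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: check whether the jmap on PATH belongs to the same
# JDK as a running JVM. Linux-only (reads /proc/<pid>/exe).

# JDK home of a running process: two levels above .../bin/java.
jdk_home_of_pid() {
  local exe home
  exe=$(readlink -f "/proc/$1/exe") || return 1
  home=$(dirname "$(dirname "$exe")")
  # On JDK 8 the launcher may live in $JAVA_HOME/jre/bin/java.
  echo "${home%/jre}"
}

# JDK home of the jmap found on PATH (symlinks resolved).
jdk_home_of_jmap() {
  local bin
  bin=$(command -v jmap) || return 1
  bin=$(readlink -f "$bin") || return 1
  dirname "$(dirname "$bin")"
}

# Usage: same_jdk <fe_pid>; status 0 = same JDK, 1 = different, 2 = lookup failed.
same_jdk() {
  local fe_home jmap_home
  fe_home=$(jdk_home_of_pid "$1") || { echo "cannot resolve /proc/$1/exe" >&2; return 2; }
  jmap_home=$(jdk_home_of_jmap) || { echo "jmap not found on PATH" >&2; return 2; }
  echo "FE JDK:   $fe_home"
  echo "jmap JDK: $jmap_home"
  [ "$fe_home" = "$jmap_home" ]
}
```

Run `same_jdk <fe_pid>` as the FE user (or root). If the two directories differ, call the jmap under the FE's JDK explicitly instead of the one on PATH.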

Also check how many tablets you have. The default JVM heap should be 8 GB, which may not be enough.
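A sketch for confirming the heap limit the FE is actually running with: the helpers below read `-Xmx` from `/proc/<pid>/cmdline` (the JVM honors the last `-Xmx` it sees) and convert it to MiB. The function names are hypothetical; Linux and bash 4.4+ are assumed.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking the FE heap limit. Requires bash 4.4+.

# Print the value of the last -Xmx among the given arguments (e.g. "8192m");
# the JVM uses the last occurrence. Returns 1 if there is none.
xmx_from_args() {
  local arg val=""
  for arg in "$@"; do
    case $arg in
      -Xmx*) val=${arg#-Xmx} ;;
    esac
  done
  [ -n "$val" ] && echo "$val"
}

# Convert a JVM size (plain bytes or k/m/g/t suffix, any case) to whole MiB.
size_to_mib() {
  local v=${1,,}
  local n=${v%[kmgt]}
  case $v in
    *k) echo $(( n / 1024 )) ;;
    *m) echo "$n" ;;
    *g) echo $(( n * 1024 )) ;;
    *t) echo $(( n * 1024 * 1024 )) ;;
    *)  echo $(( v / 1024 / 1024 )) ;;
  esac
}

# Read -Xmx of a running JVM from /proc (Linux only); args are NUL-separated.
xmx_of_pid() {
  local -a args
  mapfile -d '' -t args < "/proc/$1/cmdline" || return 1
  xmx_from_args "${args[@]}"
}
```

For example, `size_to_mib "$(xmx_of_pid <fe_pid>)"` prints the FE's heap limit in MiB. For the tablet count, `SHOW PROC '/statistic';` run from a MySQL client connected to the FE lists tablet numbers per database; check the docs for your version if its columns differ.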

Could I add you on WeChat so I can ask you about this in more detail?

Or you can add me. WeChat: 17664126034

Just add me through the community group.

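Here is the output of `jmap -histo <pid>` on the FE master node. Even the largest entries at the top are only a bit over 300 MB, which looks fine. The FE currently has 16 GB of memory, and I can't see any problem. Could someone experienced take a look?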

| num | #instances | #bytes | class name |
|---:|---:|---:|---|
| 1 | 16280929 | 390742296 | `java.util.ArrayList` |
| 2 | 11309865 | 361915680 | `java.util.HashMap$Node` |
| 3 | 5683135 | 337636224 | `[Ljava.lang.Object;` |
| 4 | 4054338 | 287550440 | `[C` |
| 5 | 273356 | 281812368 | `[B` |
| 6 | 1639307 | 236060208 | `com.starrocks.thrift.TExprNode` |
| 7 | 7510894 | 180261456 | `java.lang.Long` |
| 8 | 1133782 | 141058464 | `[Ljava.util.HashMap$Node;` |
| 9 | 3125076 | 125003040 | `com.starrocks.transaction.TabletCommitInfo` |
| 10 | 3940329 | 94567896 | `java.lang.String` |
| 11 | 101742 | 85860200 | `[I` |
| 12 | 2426667 | 77653344 | `com.starrocks.thrift.TScalarType` |
| 13 | 1512270 | 72588960 | `java.util.HashMap` |
| 14 | 2426829 | 58243896 | `com.starrocks.thrift.TTypeNode` |
| 15 | 2426667 | 38826672 | `com.starrocks.thrift.TTypeDesc` |
| 16 | 661747 | 31763856 | `java.nio.HeapByteBuffer` |
| 17 | 300920 | 31295680 | `com.starrocks.analysis.SlotRef` |
| 18 | 1224828 | 29395872 | `com.starrocks.thrift.TSlotRef` |
| 19 | 126966 | 23361744 | `com.starrocks.thrift.TPlanNode` |
| 20 | 115942 | 21333328 | `com.starrocks.transaction.TransactionState` |
| 21 | 144594 | 20821536 | `com.starrocks.catalog.Replica` |
| 22 | 1251543 | 20024688 | `com.starrocks.thrift.TExpr` |
| 23 | 619355 | 19819360 | `org.antlr.v4.runtime.atn.ATNConfig` |
| 24 | 221719 | 17737520 | `com.starrocks.thrift.TFunction` |
| 25 | 670666 | 16095984 | `com.google.common.collect.ImmutableMapEntry` |
| 26 | 37415 | 13469400 | `com.starrocks.thrift.TQueryOptions` |
| 27 | 829231 | 13267696 | `java.lang.Integer` |
| 28 | 180728 | 13012416 | `com.starrocks.analysis.SlotDescriptor` |
| 29 | 315723 | 12628920 | `java.util.LinkedHashMap$Entry` |
| 30 | 204231 | 11436936 | `com.starrocks.thrift.TAggregateFunction` |
| 31 | 225805 | 10838640 | `com.sun.tools.javac.file.ZipFileIndex$Entry` |
| 32 | 6307 | 9757560 | `[Lorg.antlr.v4.runtime.dfa.DFAState;` |
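Individual rows in a heap histogram can look small while the total is large: the 32 rows shown above alone add up to about 3.09 GB (roughly 2.9 GiB) of shallow object size. A hypothetical awk-based helper that totals every row of `jmap -histo` output and lists the top N classes in MiB could look like this. It assumes the usual `num: #instances #bytes class` row layout. The sizes are shallow sizes; running `jmap -histo:live <pid>` instead forces a full GC first so that only reachable objects are counted, at the cost of a pause on the FE.

```bash
#!/usr/bin/env bash
# Hypothetical helper: summarize `jmap -histo` output read from stdin.
# Prints the top N rows (jmap already sorts by #bytes, descending) and the
# total shallow size of every row, all in MiB.
histo_summary() {
  local top=${1:-10}
  awk -v top="$top" '
    # Data rows look like "  1:  16280929  390742296  java.util.ArrayList";
    # header and "Total" lines do not match and are skipped.
    $1 ~ /^[0-9]+:$/ && NF >= 4 {
      total += $3
      if (++n <= top) printf "%-45s %10.1f MiB\n", $4, $3 / 1048576
    }
    END { printf "%-45s %10.1f MiB (%d classes)\n", "TOTAL", total / 1048576, n }
  '
}
```

Usage: `jmap -histo <fe_pid> | histo_summary 20`, then compare the TOTAL line with the configured `-Xmx`.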

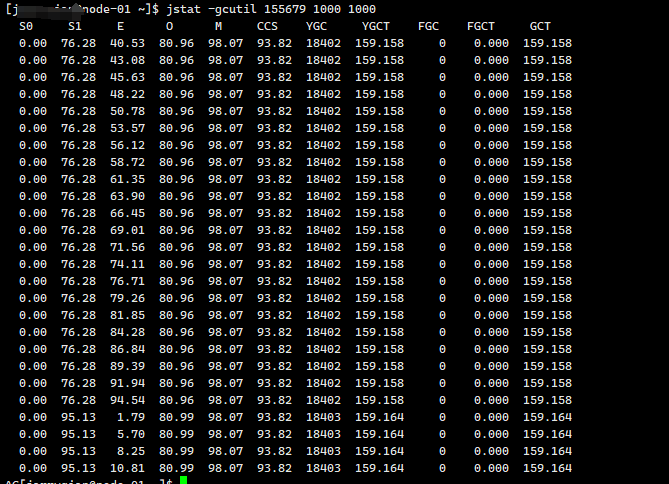

Hi, JVM memory on my FE follower keeps spiking. How should I deal with this?


If the BE memory usage can't be explained from the mem tracker, the monitoring, or the logs, you can capture a heap profile: https://github.com/StarRocks/starrocks/pull/35322

FE memory analysis:

`cd fe/bin`

`./profiler.sh -e alloc -d 300 -f alloc-profile.html <fe_pid>`

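This collects 300 s of allocation data. Open alloc-profile.html in a browser to view the flame graph and see which code paths are allocating the memory.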

On version 3.3.6, running this command reports "[ERROR] Profiler already started". What's going on?

3.3 enables automatic profile collection by default, so a profiler is already running.

OK. How do I view the collected data? Through the FE web UI?

Just open that HTML page in a browser.

I tried feip:8030/alloc-profile.html, but it returns a 404.

The automatically collected profiles are saved under the log directory. Copy them out and open them in a browser.
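A small sketch for locating them, assuming the auto-collected files have "profile" somewhere in their names under the FE log directory (the exact subdirectory and naming may differ between versions, so check yours). `latest_profiles` is a hypothetical helper and needs GNU `find`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list the newest files whose names contain "profile"
# under a directory, newest first. Requires GNU find (for -printf).
latest_profiles() {
  local dir=${1:?usage: latest_profiles <dir> [count]}
  local count=${2:-5}
  # %T@ = modification time in seconds since the epoch, %p = path.
  find "$dir" -type f -name '*profile*' -printf '%T@ %p\n' \
    | sort -rn \
    | head -n "$count" \
    | cut -d' ' -f2-
}
```

For example, `latest_profiles /path/to/fe/log 3` (path is a placeholder); copy the listed files to a workstation, extract them first if they turn out to be archives, and open the HTML in a browser.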

That works, thanks.

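I've dumped two days' worth of profiles and can't spot anything wrong, but FE memory keeps climbing. Could I add you on WeChat so you can help me analyze it?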

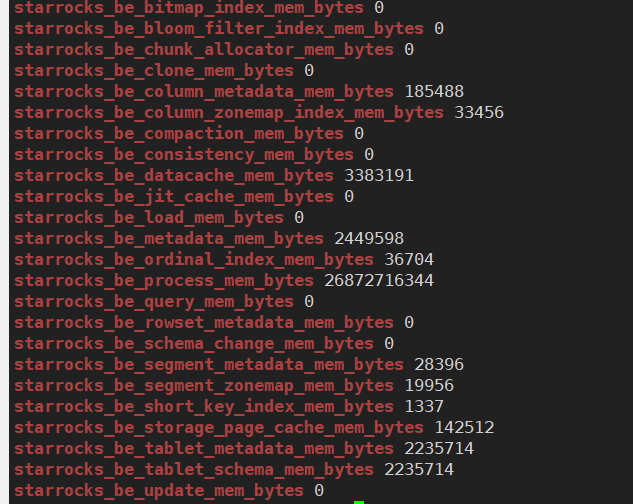

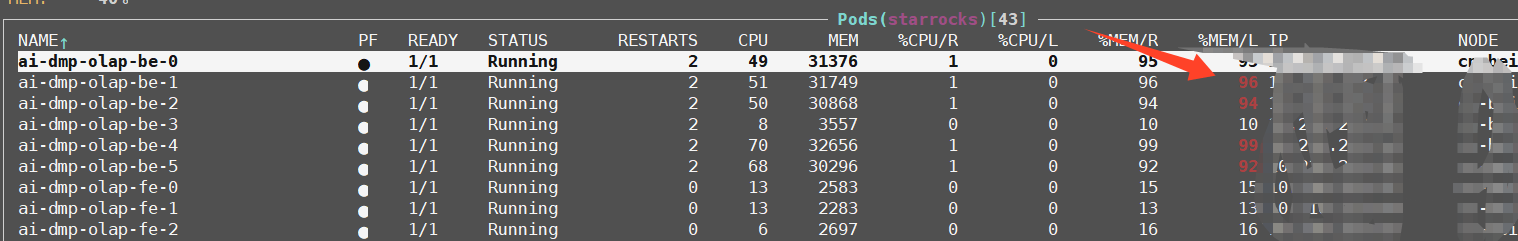

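I'm running StarRocks 3.5.4 in shared-data mode. After loading data with DataX, memory on the CN nodes stays high, and a few hours later the three CN nodes restart one after another and their pods are recreated.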

`curl -XGET -s http://127.0.0.1:8040/metrics | grep -E "^starrocks_be_.*_mem_bytes|^starrocks_be_tcmalloc_bytes_in_use"`

| metric | value (bytes) |
|---|---:|
| starrocks_be_bitmap_index_mem_bytes | 0 |
| starrocks_be_bloom_filter_index_mem_bytes | 0 |
| starrocks_be_chunk_allocator_mem_bytes | 0 |
| starrocks_be_clone_mem_bytes | 0 |
| starrocks_be_column_metadata_mem_bytes | 1333471460 |
| starrocks_be_column_zonemap_index_mem_bytes | 516353652 |
| starrocks_be_compaction_mem_bytes | 0 |
| starrocks_be_consistency_mem_bytes | 0 |
| starrocks_be_datacache_mem_bytes | 28585937 |
| starrocks_be_jit_cache_mem_bytes | 0 |
| starrocks_be_load_mem_bytes | 0 |
| starrocks_be_metadata_mem_bytes | 1694568600 |
| starrocks_be_ordinal_index_mem_bytes | 158214128 |
| starrocks_be_process_mem_bytes | 4651971096 |
| starrocks_be_query_mem_bytes | 0 |
| starrocks_be_rowset_metadata_mem_bytes | 0 |
| starrocks_be_schema_change_mem_bytes | 0 |
| starrocks_be_segment_metadata_mem_bytes | 361076629 |
| starrocks_be_segment_zonemap_mem_bytes | 274554624 |
| starrocks_be_short_key_index_mem_bytes | 1150 |
| starrocks_be_storage_page_cache_mem_bytes | 1602232722 |
| starrocks_be_tablet_metadata_mem_bytes | 20511 |
| starrocks_be_tablet_schema_mem_bytes | 20511 |
| starrocks_be_update_mem_bytes | 0 |
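These `*_mem_bytes` trackers are nested rather than additive: in the output above, `metadata_mem_bytes` (1694568600) is exactly `column_metadata` + `segment_metadata` + `tablet_metadata` (1333471460 + 361076629 + 20511), so summing every line double-counts. The sketch below sums only a caller-supplied set of trackers and reports how much of `process_mem_bytes` they leave unexplained. Its default list is an assumption about which trackers are top-level, inferred from these numbers and the metric names rather than from the StarRocks source; it also assumes metric lines without labels. With the values above, that default list covers about 3171 MiB of the 4436 MiB process total.

```bash
#!/usr/bin/env bash
# Hypothetical helper: break down BE/CN memory metrics read from stdin
# (Prometheus text from /metrics, unlabeled lines). Because the trackers are
# nested, only the names given as arguments are summed; the default list is
# an assumption about which trackers are top-level.
mem_breakdown() {
  local tops="${*:-metadata storage_page_cache datacache query load compaction schema_change update clone consistency chunk_allocator jit_cache}"
  awk -v tops="$tops" '
    BEGIN {
      n = split(tops, t, " ")
      for (i = 1; i <= n; i++) top["starrocks_be_" t[i] "_mem_bytes"] = 1
    }
    $1 == "starrocks_be_process_mem_bytes" { proc = $2; next }
    $1 in top {
      sum += $2
      if ($2 > 0) printf "%-45s %10.1f MiB\n", $1, $2 / 1048576
    }
    END {
      printf "%-45s %10.1f MiB\n", "sum of top-level trackers", sum / 1048576
      printf "%-45s %10.1f MiB\n", "starrocks_be_process_mem_bytes", proc / 1048576
      printf "%-45s %10.1f MiB\n", "not covered by these trackers", (proc - sum) / 1048576
    }
  '
}
```

Usage: `curl -s http://127.0.0.1:8040/metrics | mem_breakdown`, or pass your own list of tracker names (without the `starrocks_be_` prefix and `_mem_bytes` suffix) as arguments.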