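I am using flink-connector-starrocks. The StarRocks column is defined as varchar with length 64 and NOT NULL. If a single record in a batch has a value longer than 64 characters in that column, the whole batch fails to load. Is there a way to skip that one record so the rest of the batch loads normally?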

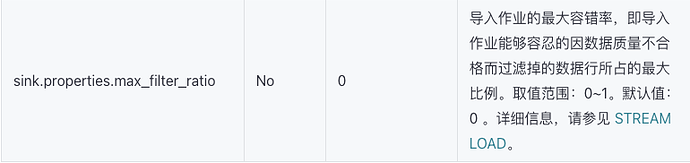

Versions: flink-connector-starrocks 1.2.3_flink-1.15, StarRocks 2.3.0. Setting max_filter_ratio to 1 has no effect either.

Please post your current Flink sink configuration.

    StarRocksSinkOptions.builder()
        .withProperty("jdbc-url", parameterTool.get("StarRocks.dbJdbcUrl"))
        .withProperty("load-url", parameterTool.get("StarRocks.dbLoadUrl"))
        .withProperty("username", parameterTool.get("StarRocks.dbUserName"))
        .withProperty("password", parameterTool.get("StarRocks.dbPassWord"))
        .withProperty("table-name", parameterTool.get("StarRocks.callDetail.tbName"))
        .withProperty("database-name", parameterTool.get("StarRocks.dbName"))
        .withProperty("sink.properties.format", "json")
        .withProperty("sink.properties.strip_outer_array", "true")
        .withProperty("sink.semantic", "exactly-once")
        .withProperty("sink.properties.ignore_json_size", "true")
        .withProperty("sink.properties.max_filter_ratio", "1")
        .build()
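Independent of how max_filter_ratio behaves here, one possible workaround is to drop oversized values on the Flink side before they reach the sink. The following is only a minimal sketch, not part of the connector: the class `VarcharGuard` and its methods are hypothetical names, and it assumes the varchar limit is counted in UTF-8 bytes and that null values should also be rejected because the column is NOT NULL.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper (not part of flink-connector-starrocks).
final class VarcharGuard {

    // Returns true when the value is non-null and its UTF-8 encoding
    // fits within maxBytes. Assumption: the column limit is in bytes.
    static boolean fits(String value, int maxBytes) {
        return value != null
                && value.getBytes(StandardCharsets.UTF_8).length <= maxBytes;
    }

    // Keeps only the values that would fit the column, preserving order.
    static List<String> keepValid(List<String> batch, int maxBytes) {
        List<String> kept = new ArrayList<>();
        for (String value : batch) {
            if (fits(value, maxBytes)) {
                kept.add(value);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<String> batch = new ArrayList<>();
        batch.add("short value");
        batch.add("x".repeat(65)); // one byte too long for varchar(64)
        batch.add(null);           // violates NOT NULL
        System.out.println(keepValid(batch, 64).size() + " of " + batch.size() + " records kept");
    }
}
```

In a DataStream job, the same predicate could be applied just before the sink, for example `stream.filter(r -> VarcharGuard.fits(r.getSomeField(), 64))`, where `getSomeField()` stands in for whatever accessor the record type actually has. Rejected records could be routed to a side output instead of being dropped silently.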