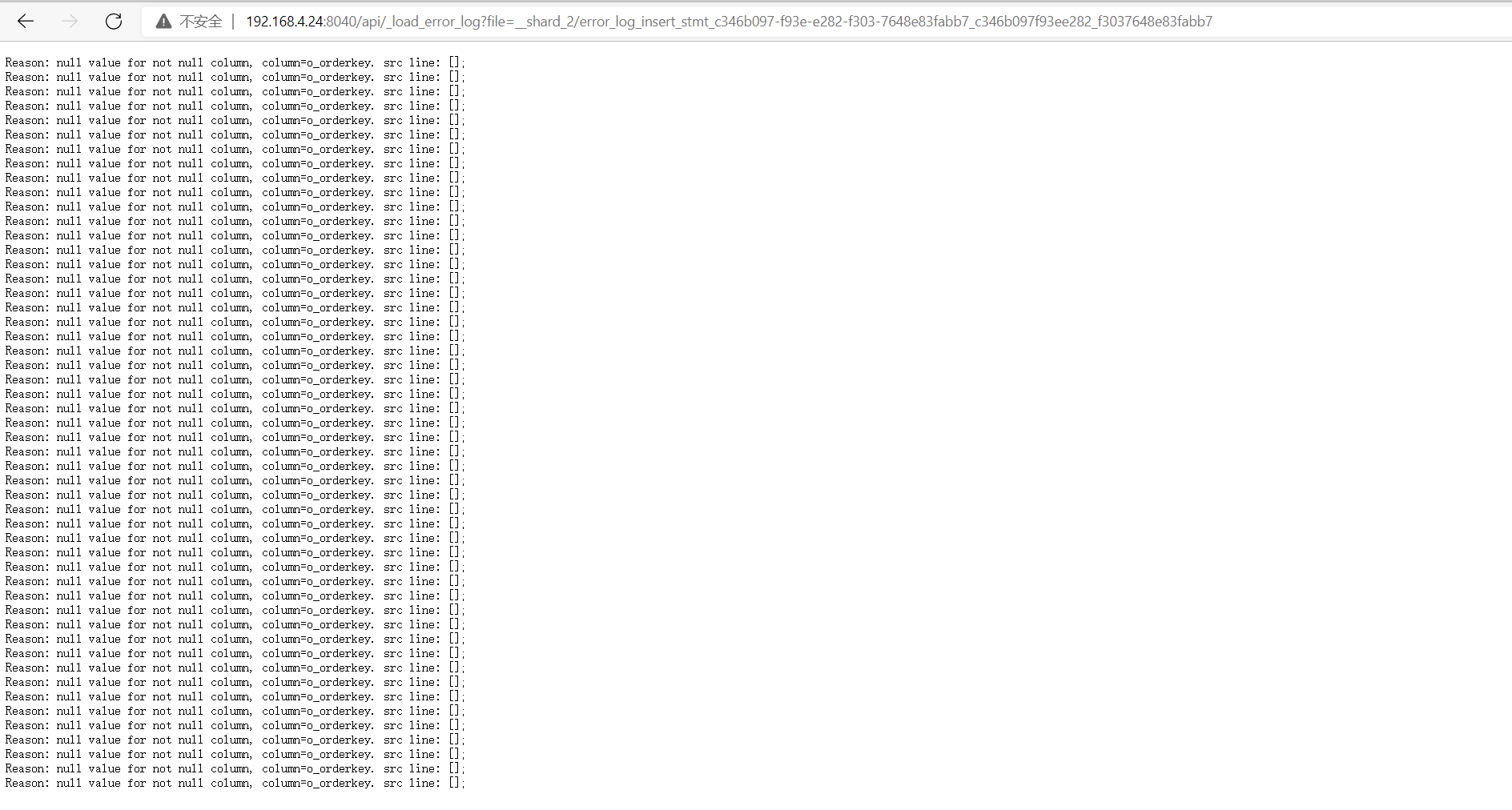

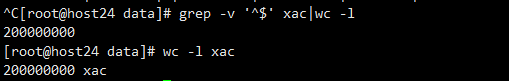

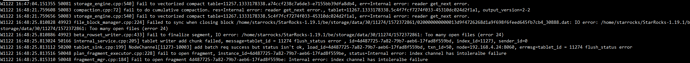

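[Details] I am loading data with Stream Load from a 168 GB local CSV file. Over several attempts, the job terminated each time "WriteDataTimeMs" reached about 600 s, with status "Fail" and reason "Cancelled".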

Steps: I ran the following command:

nohup curl --location-trusted -u root: -H "label:orders1t" -H "column_separator:|" -T /home/starrocks/2.18.0_rc2/dbgen/1tdata/data/orders.tbl http://192.168.4.24:8040/api/tpch/orders/_stream_load &
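For anyone scripting this load, here is a minimal Python sketch that builds the same request the curl command sends: BE address `192.168.4.24:8040`, database `tpch`, table `orders`, user `root` with an empty password, and the `label` and `column_separator` headers, all taken from the command above. The optional `timeout` header (in seconds) is an addition on my part: my understanding is that StarRocks Stream Load accepts it and otherwise falls back to a 600-second default, but please verify that against the 1.19 documentation. The helper name `build_stream_load_request` and the value 7200 are hypothetical, and the sketch only builds the URL and headers; it does not send the file.

```python
import base64
from typing import Dict, Optional, Tuple


def build_stream_load_request(
    host: str,
    port: int,
    db: str,
    table: str,
    user: str,
    password: str,
    label: str,
    column_separator: str,
    timeout_s: Optional[int] = None,
) -> Tuple[str, Dict[str, str]]:
    """Return the URL and headers for a Stream Load PUT (hypothetical helper)."""
    url = f"http://{host}:{port}/api/{db}/{table}/_stream_load"
    # Equivalent of curl's `-u root:` (user "root", empty password) as Basic auth.
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    headers = {
        "Authorization": f"Basic {token}",
        "label": label,
        "column_separator": column_separator,
    }
    if timeout_s is not None:
        # Assumption: Stream Load reads a per-job timeout in seconds from this header.
        headers["timeout"] = str(timeout_s)
    return url, headers


url, headers = build_stream_load_request(
    "192.168.4.24", 8040, "tpch", "orders", "root", "", "orders1t", "|", timeout_s=7200
)
print(url)
print(headers)
```

Streaming the 168 GB body itself is still best left to curl `-T`; if the `timeout` header is supported, it would be passed the same way as the others, e.g. `-H "timeout:7200"`.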

The response was:

{
  "TxnId": 26,
  "Label": "orders1t",
  "Status": "Fail",
  "Message": "cancelled",
  "NumberTotalRows": 0,
  "NumberLoadedRows": 0,
  "NumberFilteredRows": 0,
  "NumberUnselectedRows": 0,
  "LoadBytes": 62076297216,
  "LoadTimeMs": 704684,
  "BeginTxnTimeMs": 0,
  "StreamLoadPutTimeMs": 2,
  "ReadDataTimeMs": 528790,
  "WriteDataTimeMs": 601733,
  "CommitAndPublishTimeMs": 0
}
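To put the response in numbers, here is a small Python sketch that reads a subset of the fields above and computes how much of the file had arrived, the average rate over `LoadTimeMs`, and how long the whole file would take at that rate. It assumes "168G" means 168 GiB and treats the roughly 600 s cutoff described above as a fixed limit; `summarize` is a hypothetical helper, not part of any StarRocks tool.

```python
import json

# Subset of the response body from the failed job, copied from above.
RESPONSE = """{
  "TxnId": 26, "Label": "orders1t", "Status": "Fail", "Message": "cancelled",
  "LoadBytes": 62076297216, "LoadTimeMs": 704684,
  "ReadDataTimeMs": 528790, "WriteDataTimeMs": 601733
}"""

FILE_BYTES = 168 * 1024**3  # assumption: "168G" means 168 GiB


def summarize(resp: dict, file_bytes: int, timeout_s: int = 600) -> dict:
    """Derive progress and rate figures from a Stream Load response."""
    load_s = resp["LoadTimeMs"] / 1000
    rate = resp["LoadBytes"] / load_s  # average bytes/s over the whole job
    return {
        "fraction_received": resp["LoadBytes"] / file_bytes,
        "mib_per_s": rate / 1024**2,
        # Assumption: the ~600 s cutoff is a fixed limit on the job.
        "write_exceeded_timeout": resp["WriteDataTimeMs"] / 1000 > timeout_s,
        "estimated_total_s": file_bytes / rate,
    }


summary = summarize(json.loads(RESPONSE), FILE_BYTES)
for key, value in summary.items():
    print(f"{key}: {value}")
```

Under these assumptions, about a third of the file had been received when the job was cancelled, at roughly 84 MiB/s, so the full file would need about 2,050 seconds (around 34 minutes), well beyond 600 s.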

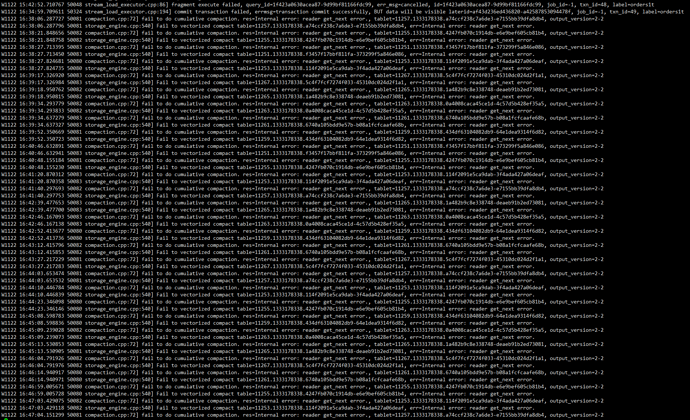

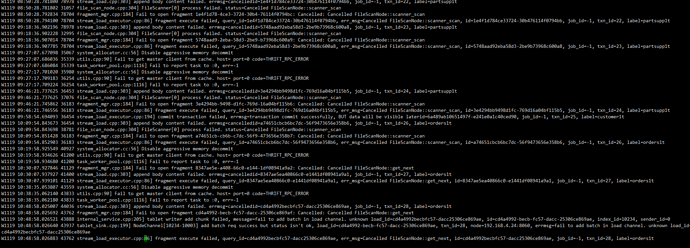

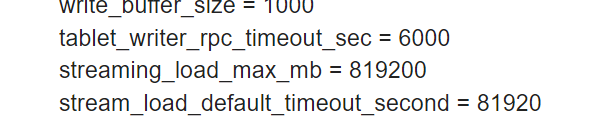

Parameters appended to be.conf:

push_write_mbytes_per_sec = 30

write_buffer_size = 1000

tablet_writer_rpc_timeout_sec = 6000

streaming_load_max_mb = 819200

stream_load_default_timeout_second = 81920

cumulative_compaction_num_threads_per_disk = 4

base_compaction_num_threads_per_disk = 2

cumulative_compaction_check_interval_seconds = 2
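One thing worth double-checking in the list above is which process reads each key. As far as I know, StarRocks documents `stream_load_default_timeout_second` as an FE configuration item (default 600 seconds) rather than a BE one, so placing it in be.conf may have no effect; please confirm this against the configuration reference for 1.19. The Python sketch below parses `key = value` lines like the ones above and flags any key found in a small, hypothetical `FE_SIDE_KEYS` set, which you would fill in after checking the docs.

```python
from typing import Dict, List

# The lines appended to be.conf, copied from above.
BE_CONF_APPENDED = """
push_write_mbytes_per_sec = 30
write_buffer_size = 1000
tablet_writer_rpc_timeout_sec = 6000
streaming_load_max_mb = 819200
stream_load_default_timeout_second = 81920
cumulative_compaction_num_threads_per_disk = 4
base_compaction_num_threads_per_disk = 2
cumulative_compaction_check_interval_seconds = 2
"""

# Hypothetical list of keys believed to be read by the FE, not the BE.
# Verify each entry against the StarRocks configuration reference.
FE_SIDE_KEYS = {"stream_load_default_timeout_second"}


def parse_conf(text: str) -> Dict[str, str]:
    """Parse `key = value` lines, skipping blank lines and `#` comments."""
    conf: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        conf[key.strip()] = value.strip()
    return conf


def misplaced_keys(conf: Dict[str, str]) -> List[str]:
    """Return keys from the BE file that appear in FE_SIDE_KEYS, sorted."""
    return sorted(k for k in conf if k in FE_SIDE_KEYS)


conf = parse_conf(BE_CONF_APPENDED)
print(misplaced_keys(conf))
```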

[Import/export method] Stream Load

[StarRocks version] StarRocks-1.19.1

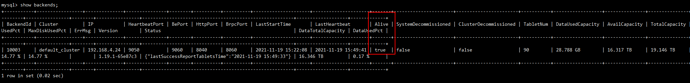

[Cluster size] 1 FE + 1 BE + 1 Broker, all deployed on a single machine

[Machine info] 104 cores / 376 GB RAM / 10 GbE network

[Attachments]

Are there any other parameters I need to set?
