They are all on one HDFS cluster: the same HDFS cluster and the same Kerberos KDC.

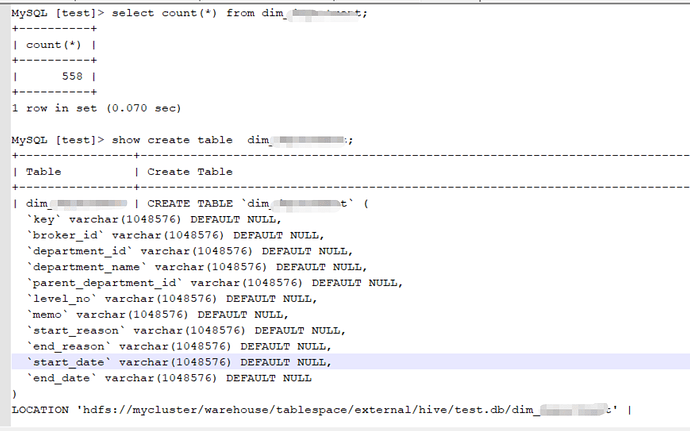

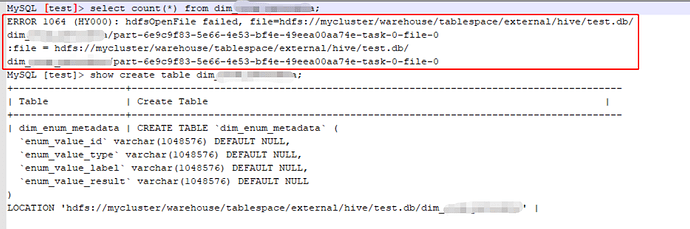

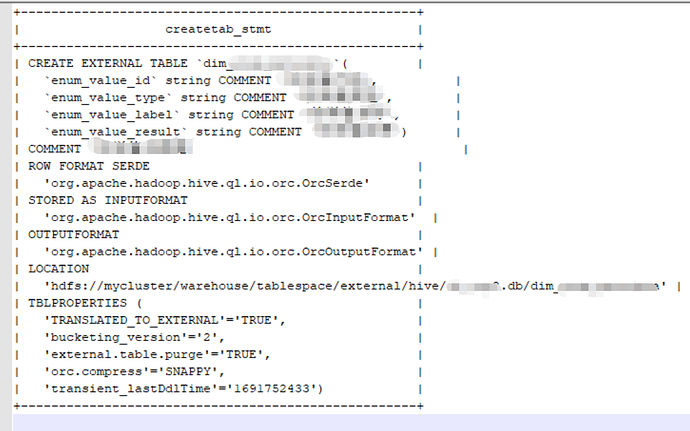

From the same catalog, pick one table that can be queried and one that can't, and run SHOW CREATE TABLE on each so we can compare them.
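A minimal sketch for comparing the two DDLs line by line. It assumes you paste each SHOW CREATE TABLE result into a string. The table names and DDL text in the demo are made-up placeholders, not the tables from this thread.

```python
import difflib
from typing import List


def diff_ddl(ddl_ok: str, ddl_bad: str) -> List[str]:
    """Return unified-diff lines between two SHOW CREATE TABLE outputs."""
    return list(
        difflib.unified_diff(
            ddl_ok.strip().splitlines(),
            ddl_bad.strip().splitlines(),
            fromfile="queryable_table",  # hypothetical label
            tofile="failing_table",      # hypothetical label
            lineterm="",
        )
    )


if __name__ == "__main__":
    # Placeholder DDLs; replace with the real SHOW CREATE TABLE output.
    ok = "CREATE TABLE t_ok (id int)\nLOCATION 'hdfs://mycluster/warehouse/a'"
    bad = "CREATE TABLE t_bad (id int)\nLOCATION 'hdfs://mycluster/warehouse/b'"
    for line in diff_ddl(ok, bad):
        print(line)
```

Lines that differ only in the table name are expected. Differences in LOCATION, file format, or storage properties are the ones worth a closer look.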

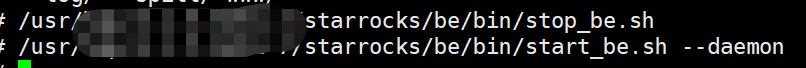

Try restarting the BE. After the restart, make the very first query one against the table that currently fails. What happens?

Restart command:

The query still fails. This is the BE log from that time:

```text
I0830 16:37:10.384459 90235 tablet_meta.h:220] Set binlog config of tablet=24033 to BinlogConfig={version=-1, binlog_enable=false, binlog_ttl_second=1800, binlog_max_size=9223372036854775807}
I0830 16:37:10.384527 90235 tablet_meta.h:220] Set binlog config of tablet=24037 to BinlogConfig={version=-1, binlog_enable=false, binlog_ttl_second=1800, binlog_max_size=9223372036854775807}
I0830 16:37:10.384640 90235 data_dir.cpp:330] load tablet from meta finished, loaded tablet: 140, error tablet: 0, path: /hadoop/starrocks-data
I0830 16:37:10.396725 90302 fragment_mgr.cpp:529] FragmentMgr cancel worker start working.
I0830 16:37:10.408212 90139 exec_env.cpp:222] [PIPELINE] Exec thread pool: thread_num=32
I0830 16:37:10.567082 91060 runtime_filter_worker.cpp:776] RuntimeFilterWorker start working.
I0830 16:37:10.567353 91062 profile_report_worker.cpp:111] ProfileReportWorker start working.
I0830 16:37:10.567427 91063 result_buffer_mgr.cpp:145] result buffer manager cancel thread begin.
I0830 16:37:10.572373 90139 load_path_mgr.cpp:68] Load path configured to [/hadoop/starrocks-data/mini_download]
I0830 16:37:10.585605 91236 compaction_manager.cpp:69] start compaction scheduler
I0830 16:37:10.585848 91238 storage_engine.cpp:663] start to check compaction
I0830 16:37:10.586555 91248 olap_server.cpp:753] begin to do tablet meta checkpoint:/hadoop/starrocks-data
I0830 16:37:10.586872 91251 olap_server.cpp:703] try to perform path gc by tablet!
I0830 16:37:10.586899 90139 olap_server.cpp:230] All backgroud threads of storage engine have started.
I0830 16:37:10.587507 90139 thrift_server.cpp:388] heartbeat has started listening port on 9050
I0830 16:37:10.589571 90139 starlet_server.cc:58] Starlet grpc server started on 0.0.0.0:9070
I0830 16:37:10.589676 90139 backend_base.cpp:79] StarRocksInternalService has started listening port on 9060
I0830 16:37:10.589767 91262 starlet.cc:83] Empty starmanager address, skip reporting!
I0830 16:37:10.590035 90139 thrift_server.cpp:388] BackendService has started listening port on 9060
I0830 16:37:10.598877 90139 server.cpp:1069] Server[starrocks::BackendInternalServiceImplstarrocks::PInternalService+starrocks::LakeServiceImpl+starrocks::BackendInternalServiceImpldoris::PBackendService] is serving on port=8060.
I0830 16:37:10.598897 90139 server.cpp:1072] Check out http://ambaric1:8060 in web browser.
I0830 16:37:15.335920 91868 heartbeat_server.cpp:76] get heartbeat from FE.host:172.28.88.87, port:9020, cluster id:1279666403, counter:1
I0830 16:37:15.335964 91868 heartbeat_server.cpp:98] Updating master info: TMasterInfo(network_address=TNetworkAddress(hostname=172.28.88.87, port=9020), cluster_id=1279666403, epoch=14, token=8c7b2e79-a40c-419c-8885-8d9a90c2f175, backend_ip=172.28.88.91, http_port=8040, heartbeat_flags=0, backend_id=10006, min_active_txn_id=77160)
I0830 16:37:15.335994 91868 heartbeat_server.cpp:103] Master FE is changed or restarted. report tablet and disk info immediately
I0830 16:37:15.582964 91215 tablet_manager.cpp:834] Report all 140 tablets info
I0830 16:37:15.585249 91215 tablet_manager.cpp:834] Report all 140 tablets info
I0830 16:37:20.596423 91262 starlet.cc:83] Empty starmanager address, skip reporting!
I0830 16:37:24.345463 90367 fragment_executor.cpp:165] Prepare(): query_id=71707c4e-4710-11ee-bdb5-525400da7882 fragment_instance_id=71707c4e-4710-11ee-bdb5-525400da7885 is_stream_pipeline=0 backend_num=0
I0830 16:37:25.181432 90166 daemon.cpp:202] Current memory statistics: process(6668256), query_pool(6320), load(0), metadata(307096), compaction(0), schema_change(0), column_pool(0), page_cache(0), update(0), chunk_allocator(0), clone(0), consistency(0)
I0830 16:37:27.078356 90431 internal_service.cpp:486] cancel fragment, fragment_instance_id=71707c4e-4710-11ee-bdb5-525400da7885, reason: InternalError
W0830 16:37:27.078420 90431 fragment_context.cpp:123] [Driver] Canceled, query_id=71707c4e-4710-11ee-bdb5-525400da7882, instance_id=71707c4e-4710-11ee-bdb5-525400da7885, reason=InternalError
```

You need to look at be.out. This INFO log isn't very useful for this. Is it still the same error?
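A minimal sketch for pulling the authentication failures out of a long be.out. The first two patterns are taken from the trace in this thread. `GSSException` is an assumed extra: it is a common JDK Kerberos error class, not something shown here. `scan_file` is a hypothetical helper name.

```python
import re
from typing import Iterable, List

# First two patterns come from the be.out trace in this thread;
# "GSSException" is an assumed extra (common JDK Kerberos error class).
AUTH_ERROR_PATTERNS = [
    re.compile(r"AccessControlException"),
    re.compile(r"Client cannot authenticate via"),
    re.compile(r"GSSException"),
]


def find_auth_errors(lines: Iterable[str]) -> List[str]:
    """Return the lines that match any Kerberos/SASL failure pattern."""
    return [
        line.rstrip("\n")
        for line in lines
        if any(p.search(line) for p in AUTH_ERROR_PATTERNS)
    ]


def scan_file(path: str) -> List[str]:
    """Scan a log file such as be.out; undecodable bytes are replaced, not fatal."""
    with open(path, encoding="utf-8", errors="replace") as f:
        return find_auth_errors(f)
```

For example, `scan_file("/path/to/be/log/be.out")` lists every matching line. The path is a placeholder, so point it at your own BE log directory.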

Same error as before. The error in be.out is still Kerberos-related. Here is the log:

```text
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/hdp/3.1.5.6091-7/starrocks/be/lib/jni-packages/starrocks-jdbc-bridge-jar-with-dependencies.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/hdp/3.1.5.6091-7/starrocks/be/lib/hadoop/common/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
hdfsOpenFile(hdfs://mycluster/warehouse/tablespace/external/hive/test.db/tablename/part-6e9c9f83-5e66-4e53-bf4e-49eea00aa74e-task-0-file-0): FileSystem#open((Lorg/apache/hadoop/fs/Path;I)Lorg/apache/hadoop/fs/FSDataInputStream;) error:
AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]java.io.IOException: DestHost:destPort ambarim1:8020 , LocalHost:localPort ambaric3/172.28.88.93:0. Failed on local exception: java.io.IOException: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
at java.lang.reflect.Constructor.newInstance(Constructor.java:423)
at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:930)
at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:905)
at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1571)
at org.apache.hadoop.ipc.Client.call(Client.java:1513)
at org.apache.hadoop.ipc.Client.call(Client.java:1410)
at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:258)
at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:139)
at com.sun.proxy.$Proxy13.getBlockLocations(Unknown Source)
at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getBlockLocations(ClientNamenodeProtocolTranslatorPB.java:334)
at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
at java.lang.reflect.Method.invoke(Method.java:498)
at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:433)
at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:166)
at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:158)
at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:96)
at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:362)
at com.sun.proxy.$Proxy14.getBlockLocations(Unknown Source)
at org.apache.hadoop.hdfs.DFSClient.callGetBlockLocations(DFSClient.java:900)
at org.apache.hadoop.hdfs.DFSClient.getLocatedBlocks(DFSClient.java:889)
at org.apache.hadoop.hdfs.DFSClient.getLocatedBlocks(DFSClient.java:878)
at org.apache.hadoop.hdfs.DFSClient.open(DFSClient.java:1046)
at org.apache.hadoop.hdfs.DistributedFileSystem$4.doCall(DistributedFileSystem.java:343)
at org.apache.hadoop.hdfs.DistributedFileSystem$4.doCall(DistributedFileSystem.java:339)
at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)
at org.apache.hadoop.hdfs.DistributedFileSystem.open(DistributedFileSystem.java:356)
Caused by: java.io.IOException: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
at org.apache.hadoop.ipc.Client$Connection$1.run(Client.java:738)
at java.security.AccessController.doPrivileged(Native Method)
at javax.security.auth.Subject.doAs(Subject.java:422)
at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1899)
at org.apache.hadoop.ipc.Client$Connection.handleSaslConnectionFailure(Client.java:693)
at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:796)
at org.apache.hadoop.ipc.Client$Connection.access$3800(Client.java:347)
at org.apache.hadoop.ipc.Client.getConnection(Client.java:1632)
at org.apache.hadoop.ipc.Client.call(Client.java:1457)
… 23 more
Caused by: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]
at org.apache.hadoop.security.SaslRpcClient.selectSaslClient(SaslRpcClient.java:179)
at org.apache.hadoop.security.SaslRpcClient.saslConnect(SaslRpcClient.java:392)
at org.apache.hadoop.ipc.Client$Connection.setupSaslConnection(Client.java:561)
at org.apache.hadoop.ipc.Client$Connection.access$2100(Client.java:347)
at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:783)
at org.apache.hadoop.ipc.Client$Connection$2.run(Client.java:779)
at java.security.AccessController.doPrivileged(Native Method)
at javax.security.auth.Subject.doAs(Subject.java:422)
at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1899)
at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:779)
… 26 more
```

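Strange. On all three BEs, be.conf, hive-site.xml, hdfs-site.xml, core-site.xml, hadoop-env.sh, krb5.conf and the crontab job are identical. Running kinit manually with the keytab works on every node, and HDFS and Hive can both be queried from each one. I don't know why only the third BE throws this error when it runs a query.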

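This is a Kerberos error. Either the kinit ticket has expired, or something is misconfigured somewhere. Kerberos problems can be hard to pin down. Enable Kerberos debug mode to see the specific cause.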
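To rule out the expired-ticket case, here is a minimal sketch that parses `klist` output and reports tickets that have already expired. It assumes the MIT `klist` layout of `<valid starting> <expires> <service principal>` rows with `%m/%d/%Y %H:%M:%S` timestamps. The exact format varies by version and locale, so adjust `KLIST_TIME_FMT` if yours differs.

```python
import re
from datetime import datetime
from typing import List, Tuple

# Assumed timestamp layout of MIT klist output; it varies by version/locale
# (for 2-digit years pass fmt="%m/%d/%y %H:%M:%S").
KLIST_TIME_FMT = "%m/%d/%Y %H:%M:%S"

# One ticket row: <valid starting> <expires> <service principal>
_TS = r"\d{2}/\d{2}/\d{2,4} \d{2}:\d{2}:\d{2}"
_ROW = re.compile(rf"^({_TS})\s+({_TS})\s+(\S+)")


def parse_klist(text: str, fmt: str = KLIST_TIME_FMT) -> List[Tuple[str, datetime]]:
    """Return (service principal, expiry time) for every ticket row in klist output."""
    tickets = []
    for line in text.splitlines():
        m = _ROW.match(line.strip())
        if m:
            tickets.append((m.group(3), datetime.strptime(m.group(2), fmt)))
    return tickets


def expired_tickets(text: str, now: datetime, fmt: str = KLIST_TIME_FMT) -> List[str]:
    """Return the principals whose tickets have already expired at `now`."""
    return [principal for principal, expires in parse_klist(text, fmt) if expires <= now]
```

Feed it `subprocess.run(["klist"], capture_output=True, text=True).stdout` together with `datetime.now()`. Run it as the same OS user that runs the BE, because the ticket cache is per user. A ticket that is valid for your login shell can still be missing or expired for the BE's user.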

Can I add -Dsun.security.krb5.debug=true to the StarRocks startup script? That counts as enabling Kerberos debug, right?

Yes. Then check whether be.out prints any related output.

Strange, nothing looks wrong. Manual authentication works fine.

Is the JDK on this machine the same as on the other nodes?

Please post the klist output and /etc/krb5.conf from the problematic node.

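They're the same. I manually created a single, shared StarRocks principal and keytab, and that solved the problem. I no longer use the Hive keytab. Thanks, everyone, for the help.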

So it looks like the problem was the keytab. Did you compare the keytabs' MD5 checksums?
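A minimal sketch for comparing file checksums across the BE hosts. Run `fingerprint` on each host, collect the resulting dicts (for example, print them as JSON), and pass any two of them to `mismatches`. The file list is up to you. `/etc/krb5.conf` is from this thread. A keytab path such as `/etc/security/keytabs/hive.service.keytab` is an assumed HDP-style default, so use your real path.

```python
import hashlib
from pathlib import Path
from typing import Dict, Iterable, List


def md5_of(path, chunk_size: int = 1 << 20) -> str:
    """MD5 hex digest of a file, read in chunks so large files are fine."""
    h = hashlib.md5()
    with Path(path).open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def fingerprint(paths: Iterable[str]) -> Dict[str, str]:
    """Map each path to its MD5; run this on every BE host."""
    return {str(p): md5_of(p) for p in paths}


def mismatches(a: Dict[str, str], b: Dict[str, str]) -> List[str]:
    """Paths whose digests differ between two hosts (or exist on only one)."""
    return sorted(k for k in a.keys() | b.keys() if a.get(k) != b.get(k))
```

A differing keytab hash is not automatically a fault. If each host's keytab holds a host-specific principal (for example `hive/_HOST`), the files are expected to differ.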

It may be related to both the keytab and the user. Previously we used the hive keytab and the hive user. After switching everything to starrocks, it works.

So BE1 and BE2 are fine now? Does only BE3 have the problem, or do all three?

If the problem is accessing the DataNodes, check whether the DataNode Kerberos options in core-site.xml are configured correctly.

The FE looks fine, since the FE only talks to the NameNode.

Check the following properties in both core-site.xml and hdfs-site.xml:

```xml
<property>
  <name>hadoop.security.authorization</name>
  <value>true</value>
</property>
<property>
  <name>dfs.namenode.kerberos.principal</name>
  <value>hdfs/_HOST@EMR.C-8120A41F6B0C443D.COM</value>
</property>
<property>
  <name>dfs.datanode.kerberos.principal</name>
  <value>hdfs/_HOST@EMR.C-8120A41F6B0C443D.COM</value>
</property>
```
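A minimal sketch for checking these properties in the site files a BE actually loads. It merges the `<property>` entries from several files and reports which expected keys are missing. `hadoop.security.authentication` is added to the list as an assumption beyond the thread: it is the Hadoop switch that selects `kerberos` rather than `simple` authentication. The conf directory in the usage note is inferred from the install path in the logs, so verify it.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Iterable, List

# Keys suggested in the thread, plus hadoop.security.authentication
# (added as an assumption: it selects "kerberos" vs "simple").
EXPECTED_KEYS = (
    "hadoop.security.authentication",
    "hadoop.security.authorization",
    "dfs.namenode.kerberos.principal",
    "dfs.datanode.kerberos.principal",
)


def props_from_root(root: ET.Element) -> Dict[str, str]:
    """Collect <property><name>/<value> pairs from a Hadoop *-site.xml tree."""
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def load_site_files(paths: Iterable[str]) -> Dict[str, str]:
    """Merge several site files; a later file overrides an earlier one."""
    merged: Dict[str, str] = {}
    for path in paths:
        merged.update(props_from_root(ET.parse(path).getroot()))
    return merged


def missing_keys(props: Dict[str, str], expected: Iterable[str] = EXPECTED_KEYS) -> List[str]:
    """Expected keys that are absent from the merged properties."""
    return [k for k in expected if k not in props]
```

For example: `missing_keys(load_site_files(["/usr/hdp/3.1.5.6091-7/starrocks/be/conf/core-site.xml", "/usr/hdp/3.1.5.6091-7/starrocks/be/conf/hdfs-site.xml"]))`. Running it on all three BEs and comparing the merged dicts also catches values that differ between hosts.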