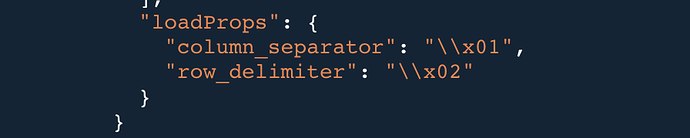

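[Problem description] I use DataX-Web with the StarRocks Writer plugin that StarRocks provides to import data from a MySQL database into StarRocks. The import format set in the script's loadProps parameter is CSV, and whenever a field in the MySQL table contains the value '\N', the job fails with an error.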
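
A likely explanation, which the thread itself does not confirm: in CSV mode StarRocks reads the two characters \N as NULL, and as far as I know the writer also encodes SQL NULL as \N. A genuine '\N' string from MySQL is then indistinguishable from NULL and gets rejected by a NOT NULL column. The sketch below mimics both sides of that round trip; the separator value and the function names are hypothetical, not the plugin's code.

```python
# Hypothetical sketch: a CSV writer/loader pair that uses \N as the NULL marker
# cannot tell a real NULL apart from the literal string "\N".
NULL_MARKER = "\\N"   # the two characters backslash + N
COLUMN_SEP = "\x01"   # assumed separator; the real job's loadProps may differ


def to_csv_line(row):
    """Serialize one row the way a \\N-for-NULL CSV writer would (sketch)."""
    return COLUMN_SEP.join(NULL_MARKER if v is None else str(v) for v in row)


def parse_csv_line(line):
    """Parse one line the way a loader that maps \\N to NULL would (sketch)."""
    return [None if field == NULL_MARKER else field for field in line.split(COLUMN_SEP)]


if __name__ == "__main__":
    source_row = ["1682031049539674113", "\\N", None]  # 2nd value is the literal text \N
    line = to_csv_line(source_row)
    print(parse_csv_line(line))  # ['1682031049539674113', None, None]
```

Both the literal '\N' and the real NULL come back as None, which matches the "NULL value in non-nullable column" errors in the error log.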

[Error message] 2023-07-21 04:00:11.786 [Thread-1] WARN StarRocksWriterManager - Failed to flush batch data to StarRocks, retry times = 0

2023-07-21 04:00:11 [AnalysisStatistics.analysisStatisticsLog-53] java.io.IOException: Failed to flush data to StarRocks.

2023-07-21 04:00:11 [AnalysisStatistics.analysisStatisticsLog-53] {"Status":"Fail","BeginTxnTimeMs":1,"Message":"too many filtered rows","NumberUnselectedRows":0,"CommitAndPublishTimeMs":0,"Label":"042a7138-43a4-46d6-9966-771b62ee49c3","LoadBytes":6857914,"StreamLoadPlanTimeMs":1,"NumberTotalRows":35552,"WriteDataTimeMs":329,"TxnId":164100,"LoadTimeMs":331,"ErrorURL":"http://10.253.250.91:8040/api/_load_error_log?file=error_log_f64ed837b272e794_709e146e6c6eb0a3","ReadDataTimeMs":1,"NumberLoadedRows":35551,"NumberFilteredRows":1}

2023-07-21 04:00:11 [AnalysisStatistics.analysisStatisticsLog-53] at com.starrocks.connector.datax.plugin.writer.starrockswriter.manager.StarRocksStreamLoadVisitor.doStreamLoad(StarRocksStreamLoadVisitor.java:73) ~[starrockswriter-release.jar:na]

2023-07-21 04:00:11 [AnalysisStatistics.analysisStatisticsLog-53] at com.starrocks.connector.datax.plugin.writer.starrockswriter.manager.StarRocksWriterManager.asyncFlush(StarRocksWriterManager.java:161) [starrockswriter-release.jar:na]

2023-07-21 04:00:11 [AnalysisStatistics.analysisStatisticsLog-53] at com.starrocks.connector.datax.plugin.writer.starrockswriter.manager.StarRocksWriterManager.access$000(StarRocksWriterManager.java:21) [starrockswriter-release.jar:na]

2023-07-21 04:00:11 [AnalysisStatistics.analysisStatisticsLog-53] at com.starrocks.connector.datax.plugin.writer.starrockswriter.manager.StarRocksWriterManager$1.run(StarRocksWriterManager.java:132) [starrockswriter-release.jar:na]

2023-07-21 04:00:11 [AnalysisStatistics.analysisStatisticsLog-53] at java.lang.Thread.run(Thread.java:750) [na:1.8.0_333]
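
The Stream Load response in the log already tells most of the story: 35552 rows in the batch, 35551 loaded, 1 filtered, and the load failed with "too many filtered rows". My understanding is that Stream Load's max_filter_ratio defaults to 0, so a single filtered row fails the whole batch. A small helper like the one below (a sketch, using a trimmed copy of the response above; the function names are my own) pulls out those fields and the ErrorURL, and fetches the error log the same way the curl command does.

```python
import json
import urllib.request

# Trimmed copy of the response from the job log above (straight quotes restored).
SAMPLE_RESPONSE = (
    '{"Status": "Fail", "Message": "too many filtered rows", '
    '"NumberTotalRows": 35552, "NumberLoadedRows": 35551, '
    '"NumberFilteredRows": 1, "NumberUnselectedRows": 0, '
    '"ErrorURL": "http://10.253.250.91:8040/api/_load_error_log'
    '?file=error_log_f64ed837b272e794_709e146e6c6eb0a3"}'
)


def summarize_stream_load(response_text):
    """Extract the fields that explain a failed Stream Load from its JSON response."""
    resp = json.loads(response_text)
    return {
        "status": resp.get("Status"),
        "message": resp.get("Message"),
        "total": resp.get("NumberTotalRows"),
        "loaded": resp.get("NumberLoadedRows"),
        "filtered": resp.get("NumberFilteredRows"),
        "error_url": resp.get("ErrorURL"),
    }


def fetch_error_log(error_url, timeout=10):
    """Equivalent of the curl call: download the per-row error log (needs network access)."""
    with urllib.request.urlopen(error_url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


if __name__ == "__main__":
    s = summarize_stream_load(SAMPLE_RESPONSE)
    if s["status"] != "Success" and s["error_url"]:
        print(f"{s['filtered']} of {s['total']} rows filtered: {s['message']}")
        print("error log:", s["error_url"])
```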

Querying the error-log link then showed the detailed errors:

[root@iZuf6fq2vuvf3gs8pd9d6sZ ~]# curl http://10.253.250.92:8040/api/_load_error_log?file=error_log_f64a61dd1b712e8e_8e8a2cfc7d57e5b6

Error: NULL value in non-nullable column 'real_name'. Row: [1682031049539674113, 310100100008, '', NULL, '1', NULL, 1, 'http://wx.qlogo.cn/mmhead/vtnuMBibofcb7jV6R1VQj3c7OLIKuZPVQb7Iurv5ApfLT4zZ3oD38zg/0', '', NULL, NULL, 0, 0, 2023-07-20 22:13:47, 0, 2023-07-20 22:13:47, 0, 2023-07-21 11:24:06, 0]

Error: NULL value in non-nullable column 'alias'. Row: [1682031049539674113, 310100100008, '', '', '1', NULL, 1, 'http://wx.qlogo.cn/mmhead/vtnuMBibofcb7jV6R1VQj3c7OLIKuZPVQb7Iurv5ApfLT4zZ3oD38zg/0', '', NULL, NULL, 0, 0, 2023-07-20 22:13:47, 0, 2023-07-20 22:13:47, 0, 2023-07-21 11:24:06, 0]
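
Both rejected rows carry the same id (1682031049539674113) and fail on string columns (real_name, alias), which fits the idea that a '\N' value was read back as NULL. On a longer error log, a quick way to list every NOT NULL column that was hit is a regex over the message; the pattern below assumes the wording shown above and may not match other StarRocks versions.

```python
import re

# Matches the message wording shown above; accepts ASCII quotes (as the server
# prints them) or curly quotes (as some forums render them).
NULL_COLUMN_RE = re.compile(r"NULL value in non-nullable column ['‘]([^'’]+)['’]")


def null_violation_columns(error_log_text):
    """Return the distinct NOT NULL columns named in a load error log (sketch)."""
    return sorted(set(NULL_COLUMN_RE.findall(error_log_text)))


if __name__ == "__main__":
    log = (
        "Error: NULL value in non-nullable column 'real_name'. Row: [...]\n"
        "Error: NULL value in non-nullable column 'alias'. Row: [...]\n"
    )
    print(null_violation_columns(log))  # ['alias', 'real_name']
```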

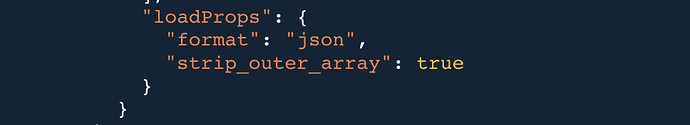

[Workaround] Change the import format set in the script's loadProps parameter from CSV to JSON.
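
Why JSON avoids the problem, as far as I can tell: JSON has a dedicated null literal, so a real NULL and the string "\N" stay distinct on the wire. The sketch assumes the writer sends each batch as a JSON array, which is why the loadProps shown here (my reading of the StarRocks DataX writer docs; verify for your version) set strip_outer_array along with the format.

```python
import json

# Assumed loadProps for JSON mode; confirm the keys against the writer docs for your version.
LOAD_PROPS_JSON = {"format": "json", "strip_outer_array": True}

# One source row: real_name holds the literal text \N, alias is a real SQL NULL.
row = {"id": 1682031049539674113, "real_name": "\\N", "alias": None}

payload = json.dumps([row])  # batch encoded as a JSON array, hence strip_outer_array
print(payload)  # [{"id": 1682031049539674113, "real_name": "\\N", "alias": null}]

decoded = json.loads(payload)[0]
print(decoded["real_name"] == "\\N", decoded["alias"] is None)  # True True
```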

[Open question] I don't know exactly how the two import formats differ in the data they send. Does anyone know the pros and cons of each?
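
Not an authoritative answer, but the trade-off can be sketched: CSV is compact because column names are not repeated, yet it needs a reserved NULL marker and separators that must never appear in the data; JSON repeats every key per row, so batches are larger, but NULL and arbitrary strings survive intact. The separators and helper names below are assumptions for illustration only.

```python
import json

COLUMN_SEP = "\x01"  # assumed CSV separators for illustration
ROW_SEP = "\x02"
NULL_MARKER = "\\N"


def csv_payload(rows, columns):
    """Encode rows as one CSV batch (sketch of a \\N-for-NULL CSV writer)."""
    lines = [
        COLUMN_SEP.join(NULL_MARKER if r[c] is None else str(r[c]) for c in columns)
        for r in rows
    ]
    return ROW_SEP.join(lines).encode("utf-8")


def json_payload(rows):
    """Encode rows as one JSON-array batch."""
    return json.dumps(rows, ensure_ascii=False).encode("utf-8")


def csv_hazards(rows):
    """List (row index, column, reason) for string values CSV cannot carry faithfully."""
    problems = []
    for i, r in enumerate(rows):
        for col, v in r.items():
            if v == NULL_MARKER:
                problems.append((i, col, "would be read back as NULL"))
            elif isinstance(v, str) and (COLUMN_SEP in v or ROW_SEP in v):
                problems.append((i, col, "contains a separator"))
    return problems


if __name__ == "__main__":
    cols = ["id", "real_name", "alias"]
    rows = [
        {"id": 1, "real_name": "Alice", "alias": None},
        {"id": 2, "real_name": "\\N", "alias": ""},
    ]
    print(len(csv_payload(rows, cols)), "bytes as CSV")
    print(len(json_payload(rows)), "bytes as JSON")
    print(csv_hazards(rows))  # [(1, 'real_name', 'would be read back as NULL')]
```

If CSV throughput matters, a pre-check like csv_hazards on the source data (or cleaning '\N' values upstream) is one option; otherwise JSON trades some payload size for correctness.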