- On version 3.0, a Broker Load of 20 million rows hangs for half an hour with no response

- Statement

LOAD LABEL ods.aloha_ordercenter__order_detail_d_123_001
(
    DATA INFILE("hdfs:xxxxxxxxxxxxxxxx")
    INTO TABLE aloha_ordercenter__order_detail_d
    FORMAT AS "orc"
    (
        order_detail_id, order_id, user_id, store_id, item_id, sku, product_name, upc,
        product_marque, department, category, unit_cost, price_with_tax, smart_price,
        price_without_tax, tax_rate, order_quantity, delivery_quantity, wm_discount,
        external_discount, discount_amount, receivable_unit_price, detail_line, item_type,
        report_code, pos_desc, weight, scalable, scale_package_code, product_total_amount,
        remark, item_extend, create_by, update_by, create_time, update_time, sda,
        vendor_nbr, file_line, exchange_item_id, exchange_upc, exchange_type,
        platform_service_charge, ts
    )
)
WITH BROKER hdfs_broker
(
    "hadoop.security.authentication" = "kerberos",
    "kerberos_principal" = "xxxx@sssssssss",
    "kerberos_keytab" = "/home/eeee/.xxxx.keytab",
    "dfs.nameservices" = "cnprod1ha",
    "dfs.ha.namenodes.cnprod1ha" = "nn1,nn2",
    "dfs.namenode.rpc-address.cnprod1ha.nn1" = "namenode:8020",
    "dfs.namenode.rpc-address.cnprod1ha.nn2" = "namenode:8020",
    "dfs.client.failover.proxy.provider.cnprod1ha" = "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"
)
PROPERTIES
(
    "timezone" = "UTC"
);

- show load

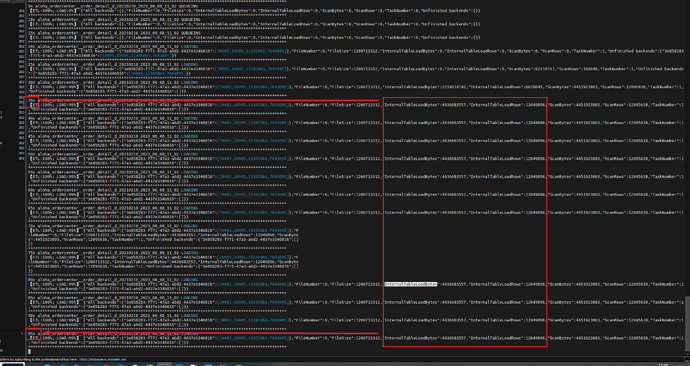

JobId: 51364474

Label: aloha_ordercenter__order_detail_d_20210211_2023_06_08_15_04

State: LOADING

Progress: ETL:100%; LOAD:99%

Type: BROKER

Priority: NORMAL

EtlInfo: NULL

TaskInfo: resource:N/A; timeout(s):17200; max_filter_ratio:0.001

ErrorMsg: NULL

CreateTime: 2023-06-08 15:04:11

EtlStartTime: 2023-06-08 15:04:14

EtlFinishTime: 2023-06-08 15:04:14

LoadStartTime: 2023-06-08 15:04:14

LoadFinishTime: NULL

TrackingSQL:

JobDetails: {"All backends":{"4b4a700f-c73b-4e0e-8946-35947c8bfe50":[7643091,11395867,11395866,11395863,7643092,7643095]},"FileNumber":8,"FileSize":1187976319,"InternalTableLoadBytes":5943581076,"InternalTableLoadRows":15862174,"ScanBytes":5941291284,"ScanRows":15862174,"TaskNumber":1,"Unfinished backends":{"4b4a700f-c73b-4e0e-8946-35947c8bfe50":[]}}
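For anyone reading the JobDetails field, here is a minimal Python sketch that parses it and summarizes the backend and row counters. The helper name `summarize` is hypothetical, and the JSON is the value from the SHOW LOAD output above, written with straight ASCII quotes so that it parses. What the numbers mean is only my reading, not a confirmed diagnosis.

```python
import json

# JobDetails value from the SHOW LOAD output, with straight ASCII quotes.
job_details_raw = (
    '{"All backends":{"4b4a700f-c73b-4e0e-8946-35947c8bfe50":'
    '[7643091,11395867,11395866,11395863,7643092,7643095]},'
    '"FileNumber":8,"FileSize":1187976319,'
    '"InternalTableLoadBytes":5943581076,"InternalTableLoadRows":15862174,'
    '"ScanBytes":5941291284,"ScanRows":15862174,"TaskNumber":1,'
    '"Unfinished backends":{"4b4a700f-c73b-4e0e-8946-35947c8bfe50":[]}}'
)


def summarize(raw):
    """Hypothetical helper: condense JobDetails into a few counters."""
    details = json.loads(raw)
    # Flatten the per-task backend id lists into sets.
    all_backends = {b for ids in details["All backends"].values() for b in ids}
    unfinished = {b for ids in details["Unfinished backends"].values() for b in ids}
    return {
        "backends_total": len(all_backends),
        "backends_unfinished": sorted(unfinished),
        "scan_rows": details["ScanRows"],
        "loaded_rows": details["InternalTableLoadRows"],
        "rows_scanned_but_not_loaded": details["ScanRows"] - details["InternalTableLoadRows"],
    }


summary = summarize(job_details_raw)
for key, value in summary.items():
    print(f"{key}: {value}")
```

In this output the unfinished-backend list is empty and every scanned row is counted as loaded, yet the job still shows State: LOADING at LOAD:99%. This may suggest the stall is in a final step after the backends report completion, but that is an inference from these counters only.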

- After disabling pipeline load, the load went back to normal

enable_pipeline_load = false