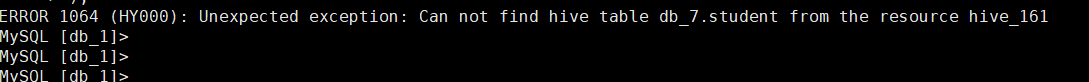

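【Details】Creating a Hive external table in StarRocks fails with an error.

【Background】Installed Kerberos, created a Hive resource, and creating the external table failed.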

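【Business impact】

【StarRocks version】e.g.: 2.5.4

【Cluster size】e.g.: 3 FE (1 follower + 2 observers) + 3 BE (FE and BE co-located)

【Machine info】vCPUs / memory / NIC, e.g.: 80C/128G/10GbE

【Contact】So that we can reach you promptly for logs while troubleshooting, please provide your contact details, e.g.: Community Group 4 - Xiao Li, or an email address. Thank you.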

CREATE EXTERNAL RESOURCE "hive_161"
PROPERTIES (
    "type" = "hive",
    "hive.metastore.uris" = "thrift://127.0.0.0:9083",
    "dfs.nameservices" = "nameservice1",
    "dfs.data.transfer.protection" = "authentication",
    "hadoop.rpc.protection" = "authentication",
    "dfs.ha.namenodes.nameservice1" = "hostname1,hostname2",
    "dfs.namenode.rpc-address.nameservice1.hostname1" = "127.0.0.0:8020",
    "dfs.namenode.rpc-address.nameservice1.hostname2" = "127.0.0.0:8020",
    "dfs.client.failover.proxy.provider.nameservice1" = "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider",
    "hadoop.security.authentication" = "kerberos",
    "hadoop.kerberos.principal" = "hive@GENIUSAFC.COM",
    "hadoop.kerberos.keytab" = "/etc/security/keytab/hive.keytab"
);

CREATE EXTERNAL TABLE student (
    id INT,
    name STRING
)
ENGINE=HIVE
PROPERTIES (
    "resource" = "hive_161",
    "database" = "db_7",
    "table" = "student"
);
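As a quick sanity check on the resource definition, the HDFS HA client properties can be checked for internal consistency before re-running the statement. The sketch below uses a hypothetical helper, `check_hdfs_ha_properties`, which is not part of StarRocks or Hadoop. It only verifies that every NameNode ID listed in `dfs.ha.namenodes.<nameservice>` has a matching `dfs.namenode.rpc-address.<nameservice>.<id>` entry, that a failover proxy provider is set, and that no two NameNodes share one RPC address. It does not validate the Kerberos settings, the metastore URI, or network connectivity.

```python
from __future__ import annotations


def check_hdfs_ha_properties(props: dict[str, str]) -> list[str]:
    """Hypothetical helper: return consistency problems found in HDFS HA client properties."""
    problems: list[str] = []
    nameservices = [ns.strip() for ns in props.get("dfs.nameservices", "").split(",") if ns.strip()]
    if not nameservices:
        problems.append("dfs.nameservices is not set")
    for ns in nameservices:
        # Every nameservice must list its NameNode IDs (two or more for HA).
        nn_key = f"dfs.ha.namenodes.{ns}"
        nn_ids = [nn.strip() for nn in props.get(nn_key, "").split(",") if nn.strip()]
        if len(nn_ids) < 2:
            problems.append(f"{nn_key} should list at least two NameNode IDs")
        # Each NameNode ID needs its own RPC address; remember which ID used each address.
        seen: dict[str, str] = {}
        for nn in nn_ids:
            addr_key = f"dfs.namenode.rpc-address.{ns}.{nn}"
            addr = props.get(addr_key)
            if addr is None:
                problems.append(f"missing {addr_key}")
                continue
            if addr in seen:
                problems.append(f"{addr_key} reuses address {addr} of NameNode {seen[addr]}")
            else:
                seen[addr] = nn
        # The client needs a proxy provider to fail over between NameNodes.
        if f"dfs.client.failover.proxy.provider.{ns}" not in props:
            problems.append(f"missing dfs.client.failover.proxy.provider.{ns}")
    return problems


# HDFS properties copied from the CREATE EXTERNAL RESOURCE statement in this post.
props = {
    "dfs.nameservices": "nameservice1",
    "dfs.ha.namenodes.nameservice1": "hostname1,hostname2",
    "dfs.namenode.rpc-address.nameservice1.hostname1": "127.0.0.0:8020",
    "dfs.namenode.rpc-address.nameservice1.hostname2": "127.0.0.0:8020",
    "dfs.client.failover.proxy.provider.nameservice1": (
        "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"
    ),
}

for problem in check_hdfs_ha_properties(props):
    print(problem)
```

Run against the properties as posted, the only finding is that both NameNode IDs point to `127.0.0.0:8020`. The addresses in the post look masked, so this may simply reflect the redaction rather than the real configuration.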