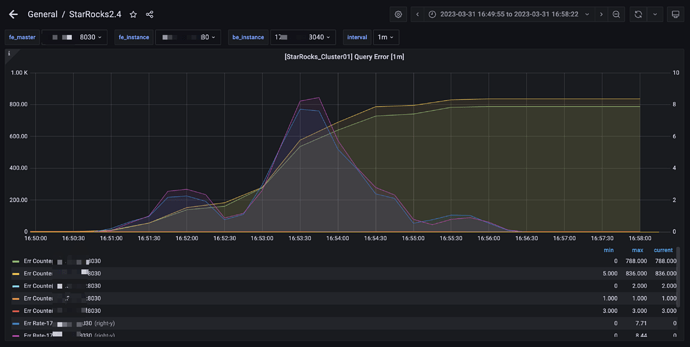

【Details】StarRocks suddenly hit widespread query timeouts, and it recovered after about 10 minutes.

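【Background】Within those 10 minutes, a large number of queries (4,700+) connected to the observer nodes. Queries from the BI pages were failing, so users may have been refreshing the pages over and over.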

【Business impact】

【StarRocks version】2.4.3

【Cluster size】5 FE (3 followers + 2 observers) + 6 BE, deployed on separate machines

【Machine specs】FE: 16 cores, 32 GB RAM; BE: 16 cores, 64 GB RAM

【Contact】Community group 6, 春江

【Attachments】

fe.warn contains many entries like these:

2023-03-31 16:50:52,276 WARN (starrocks-mysql-nio-pool-6205|11890) [ConnectContext.kill():525] kill timeout query, 172.xx.xx.xx:51606, kill connection: true

2023-03-31 16:50:52,276 WARN (starrocks-mysql-nio-pool-6205|11890) [Coordinator.cancel():1357] cancel execution of query, this is outside invoke

2023-03-31 16:50:52,279 WARN (thrift-server-pool-3|156) [Coordinator.updateFragmentExecStatus():2200] one instance report fail errorCode CANCELLED UserCancel, query_id=184c2177-cfa1-11ed-83af-525400d6cfee instance_id=184c2177-cfa1-11ed-83af-525400d6d039

2023-03-31 16:50:52,279 WARN (thrift-server-pool-1|154) [Coordinator.updateFragmentExecStatus():2200] one instance report fail errorCode CANCELLED UserCancel, query_id=184c2177-cfa1-11ed-83af-525400d6cfee instance_id=184c2177-cfa1-11ed-83af-525400d6d036

2023-03-31 16:50:52,301 WARN (thrift-server-pool-7|160) [Coordinator.updateFragmentExecStatus():2200] one instance report fail errorCode CANCELLED UserCancel, query_id=184c2177-cfa1-11ed-83af-525400d6cfee instance_id=184c2177-cfa1-11ed-83af-525400d6d020

2023-03-31 16:50:52,310 WARN (thrift-server-pool-8|161) [Coordinator.updateFragmentExecStatus():2200] one instance report fail errorCode CANCELLED UserCancel, query_id=184c2177-cfa1-11ed-83af-525400d6cfee instance_id=184c2177-cfa1-11ed-83af-525400d6d00e

2023-03-31 16:50:52,310 WARN (thrift-server-pool-9|162) [Coordinator.updateFragmentExecStatus():2200] one instance report fail errorCode CANCELLED UserCancel, query_id=184c2177-cfa1-11ed-83af-525400d6cfee instance_id=184c2177-cfa1-11ed-83af-525400d6d023

2023-03-31 16:50:52,311 WARN (thrift-server-pool-6|159) [Coordinator.updateFragmentExecStatus():2200] one instance report fail errorCode CANCELLED UserCancel, query_id=184c2177-cfa1-11ed-83af-525400d6cfee instance_id=184c2177-cfa1-11ed-83af-525400d6d034

2023-03-31 16:50:52,312 WARN (thrift-server-pool-7|160) [Coordinator.updateFragmentExecStatus():2200] one instance report fail errorCode CANCELLED UserCancel, query_id=184c2177-cfa1-11ed-83af-525400d6cfee instance_id=184c2177-cfa1-11ed-83af-525400d6d012

2023-03-31 16:50:52,319 WARN (thrift-server-pool-8|161) [Coordinator.updateFragmentExecStatus():2200] one instance report fail errorCode CANCELLED UserCancel, query_id=184c2177-cfa1-11ed-83af-525400d6cfee instance_id=184c2177-cfa1-11ed-83af-525400d6d031

2023-03-31 16:50:52,356 WARN (starrocks-mysql-nio-pool-6172|11857) [Coordinator.getNext():1295] get next fail, need cancel. status errorCode CANCELLED UserCancel, query id: 184c2177-cfa1-11ed-83af-525400d6cfee

2023-03-31 16:50:52,356 WARN (starrocks-mysql-nio-pool-6172|11857) [Coordinator.getNext():1316] query failed: Cancelled
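To gauge how large the storm was from fe.warn, a small script can count the `kill timeout query` entries per minute and per client host, and collect the query IDs whose fragments reported `CANCELLED UserCancel`. This is a minimal sketch, assuming each log entry sits on a single line in the format shown above; the function name `summarize_fe_warn` is hypothetical and not part of StarRocks.

```python
import re
from collections import Counter

# Timestamp at the start of an FE log line, captured down to the minute,
# e.g. "2023-03-31 16:50" from "2023-03-31 16:50:52,276 WARN ..."
TS_RE = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}):\d{2},\d{3} ")
# "kill timeout query, <client host>:<port>, kill connection: true"
KILL_RE = re.compile(r"kill timeout query, ([^\s:]+):(\d+)")
# "query_id=<uuid>" as printed by Coordinator.updateFragmentExecStatus()
QUERY_ID_RE = re.compile(r"query_id=([0-9a-f-]+)")


def summarize_fe_warn(lines):
    """Count timeout kills per minute and per client, and collect cancelled query IDs."""
    kills_per_minute = Counter()
    kills_per_client = Counter()
    cancelled_query_ids = set()
    for line in lines:
        ts = TS_RE.match(line)
        kill = KILL_RE.search(line)
        if ts and kill:
            kills_per_minute[ts.group(1)] += 1
            kills_per_client[kill.group(1)] += 1
        # Fragment instances that reported a UserCancel failure
        if "CANCELLED UserCancel" in line:
            qid = QUERY_ID_RE.search(line)
            if qid:
                cancelled_query_ids.add(qid.group(1))
    return kills_per_minute, kills_per_client, cancelled_query_ids
```

Any iterable of lines works, so an open file handle can be passed directly, e.g. `summarize_fe_warn(open("fe.warn.log"))` with the path adjusted to your FE log directory. A spike in `kills_per_minute` that lines up with the 10-minute window would support the timeout explanation.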

- Slow queries:

- Profile information

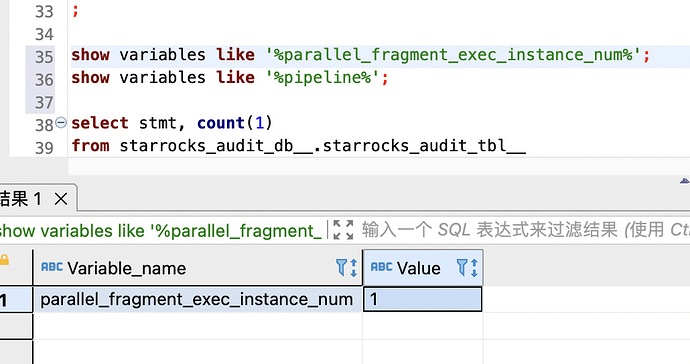

- Parallelism: show variables like '%parallel_fragment_exec_instance_num%';

- Whether the pipeline engine is enabled: show variables like '%pipeline%';

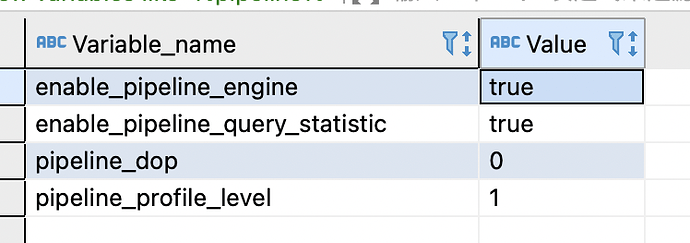

- Screenshot of BE node CPU and memory usage

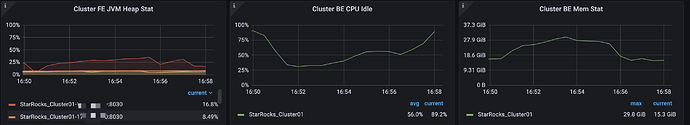

The FE audit log contains a large number (1,600+) of statements like these:

KILL CONNECTION 2385

KILL CONNECTION 1474

KILL CONNECTION 1480

KILL CONNECTION 2387

KILL CONNECTION 2479

KILL CONNECTION 1569

KILL CONNECTION 2481
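The 1,600+ count can be reproduced, and broken down by minute, with a similar scan of the FE audit log. This is only a sketch: it assumes each audited statement appears on one line containing the statement text and, optionally, starting with a `YYYY-MM-DD HH:MM` timestamp; the exact field layout should be checked against your own audit log file, and the function name `count_kill_connections` is hypothetical.

```python
import re
from collections import Counter

# "KILL CONNECTION <id>" anywhere in the line, case-insensitive
KILL_CONN_RE = re.compile(r"\bKILL\s+CONNECTION\s+(\d+)", re.IGNORECASE)
# Optional leading timestamp, captured down to the minute
MINUTE_RE = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2})")


def count_kill_connections(lines):
    """Return (total KILL CONNECTION statements, per-minute counts, distinct connection ids)."""
    total = 0
    per_minute = Counter()
    conn_ids = set()
    for line in lines:
        m = KILL_CONN_RE.search(line)
        if not m:
            continue
        total += 1
        conn_ids.add(int(m.group(1)))
        ts = MINUTE_RE.match(line)
        # Lines without a recognizable timestamp are grouped under "unknown"
        per_minute[ts.group(1) if ts else "unknown"] += 1
    return total, per_minute, conn_ids
```

Comparing `total` with `len(conn_ids)` shows whether each connection was killed once or the same connection IDs were killed repeatedly.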