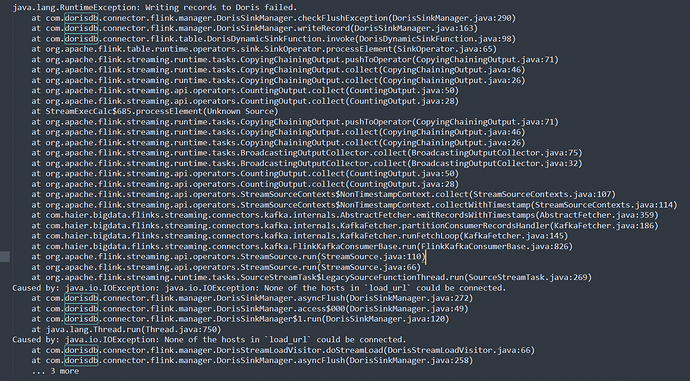

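Version 2.3.7. When writing data with the Flink plugin, the same job intermittently fails with the error below. The plugin is the version from the DorisDB era, which is why the error message still says Doris.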

Does this error mean the network is unreachable? Both the Flink job and SR (StarRocks) are deployed on Alibaba Cloud.

```
java.lang.RuntimeException: Writing records to Doris failed.
    at com.dorisdb.connector.flink.manager.DorisSinkManager.checkFlushException(DorisSinkManager.java:290)
    at com.dorisdb.connector.flink.manager.DorisSinkManager.writeRecord(DorisSinkManager.java:163)
    at com.dorisdb.connector.flink.table.DorisDynamicSinkFunction.invoke(DorisDynamicSinkFunction.java:98)
    at org.apache.flink.table.runtime.operators.sink.SinkOperator.processElement(SinkOperator.java:65)
    at org.apache.flink.streaming.runtime.tasks.CopyingChainingOutput.pushToOperator(CopyingChainingOutput.java:71)
    at org.apache.flink.streaming.runtime.tasks.CopyingChainingOutput.collect(CopyingChainingOutput.java:46)
    at org.apache.flink.streaming.runtime.tasks.CopyingChainingOutput.collect(CopyingChainingOutput.java:26)
    at org.apache.flink.streaming.api.operators.CountingOutput.collect(CountingOutput.java:50)
    at org.apache.flink.streaming.api.operators.CountingOutput.collect(CountingOutput.java:28)
    at StreamExecCalc$685.processElement(Unknown Source)
    at org.apache.flink.streaming.runtime.tasks.CopyingChainingOutput.pushToOperator(CopyingChainingOutput.java:71)
    at org.apache.flink.streaming.runtime.tasks.CopyingChainingOutput.collect(CopyingChainingOutput.java:46)
    at org.apache.flink.streaming.runtime.tasks.CopyingChainingOutput.collect(CopyingChainingOutput.java:26)
    at org.apache.flink.streaming.runtime.tasks.BroadcastingOutputCollector.collect(BroadcastingOutputCollector.java:75)
    at org.apache.flink.streaming.runtime.tasks.BroadcastingOutputCollector.collect(BroadcastingOutputCollector.java:32)
    at org.apache.flink.streaming.api.operators.CountingOutput.collect(CountingOutput.java:50)
    at org.apache.flink.streaming.api.operators.CountingOutput.collect(CountingOutput.java:28)
    at org.apache.flink.streaming.api.operators.StreamSourceContexts$NonTimestampContext.collect(StreamSourceContexts.java:107)
    at org.apache.flink.streaming.api.operators.StreamSourceContexts$NonTimestampContext.collectWithTimestamp(StreamSourceContexts.java:114)
    at com.haier.bigdata.flinks.streaming.connectors.kafka.internals.AbstractFetcher.emitRecordsWithTimestamps(AbstractFetcher.java:359)
    at com.haier.bigdata.flinks.streaming.connectors.kafka.internals.KafkaFetcher.partitionConsumerRecordsHandler(KafkaFetcher.java:186)
    at com.haier.bigdata.flinks.streaming.connectors.kafka.internals.KafkaFetcher.runFetchLoop(KafkaFetcher.java:145)
    at com.haier.bigdata.flinks.streaming.connectors.kafka.FlinkKafkaConsumerBase.run(FlinkKafkaConsumerBase.java:826)
    at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:110)
    at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:66)
    at org.apache.flink.streaming.runtime.tasks.SourceStreamTask$LegacySourceFunctionThread.run(SourceStreamTask.java:269)
Caused by: java.io.IOException: java.io.IOException: None of the hosts in load_url could be connected.
    at com.dorisdb.connector.flink.manager.DorisSinkManager.asyncFlush(DorisSinkManager.java:272)
    at com.dorisdb.connector.flink.manager.DorisSinkManager.access$000(DorisSinkManager.java:49)
    at com.dorisdb.connector.flink.manager.DorisSinkManager$1.run(DorisSinkManager.java:120)
    at java.lang.Thread.run(Thread.java:750)
Caused by: java.io.IOException: None of the hosts in load_url could be connected.
    at com.dorisdb.connector.flink.manager.DorisStreamLoadVisitor.doStreamLoad(DorisStreamLoadVisitor.java:66)
    at com.dorisdb.connector.flink.manager.DorisSinkManager.asyncFlush(DorisSinkManager.java:258)
    ... 3 more
```
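The innermost cause reads `None of the hosts in load_url could be connected.`, which suggests that when the flush ran, the sink could not open a connection to any of the FE addresses configured in `load_url`. One way to test the "network unreachable" theory from a Flink TaskManager host is a plain TCP probe of each address. The Java sketch below is a hypothetical diagnostic, not the connector's own code: it assumes `load_url` is a `;`-separated list of `host:http_port` entries, and the names `LoadUrlProbe`, `parseHosts`, `canConnect`, and `firstReachable` are illustrative only.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.ArrayList;
import java.util.List;

public class LoadUrlProbe {

    // Split a load_url value such as "fe1:8030;fe2:8030" into host:port entries.
    // ASSUMPTION: entries are separated by ';'.
    static List<String> parseHosts(String loadUrl) {
        List<String> hosts = new ArrayList<>();
        for (String part : loadUrl.split(";")) {
            String trimmed = part.trim();
            if (!trimmed.isEmpty()) {
                hosts.add(trimmed);
            }
        }
        return hosts;
    }

    // Attempt a plain TCP connect to host:port within timeoutMs; true on success.
    static boolean canConnect(String hostPort, int timeoutMs) {
        int idx = hostPort.lastIndexOf(':');
        if (idx <= 0 || idx == hostPort.length() - 1) {
            return false; // malformed entry: no host or no port
        }
        String host = hostPort.substring(0, idx);
        int port;
        try {
            port = Integer.parseInt(hostPort.substring(idx + 1));
        } catch (NumberFormatException e) {
            return false;
        }
        if (port < 0 || port > 65535) {
            return false; // InetSocketAddress would reject this port
        }
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false; // refused, timed out, or host could not be resolved
        }
    }

    // Return the first entry that accepts a connection; fail like the sink if none does.
    static String firstReachable(String loadUrl, int timeoutMs) throws IOException {
        for (String hostPort : parseHosts(loadUrl)) {
            if (canConnect(hostPort, timeoutMs)) {
                return hostPort;
            }
        }
        throw new IOException("None of the hosts in load_url could be connected.");
    }

    public static void main(String[] args) {
        String loadUrl = args.length > 0 ? args[0] : "127.0.0.1:8030";
        for (String hostPort : parseHosts(loadUrl)) {
            System.out.println(hostPort + " reachable=" + canConnect(hostPort, 1000));
        }
    }
}
```

Run it on a TaskManager host with the job's real `load_url` value, for example `java LoadUrlProbe.java 'fe1:8030;fe2:8030'` (hostnames hypothetical). Because the reported failure is intermittent, a single successful probe does not rule out short-lived connection problems; probing repeatedly around the time the job fails is more informative.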

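This could be an error such as mismatched fields, so please check that. Also upgrade the plugin; many things in the old versions no longer match the current release.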

OK, we will upgrade later.

What do you mean by fields not matching? Writes normally succeed, and after the error a retry also goes through.

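Run `cat /proc/$fe_pid/limits | grep -E 'Max processes|Max open files'` and check what maximum number of processes is set for the FE process. It is also possible that the user has reached its connection limit, so check the BE logs for errors as well. `SHOW PROPERTY FOR 'user_name' LIKE 'max_user_connections';` shows the user's maximum connection setting.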
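The shell command above is the quickest check. If the same check is wanted inside a Java tool, the sketch below reads `/proc/<pid>/limits` and prints the soft and hard values of the two rows the `grep` selects. It assumes the standard Linux layout of that file (a row name followed by soft limit, hard limit, and units columns); `ProcLimits` and `parseLimit` are hypothetical names, and the sample numbers in the usage note are illustrative, not taken from this cluster.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.List;

public class ProcLimits {

    // Return {softLimit, hardLimit} for the row starting with `name`, or null if absent.
    // Values are kept as strings because the file may contain "unlimited".
    static String[] parseLimit(List<String> lines, String name) {
        for (String line : lines) {
            if (line.startsWith(name)) {
                String[] fields = line.substring(name.length()).trim().split("\\s+");
                if (fields.length >= 2) {
                    return new String[] {fields[0], fields[1]};
                }
            }
        }
        return null;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: java ProcLimits.java <fe_pid>");
            return;
        }
        // Equivalent of: cat /proc/$fe_pid/limits
        List<String> lines = Files.readAllLines(Paths.get("/proc", args[0], "limits"));
        // Equivalent of: grep -E 'Max processes|Max open files'
        for (String name : new String[] {"Max processes", "Max open files"}) {
            String[] limit = parseLimit(lines, name);
            System.out.println(name + ": "
                    + (limit == null ? "not found" : "soft=" + limit[0] + ", hard=" + limit[1]));
        }
    }
}
```

Given a row such as `Max open files  65535  65535  files`, `parseLimit` returns the soft value `65535` and the hard value `65535`. If either limit is low relative to the number of concurrent load connections, that would be consistent with intermittent connection failures, though the BE logs remain the more direct evidence.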