Spark Load方式导入StarRocks指南

一、背景

本文主要阐述用Spark Load方式导入数据到StarRocks。

二、环境准备

2.1 部署依赖组件

这里对于Hadoop、Hive、Spark就不做详细的阐述了,大家可以使用公司自建的系统或者自己参考官方文档部署。

注:以下为本次测试使用的环境,$HOME为家目录,可根据自己实际环境调整(建议创建用户并使用/home/starrocks),另外本次测试为自己部署依赖环境,由于资源有限,会有多个服务混合部署,可根据自己实际环境调整参数

| 服务 | 节点 | 端口 | PATH |

|---|---|---|---|

| JDK | 172.26.194.2 172.26.194.3 172.26.194.4 |

N/A | $HOME/thirdparty/jdk1.8.0_202/ |

| Hadoop | namenode(172.26.194.2) resourcemanager(172.26.194.2) |

9002 8035 |

$HOME/thirdparty/hadoop-2.10.0 |

| Spark | master(172.26.194.2) | N/A | $HOME/thirdparty/spark-2.4.4-bin-hadoop2.7 |

| Hive | metastore(172.26.194.2) | 9083 | $HOME/apache-hive-2.3.7-bin |

2.2 部署StarRocks及其依赖

2.2.1 部署StarRocks

请参考 StarRocks手动部署,并建议按如下端口等配置进行部署。以下为本次测试使用的环境,starrocks部署路径为$HOME/starrocks-1.18.2,可根据自己实际环境调整$HOME

| 服务 | 节点 | 端口 | PATH |

|---|---|---|---|

| fe | 172.26.194.2 | http_port = 8030 rpc_port = 9020 query_port = 9030 edit_log_port = 9010 |

$HOME/starrocks-1.18.2/fe |

| be | 172.26.194.2 172.26.194.3 172.26.194.4 |

be_port = 9060 webserver_port = 8040 heartbeat_service_port = 9050 brpc_port = 8060 |

$HOME/starrocks-1.18.2/be |

| broker | 172.26.194.2 172.26.194.3 172.26.194.4 |

broker_ipc_port=8000 | $HOME/starrocks-1.18.2/broker |

2.2.2 配置Spark和YARN客户端

下面配置Spark和YARN客户端操作也可以在fe.conf中spark_home_default_dir指定spark客户端路径,spark_resource_path指定spark-2x.zip路径,yarn_client_path指定YARN客户端路径,详情请参考StarRocks Spark YARN客户端配置

mkdir /home/starrocks/starrocks-1.18.2/fe/lib/yarn-client

ln -s /home/starrocks/thirdparty/spark-2.4.4-bin-hadoop2.7 /home/starrocks/starrocks-1.18.2/fe/lib/spark2x

ln -s /home/starrocks/thirdparty/hadoop-2.10.0 /home/starrocks/starrocks-1.18.2/fe/lib/yarn-client

2.2.3 打包jar包

提交spark load任务的时候会提交该依赖包到hadoop上

cd /home/starrocks/starrocks-1.18.2/fe/lib/spark2x/jars

zip -q -r spark-2x.zip *

2.2.4 配置环境变量~/.bashrc

export JAVA_HOME=/home/starrocks/thirdparty/jdk1.8.0_202/

export HADOOP_HOME=/home/starrocks/thirdparty/hadoop-2.10.0

export SPARK_HOME=/home/starrocks/thirdparty/spark-2.4.4-bin-hadoop2.7

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HIVE_HOME= /home/starrocks/thirdparty/apache-hive-2.3.7-bin

export HIVE_CONF_DIR=$HIVE_HOME/conf

2.2.5 配置JAVA_HOME

lib/yarn-client/hadoop/libexec/hadoop-config.sh文件第一行添加JAVA_HOME声明,否则会在获取application状态的时候报如下错误(fe.warn.log中)

2021-07-27 21:34:25,341 WARN (Load etl checker|47) [SparkEtlJobHandler.killEtlJob():297] yarn application kill failed. app id: application_1620958422319_5507, load job id: 17426, msg: which: no /home/starrocks/starrocks-1.18.2/fe/lib/yarn-client/hadoop/bin/yarn in ((null))

Error: JAVA_HOME is not set and could not be found.

export JAVA_HOME=/home/starrocks/thirdparty/jdk1.8.0_202/

2.3 生成模拟数据

模拟数据生成脚本gen_data.py,例如“python gen_data.py m n” 可生成m列n行随机数字

#!/usr/bin/env python

import sys

import random

import time

def genRand(s = 10000):

return random.randint(1,s)

def getLine(cols = 10):

tpl = "%s\t"

line = ""

for x in range(int(cols) -1):

line = line + tpl % genRand(x + 10)

line = line + str(genRand(int(cols) + 10))

return line

def getTable(lines = 10, cols = 10):

tpl = "%s\n"

table = ""

for x in range(int(lines) ):

table = table + tpl % getLine(cols)

return table.strip()

def main():

lines = sys.argv[1]

cols = sys.argv[2]

data = getTable(lines, cols)

print(data)

if __name__ == '__main__':

main()

三、Case模拟

3.1 case csv导入

3.1.1 生成测试数据

python gen_data.py 10000 2 > demo.csv #生成1w行两列的数据

3.1.2 上传至hdfs

创建目录和上传文件都需要有对应的权限,可依据自己实际环境调整

/home/starrocks/thirdparty/hadoop-2.10.0/bin/hadoop fs -mkdir /user/starrocks

/home/starrocks/thirdparty/hadoop-2.10.0/bin/hadoop fs -put demo.csv /user/starrocks/

3.1.3 StarRocks DDL

需要提前创建demo_db数据库(create database demo_db;)

CREATE TABLE `demo_db`.`demo_table1` (

`k1` varchar(50) NULL COMMENT "",

`v1` String NULL COMMENT ""

) ENGINE=OLAP

DUPLICATE KEY(`k1` )

COMMENT "OLAP"

DISTRIBUTED BY HASH(`v1` ) BUCKETS 3

PROPERTIES (

"replication_num" = "1"

);

3.1.4 功能测试

创建spark资源,同一个spark资源只需要创建一次

需提前部署broker,我这里broker名字为broker0,下文working_dir需提前在hdfs上创建对应的路径,具体路径以实际环境为准。需要启动starrocks集群的用户有working_dir写权限

CREATE EXTERNAL RESOURCE "spark_resource0"

PROPERTIES

(

"type" = "spark",

"spark.master" = "yarn",

"spark.submit.deployMode" = "cluster",

"spark.executor.memory" = "1g",

"spark.hadoop.yarn.resourcemanager.address" = "172.26.194.2:8035",

"spark.hadoop.fs.defaultFS" = "hdfs://172.26.194.2:9002",

"working_dir" = "hdfs://172.26.194.2:9002/user/starrocks/sparketl",

"broker" = "broker0"

);

启动load任务

USE demo_db;

LOAD LABEL demo_db.label1

(

DATA INFILE("hdfs://172.26.194.2:9002/user/starrocks/demo.csv")

INTO TABLE demo_table1

COLUMNS TERMINATED BY "\t"

)

WITH RESOURCE 'spark_resource0'

(

"spark.executor.memory" = "500m",

"spark.shuffle.compress" = "true",

"spark.driver.memory" = "1500m",

"spark.testing.memory"="536870912" #512M

)

PROPERTIES

(

"timeout" = "3600",

"max_filter_ratio" = "0.2"

);

结果:

*************************** 1. row ***************************

JobId: 17407

Label: label1

State: FINISHED

Progress: ETL:100%; LOAD:100%

Type: SPARK

EtlInfo: unselected.rows=0; dpp.abnorm.ALL=1; dpp.norm.ALL=10000

TaskInfo: cluster:spark; timeout(s):3600; max_filter_ratio:0.2

ErrorMsg: NULL

CreateTime: 2021-07-27 19:15:43

EtlStartTime: 2021-07-27 19:15:56

EtlFinishTime: 2021-07-27 19:16:10

LoadStartTime: 2021-07-27 19:16:10

LoadFinishTime: 2021-07-27 19:16:15

URL: http://172.26.194.2:8098/proxy/application_1620958422319_5474/

JobDetails: {"Unfinished backends":{"00000000-0000-0000-0000-000000000000":[]},"ScannedRows":10001,"TaskNumber":1,"All backends":{"00000000-0000-0000-0000-000000000000":[-1]},"FileNumber":1,"FileSize":42883}

mysql> select * from demo_table1 limit 5;

+------+------+

| k1 | v1 |

+------+------+

| 1 | 3 |

| 1 | 5 |

| 1 | 4 |

| 1 | 3 |

| 1 | 3 |

+------+------+

5 rows in set (0.05 sec)

3.2 case 源目不匹配导入

3.2.1 生成测试数据

利用上文3.1.1的测试数据即可

3.2.2 StarRocks DDL

建表字段新增了create_time和update_time

CREATE TABLE `demo_db`.`demo_table2` (

`k1` varchar(50) NULL COMMENT "",

`v1` String NULL COMMENT "",

`create_time` datetime NULL COMMENT "",

`update_time` datetime NULL COMMENT ""

) ENGINE=OLAP

DUPLICATE KEY(`k1` )

COMMENT "OLAP"

DISTRIBUTED BY HASH(`v1` ) BUCKETS 3

PROPERTIES (

"replication_num" = "1"

);

3.2.4 功能测试

使用 3.1.4 定义的spark资源

启动load任务

USE demo_db;

LOAD LABEL demo_db.label2

(

DATA INFILE("hdfs://172.26.194.2:9002/user/starrocks/demo.csv")

INTO TABLE demo_table2

COLUMNS TERMINATED BY "\t"

(k1,v1)

SET

(

create_time=current_timestamp(),

update_time=current_timestamp()

)

)

WITH RESOURCE 'spark_resource0'

(

"spark.executor.memory" = "500m",

"spark.shuffle.compress" = "true",

"spark.driver.memory" = "1500m",

"spark.testing.memory"="536870912" #512M

)

PROPERTIES

(

"timeout" = "3600",

"max_filter_ratio" = "0.2"

);

结果:

*************************** 1. row ***************************

JobId: 23122

Label: label2

State: FINISHED

Progress: ETL:100%; LOAD:100%

Type: SPARK

EtlInfo: unselected.rows=0; dpp.abnorm.ALL=0; dpp.norm.ALL=10000

TaskInfo: cluster:spark; timeout(s):3600; max_filter_ratio:0.2

ErrorMsg: NULL

CreateTime: 2021-07-27 20:37:29

EtlStartTime: 2021-07-27 20:37:41

EtlFinishTime: 2021-07-27 20:37:56

LoadStartTime: 2021-07-27 20:37:56

LoadFinishTime: 2021-07-27 20:37:58

URL: http://172.26.194.2:8098/proxy/application_1632749512570_0026/

JobDetails: {"Unfinished backends":{"00000000-0000-0000-0000-000000000000":[]},"ScannedRows":10000,"TaskNumber":1,"All backends":{"00000000-0000-0000-0000-000000000000":[-1]},"FileNumber":1,"FileSize":43532}

mysql> select * from demo_table2 limit 5;

+------+------+---------------------+---------------------+

| k1 | v1 | create_time | update_time |

+------+------+---------------------+---------------------+

| 1 | 9 | 2021-07-27 20:37:49 | 2021-07-27 20:37:49 |

| 1 | 9 | 2021-07-27 20:37:49 | 2021-07-27 20:37:49 |

| 1 | 10 | 2021-07-27 20:37:49 | 2021-07-27 20:37:49 |

| 1 | 9 | 2021-07-27 20:37:49 | 2021-07-27 20:37:49 |

| 1 | 9 | 2021-07-27 20:37:49 | 2021-07-27 20:37:49 |

+------+------+---------------------+---------------------+

5 rows in set (0.00 sec)

3.3 case hive orc导入

3.3.1 生成orc格式数据(如果使用已有orc数据,请跳过这一步)

/home/starrocks/thirdparty/spark-2.4.4-bin-hadoop2.7/bin/spark-shell

scala> sc.setLogLevel("ERROR")

scala> val df = spark.read.option("delimiter","\t").csv("hdfs://172.26.194.2:9002/user/starrocks/demo.csv").toDF("k1","v1")

scala> df.coalesce(1).write.orc("hdfs://172.26.194.2:9002/user/starrocks/demo.orc")

scala> spark.read.orc("hdfs://172.26.194.2:9002/user/starrocks/demo.orc").show(5)

+---+---+

| k1| v1|

+---+---+

| 4| 4|

| 8| 8|

| 10| 6|

| 6| 1|

| 2| 10|

+---+---+

only showing top 5 rows

3.3.2 Hive DDL

CREATE EXTERNAL TABLE `hive_orc`(

`k1` string,

`v1` string)

ROW FORMAT SERDE

'org.apache.hadoop.hive.ql.io.orc.OrcSerde'

STORED AS INPUTFORMAT

'org.apache.hadoop.hive.ql.io.orc.OrcInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat'

LOCATION

'hdfs://172.26.194.2:9002/user/starrocks/demo.orc'

TBLPROPERTIES ( 'orc.compression'='snappy');

3.3.3 StarRocks DDL

#新建hive resource

CREATE EXTERNAL RESOURCE "hive0"

PROPERTIES (

"type" = "hive",

"hive.metastore.uris" = "thrift://172.26.194.2:9083"

);

#新建starrocks hive外表

CREATE EXTERNAL TABLE hive_external_orc

(

k1 string,

v1 string

)

ENGINE=hive

properties (

"resource" = "hive0",

"database" = "default",

"table" = "hive_orc",

"hive.metastore.uris" = "thrift://172.26.194.2:9083"

);

#新建starrocks测试表

create table demo_table3 like demo_table1;

3.3.4 功能测试

USE demo_db;

LOAD LABEL demo_db.label3

(

DATA FROM TABLE hive_external_orc

INTO TABLE demo_table3

)

WITH RESOURCE 'spark_resource0'

(

"spark.executor.memory" = "1500m",

"spark.shuffle.compress" = "true",

"spark.driver.memory" = "1500m"

)

PROPERTIES

(

"timeout" = "3600",

"max_filter_ratio" = "0.2"

);

#结果

*************************** 1. row ***************************

JobId: 19028

Label: label3

State: FINISHED

Progress: ETL:100%; LOAD:100%

Type: SPARK

EtlInfo: unselected.rows=0; dpp.abnorm.ALL=0; dpp.norm.ALL=10000

TaskInfo: cluster:spark; timeout(s):3600; max_filter_ratio:0.2

ErrorMsg: NULL

CreateTime: 2021-07-27 21:22:52

EtlStartTime: 2021-07-27 21:23:06

EtlFinishTime: 2021-07-27 21:23:34

LoadStartTime: 2021-07-27 21:23:34

LoadFinishTime: 2021-07-27 21:23:36

URL: http://172.26.194.2:8098/proxy/application_1620958422319_6070/

JobDetails: {"Unfinished backends":{"00000000-0000-0000-0000-000000000000":[]},"ScannedRows":10000,"TaskNumber":1,"All backends":{"00000000-0000-0000-0000-000000000000":[-1]},"FileNumber":0,"FileSize":0}

1 row in set (0.00 sec)

mysql> select * from demo_table3 limit 5;

+------+------+

| k1 | v1 |

+------+------+

| 1 | 11 |

| 1 | 11 |

| 1 | 9 |

| 1 | 7 |

| 1 | 9 |

+------+------+

5 rows in set (0.00 sec)

3.4 case hive parquet导入

3.4.1 生成parquet格式数据(如果使用已有parquet数据,请跳过这一步)

/home/starrocks/thirdparty/spark-2.4.4-bin-hadoop2.7/bin/spark-shell

scala> sc.setLogLevel("ERROR")

scala> val df = spark.read.option("delimiter","\t").csv("hdfs://172.26.194.2:9002/user/starrocks/demo.csv").toDF("k1","v1")

scala> df.coalesce(1).write.parquet("hdfs://172.26.194.2:9002/user/starrocks/demo.parquet")

scala> spark.read.parquet("hdfs://172.26.194.2:9002/user/starrocks/demo.parquet").show(5)

+---+---+

| k1| v1|

+---+---+

| 4| 4|

| 8| 8|

| 10| 6|

| 6| 1|

| 2| 10|

+---+---+

only showing top 5 rows

3.4.2 Hive DDL

CREATE EXTERNAL TABLE `hive_parquet`(

`k1` string,

`v1` string)

ROW FORMAT SERDE

'org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe'

STORED AS INPUTFORMAT

'org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat'

LOCATION

'hdfs://172.26.194.2:9002/user/starrocks/demo.parquet'

TBLPROPERTIES ( 'parquet.compression'='snappy');

3.4.3 StarRocks DDL

如之前已经创建hive resource,这里就不需要再次创建

create table demo_table4 like demo_table1;

#新建hive resource

CREATE EXTERNAL RESOURCE "hive0"

PROPERTIES (

"type" = "hive",

"hive.metastore.uris" = "thrift://172.26.194.2:9083"

);

#新建Starrocks hive外表

CREATE EXTERNAL TABLE hive_external_parquet

(

k1 string,

v1 string

)

ENGINE=hive

properties (

"resource" = "hive0",

"database" = "default",

"table" = "hive_parquet",

"hive.metastore.uris" = "thrift://172.26.194.2:9083"

);

3.4.4 功能测试

USE demo_db;

LOAD LABEL demo_db.label4

(

DATA FROM TABLE hive_external_parquet

INTO TABLE demo_table4

)

WITH RESOURCE 'spark_resource0'

(

"spark.executor.memory" = "1500m",

"spark.shuffle.compress" = "true",

"spark.driver.memory" = "1500m"

)

PROPERTIES

(

"timeout" = "3600",

"max_filter_ratio" = "0.2"

);

#结果

*************************** 1. row ***************************

JobId: 17424

Label: label4

State: FINISHED

Progress: ETL:100%; LOAD:100%

Type: SPARK

EtlInfo: unselected.rows=0; dpp.abnorm.ALL=0; dpp.norm.ALL=10000

TaskInfo: cluster:spark; timeout(s):3600; max_filter_ratio:0.2

ErrorMsg: NULL

CreateTime: 2021-07-27 21:17:48

EtlStartTime: 2021-07-27 21:17:59

EtlFinishTime: 2021-07-27 21:18:14

LoadStartTime: 2021-07-27 21:18:14

LoadFinishTime: 2021-07-27 21:18:18

URL: http://172.26.194.2:8098/proxy/application_1620958422319_5489/

JobDetails: {"Unfinished backends":{"00000000-0000-0000-0000-000000000000":[]},"ScannedRows":10000,"TaskNumber":1,"All backends":{"00000000-0000-0000-0000-000000000000":[-1]},"FileNumber":0,"FileSize":0}

1 row in set (0.00 sec)

mysql> select * from demo_table4 limit 5;

+------+------+

| k1 | v1 |

+------+------+

| 1 | 11 |

| 1 | 11 |

| 1 | 9 |

| 1 | 7 |

| 1 | 9 |

+------+------+

5 rows in set (0.02 sec)

四、排查问题方法

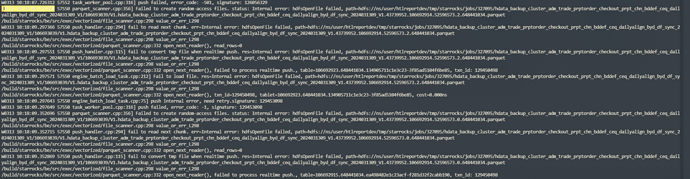

4.1 log/spark_launcher_log #有一些spark-submit失败的原因会在这里

4.2 log/fe.log #可能存在获取状态失败问题

4.3 /home/starrocks/thirdparty/hadoop-2.10.0/logs/userlogs/application_1620958422319_xxxx #作业具体失败原因

五、常见问题

5.1 yarn application status failed

yarn application status failed. spark app id: application_1620958422319_5441, load job id: 17389, timeout: 30000, msg: which: no /home/starrocks/starrocks-1.18.2-fe/fe-dd87506f-6e0b-4b33-8ebb-ce82952433a0/lib/yarn-client/hadoop/bin/yarn in ((null))

Error: JAVA_HOME is not set and could not be found

原因:

lib/yarn-client/hadoop/libexec/hadoop-config.sh中未识别JAVA_HOME

解决方案:

在lib/yarn-client/hadoop/libexec/hadoop-config.sh中声明JAVA_HOME

export JAVA_HOME=/home/starrocks/thirdparty/jdk1.8.0_202/

5.2 内存超限制

21/07/27 16:37:07 INFO yarn.Client: Verifying our application has not requested more than the maximum memory capability of the cluster (2048 MB per container)

Exception in thread "main" java.lang.IllegalArgumentException: Required executor memory (2048), overhead (384 MB), and PySpark memory (0 MB) is above the max threshold (2048 MB) of this cluster! Please check the values of 'yarn.scheduler.maximum-allocation-mb' and/or 'yarn.nodemanager.resource.memory-mb'.

at org.apache.spark.deploy.yarn.Client.verifyClusterResources(Client.scala:346)

at org.apache.spark.deploy.yarn.Client.submitApplication(Client.scala:176)

at org.apache.spark.deploy.yarn.Client.run(Client.scala:1135)

at org.apache.spark.deploy.yarn.YarnClusterApplication.start(Client.scala:1527)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:845)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:161)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:184)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:86)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:920)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:929)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

21/07/27 16:37:07 INFO util.ShutdownHookManager: Shutdown hook called

原因:

driver内存超过yarn的单个container分配的最大内存,我们测试环境是2048M;spark启动程序的时候需要额外分配非堆内内存用于用于考虑 VM 开销、内部字符串、其他本机开销等开销,默认最小为348M,本次测试配置的jvm为1800M,1800+348=2148M>2048M,所以抛异常了,详细可参考spark.driver.memoryOverhead参数 http://spark.apache.org/docs/latest/configuration.html

5.3 spark内存配置

Exception in thread "main" java.lang.IllegalArgumentException: System memory 466092032 must be at least 471859200.Please increase heap size using the --driver-memory option or spark.driver.memory in Spark configuration.

at org.apache.spark.memory.UnifiedMemoryManager$.getMaxMemory(UnifiedMemoryManager.scala:217)

at org.apache.spark.memory.UnifiedMemoryManager$.apply(UnifiedMemoryManager.scala:199)

at org.apache.spark.SparkEnv$.create(SparkEnv.scala:330)

at org.apache.spark.SparkEnv$.createExecutorEnv(SparkEnv.scala:200)

at org.apache.spark.executor.CoarseGrainedExecutorBackend$$anonfun$run$1.apply$mcV$sp(CoarseGrain

edExecutorBackend.scala:221)

at org.apache.spark.deploy.SparkHadoopUtil$$anon$2.run(SparkHadoopUtil.scala:65)

at org.apache.spark.deploy.SparkHadoopUtil$$anon$2.run(SparkHadoopUtil.scala:64)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

at org.apache.spark.deploy.SparkHadoopUtil.runAsSparkUser(SparkHadoopUtil.scala:64)

at org.apache.spark.executor.CoarseGrainedExecutorBackend$.run(CoarseGrainedExecutorBackend.scala

:188)

原因:

JVM申请的memory不够导致无法启动SparkContext,可调整spark.testing.memory避免,以下为源码可参考

解决方案:

load中添加参数

"spark.testing.memory"="536870912"

WITH RESOURCE 'spark'

(

"spark.executor.memory" = "500m",

"spark.shuffle.compress" = "true",

"spark.driver.memory" = "1500m",

"spark.testing.memory"="536870912"

)

PROPERTIES

(

"timeout" = "3600",

"max_filter_ratio" = "0.2"

);

5.4 Path does not exist

org.apache.spark.sql.AnalysisException: Path does not exist: hdfs://namenode:9002/user/starrocks/sparketl/jobs/10054/label1/17370/configs/jobconfig.json;

at org.apache.spark.sql.execution.datasources.DataSource$$anonfun$org$apache$spark$sql$execution$datasources$Da

taSource$$checkAndGlobPathIfNecessary$1.apply(DataSource.scala:558)

at org.apache.spark.sql.execution.datasources.DataSource$$anonfun$org$apache$spark$sql$execution$datasources$Da

taSource$$checkAndGlobPathIfNecessary$1.apply(DataSource.scala:545)

at scala.collection.TraversableLike$$anonfun$flatMap$1.apply(TraversableLike.scala:241)

at scala.collection.TraversableLike$$anonfun$flatMap$1.apply(TraversableLike.scala:241)

at scala.collection.immutable.List.foreach(List.scala:392)

at scala.collection.TraversableLike$class.flatMap(TraversableLike.scala:241)

at scala.collection.immutable.List.flatMap(List.scala:355)

at org.apache.spark.sql.execution.datasources.DataSource.org$apache$spark$sql$execution$datasources$DataSource$

$checkAndGlobPathIfNecessary(DataSource.scala:545)

原因:

任务失败主动清理目录导致

解决方案:

查看fe.warn.log或者fe.log具体的异常信息

5.5 Permission denied

Exception in thread "main" org.apache.hadoop.security.AccessControlException: Permission denied: user=starrocks, access=WRITE, inode="/user/starrocks":starrocks:supergroup:drwxr-xr-x

原因:

启动spark任务的时候会在指定的working_dir下上传依赖,启动spark任务的用户(即starrocks集群启动的用户,这里启动集群用户是starrocks)没有权限写working_dir目录导致的

解决方案:

./bin/hadoop fs -chmod 777 /user/starrocks