【详述】

在执行如下查询时,

SELECT

date_id,

COALESCE(SUM(value), 0) AS summary

FROM

fact_tbl

WHERE

project_id IN %(project_ids)s AND

date_id >= 20220505 AND

date_id <= 20220510

GROUP BY

date_id;

其中参数project_ids允许用户传入多个整型值。

- 当输入100个ID值时,查询正常

- 但当ID数量加一,即101个时,遭遇如下错误

ERROR 1064 (HY000): StarRocks planner use long time 3000 ms, This probably because

1. FE Full GC,

2. Hive external table fetch metadata took a long time,

3. The SQL is very complex.

You could

1. adjust FE JVM config,

2. try query again,

3. enlarge new_planner_optimize_timeout session variable

【背景】

表结构如下

CREATE TABLE `fact_tbl` (

`version_id` varchar(128) NOT NULL,

`item_id` varchar(128) NOT NULL,

`date_id` int NOT NULL,

`insertions` int NOT NULL,

`project_id` int NOT NULL

) ENGINE=OLAP

DUPLICATE KEY (

`version_id`,

`item_id`

)

COMMENT "test fact table"

PARTITION BY RANGE (`project_id`) (

around 5000 partitions

)

DISTRIBUTED BY HASH (

`version_id`

) BUCKETS 8

PROPERTIES (

"replication_num" = "1",

"in_memory" = "false",

"storage_format" = "DEFAULT"

);

表中数据量级如下

- size: 2.422 GB

- ReplicaCount: 36008

- rows: 14,007,272

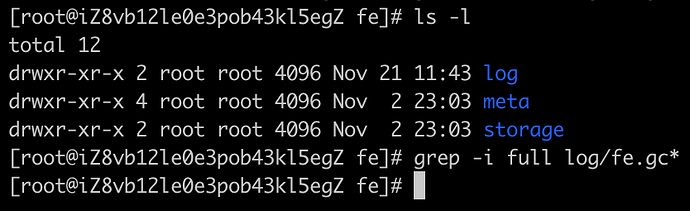

fe JVM配置如下

JAVA_OPTS="-Dlog4j2.formatMsgNoLookups=true -Xmx8192m -XX:+UseMembar -XX:SurvivorRatio=8 -XX:MaxTenuringThreshold=7 -XX:+PrintGCDateStamps -XX:+PrintGCDetails -XX:+UseConcMarkSweepGC -XX:+UseParNewGC -XX:+CMSClassUnloadingEnabled -XX:-CMSParallelRemarkEnabled -XX:CMSInitiatingOccupancyFraction=80 -XX:SoftRefLRUPolicyMSPerMB=0 -Xloggc:$STARROCKS_HOME/log/fe.gc.log.$DATE"

# For jdk 9+, this JAVA_OPTS will be used as default JVM options

JAVA_OPTS_FOR_JDK_9="-Dlog4j2.formatMsgNoLookups=true -Xmx8192m -XX:SurvivorRatio=8 -XX:MaxTenuringThreshold=7 -XX:+CMSClassUnloadingEnabled -XX:-CMSParallelRemarkEnabled -XX:CMSInitiatingOccupancyFraction=80 -XX:SoftRefLRUPolicyMSPerMB=0 -Xlog:gc*:$STARROCKS_HOME/log/fe.gc.log.$DATE:time"

【StarRocks版本】2.2.5

。

。