
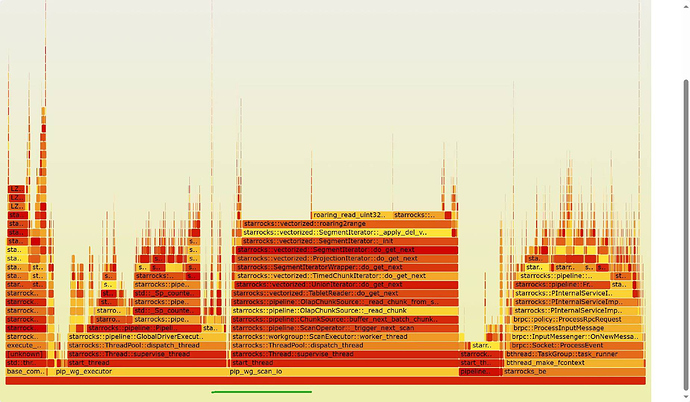

FE CPU is maxed out, and a large number of queries time out.

jstack shows the following stack trace:

```
"starrocks-mysql-nio-pool-2791" #119533 daemon prio=5 os_prio=0 tid=0x00007fe65c060800 nid=0x19244 runnable [0x00007fe629881000]
   java.lang.Thread.State: RUNNABLE
	at java.util.Arrays.hashCode(Arrays.java:4146)
	at java.util.Objects.hash(Objects.java:128)
	at com.starrocks.sql.optimizer.base.DistributionCol.hashCode(DistributionCol.java:116)
	at java.util.HashMap.hash(HashMap.java:340)
	at java.util.HashMap.get(HashMap.java:558)
	at com.starrocks.sql.optimizer.base.DistributionDisjointSet.find(DistributionDisjointSet.java:59)
	at com.starrocks.sql.optimizer.base.DistributionDisjointSet.union(DistributionDisjointSet.java:73)
	at com.starrocks.sql.optimizer.base.DistributionSpec$PropertyInfo.unionNullRelaxCols(DistributionSpec.java:98)
	at com.starrocks.sql.optimizer.OutputPropertyDeriver.computeHashJoinDistributionPropertyInfo(OutputPropertyDeriver.java:183)
	at com.starrocks.sql.optimizer.OutputPropertyDeriver.visitPhysicalJoin(OutputPropertyDeriver.java:259)
	at com.starrocks.sql.optimizer.OutputPropertyDeriver.visitPhysicalHashJoin(OutputPropertyDeriver.java:199)
	at com.starrocks.sql.optimizer.OutputPropertyDeriver.visitPhysicalHashJoin(OutputPropertyDeriver.java:76)
	at com.starrocks.sql.optimizer.operator.physical.PhysicalHashJoinOperator.accept(PhysicalHashJoinOperator.java:41)
	at com.starrocks.sql.optimizer.OutputPropertyDeriver.getOutputProperty(OutputPropertyDeriver.java:95)
	at com.starrocks.sql.optimizer.task.EnforceAndCostTask.execute(EnforceAndCostTask.java:206)
	at com.starrocks.sql.optimizer.task.SeriallyTaskScheduler.executeTasks(SeriallyTaskScheduler.java:69)
	at com.starrocks.sql.optimizer.Optimizer.memoOptimize(Optimizer.java:571)
	at com.starrocks.sql.optimizer.Optimizer.optimizeByCost(Optimizer.java:188)
	at com.starrocks.sql.optimizer.Optimizer.optimize(Optimizer.java:126)
	at com.starrocks.sql.StatementPlanner.createQueryPlanWithReTry(StatementPlanner.java:203)
	at com.starrocks.sql.StatementPlanner.planQuery(StatementPlanner.java:119)
	at com.starrocks.sql.StatementPlanner.plan(StatementPlanner.java:88)
	at com.starrocks.sql.StatementPlanner.plan(StatementPlanner.java:57)
	at com.starrocks.qe.StmtExecutor.execute(StmtExecutor.java:436)
	at com.starrocks.qe.ConnectProcessor.handleQuery(ConnectProcessor.java:362)
	at com.starrocks.qe.ConnectProcessor.dispatch(ConnectProcessor.java:476)
	at com.starrocks.qe.ConnectProcessor.processOnce(ConnectProcessor.java:742)
	at com.starrocks.mysql.nio.ReadListener.lambda$handleEvent$0(ReadListener.java:69)
	at com.starrocks.mysql.nio.ReadListener$Lambda$737/1304093818.run(Unknown Source)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
	at java.lang.Thread.run(Thread.java:750)

   Locked ownable synchronizers:
	- <0x00000004efd52070> (a java.util.concurrent.ThreadPoolExecutor$Worker)
```
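The top frames show the optimizer thread inside a disjoint set (union-find): `DistributionDisjointSet.union` calls `find`, `find` does a `HashMap.get`, and every lookup hashes a `DistributionCol` through `Objects.hash`, which builds a varargs `Object[]` and passes it to `Arrays.hashCode`. The Java sketch below only illustrates that general pattern so the frames are easier to read. `Col`, `HashDisjointSet`, and their fields are hypothetical stand-ins, not StarRocks' actual classes, and the sketch does not claim to reproduce the bug or its root cause.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Hypothetical stand-in for a distribution column (fields are assumptions;
// the real DistributionCol may differ). hashCode() uses Objects.hash, which
// boxes the fields into a new Object[] and calls Arrays.hashCode each time.
final class Col {
    final int colId;
    final boolean strict;

    Col(int colId, boolean strict) {
        this.colId = colId;
        this.strict = strict;
    }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof Col)) return false;
        Col other = (Col) o;
        return colId == other.colId && strict == other.strict;
    }

    @Override
    public int hashCode() {
        return Objects.hash(colId, strict);
    }
}

// Hypothetical HashMap-backed union-find. Each find() step is a HashMap
// lookup, so each step recomputes the key's hashCode().
final class HashDisjointSet<T> {
    private final Map<T, T> parent = new HashMap<>();

    T find(T x) {
        T p = parent.get(x);
        if (p == null) {          // unseen element becomes its own root
            parent.put(x, x);
            return x;
        }
        if (p.equals(x)) {        // x is already a root
            return x;
        }
        T root = find(p);
        parent.put(x, root);      // path compression: point x at its root
        return root;
    }

    void union(T a, T b) {
        T ra = find(a);
        T rb = find(b);
        if (!ra.equals(rb)) {
            parent.put(ra, rb);   // attach one root under the other
        }
    }
}

public class DisjointSetSketch {
    public static void main(String[] args) {
        HashDisjointSet<Col> ds = new HashDisjointSet<>();
        ds.union(new Col(1, true), new Col(2, true));
        ds.union(new Col(2, true), new Col(3, true));
        // 1 and 3 are connected through 2; 4 was never unioned.
        System.out.println(ds.find(new Col(1, true)).equals(ds.find(new Col(3, true))));
        System.out.println(ds.find(new Col(1, true)).equals(ds.find(new Col(4, true))));
    }
}
```

Running `main` prints `true` and then `false`. Because `Col` is immutable, its hash could be computed once in the constructor; the sketch keeps `Objects.hash` on purpose so that its call path matches the `find` → `HashMap.get` → `hashCode` → `Objects.hash` → `Arrays.hashCode` frames above.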

- GitHub Issue:
- GitHub Fix PR:
- Jira:
- Affected versions:
  - 3.1.0 through 3.1.4
- Fixed in:
  - 3.1.5 and later
- Root cause:
- Solution: