-

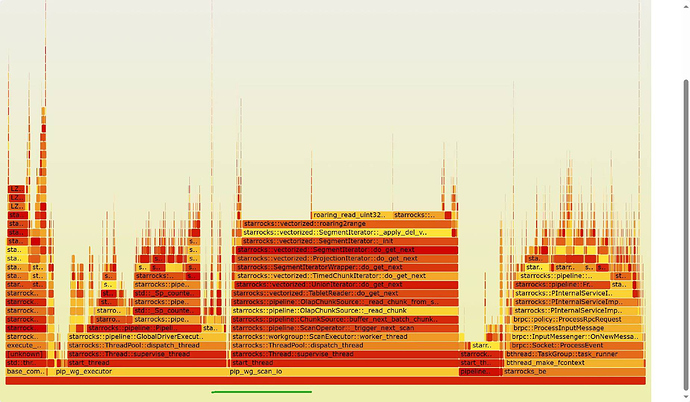

ThreadResourceMgr lock contention keeps BE CPU utilization low and limits concurrent performance

Symptom:

#0 0x00007f09c61f675d in __lll_lock_wait () from /lib64/libpthread.so.0

#1 0x00007f09c61efa79 in pthread_mutex_lock () from /lib64/libpthread.so.0

#2 0x0000000001ea9242 in __gthread_mutex_lock (__mutex=0x7f09c373d688) at /usr/include/c++/10.3.0/x86_64-pc-linux-gnu/bits/gthr-default.h:749

#3 std::mutex::lock (this=0x7f09c373d688) at /usr/include/c++/10.3.0/bits/std_mutex.h:100

#4 std::unique_lock<std::mutex>::lock (this=0x7ef689befd70) at /usr/include/c++/10.3.0/bits/unique_lock.h:138

#5 std::unique_lock<std::mutex>::unique_lock (__m=..., this=0x7ef689befd70) at /usr/include/c++/10.3.0/bits/unique_lock.h:68

#6 starrocks::ThreadResourceMgr::unregister_pool (this=0x7f09c373d680, pool=0x7f05bcd373a0) at /root/starrocks/be/src/runtime/thread_resource_mgr.cpp:96

#7 0x0000000001f1c07e in starrocks::RuntimeState::~RuntimeState (this=0x7efa59c5ac10, __in_chrg=<optimized out>) at /root/starrocks/be/src/runtime/exec_env.h:141

#8 0x0000000001ead272 in std::_Sp_counted_base<(__gnu_cxx::_Lock_policy)2>::_M_release (this=0x7efa59c5ac00) at /usr/include/c++/10.3.0/ext/atomicity.h:70

#9 std::_Sp_counted_base<(__gnu_cxx::_Lock_policy)2>::_M_release (this=0x7efa59c5ac00) at /usr/include/c++/10.3.0/bits/shared_ptr_base.h:151

#10 std::__shared_count<(__gnu_cxx::_Lock_policy)2>::~__shared_count (this=<optimized out>, __in_chrg=<optimized out>) at /usr/include/c++/10.3.0/bits/shared_ptr_base.h:733

#11 std::__shared_ptr<starrocks::RuntimeState, (__gnu_cxx::_Lock_policy)2>::~__shared_ptr (this=<optimized out>, __in_chrg=<optimized out>) at /usr/include/c++/10.3.0/bits/shared_ptr_base.h:1183

#12 std::shared_ptr<starrocks::RuntimeState>::~shared_ptr (this=<optimized out>, __in_chrg=<optimized out>) at /usr/include/c++/10.3.0/bits/shared_ptr.h:121

#13 starrocks::FragmentExecState::~FragmentExecState (this=<optimized out>, __in_chrg=<optimized out>) at /root/starrocks/be/src/runtime/fragment_mgr.cpp:170

#14 0x0000000001eb67eb in std::_Sp_counted_ptr<starrocks::FragmentExecState*, (__gnu_cxx::_Lock_policy)2>::_M_dispose (this=<optimized out>) at /usr/include/c++/10.3.0/bits/shared_ptr_base.h:379

#15 0x000000000192690a in std::_Sp_counted_base<(__gnu_cxx::_Lock_policy)2>::_M_release (this=0x7ef1aeb59840) at /usr/include/c++/10.3.0/ext/atomicity.h:70

#16 std::_Sp_counted_base<(__gnu_cxx::_Lock_policy)2>::_M_release (this=0x7ef1aeb59840) at /usr/include/c++/10.3.0/bits/shared_ptr_base.h:151

#17 0x0000000001eae775 in std::__shared_count<(__gnu_cxx::_Lock_policy)2>::~__shared_count (this=0x7efa585fa010, __in_chrg=<optimized out>) at /usr/include/c++/10.3.0/bits/std_function.h:245

#18 std::__shared_ptr<starrocks::FragmentExecState, (__gnu_cxx::_Lock_policy)2>::~__shared_ptr (this=0x7efa585fa008, __in_chrg=<optimized out>) at /usr/include/c++/10.3.0/bits/shared_ptr_base.h:1183

#19 std::shared_ptr<starrocks::FragmentExecState>::~shared_ptr (this=0x7efa585fa008, __in_chrg=<optimized out>) at /usr/include/c++/10.3.0/bits/shared_ptr.h:121

#20 ~<lambda> (this=0x7efa585fa000, __in_chrg=<optimized out>) at /root/starrocks/be/src/runtime/fragment_mgr.cpp:438

#21 std::_Function_base::_Base_manager<starrocks::FragmentMgr::exec_plan_fragment(const starrocks::TExecPlanFragmentParams&, const StartSuccCallback&, const FinishCallback&)::<lambda()> >::_M_destroy (__victim=...) at /usr/include/c++/10.3.0/bits/std_function.h:176

#22 std::_Function_base::_Base_manager<starrocks::FragmentMgr::exec_plan_fragment(const starrocks::TExecPlanFragmentParams&, const StartSuccCallback&, const FinishCallback&)::<lambda()> >::_M_manager (__op=<optimized out>, __source=..., __dest=...) at /usr/include/c++/10.3.0/bits/std_function.h:200

#23 std::_Function_handler<void(), starrocks::FragmentMgr::exec_plan_fragment(const starrocks::TExecPlanFragmentParams&, const StartSuccCallback&, const FinishCallback&)::<lambda()> >::_M_manager(std::_Any_data &, const std::_Any_data &, std::_Manager_operation) (__dest=..., __source=..., __op=<optimized out>) at /usr/include/c++/10.3.0/bits/std_function.h:283

#24 0x0000000001ff7692 in std::_Function_base::~_Function_base (this=<optimized out>, __in_chrg=<optimized out>) at /usr/include/c++/10.3.0/bits/std_function.h:245

#25 std::function<void ()>::~function() (this=<optimized out>, __in_chrg=<optimized out>) at /usr/include/c++/10.3.0/bits/std_function.h:303

#26 starrocks::FunctionRunnable::~FunctionRunnable (this=<optimized out>, __in_chrg=<optimized out>) at /root/starrocks/be/src/util/threadpool.cpp:41

#27 0x0000000001ff7192 in std::_Sp_counted_base<(__gnu_cxx::_Lock_policy)2>::_M_release (this=0x7efa585fa040) at /root/starrocks/be/src/util/threadpool.cpp:471

#28 std::_Sp_counted_base<(__gnu_cxx::_Lock_policy)2>::_M_release (this=0x7efa585fa040) at /usr/include/c++/10.3.0/bits/shared_ptr_base.h:151

#29 std::__shared_count<(__gnu_cxx::_Lock_policy)2>::~__shared_count (this=<optimized out>, __in_chrg=<optimized out>) at /usr/include/c++/10.3.0/bits/shared_ptr_base.h:733

#30 std::__shared_ptr<starrocks::Runnable, (__gnu_cxx::_Lock_policy)2>::~__shared_ptr (this=<optimized out>, __in_chrg=<optimized out>) at /usr/include/c++/10.3.0/bits/shared_ptr_base.h:1183

#31 std::__shared_ptr<starrocks::Runnable, (__gnu_cxx::_Lock_policy)2>::reset (this=<synthetic pointer>) at /usr/include/c++/10.3.0/bits/shared_ptr_base.h:1301

#32 starrocks::ThreadPool::dispatch_thread (this=0x7f09c4003c00) at /root/starrocks/be/src/util/threadpool.cpp:522

#33 0x0000000001ff298a in std::function<void ()>::operator()() const (this=0x7efe056fd8d8) at /usr/include/c++/10.3.0/bits/std_function.h:248

#34 starrocks::Thread::supervise_thread (arg=0x7efe056fd8c0) at /root/starrocks/be/src/util/thread.cpp:327

#35 0x00007f09c61ed17a in start_thread () from /lib64/libpthread.so.0

#36 0x00007f09c578edf3 in clone () from /lib64/libc.so.6
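The trace above shows a worker thread blocked in `pthread_mutex_lock` while `~RuntimeState` calls `ThreadResourceMgr::unregister_pool`. The following is a minimal sketch of that contention pattern, assuming a single mutex guards both pool registration and unregistration. It is not the StarRocks implementation: `Pool`, `GlobalPoolRegistry`, `QueryState`, and `run_demo` are hypothetical names introduced only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <list>
#include <memory>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical stand-in for a per-query resource pool.
struct Pool {};

// Hypothetical registry (NOT the StarRocks class): one mutex guards every
// register/unregister call, so all threads serialize on it.
class GlobalPoolRegistry {
public:
    Pool* register_pool() {
        std::unique_lock<std::mutex> l(_lock);
        _pools.push_back(std::make_unique<Pool>());
        return _pools.back().get();
    }

    void unregister_pool(Pool* pool) {
        // Every finishing query waits here, as in frames #0 to #6 of the trace.
        std::unique_lock<std::mutex> l(_lock);
        _pools.remove_if(
                [pool](const std::unique_ptr<Pool>& p) { return p.get() == pool; });
    }

    std::size_t size() {
        std::unique_lock<std::mutex> l(_lock);
        return _pools.size();
    }

private:
    std::mutex _lock;
    std::list<std::unique_ptr<Pool>> _pools;
};

// Hypothetical per-query state whose destructor calls back into the shared
// registry, mirroring the ~RuntimeState -> unregister_pool call in frame #7.
class QueryState {
public:
    explicit QueryState(GlobalPoolRegistry* registry)
            : _registry(registry), _pool(registry->register_pool()) {}
    ~QueryState() { _registry->unregister_pool(_pool); }

    QueryState(const QueryState&) = delete;
    QueryState& operator=(const QueryState&) = delete;

private:
    GlobalPoolRegistry* _registry;
    Pool* _pool;
};

// Runs num_threads workers, each creating and destroying queries_per_thread
// short-lived query states. Returns the number of pools still registered.
std::size_t run_demo(int num_threads, int queries_per_thread) {
    assert(num_threads > 0 && queries_per_thread >= 0);
    GlobalPoolRegistry registry;
    std::vector<std::thread> workers;
    for (int t = 0; t < num_threads; ++t) {
        workers.emplace_back([&registry, queries_per_thread] {
            for (int q = 0; q < queries_per_thread; ++q) {
                // The shared_ptr is released at the end of each iteration,
                // which takes the registry mutex in the destructor.
                auto state = std::make_shared<QueryState>(&registry);
            }
        });
    }
    for (auto& w : workers) w.join();
    return registry.size();
}
```

Calling `run_demo(16, 100000)` and sampling the process with `gdb` or `perf` would be expected to show most workers waiting on the registry mutex rather than doing work, which is the same shape as the backtrace above. The sketch only illustrates why a short-lived, high-concurrency workload can serialize on one lock; it makes no claim about the actual fix.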

- Github Issue:

- Github Fix PR:

- Jira:

- Affected versions:

  - 2.2.0 ~ latest

  - 2.3.0 ~ 2.3.4

  - 2.4.0 ~ latest

- Fixed versions:

- Root cause:

- Temporary workaround: