Creating the Hudi catalog:

CREATE EXTERNAL CATALOG hudi_catalog

PROPERTIES

(

"type" = "hudi",

"hive.metastore.type" = "hive",

"hive.metastore.uris" = "thrift://192.168.3.43:9083"

)

Querying the Hudi MOR table fails with an error:

default> select * from by_500w_hudi_11_rt

[2025-09-06 22:45:05] [42000][1064] Failed to open the off-heap table scanner. java exception details: java.io.IOException: Failed to open the hudi MOR slice reader.

[2025-09-06 22:45:05] at com.starrocks.hudi.reader.HudiSliceScanner.open(HudiSliceScanner.java:221)

[2025-09-06 22:45:05] Caused by: java.lang.IllegalArgumentException: Field: _hoodie_operation, does not have a defined type

[2025-09-06 22:45:05] at org.apache.hudi.hadoop.HiveHoodieReaderContext.lambda$setSchemas$1(HiveHoodieReaderContext.java:113)

[2025-09-06 22:45:05] at java.base/java.util.stream.ReferencePipeline$3$1.accept(ReferencePipeline.java:197)

[2025-09-06 22:45:05] at java.base/java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1625)

[2025-09-06 22:45:05] at java.base/java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:509)

[2025-09-06 22:45:05] at java.base/java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:499)

[2025-09-06 22:45:05] at java.base/java.util.stream.ReduceOps$ReduceOp.evaluateSequential(ReduceOps.java:921)

[2025-09-06 22:45:05] at java.base/java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234)

[2025-09-06 22:45:05] at java.base/java.util.stream.ReferencePipeline.collect(ReferencePipeline.java:682)

[2025-09-06 22:45:05] at org.apache.hudi.hadoop.HiveHoodieReaderContext.setSchemas(HiveHoodieReaderContext.java:116)

[2025-09-06 22:45:05] at org.apache.hudi.hadoop.HiveHoodieReaderContext.getFileRecordIterator(HiveHoodieReaderContext.java:155)

[2025-09-06 22:45:05] at org.apache.hudi.hadoop.HiveHoodieReaderContext.getFileRecordIterator(HiveHoodieReaderContext.java:136)

[2025-09-06 22:45:05] at org.apache.hudi.common.table.read.HoodieFileGroupReader.makeBaseFileIterator(HoodieFileGroupReader.java:201)

[2025-09-06 22:45:05] at org.apache.hudi.common.table.read.HoodieFileGroupReader.initRecordIterators(HoodieFileGroupReader.java:179)

[2025-09-06 22:45:05] at org.apache.hudi.hadoop.HoodieFileGroupReaderBasedRecordReader.<init>(HoodieFileGroupReaderBasedRecordReader.java:148)

[2025-09-06 22:45:05] at org.apache.hudi.hadoop.HoodieParquetInputFormat.getRecordReader(HoodieParquetInputFormat.java:126)

[2025-09-06 22:45:05] at org.apache.hudi.hadoop.realtime.HoodieParquetRealtimeInputFormat.getRecordReader(HoodieParquetRealtimeInputFormat.java:77)

[2025-09-06 22:45:05] at com.starrocks.hudi.reader.HudiSliceScanner.initReader(HudiSliceScanner.java:199)

[2025-09-06 22:45:05] at com.starrocks.hudi.reader.HudiSliceScanner.open(HudiSliceScanner.java:217)

[2025-09-06 22:45:05] : BE:10004

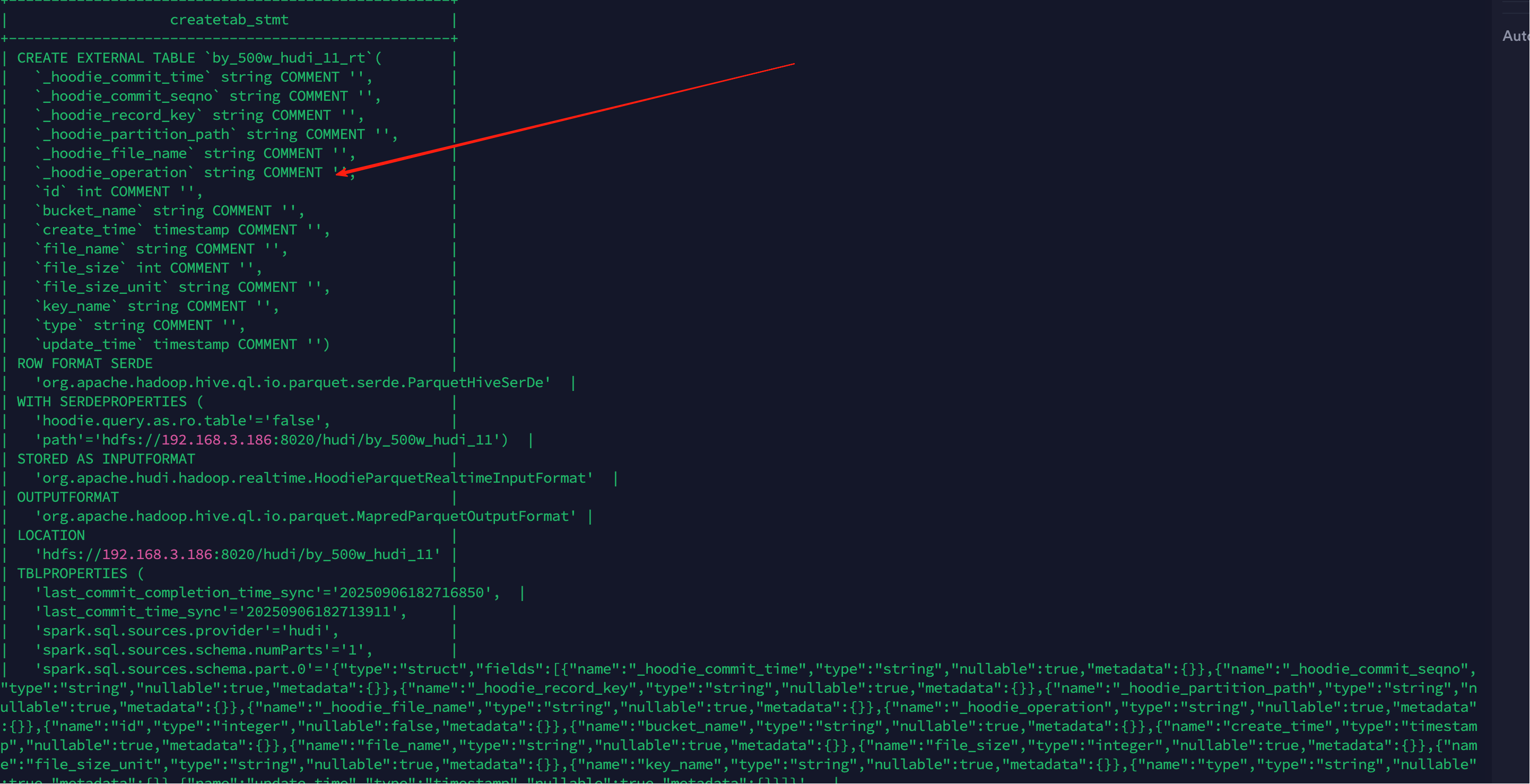

The table schema looks normal when viewed in Hive.

Table schema as shown by StarRocks:

CREATE TABLE by_500w_hudi_11_rt (

_hoodie_commit_time varchar(1073741824) DEFAULT NULL,

_hoodie_commit_seqno varchar(1073741824) DEFAULT NULL,

_hoodie_record_key varchar(1073741824) DEFAULT NULL,

_hoodie_partition_path varchar(1073741824) DEFAULT NULL,

_hoodie_file_name varchar(1073741824) DEFAULT NULL,

id int(11) DEFAULT NULL,

bucket_name varchar(1073741824) DEFAULT NULL,

create_time datetime DEFAULT NULL,

file_name varchar(1073741824) DEFAULT NULL,

file_size int(11) DEFAULT NULL,

file_size_unit varchar(1073741824) DEFAULT NULL,

key_name varchar(1073741824) DEFAULT NULL,

type varchar(1073741824) DEFAULT NULL,

update_time datetime DEFAULT NULL

)

PROPERTIES ("hudi.table.serde.lib" = "org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe", "hudi.table.type" = "MERGE_ON_READ", "hudi.table.base.path" = "hdfs://192.168.3.186:8020/hudi/by_500w_hudi_11", "hudi.table.input.format" = "org.apache.hudi.hadoop.realtime.HoodieParquetRealtimeInputFormat", "hudi.table.column.names" = "_hoodie_commit_time,_hoodie_commit_seqno,_hoodie_record_key,_hoodie_partition_path,_hoodie_file_name,id,bucket_name,create_time,file_name,file_size,file_size_unit,key_name,type,update_time", "hudi.table.column.types" = "string#string#string#string#string#int#string#timestamp-millis#string#int#string#string#string#timestamp-millis", "location" = "hdfs://192.168.3.186:8020/hudi/by_500w_hudi_11", "hudi.hms.table.type" = "EXTERNAL_TABLE");

The schema reported by StarRocks is missing the _hoodie_operation metadata field (the operation field).
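To double-check this, here is a rough Python sketch that pairs up the "hudi.table.column.names" and "hudi.table.column.types" values copied from the table properties above and reports which metadata fields have no type. The helper names (build_type_map, missing_fields) are made up for illustration, and the list of expected metadata fields is my assumption about what the reader asks for. This is not the actual StarRocks or Hudi code path.

```python
# Values copied verbatim from the StarRocks table properties shown above.
COLUMN_NAMES = (
    "_hoodie_commit_time,_hoodie_commit_seqno,_hoodie_record_key,"
    "_hoodie_partition_path,_hoodie_file_name,id,bucket_name,create_time,"
    "file_name,file_size,file_size_unit,key_name,type,update_time"
)
COLUMN_TYPES = (
    "string#string#string#string#string#int#string#timestamp-millis#"
    "string#int#string#string#string#timestamp-millis"
)

# Assumption: the reader requests the five standard Hudi metadata columns
# plus _hoodie_operation (the field named in the exception).
EXPECTED_META_FIELDS = [
    "_hoodie_commit_time",
    "_hoodie_commit_seqno",
    "_hoodie_record_key",
    "_hoodie_partition_path",
    "_hoodie_file_name",
    "_hoodie_operation",
]


def build_type_map(names: str, types: str) -> dict:
    """Pair comma-separated column names with '#'-separated column types."""
    name_list = names.split(",")
    type_list = types.split("#")
    if len(name_list) != len(type_list):
        raise ValueError(
            f"{len(name_list)} column names but {len(type_list)} column types"
        )
    return dict(zip(name_list, type_list))


def missing_fields(type_map: dict, required: list) -> list:
    """Return the required fields that have no entry in the type map."""
    return [field for field in required if field not in type_map]


type_map = build_type_map(COLUMN_NAMES, COLUMN_TYPES)
missing = missing_fields(type_map, EXPECTED_META_FIELDS)
print("fields without a defined type:", missing)
```

The names and types lists line up with each other (14 entries each), so the only gap is _hoodie_operation, which matches the "does not have a defined type" message in the stack trace.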