I checked the FE logs, and the following is printed frequently:

2025-07-30 16:00:10.843+08:00 WARN (starrocks-taskrun-pool-13|21573) [ThreadPoolManager$LogDiscardPolicy.rejectedExecution():207] Task java.util.concurrent.CompletableFuture$AsyncSupply@3acf6f31 rejected from cache-dict java.util.concurrent.ThreadPoolExecutor@7d556d31[Running, pool size = 16, active threads = 16, queued tasks = 0, completed tasks = 15521]

2025-07-30 16:00:10.843+08:00 WARN (starrocks-taskrun-pool-6|17465) [ThreadPoolManager$LogDiscardPolicy.rejectedExecution():207] Task java.util.concurrent.CompletableFuture$AsyncSupply@734cc693 rejected from cache-dict java.util.concurrent.ThreadPoolExecutor@7d556d31[Running, pool size = 16, active threads = 16, queued tasks = 0, completed tasks = 15521]

2025-07-30 16:00:10.843+08:00 WARN (starrocks-taskrun-pool-13|21573) [ThreadPoolManager$LogDiscardPolicy.rejectedExecution():207] Task java.util.concurrent.CompletableFuture$AsyncSupply@321e5e42 rejected from cache-dict java.util.concurrent.ThreadPoolExecutor@7d556d31[Running, pool size = 16, active threads = 16, queued tasks = 0, completed tasks = 15521]

2025-07-30 16:00:10.843+08:00 WARN (starrocks-taskrun-pool-6|17465) [ThreadPoolManager$LogDiscardPolicy.rejectedExecution():207] Task java.util.concurrent.CompletableFuture$AsyncSupply@76ff7637 rejected from cache-dict java.util.concurrent.ThreadPoolExecutor@7d556d31[Running, pool size = 16, active threads = 16, queued tasks = 0, completed tasks = 15521]

2025-07-30 16:00:10.843+08:00 WARN (starrocks-taskrun-pool-13|21573) [ThreadPoolManager$LogDiscardPolicy.rejectedExecution():207] Task java.util.concurrent.CompletableFuture$AsyncSupply@67bef38e rejected from cache-dict java.util.concurrent.ThreadPoolExecutor@7d556d31[Running, pool size = 16, active threads = 16, queued tasks = 0, completed tasks = 15521]

2025-07-30 16:00:10.844+08:00 WARN (starrocks-taskrun-pool-6|17465) [ThreadPoolManager$LogDiscardPolicy.rejectedExecution():207] Task java.util.concurrent.CompletableFuture$AsyncSupply@34967ae2 rejected from cache-dict java.util.concurrent.ThreadPoolExecutor@7d556d31[Running, pool size = 16, active threads = 16, queued tasks = 0, completed tasks = 15521]

2025-07-30 16:00:10.844+08:00 WARN (starrocks-taskrun-pool-13|21573) [ThreadPoolManager$LogDiscardPolicy.rejectedExecution():207] Task java.util.concurrent.CompletableFuture$AsyncSupply@2c49be2d rejected from cache-dict java.util.concurrent.ThreadPoolExecutor@7d556d31[Running, pool size = 16, active threads = 16, queued tasks = 0, completed tasks = 15521]

2025-07-30 16:00:10.844+08:00 WARN (starrocks-taskrun-pool-13|21573) [ThreadPoolManager$LogDiscardPolicy.rejectedExecution():207] Task java.util.concurrent.CompletableFuture$AsyncSupply@6d3eea72 rejected from cache-dict java.util.concurrent.ThreadPoolExecutor@7d556d31[Running, pool size = 16, active threads = 16, queued tasks = 0, completed tasks = 15521]

2025-07-30 16:00:10.844+08:00 WARN (starrocks-taskrun-pool-13|21573) [ThreadPoolManager$LogDiscardPolicy.rejectedExecution():207] Task java.util.concurrent.CompletableFuture$AsyncSupply@2d9255d9 rejected from cache-dict java.util.concurrent.ThreadPoolExecutor@7d556d31[Running, pool size = 16, active threads = 16, queued tasks = 0, completed tasks = 15521]

2025-07-30 16:00:10.844+08:00 WARN (starrocks-taskrun-pool-13|21573) [ThreadPoolManager$LogDiscardPolicy.rejectedExecution():207] Task java.util.concurrent.CompletableFuture$AsyncSupply@3da0e166 rejected from cache-dict java.util.concurrent.ThreadPoolExecutor@7d556d31[Running, pool size = 16, active threads = 16, queued tasks = 0, completed tasks = 15521]

2025-07-30 16:00:10.844+08:00 WARN (starrocks-taskrun-pool-13|21573) [ThreadPoolManager$LogDiscardPolicy.rejectedExecution():207] Task java.util.concurrent.CompletableFuture$AsyncSupply@5c799829 rejected from cache-dict java.util.concurrent.ThreadPoolExecutor@7d556d31[Running, pool size = 16, active threads = 16, queued tasks = 0, completed tasks = 15521]

2025-07-30 16:00:10.844+08:00 WARN (starrocks-taskrun-pool-13|21573) [ThreadPoolManager$LogDiscardPolicy.rejectedExecution():207] Task java.util.concurrent.CompletableFuture$AsyncSupply@460e94ae rejected from cache-dict java.util.concurrent.ThreadPoolExecutor@7d556d31[Running, pool size = 16, active threads = 16, queued tasks = 0, completed tasks = 15521]

2025-07-30 16:00:10.844+08:00 WARN (starrocks-taskrun-pool-13|21573) [ThreadPoolManager$LogDiscardPolicy.rejectedExecution():207] Task java.util.concurrent.CompletableFuture$AsyncSupply@790d2d2b rejected from cache-dict java.util.concurrent.ThreadPoolExecutor@7d556d31[Running, pool size = 16, active threads = 16, queued tasks = 0, completed tasks = 15521]

2025-07-30 16:00:10.928+08:00 WARN (scheduler-dispatch-pool-6|17623) [ShardManager.updateShardReplicaInfoInternal():1285] shard [132894398, 132894400, 132894405, 133128720, 133128722, 133129683, 133129685, 133129695, 133129701, 133129720, 133129724, 133129735, 133129738, 133129975, 133129982, 133130034, 133130037, 133130190, 133130195, 133130234, 133130237, 133130327, 133130329, 133130863, 133130871, 133130889, 133130894, 133131017, 133131019, 133131081, 133131083, 133131248, 133131249, 133131273, 133131274, 133131285, 133131286, 133131326, 133131330, 133131363, 133131364, 133131374, 133131375, 133131387, 133131393, 133131415, 133131417, 133131429, 133131432, 133131454, 133131463, 133131467, 133131473, 133131493, 133131501, 133131519, 133131526, 133131534, 133131538, 133131545, 133131553, 133131556, 133131561, 133131600, 133131605, 133131609, 133131612, 133131621, 133131625, 133131666, 133131668, 133131927, 133131928, 133131953, 133131954, 133132066, 133132071, 133132081, 133132084] not exist when update shard info from shard scheduler!

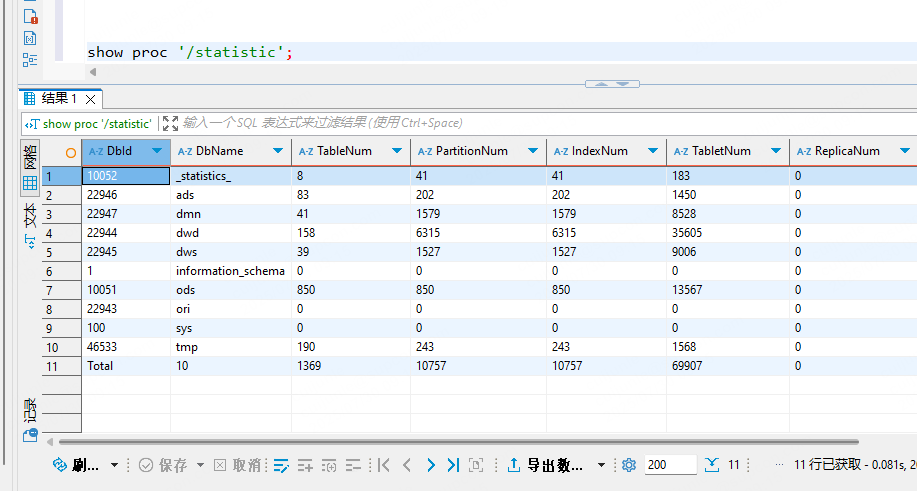

2025-07-30 16:00:11.865+08:00 WARN (starrocks-taskrun-pool-12|21474) [StatisticsCollectionTrigger.waitFinish():222] await collect statistic task failed after 1 seconds, which mean too many jobs in the queue

2025-07-30 16:00:12.882+08:00 WARN (starrocks-taskrun-pool-13|21573) [StatisticsCollectionTrigger.waitFinish():222] await collect statistic task failed after 1 seconds, which mean too many jobs in the queue

2025-07-30 16:00:13.041+08:00 WARN (starrocks-taskrun-pool-8|19768) [StatisticsCollectionTrigger.waitFinish():222] await collect statistic task failed after 1 seconds, which mean too many jobs in the queue

2025-07-30 16:00:13.077+08:00 WARN (starrocks-taskrun-pool-10|21472) [StatisticsCollectionTrigger.waitFinish():222] await collect statistic task failed after 1 seconds, which mean too many jobs in the queue

2025-07-30 16:00:13.376+08:00 WARN (starrocks-taskrun-pool-6|17465) [StatisticsCollectionTrigger.waitFinish():222] await collect statistic task failed after 1 seconds, which mean too many jobs in the queue

2025-07-30 16:00:13.581+08:00 WARN (starrocks-taskrun-pool-7|17466) [StatisticsCollectionTrigger.waitFinish():222] await collect statistic task failed after 1 seconds, which mean too many jobs in the queue

2025-07-30 16:00:15.628+08:00 WARN (starrocks-taskrun-pool-3|17392) [StatisticsCollectionTrigger.waitFinish():222] await collect statistic task failed after 1 seconds, which mean too many jobs in the queue
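
To put a number on "frequently", a small script can tally the WARN lines by the code location that emitted them. This is only a rough sketch: the regular expression assumes the line layout seen in the excerpts above (timestamp, `WARN`, thread name in parentheses, then `[Class.method():line]`), and `SITE_RE`, `tally_warnings`, and `sample` are names made up for this illustration, not part of StarRocks.

```python
import re
from collections import Counter

# Assumed layout, taken from the lines quoted above:
#   <timestamp> WARN (<thread>|<id>) [<Class.method>():<line>] <message>
SITE_RE = re.compile(r"WARN \([^)]*\) \[([^\]]+?)\(\):\d+\]")


def tally_warnings(lines):
    """Count WARN lines per emitting Class.method; other lines are skipped."""
    counts = Counter()
    for line in lines:
        match = SITE_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Two lines copied from the log excerpt above, used as sample input.
sample = [
    "2025-07-30 16:00:10.843+08:00 WARN (starrocks-taskrun-pool-13|21573) "
    "[ThreadPoolManager$LogDiscardPolicy.rejectedExecution():207] Task "
    "java.util.concurrent.CompletableFuture$AsyncSupply@3acf6f31 rejected from "
    "cache-dict java.util.concurrent.ThreadPoolExecutor@7d556d31[Running, "
    "pool size = 16, active threads = 16, queued tasks = 0, completed tasks = 15521]",
    "2025-07-30 16:00:11.865+08:00 WARN (starrocks-taskrun-pool-12|21474) "
    "[StatisticsCollectionTrigger.waitFinish():222] await collect statistic task "
    "failed after 1 seconds, which mean too many jobs in the queue",
]

if __name__ == "__main__":
    for site, count in tally_warnings(sample).most_common():
        print(count, site)
```

Any iterable of lines works as input, so an open file handle for the FE log can be passed in place of `sample`.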