为了更快的定位您的问题,请提供以下信息,谢谢

【详述】使用Spark Connector工具,执行Spark DataFrame 插入到StarRocks,报错

【背景】前两天从3.3.3 升级到 3.3.12版本

【业务影响】

【是否存算分离】是

【StarRocks版本】3.3.12

【集群规模】3fe + 7be(fe与be独立部署)

【机器信息】48C/148G/万兆

【联系方式】tanheyuan@outlook.com

【附件】

- fe.log/beINFO/相应截图

- 报错信息:

org.apache.spark.SparkException: Job aborted due to stage failure:

Aborting TaskSet 80.0 because task 8 (partition 8)

cannot run anywhere due to node and executor blacklist.

Most recent failure:

Lost task 8.0 in stage 80.0 (TID 68091, xxxx.com, executor 1): java.io.IOException: Failed to load 20000 batch data on BE: http://IP:8040/api/bi_test/business_ph_on_order/_stream_load? node and exceeded the max 3 retry times.

at com.starrocks.connector.spark.sql.StarrocksSourceProvider$$anonfun$createRelation$1$$anonfun$com$starrocks$connector$spark$sql$StarrocksSourceProvider$$anonfun$$flush$1$1.apply$mcV$sp(StarrocksSourceProvider.scala:128)

at scala.util.control.Breaks.breakable(Breaks.scala:38)

at com.starrocks.connector.spark.sql.StarrocksSourceProvider$$anonfun$createRelation$1.com$starrocks$connector$spark$sql$StarrocksSourceProvider$$anonfun$$flush$1(StarrocksSourceProvider.scala:102)

at com.starrocks.connector.spark.sql.StarrocksSourceProvider$$anonfun$createRelation$1.apply(StarrocksSourceProvider.scala:92)

at com.starrocks.connector.spark.sql.StarrocksSourceProvider$$anonfun$createRelation$1.apply(StarrocksSourceProvider.scala:77)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$28.apply(RDD.scala:979)

at org.apache.spark.rdd.RDD$$anonfun$foreachPartition$1$$anonfun$apply$28.apply(RDD.scala:979)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2274)

at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2274)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)

at org.apache.spark.scheduler.Task.run(Task.scala:123)

at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:413)

at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1551)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:419)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

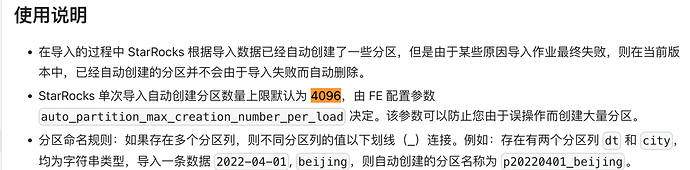

Caused by: com.starrocks.connector.spark.exception.StreamLoadException: stream load error: Automatically created partitions exceeded the maximum limit: 4096. You can modify this restriction on by setting max_automatic_partition_number larger.

at com.starrocks.connector.spark.StarRocksStreamLoad.load(StarRocksStreamLoad.java:212)

at com.starrocks.connector.spark.StarRocksStreamLoad.loadV2(StarRocksStreamLoad.java:196)

at com.starrocks.connector.spark.sql.StarrocksSourceProvider$$anonfun$createRelation$1$$anonfun$com$starrocks$connector$spark$sql$StarrocksSourceProvider$$anonfun$$flush$1$1$$anonfun$apply$mcV$sp$1.apply$mcVI$sp(StarrocksSourceProvider.scala:106)

at scala.collection.immutable.Range.foreach$mVc$sp(Range.scala:160)

at com.starrocks.connector.spark.sql.StarrocksSourceProvider$$anonfun$createRelation$1$$anonfun$com$starrocks$connector$spark$sql$StarrocksSourceProvider$$anonfun$$flush$1$1.apply$mcV$sp(StarrocksSourceProvider.scala:104)

… 16 more

Blacklisting behavior can be configured via spark.blacklist.*.

Caused by:

org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:2027)

org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1972)

org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1971)

scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:48)

org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1971)

org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:987)

org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:987)

scala.Option.foreach(Option.scala:257)

org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:987)

org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:2207)

org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2156)

org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:2145)

org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:49)

org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:794)

org.apache.spark.SparkContext.runJob(SparkContext.scala:2234)

org.apache.spark.SparkContext.runJob(SparkContext.scala:2255)

org.apache.spark.SparkContext.runJob(SparkContext.scala:2274)

org.apache.spark.SparkContext.runJob(SparkContext.scala:2299)