【StarRocks version】 2.5.22

【Cluster size】3 FE + 3 BE (FE and BE co-located on the same nodes)

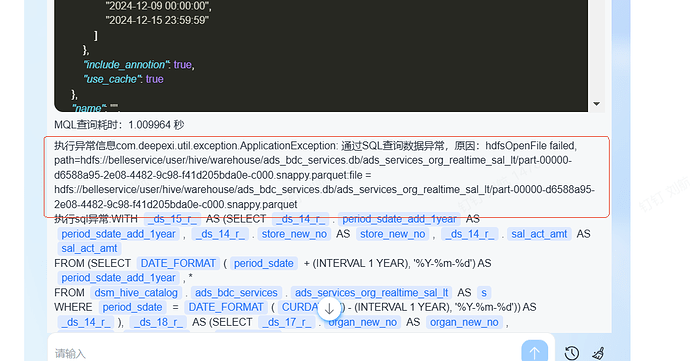

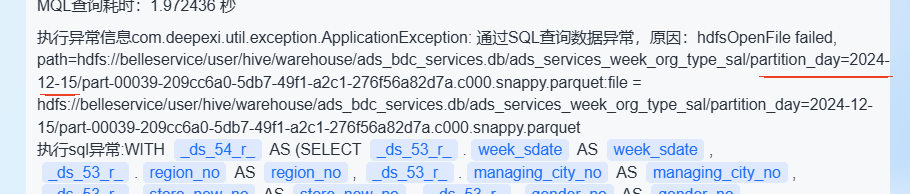

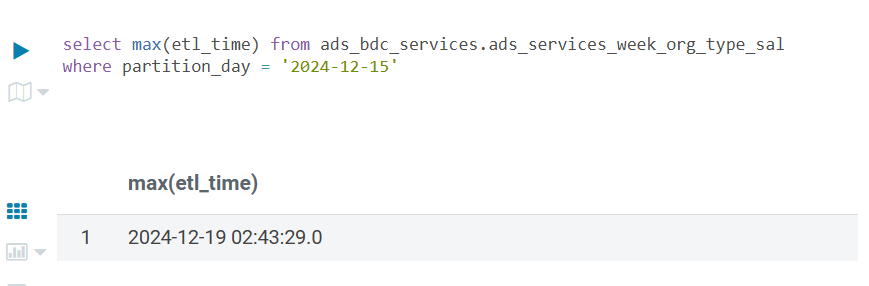

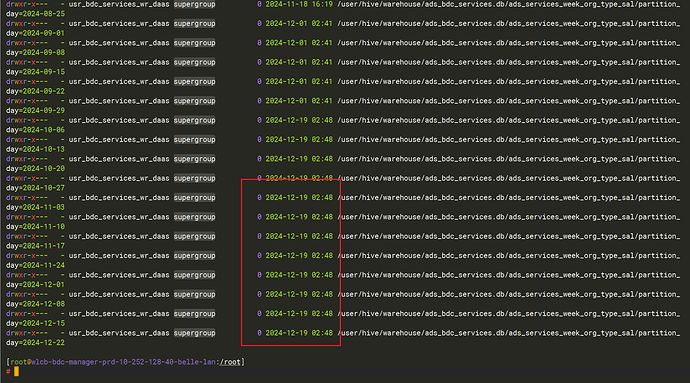

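【Problem description】Our application uses StarRocks to accelerate queries through a Hive catalog, but queries intermittently fail with the error "File does not exist".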

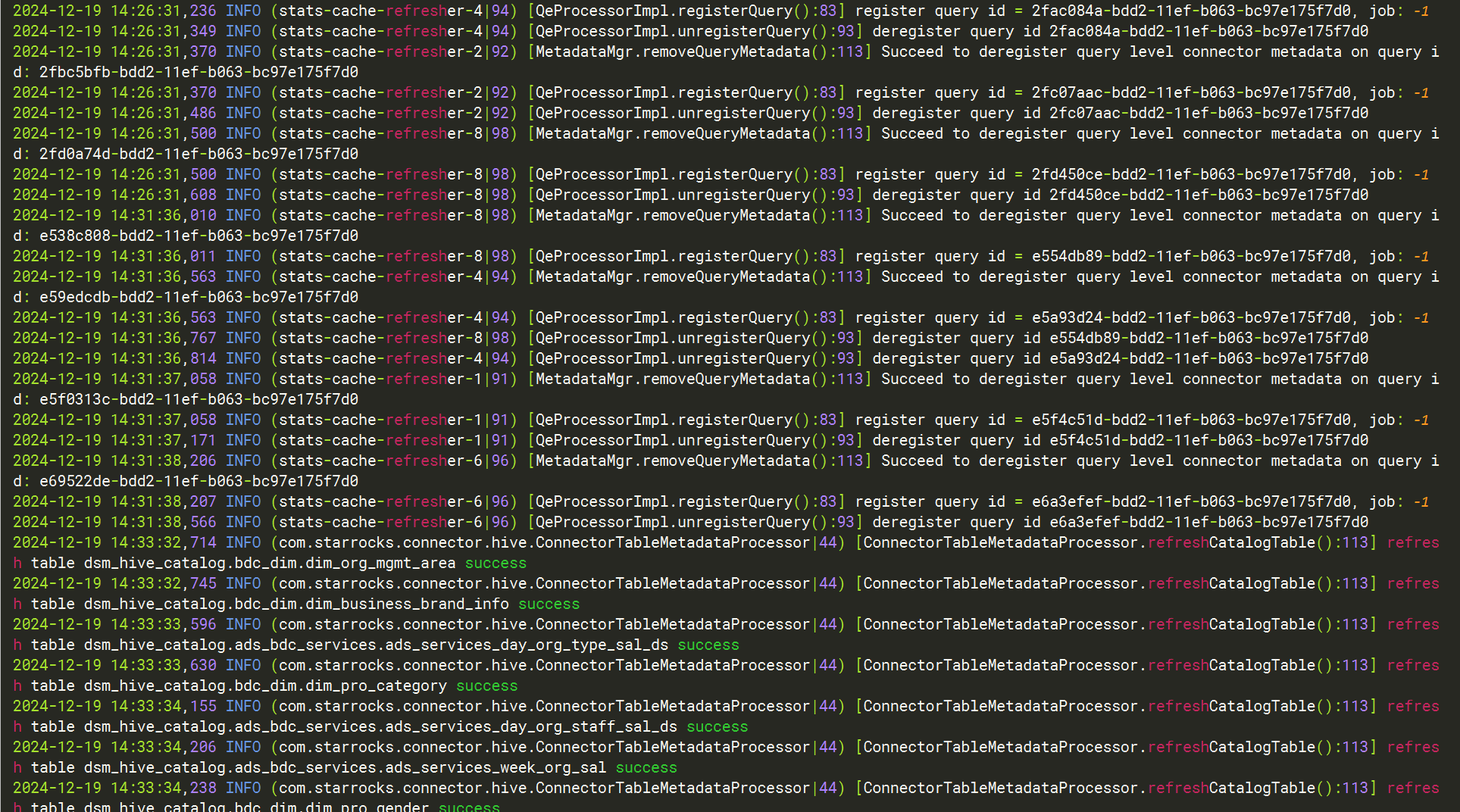

【Error log】See the attachment for the full log.

Caused by: org.apache.hadoop.ipc.RemoteException(java.io.FileNotFoundException): File does not exist: /user/hive/warehouse/ads_bdc_services.db/ads_services_week_org_type_sal/partition_day=2024-12-15/part-00026-0c8fc350-c9c7-4d2e-85aa-1f9774eed2f5.c000.snappy.parquet

at org.apache.hadoop.hdfs.server.namenode.INodeFile.valueOf(INodeFile.java:86)

at org.apache.hadoop.hdfs.server.namenode.INodeFile.valueOf(INodeFile.java:76)

at org.apache.hadoop.hdfs.server.namenode.FSDirStatAndListingOp.getBlockLocations(FSDirStatAndListingOp.java:156)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getBlockLocations(FSNamesystem.java:2089)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getBlockLocations(NameNodeRpcServer.java:762)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getBlockLocations(ClientNamenodeProtocolServerSideTranslatorPB.java:458)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine2.java:604)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine2.java:572)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine2.java:556)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1093)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:1043)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:971)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1878)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2976)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1567)

at org.apache.hadoop.ipc.Client.call(Client.java:1513)

at org.apache.hadoop.ipc.Client.call(Client.java:1410)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:258)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:139)

at com.sun.proxy.$Proxy15.getBlockLocations(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getBlockLocations(ClientNamenodeProtocolTranslatorPB.java:334)

at jdk.internal.reflect.GeneratedMethodAccessor3.invoke(Unknown Source)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:566)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:433)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:166)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:158)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:96)

be.out (18.1 MB)

Thanks. Please let me know what other information I should provide.
