As the title says, creating a Hive external table fails.

CREATE EXTERNAL TABLE hive_t1 (
    request_id varchar(100),
    cityid int,
    carrier int,
    score int,
    dt varchar(100)
)
engine = hive
properties (
    "resource" = "hive0",
    "database" = "ndata",
    "table" = "earth_model",
    "kerberos_principal" = "starrocks@TC.XXX.CN",
    "kerberos_keytab" = "/etc/security/keytab/starrocks.keytab"
);

MySQL [test]> show resources;
+-------+--------------+---------------------+---------------------------------------+
| Name  | ResourceType | Key                 | Value                                 |
+-------+--------------+---------------------+---------------------------------------+
| hive0 | hive         | hive.metastore.uris | thrift://hive-1.cvm.tc.xxxx.cn:9083   |
+-------+--------------+---------------------+---------------------------------------+
1 row in set (0.00 sec)

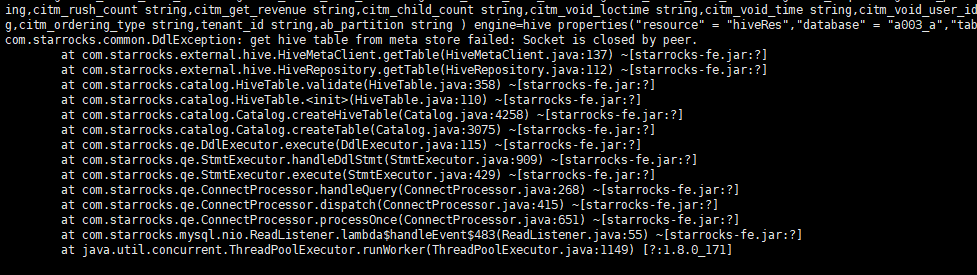

ERROR 1064 (HY000): get hive table from meta store failed: Socket is closed by peer.

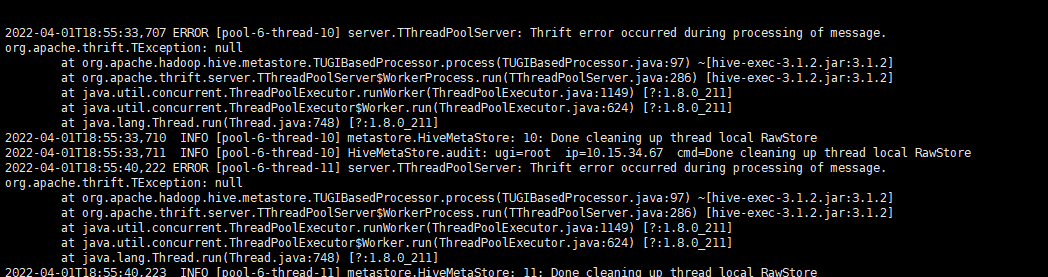

The FE log shows the following errors:

2022-02-18 15:10:07,843 WARN (starrocks-mysql-nio-pool-18|2198) [HiveMetaStoreThriftClient.open():522] set_ugi() not successful, Likely cause: new client talking to old server. Continuing without it.

org.apache.thrift.transport.TTransportException: Socket is closed by peer.

at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:130) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.transport.TTransport.readAll(TTransport.java:86) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.protocol.TBinaryProtocol.readStringBody(TBinaryProtocol.java:411) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.protocol.TBinaryProtocol.readMessageBegin(TBinaryProtocol.java:254) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:77) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.recv_set_ugi(ThriftHiveMetastore.java:4753) ~[hive-apache-3.0.0-5.jar:3.0.0-4-4-g7dde337]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.set_ugi(ThriftHiveMetastore.java:4739) ~[hive-apache-3.0.0-5.jar:3.0.0-4-4-g7dde337]

at com.starrocks.external.hive.HiveMetaStoreThriftClient.open(HiveMetaStoreThriftClient.java:514) ~[starrocks-fe.jar:?]

at com.starrocks.external.hive.HiveMetaStoreThriftClient.reconnect(HiveMetaStoreThriftClient.java:400) ~[starrocks-fe.jar:?]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient$1.run(RetryingMetaStoreClient.java:187) ~[hive-apache-3.0.0-5.jar:3.0.0-4-4-g7dde337]

at java.security.AccessController.doPrivileged(Native Method) ~[?:1.8.0_251]

at javax.security.auth.Subject.doAs(Subject.java:422) ~[?:1.8.0_251]

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1845) ~[hadoop-common-3.3.0.jar:?]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:183) ~[hive-apache-3.0.0-5.jar:3.0.0-4-4-g7dde337]

at com.sun.proxy.$Proxy41.getTable(Unknown Source) ~[?:?]

at com.starrocks.external.hive.HiveMetaClient.getTable(HiveMetaClient.java:133) ~[starrocks-fe.jar:?]

at com.starrocks.external.hive.HiveRepository.getTable(HiveRepository.java:112) ~[starrocks-fe.jar:?]

at com.starrocks.catalog.HiveTable.validate(HiveTable.java:355) ~[starrocks-fe.jar:?]

at com.starrocks.catalog.HiveTable.<init>(HiveTable.java:108) ~[starrocks-fe.jar:?]

at com.starrocks.catalog.Catalog.createHiveTable(Catalog.java:4193) ~[starrocks-fe.jar:?]

at com.starrocks.catalog.Catalog.createTable(Catalog.java:3044) ~[starrocks-fe.jar:?]

at com.starrocks.qe.DdlExecutor.execute(DdlExecutor.java:115) ~[starrocks-fe.jar:?]

at com.starrocks.qe.StmtExecutor.handleDdlStmt(StmtExecutor.java:1219) ~[starrocks-fe.jar:?]

at com.starrocks.qe.StmtExecutor.execute(StmtExecutor.java:434) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.handleQuery(ConnectProcessor.java:248) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.dispatch(ConnectProcessor.java:395) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.processOnce(ConnectProcessor.java:631) ~[starrocks-fe.jar:?]

at com.starrocks.mysql.nio.ReadListener.lambda$handleEvent$0(ReadListener.java:55) ~[starrocks-fe.jar:?]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_251]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_251]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_251]

2022-02-18 15:10:07,843 INFO (starrocks-mysql-nio-pool-18|2198) [HiveMetaStoreThriftClient.open():551] Connected to metastore.

2022-02-18 15:10:07,844 WARN (starrocks-mysql-nio-pool-18|2198) [HiveMetaClient.getTable():135] get hive table failed

org.apache.thrift.transport.TTransportException: Socket is closed by peer.

at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:130) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.transport.TTransport.readAll(TTransport.java:86) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.protocol.TBinaryProtocol.readAll(TBinaryProtocol.java:455) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.protocol.TBinaryProtocol.readI32(TBinaryProtocol.java:354) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.protocol.TBinaryProtocol.readMessageBegin(TBinaryProtocol.java:243) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:77) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.recv_get_table(ThriftHiveMetastore.java:1993) ~[hive-apache-3.0.0-5.jar:3.0.0-4-4-g7dde337]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.get_table(ThriftHiveMetastore.java:1979) ~[hive-apache-3.0.0-5.jar:3.0.0-4-4-g7dde337]

at com.starrocks.external.hive.HiveMetaStoreThriftClient.getTable(HiveMetaStoreThriftClient.java:587) ~[starrocks-fe.jar:?]

at com.starrocks.external.hive.HiveMetaStoreThriftClient.getTable(HiveMetaStoreThriftClient.java:582) ~[starrocks-fe.jar:?]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_251]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_251]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_251]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_251]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:208) ~[hive-apache-3.0.0-5.jar:3.0.0-4-4-g7dde337]

at com.sun.proxy.$Proxy41.getTable(Unknown Source) ~[?:?]

at com.starrocks.external.hive.HiveMetaClient.getTable(HiveMetaClient.java:133) ~[starrocks-fe.jar:?]

at com.starrocks.external.hive.HiveRepository.getTable(HiveRepository.java:112) ~[starrocks-fe.jar:?]

at com.starrocks.catalog.HiveTable.validate(HiveTable.java:355) ~[starrocks-fe.jar:?]

at com.starrocks.catalog.HiveTable.<init>(HiveTable.java:108) ~[starrocks-fe.jar:?]

at com.starrocks.catalog.Catalog.createHiveTable(Catalog.java:4193) ~[starrocks-fe.jar:?]

at com.starrocks.catalog.Catalog.createTable(Catalog.java:3044) ~[starrocks-fe.jar:?]

at com.starrocks.qe.DdlExecutor.execute(DdlExecutor.java:115) ~[starrocks-fe.jar:?]

at com.starrocks.qe.StmtExecutor.handleDdlStmt(StmtExecutor.java:1219) ~[starrocks-fe.jar:?]

at com.starrocks.qe.StmtExecutor.execute(StmtExecutor.java:434) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.handleQuery(ConnectProcessor.java:248) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.dispatch(ConnectProcessor.java:395) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.processOnce(ConnectProcessor.java:631) ~[starrocks-fe.jar:?]

at com.starrocks.mysql.nio.ReadListener.lambda$handleEvent$0(ReadListener.java:55) ~[starrocks-fe.jar:?]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_251]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_251]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_251]

All StarRocks machines can resolve hive-1.cvm.tc.xxxx.cn and can connect to port 9083 with telnet.
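For anyone who wants to repeat the reachability check above on each node, here is a minimal sketch, assuming Python 3 is available on the hosts. `check_endpoint` is a hypothetical helper name, not part of StarRocks or Hive. It only does what DNS lookup plus telnet do: resolve the name and open a TCP connection.

```python
import socket


def check_endpoint(host: str, port: int, timeout: float = 3.0) -> dict:
    """Resolve `host` and attempt a plain TCP connect to `port`.

    Roughly equivalent to a DNS lookup followed by `telnet host port`.
    """
    result = {
        "host": host,
        "port": port,
        "addresses": [],
        "connected": False,
        "error": None,
    }

    # Step 1: name resolution.
    try:
        infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
        result["addresses"] = sorted({info[4][0] for info in infos})
    except socket.gaierror as exc:
        result["error"] = f"DNS resolution failed: {exc}"
        return result

    # Step 2: TCP connect; the socket is closed again immediately.
    try:
        with socket.create_connection((host, port), timeout=timeout):
            result["connected"] = True
    except OSError as exc:
        result["error"] = f"TCP connect failed: {exc}"

    return result


# Example call with the metastore address from this post:
# print(check_endpoint("hive-1.cvm.tc.xxxx.cn", 9083))
```

A successful result only shows that the port is reachable. It does not exercise the Thrift protocol or any authentication step, so it cannot by itself rule out the server closing the connection after the first request, as seen in the FE log.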

The Hive version is 3.1.0.