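【Details】I am using Routine Load to consume data from Kafka into a test table that uses dynamic partitioning with hourly partitions. `SHOW DATA` reports that the table holds steady at roughly 6 GB (dynamic partitioning drops old partitions automatically and keeps only the past hour). However, `SHOW BACKENDS` shows UsedPct rising continuously, even though DataUsedCapacity matches the size of the table data. UsedPct only starts to fall, and slowly, once the disk runs out of space and the Routine Load job fails and enters the PAUSED state. What causes this?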

【StarRocks version】3.3

【Cluster size】1 FE, 1 BE (created with the StarRocks operator)

【Attachments】

CREATE TABLE statement for the test table:

CREATE TABLE `simple_bench` (

`table_name` varchar(32) NOT NULL COMMENT "",

`time` datetime NULL COMMENT "",

`field1` varchar(32) NULL COMMENT "",

`field2` int(11) NULL COMMENT "",

`field3` varchar(32) NULL COMMENT "",

`field4` int(11) NULL COMMENT "",

`field5` varchar(32) NULL COMMENT ""

) ENGINE=OLAP

DUPLICATE KEY(`table_name`, `time`)

PARTITION BY RANGE(`time`)

(PARTITION p2024111514 VALUES [("2024-11-15 14:00:00"), ("2024-11-15 15:00:00")),

PARTITION p2024111515 VALUES [("2024-11-15 15:00:00"), ("2024-11-15 16:00:00")),

PARTITION p2024111516 VALUES [("2024-11-15 16:00:00"), ("2024-11-15 17:00:00")),

PARTITION p2024111517 VALUES [("2024-11-15 17:00:00"), ("2024-11-15 18:00:00")),

PARTITION p2024111518 VALUES [("2024-11-15 18:00:00"), ("2024-11-15 19:00:00")))

DISTRIBUTED BY RANDOM

PROPERTIES (

"bucket_size" = "4294967296",

"compression" = "ZSTD",

"dynamic_partition.enable" = "true",

"dynamic_partition.end" = "3",

"dynamic_partition.history_partition_num" = "0",

"dynamic_partition.prefix" = "p",

"dynamic_partition.start" = "-1",

"dynamic_partition.time_unit" = "HOUR",

"dynamic_partition.time_zone" = "Asia/Shanghai",

"fast_schema_evolution" = "true",

"replicated_storage" = "false",

"replication_num" = "1"

);
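As a quick sanity check on the retention window, the sketch below computes which hourly partitions should exist for `dynamic_partition.start = -1`, `dynamic_partition.end = 3`, and prefix `p`. It assumes the statement was captured around 15:32 on 2024-11-15 (the timestamp in the routine load `OtherMsg` further down) and that the window runs from `now + start` to `now + end` inclusive. The helper name `expected_partitions` is purely illustrative and is not a StarRocks API.

```python
from datetime import datetime, timedelta


def expected_partitions(now, start=-1, end=3, prefix="p"):
    """Return hourly partition names covering now+start .. now+end (inclusive)."""
    # Truncate to the start of the current hour.
    base = now.replace(minute=0, second=0, microsecond=0)
    return [
        prefix + (base + timedelta(hours=offset)).strftime("%Y%m%d%H")
        for offset in range(start, end + 1)
    ]


# Assumed observation time, taken from the OtherMsg timestamp in the routine load output.
observed_at = datetime(2024, 11, 15, 15, 32, 6)
print(expected_partitions(observed_at))
```

Under these assumptions the result is the same five partitions that appear in the statement above (`p2024111514` through `p2024111518`), which suggests dynamic partitioning is creating and dropping partitions as configured.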

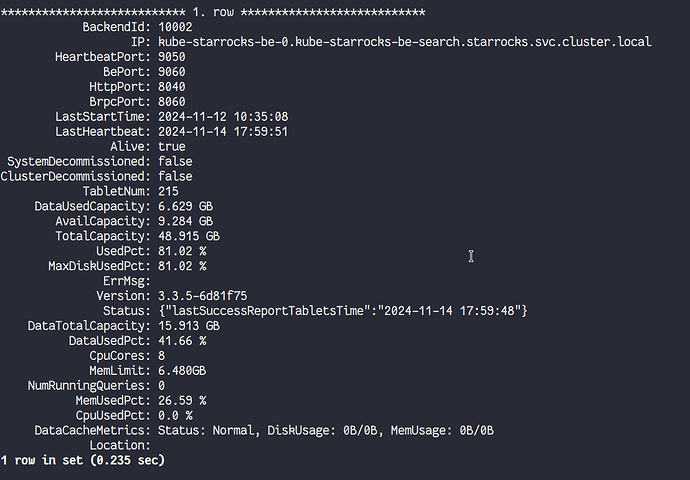

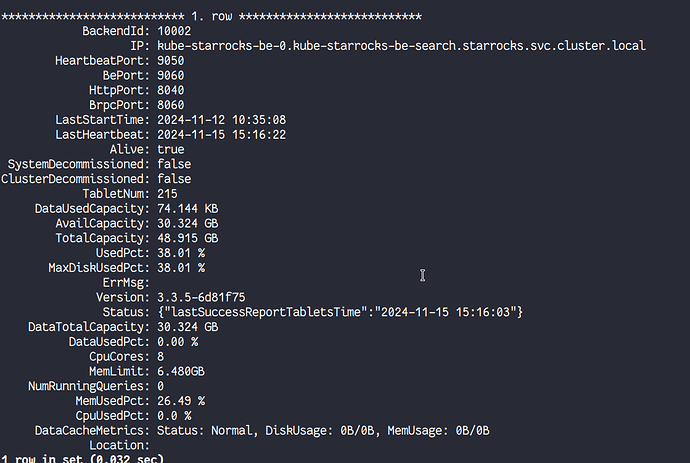

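After writes had been stopped for a while, the table was empty, but UsedPct/MaxDiskUsedPct still showed a large amount of space in use and was decreasing only slowly.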

Routine Load job:

Id: 11511

Name: test_simple_bench

CreateTime: 2024-11-14 09:20:04

PauseTime: NULL

EndTime: NULL

DbName: otlp

TableName: simple_bench

State: RUNNING

DataSourceType: KAFKA

CurrentTaskNum: 1

JobProperties: {"partial_update_mode":"null","timezone":"Asia/Shanghai","columnSeparator":"|","log_rejected_record_num":"0","taskTimeoutSecond":"60","maxFilterRatio":"1.0","strict_mode":"false","jsonpaths":"","currentTaskConcurrentNum":"1","escape":"0","enclose":"0","partitions":"*","rowDelimiter":"\n","partial_update":"false","trim_space":"false","columnToColumnExpr":"table_name,time,field1,field2,field3,field4,field5","maxBatchIntervalS":"10","whereExpr":"*","format":"csv","json_root":"","taskConsumeSecond":"15","desireTaskConcurrentNum":"5","maxErrorNum":"0","strip_outer_array":"false","maxBatchRows":"200000"}

DataSourceProperties: {"topic":"test-simple_bench","currentKafkaPartitions":"0","brokerList":"<redacted>:9092"}

CustomProperties: {"kafka_default_offsets":"OFFSET_END","group.id":"test_simple_bench_5cc79eaa-5569-4de2-91d2-1512be509b78"}

Statistic: {"receivedBytes":60459661068,"errorRows":0,"committedTaskNum":1184,"loadedRows":599798272,"loadRowsRate":33000,"abortedTaskNum":0,"totalRows":599798272,"unselectedRows":0,"receivedBytesRate":3331000,"taskExecuteTimeMs":18145466}

Progress: {"0":"4684469593"}

TimestampProgress: {"0":"1731577233211"}

ReasonOfStateChanged:

ErrorLogUrls:

TrackingSQL:

OtherMsg: [2024-11-15 15:32:06] [task id: fd2d6d48-3f90-4dd8-b4fe-184a6e86e777] [txn id: -1] there is no new data in kafka, wait for 10 seconds to schedule again

LatestSourcePosition: {"0":"4684469594"}
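To put the ingest volume next to the disk growth, the sketch below derives a few figures from the `Statistic` field above. It assumes `receivedBytes` counts raw (uncompressed) bytes read from Kafka and that the reported rates are totals divided by `taskExecuteTimeMs`. That is an inference from the numbers, not something confirmed here.

```python
import json

# Statistic field copied from the routine load output above.
statistic = json.loads(
    '{"receivedBytes":60459661068,"errorRows":0,"committedTaskNum":1184,'
    '"loadedRows":599798272,"loadRowsRate":33000,"abortedTaskNum":0,'
    '"totalRows":599798272,"unselectedRows":0,"receivedBytesRate":3331000,'
    '"taskExecuteTimeMs":18145466}'
)

exec_seconds = statistic["taskExecuteTimeMs"] / 1000
bytes_per_row = statistic["receivedBytes"] / statistic["loadedRows"]
bytes_per_sec = statistic["receivedBytes"] / exec_seconds
rows_per_sec = statistic["loadedRows"] / exec_seconds

print(f"average raw row size: {bytes_per_row:.1f} bytes")
print(f"raw bytes per second of task time: {bytes_per_sec:,.0f}")
print(f"rows per second of task time: {rows_per_sec:,.0f}")
# Raw volume per hour of continuous task execution, in GB (10^9 bytes).
print(f"raw volume per task-hour: {bytes_per_sec * 3600 / 1e9:.2f} GB")
```

The derived per-second figures round down to the reported `receivedBytesRate` and `loadRowsRate`, so the rates seem to be measured over task execution time rather than wall-clock time. On-disk size will differ from these raw figures because the table uses ZSTD compression.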