To help us locate your issue faster, please provide the following information. Thanks.

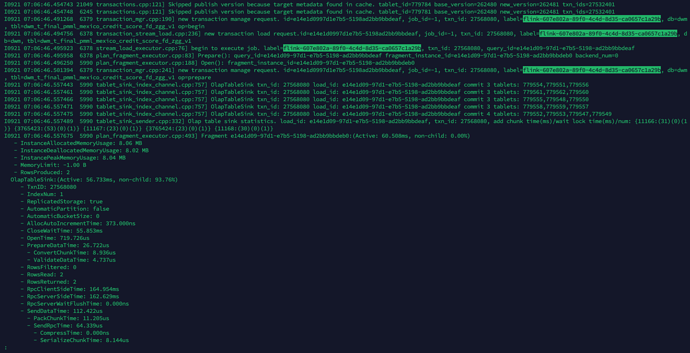

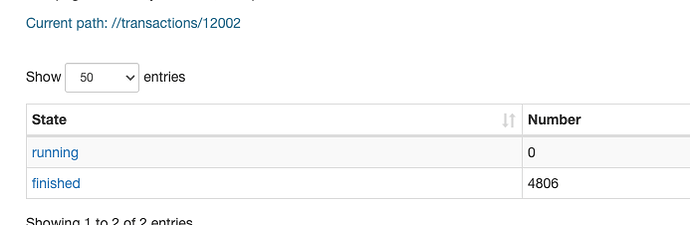

【Details】ERROR 1064 (HY000): Getting analyzing error. Detail message: fail to begin transaction. current running txns on db 24938 is 1000, larger than limit 1000.

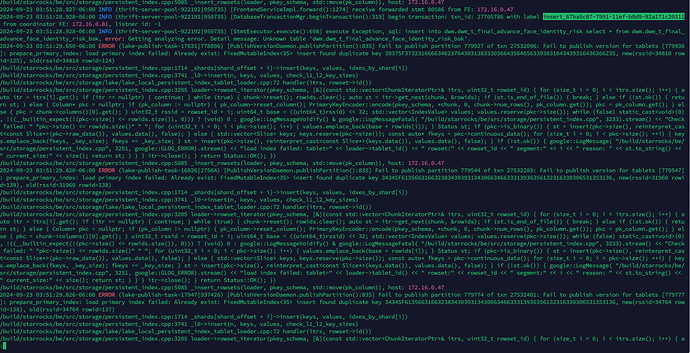

```
2024-09-23 03:51:29.650-06:00 ERROR (lake-publish-task-17631|778896) [PublishVersionDaemon.publishPartition():835] Fail to publish partition 779927 of txn 27532096: Fail to publish version for tablets [779936]: prepare_primary_index: load primary index failed: Already exist: FixedMutableIndex<35> insert found duplicate key 39375F3732316666346237643031383330366435646563393831643439316436366235, new(rssid=34818 rowid=125), old(rssid=34818 rowid=124)
/build/starrocks/be/src/storage/persistent_index.cpp:1714 _shards[shard_offset + i]->insert(keys, values, idxes_by_shard[i])
/build/starrocks/be/src/storage/persistent_index.cpp:3741 _l0->insert(n, keys, values, check_l1_l2_key_sizes)
/build/starrocks/be/src/storage/lake/lake_local_persistent_index_tablet_loader.cpp:72 handler(itrs, rowset->id())
/build/starrocks/be/src/storage/persistent_index.cpp:3205 loader->rowset_iterator(pkey_schema, [&](const std::vector& itrs, uint32_t rowset_id) { for (size_t i = 0; i < itrs.size(); i++) { auto itr = itrs[i].get(); if (itr == nullptr) { continue; } while (true) { chunk->reset(); rowids.clear(); auto st = itr->get_next(chunk, &rowids); if (st.is_end_of_file()) { break; } else if (!st.ok()) { return st; } else { Column* pkc = nullptr; if (pk_column != nullptr) { pk_column->reset_column(); PrimaryKeyEncoder::encode(pkey_schema, chunk, 0, chunk->num_rows(), pk_column.get()); pkc = pk_column.get(); } else { pkc = chunk->columns()[0].get(); } uint32_t rssid = rowset_id + i; uint64_t base = ((uint64_t)rssid) << 32; std::vector values; values.reserve(pkc->size()); while (false) static_cast(0), !((__builtin_expect(!(pkc->size() <= rowids.size()), 0))) ? (void) 0 : google::LogMessageVoidify() & google::LogMessageFatal( "/build/starrocks/be/src/storage/persistent_index.cpp", 3233).stream() << "Check failed: " "pkc->size() <= rowids.size()" " "; for (uint32_t i = 0; i < pkc->size(); i++) { values.emplace_back(base + rowids[i]); } Status st; if (pkc->is_binary()) { st = insert(pkc->size(), reinterpret_cast<const Slice>(pkc->raw_data()), values.data(), false); } else { std::vector keys; keys.reserve(pkc->size()); const auto* fkeys = pkc->continuous_data(); for (size_t i = 0; i < pkc->size(); ++i) { keys.emplace_back(fkeys, _key_size); fkeys += _key_size; } st = insert(pkc->size(), reinterpret_cast<const Slice*>(keys.data()), values.data(), false); } if (!st.ok()) { google::LogMessage( "/build/starrocks/be/src/storage/persistent_index.cpp", 3251, google::GLOG_ERROR).stream() << "load index failed: tablet=" << loader->tablet_id() << " rowset:" << rowset_id << " segment:" << i << " reason: " << st.to_string() << " current_size:" << size(); return st; } } } itr->close(); } return Status::OK(); })
/build/starrocks/be/src/storage/persistent_index.cpp:5085 _insert_rowsets(loader, pkey_schema, std::move(pk_column)), host: 172.16.0.47

2024-09-23 03:51:29.820-06:00 ERROR (lake-publish-task-16026|27564) [PublishVersionDaemon.publishPartition():835] Fail to publish partition 779544 of txn 27532283: Fail to publish version for tablets [779547]: prepare_primary_index: load primary index failed: Already exist: FixedMutableIndex<35> insert found duplicate key 34345F6135663166323834303931343066346633313936356132316330306531353136, new(rssid=31960 rowid=139), old(rssid=31960 rowid=138)
[stack trace identical to the first entry, host: 172.16.0.47]

2024-09-23 03:51:29.826-06:00 ERROR (lake-publish-task-17947|937426) [PublishVersionDaemon.publishPartition():835] Fail to publish partition 779774 of txn 27532401: Fail to publish version for tablets [779777]: prepare_primary_index: load primary index failed: Already exist: FixedMutableIndex<35> insert found duplicate key 34345F6135663166323834303931343066346633313936356132316330306531353136, new(rssid=34764 rowid=138), old(rssid=34764 rowid=137)
```

【Business impact】All Flink jobs that involve the DWM result data have currently stopped running.

【Shared-data (storage-compute separation)?】Yes, shared-data cluster

【StarRocks version】e.g. 3.2.6

【Cluster size】e.g. 5 FE + 5 CN (FE and CN co-located on the same machines)

【Machine specs】3×16c64g + 2×24c196g (temporarily scaled up; all machines will later be upgraded to 24c196g)

【Contact】

【Attachments】

- fe.log

- be.log

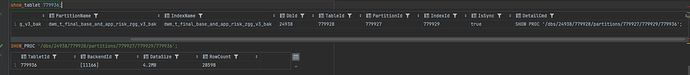

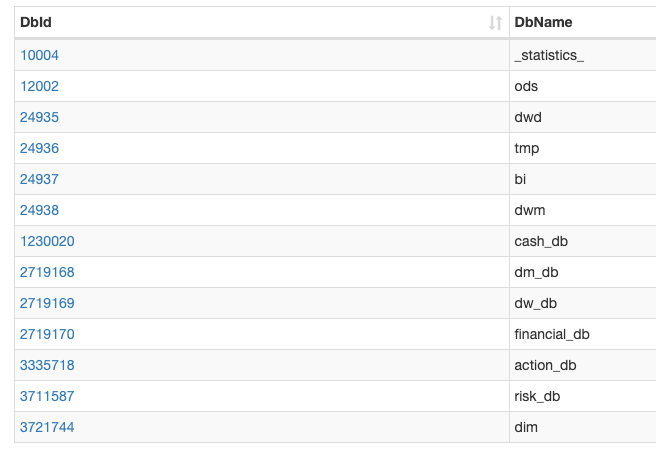

- ods database: normal

- dwm database: abnormal

![image|690x189](upload://fk6n72X8cWR032Etex223uB196K.png)