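[Details] In shared-data (storage-compute separation) HDFS mode, all CN nodes crash shortly after being registered

[Shared-data mode] Yes

[StarRocks version] 3.3.0

[Cluster size] 3 FE (1 leader + 2 followers) + 9 CN (JDK 11)

[Attachment] Screenshot of the cn.log error is below

Is a storage volume configured for the shared-data cluster?

enable_load_volume_from_conf = false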

CREATE STORAGE VOLUME def_volume

TYPE = HDFS

LOCATIONS = ("hdfs://127.0.0.1:9000/user/starrocks/");

SET def_volume AS DEFAULT STORAGE VOLUME;

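I followed these steps and it still does not work.

Check the CN's jni-info.log.

Check whether JAVA_HOME is set correctly for the CN process.

After the process starts, run: cat /proc/$be_pid/environ | tr "\0" "\n" | grep -a JAVA_

The log is empty, there is nothing in it.

Is the whole log directory empty?

I can't find anything. The CN crashes almost the instant it starts.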

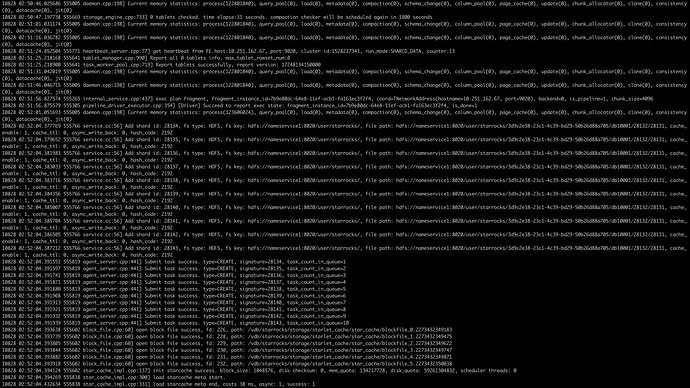

In the cn.INFO file:

I0828 02:52:04.393918 555602 block_file.cpp:60] open block file success, fd: 232, path: /vdb/starrocks/storage/starlet_cache/star_cache/blockfile_5.2273432350038

I0828 02:52:04.394124 555602 star_cache_impl.cpp:137] init starcache success. block_size: 1048576, disk checksum: 0, mem_quota: 134217728, disk_quota: 59261304832, scheduler threads: 0

I0828 02:52:04.394269 555838 star_cache_impl.cpp:300] load starcache meta start.

I0828 02:52:04.432634 555838 star_cache_impl.cpp:331] load starcache meta end, costs 38 ms, async: 1, success: 1

It crashes at this point.

Only jni.INFO.log has nothing in it.

The last lines of cn.INFO are:

I0828 02:52:04.387212 555766 service.cc:56] Add shard id: 28143, fs type: HDFS, fs key: hdfs://nameservice1:8020/user/starrocks/, file path: hdfs://nameservice1:8020/user/starrocks/3d9c2e38-23c1-4c39-bd29-50b26d88a705/db10001/28132/28131, cache_enable: 1, cache_ttl: 0, async_write_back: 0, hash_code: 2192

I0828 02:52:04.391551 555819 agent_server.cpp:441] Submit task success. type=CREATE, signature=28134, task_count_in_queue=1

I0828 02:52:04.391597 555819 agent_server.cpp:441] Submit task success. type=CREATE, signature=28135, task_count_in_queue=2

I0828 02:52:04.391741 555819 agent_server.cpp:441] Submit task success. type=CREATE, signature=28136, task_count_in_queue=3

I0828 02:52:04.391871 555819 agent_server.cpp:441] Submit task success. type=CREATE, signature=28137, task_count_in_queue=4

I0828 02:52:04.391880 555819 agent_server.cpp:441] Submit task success. type=CREATE, signature=28138, task_count_in_queue=5

I0828 02:52:04.391908 555819 agent_server.cpp:441] Submit task success. type=CREATE, signature=28139, task_count_in_queue=6

I0828 02:52:04.391913 555819 agent_server.cpp:441] Submit task success. type=CREATE, signature=28140, task_count_in_queue=7

I0828 02:52:04.391921 555819 agent_server.cpp:441] Submit task success. type=CREATE, signature=28141, task_count_in_queue=8

I0828 02:52:04.391932 555819 agent_server.cpp:441] Submit task success. type=CREATE, signature=28142, task_count_in_queue=9

I0828 02:52:04.391939 555819 agent_server.cpp:441] Submit task success. type=CREATE, signature=28143, task_count_in_queue=10

I0828 02:52:04.393638 555602 block_file.cpp:60] open block file success, fd: 226, path: /vdb/starrocks/storage/starlet_cache/star_cache/blockfile_0.2273432349183

I0828 02:52:04.393739 555602 block_file.cpp:60] open block file success, fd: 228, path: /vdb/starrocks/storage/starlet_cache/star_cache/blockfile_1.2273432349475

I0828 02:52:04.393805 555602 block_file.cpp:60] open block file success, fd: 229, path: /vdb/starrocks/storage/starlet_cache/star_cache/blockfile_2.2273432349622

I0828 02:52:04.393844 555602 block_file.cpp:60] open block file success, fd: 230, path: /vdb/starrocks/storage/starlet_cache/star_cache/blockfile_3.2273432349747

I0828 02:52:04.393882 555602 block_file.cpp:60] open block file success, fd: 231, path: /vdb/starrocks/storage/starlet_cache/star_cache/blockfile_4.2273432349871

I0828 02:52:04.393918 555602 block_file.cpp:60] open block file success, fd: 232, path: /vdb/starrocks/storage/starlet_cache/star_cache/blockfile_5.2273432350038

I0828 02:52:04.394124 555602 star_cache_impl.cpp:137] init starcache success. block_size: 1048576, disk checksum: 0, mem_quota: 134217728, disk_quota: 59261304832, scheduler threads: 0

I0828 02:52:04.394269 555838 star_cache_impl.cpp:300] load starcache meta start.

I0828 02:52:04.432634 555838 star_cache_impl.cpp:331] load starcache meta end, costs 38 ms, async: 1, success: 1

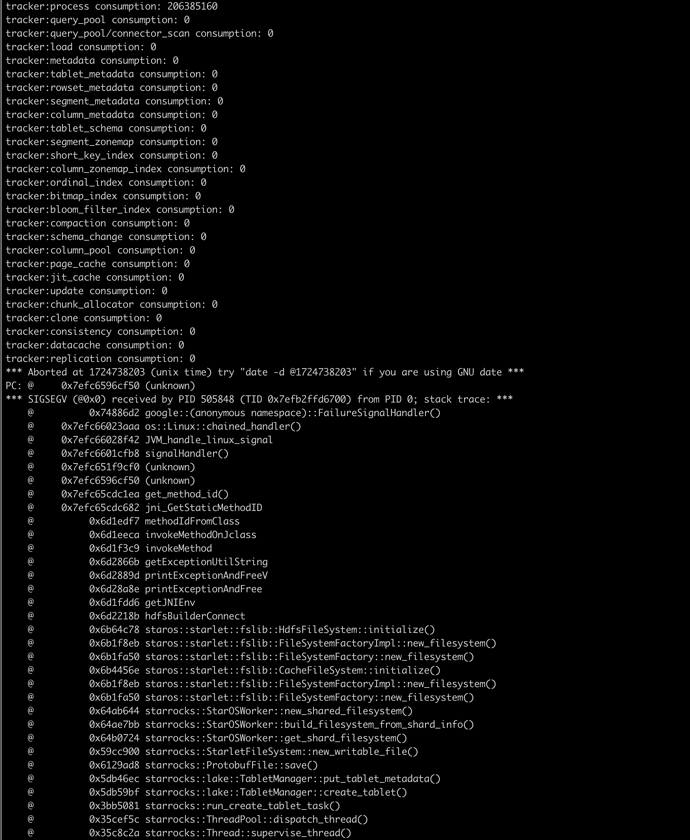

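Take a look at the log in cn.out.

It looks like the JVM is misconfigured.

java -version works, so as far as I can tell the JDK is installed.

Edit the startup script to echo JAVA_HOME and see what it prints.

Can you confirm JAVA_HOME is the expected value?

Yes: /usr/lib/jvm/jdk-11-oracle-x64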

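This is a failure in the HDFS native library when it calls org.apache.hadoop.fs.FileSystem.loadFileSystems(). Please check: are the correct hdfs jars present under be/lib/hadoop/hdfs? Are there hdfs jars of the wrong version elsewhere in the environment? Is the HDFS service version compatible with the hdfs jar version that StarRocks uses?

The dependency jars are present under be/lib/hadoop/hdfs. The hdfs jar version used by StarRocks is higher than the HDFS service version.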
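One way to answer the "wrong or duplicate jar" question concretely is to list every jar that actually ships org.apache.hadoop.fs.FileSystem. The following is only a rough sketch: the class name `FindClassInJars` and the method `jarsContaining` are made up for illustration, and the default root `be/lib/hadoop` is taken from the path mentioned in this thread, so pass your real install path as the first argument.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.jar.JarFile;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper, not part of StarRocks or Hadoop.
class FindClassInJars {

    /** Returns the sorted, root-relative paths of all jars under root that contain className. */
    static List<String> jarsContaining(Path root, String className) throws IOException {
        String entry = className.replace('.', '/') + ".class";

        // Collect every *.jar file below root (recursively).
        List<Path> jars;
        try (Stream<Path> walk = Files.walk(root)) {
            jars = walk.filter(p -> Files.isRegularFile(p) && p.toString().endsWith(".jar"))
                       .collect(Collectors.toList());
        }

        // Keep only the jars that carry the class file.
        List<String> hits = new ArrayList<>();
        for (Path jar : jars) {
            try (JarFile jf = new JarFile(jar.toFile())) {
                if (jf.getJarEntry(entry) != null) {
                    hits.add(root.relativize(jar).toString());
                }
            }
        }
        Collections.sort(hits);
        return hits;
    }

    public static void main(String[] args) throws IOException {
        // Assumed default root; override with the real directory.
        Path root = Paths.get(args.length > 0 ? args[0] : "be/lib/hadoop");
        List<String> hits = jarsContaining(root, "org.apache.hadoop.fs.FileSystem");
        if (hits.isEmpty()) {
            System.out.println("No jar under " + root + " contains FileSystem");
        } else {
            hits.forEach(System.out::println);
            if (hits.size() > 1) {
                System.out.println("More than one jar provides FileSystem; check for version conflicts");
            }
        }
    }
}
```

If nothing is printed for the directory the CN uses, or several jars with different versions show up, that would be consistent with the class-loading failure described above. Running it against any other directory that ends up on CLASSPATH can also help rule out a stray Hadoop installation.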

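The failure is in the HDFS native library's call to org.apache.hadoop.fs.FileSystem.loadFileSystems(). There are two likely causes: the class or method cannot be found, or the call to org.apache.hadoop.fs.FileSystem.loadFileSystems() itself fails. For class lookup, classes are loaded from the CLASSPATH environment variable, so check whether $HADOOP_CLASSPATH or $CLASSPATH is set on the system; if neither is set, define CLASSPATH yourself and add the relevant jars under be/lib/hadoop to it. For a failure inside FileSystem.loadFileSystems(), you can write a small Java test class that calls org.apache.hadoop.fs.FileSystem.loadFileSystems() via reflection (the method is private, so reflection is required) and see whether it throws.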
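A minimal sketch of such a reflection test might look like the following. The class name `LoadFileSystemsProbe`, the method `probe`, and the status strings are invented for illustration; the only Hadoop name used is the one from this thread. It assumes loadFileSystems() is a static no-argument method, and it runs in a standalone JVM, so it only approximates what the CN does through the native library.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;

// Hypothetical test class, not part of StarRocks or Hadoop.
class LoadFileSystemsProbe {

    /** Invokes a static no-arg method by name and reports which stage failed, if any. */
    static String probe(String className, String methodName) {
        try {
            Class<?> cls = Class.forName(className);          // fails if the class is not on the classpath
            Method m = cls.getDeclaredMethod(methodName);     // finds private methods too
            m.setAccessible(true);                            // needed because the target is private
            m.invoke(null);                                   // null receiver: static method assumed
            return "OK";
        } catch (ClassNotFoundException | NoClassDefFoundError e) {
            return "CLASS_NOT_FOUND: " + e;
        } catch (NoSuchMethodException e) {
            return "METHOD_NOT_FOUND: " + e;
        } catch (InvocationTargetException e) {
            // The method was found and called, but threw; the cause is the real error.
            return "INVOKE_FAILED: " + e.getCause();
        } catch (ReflectiveOperationException | RuntimeException | LinkageError e) {
            return "REFLECTION_FAILED: " + e;
        }
    }

    public static void main(String[] args) {
        System.out.println(probe("org.apache.hadoop.fs.FileSystem", "loadFileSystems"));
    }
}
```

Compile it and run it with the same jars the CN would see, for example by putting the jars under be/lib/hadoop on the `-cp` argument. A `CLASS_NOT_FOUND` result points at the classpath, while `INVOKE_FAILED` prints the underlying exception from Hadoop itself, which is usually the more useful clue.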