为了更快的定位您的问题,请提供以下信息,谢谢

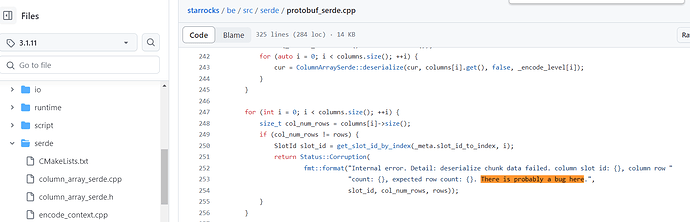

【详述】starrocks cn隔一段时间就重启

【背景】

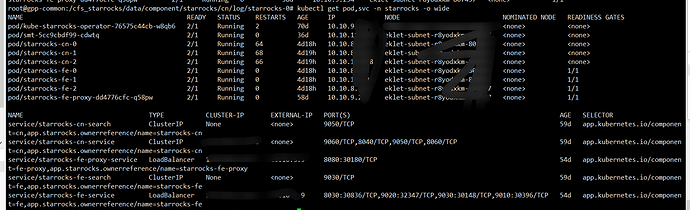

k8s部署starrocks,3fe + 3cn 存算分离 腾讯的eks(k8s) + cos对象存储 + cfs挂载日志和cn缓存

3.1.7版本,后来升级到3.1.11 就开始反馈有cn间断重启了,貌似不升级的时候是没问题的。不知道是不是升级的时候导致的问题

【业务影响】数仓任务通过jdbc执行insert任务 任务异常

到处找出来的一些日志

任务调度异常日志:

[ERROR] 2024-06-17 08:48:20.630 - [taskAppId=TASK-1144-589035-3175704]:[254] - task scheduler failure

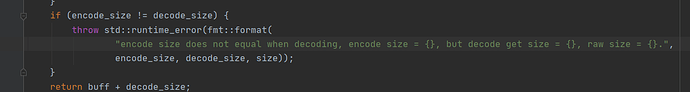

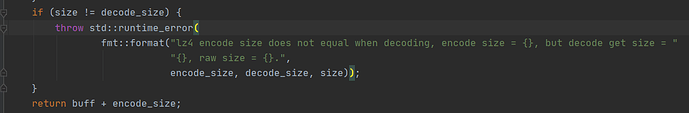

java.lang.RuntimeException: Runtime error: encode size does not equal when decoding, encode size = 4294967296, but decode get size = 1265, raw size = 2855.

[ERROR] 2024-06-17 08:37:14.666 - [taskAppId=TASK-1142-589033-3175558]:[372] - Failed to get primary backend. shard id: 2648066

java.sql.SQLSyntaxErrorException: Failed to get primary backend. shard id: 2648066

[ERROR] 2024-06-17 08:37:19.086 - [taskAppId=TASK-1144-589035-3175555]:[254] - task scheduler failure

java.lang.RuntimeException: rpc failed, host: starrocks-cn-2.starrocks-cn-search.starrocks.svc.cluster.local

有好几个sql task都会出现上面的异常,绝大部分任务都正常。

不清楚原因到底是什么 哪个异常导致的哪个异常。

有个sql经常发生异常,拷贝出来,手动执行,貌似又没有问题。

insert overwrite db_elderly_care_ads.ads_clique_operation_client_dap partition(part_date=‘2024’) – 客户

with

ac_client as

( select t.org_id , 1 public_client_cmt – 公海客户

, case when charger_id != ‘’ or sharer_id != ‘’ then 1 else 0 end client_cmt – 客户数

, case when user_id != ‘’ then 1 else 0 end user_cmt – 用户数

, null order_cmt , null finish_order_cmt from db_elderly_care_ods.ods_tb_client_info_dap t where t.delete_status = 0 and date(t.create_time) < date_trunc(‘year’,add_months(‘2024-06-13’, 12)) – 时间 – 时间区级

) , – 订单

ac_order as

( select t.org_id , 0 public_client_cmt – 公海客户

, 0 client_cmt – 客户数

, 0 user_cmt – 用户数

, t.client_id order_cmt – 订单数

, case when o.order_status = 5 then t.client_id else null end finish_order_cmt – 完成订单数

from db_elderly_care_ods.ods_tb_client_info_dap t left join db_elderly_care_ods.ods_tb_order_dap as o on t.user_id = o.customer_id where t.delete_status = 0 and o.delete_status = 0 and o.order_type = 0 and date(t.create_time) < date_trunc(‘year’,add_months(‘2024-06-13’, 12)) – 时间 – 时间区级

) , – 数据汇总 + 关联维度

se_vi as

( select one_level , two_level , three_level , four_level , five_level , public_client_cmt , client_cmt , user_cmt , order_cmt , finish_order_cmt from ( select * from ac_client union all select * from ac_order ) s join db_elderly_care_dim.dim_org_level_dap e on s.org_id = e.org_id and org_type_id in (3,4, 5, 6, 7, 8, 9) – 长者跟养老机构挂钩,与集团本身无关

) ,

ac_re as

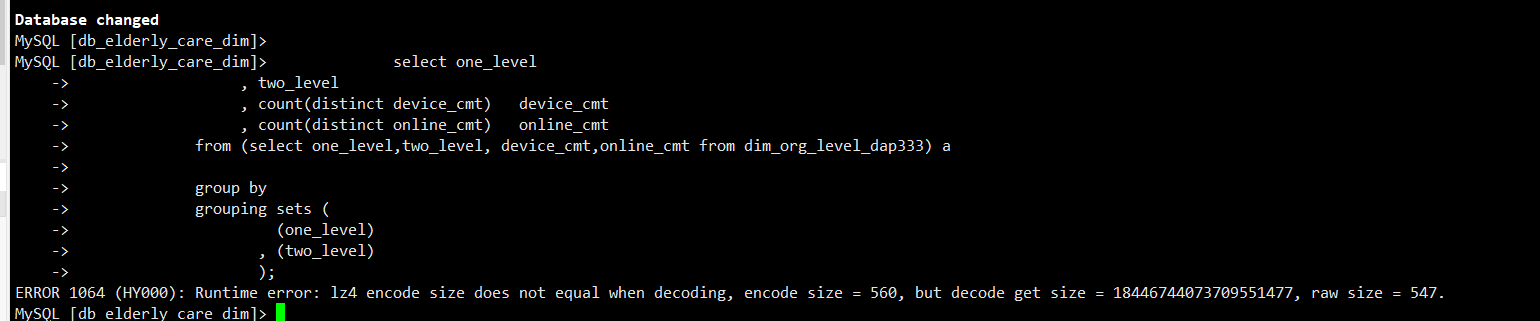

( select one_level , two_level , three_level , four_level , five_level , sum(public_client_cmt) public_client_cmt , sum(client_cmt) client_cmt , sum(user_cmt) user_cmt , count(distinct order_cmt) order_cmt , count(distinct finish_order_cmt) finish_order_cmt from se_vi group by grouping sets ( (one_level) , (two_level) , (three_level) , (four_level) , (five_level) ) ),

ac_bb as

( select case when one_level != ‘’ then one_level when two_level != ‘’ then two_level when three_level != ‘’ then three_level when four_level != ‘’ then four_level when five_level != ‘’ then five_level end org_id , public_client_cmt , client_cmt , user_cmt , order_cmt , finish_order_cmt from ac_re

),

ac_dd as

( select org_id , public_client_cmt , client_cmt , user_cmt , order_cmt , finish_order_cmt , ifnull(client_cmt / public_client_cmt, 0.00) client_cmt_rate , ifnull(user_cmt / client_cmt, 0.00) user_cmt_rate , ifnull(order_cmt / user_cmt, 0.00) order_cmt_rate , ifnull(finish_order_cmt / order_cmt, 0.00) finish_order_cmt_rate from ac_bb where org_id != ‘null’ ),

ac_cc as

( select b.org_id , o.org_name , o.tenant_id , public_client_cmt , client_cmt , user_cmt , order_cmt , finish_order_cmt , client_cmt_rate , user_cmt_rate , order_cmt_rate , finish_order_cmt_rate , substr(current_timestamp(),1, 19) , ‘y’ , substring(‘2024-06-13’, 1, 4) from ac_dd b join db_elderly_care_ods.ods_tb_org_dap o on b.org_id = o.org_id

)

select * from ac_cc

cn.out日志:

start time: Mon Jun 17 08:37:32 CST 2024

3.1.11 RELEASE (build 34f131b)

query_id:d286b8c1-2c43-11ef-a1b2-525400df7046, fragment_instance:d286b8c1-2c43-11ef-a1b2-525400df7060

tracker:process consumption: 4145881744

tracker:query_pool consumption: 712584224

tracker:load consumption: 0

tracker:metadata consumption: 20034043

tracker:tablet_metadata consumption: 162087

tracker:rowset_metadata consumption: 0

tracker:segment_metadata consumption: 3129185

tracker:column_metadata consumption: 16742771

tracker:tablet_schema consumption: 162087

tracker:segment_zonemap consumption: 1950236

tracker:short_key_index consumption: 238325

tracker:column_zonemap_index consumption: 4199267

tracker:ordinal_index consumption: 4431752

tracker:bitmap_index consumption: 2400

tracker:bloom_filter_index consumption: 0

tracker:compaction consumption: 0

tracker:schema_change consumption: 0

tracker:column_pool consumption: 0

tracker:page_cache consumption: 2721436736

tracker:update consumption: 34080

tracker:chunk_allocator consumption: 320995192

tracker:clone consumption: 0

tracker:consistency consumption: 0

tracker:datacache consumption: 0

tracker:replication consumption: 0

*** Aborted at 1718585528 (unix time) try “date -d @1718585528” if you are using GNU date ***

PC: @ 0x85579c5 svb_decode_avx_simple

*** SIGSEGV (@0x7f60539d4000) received by PID 27 (TID 0x7f60c26c4640) from PID 1402814464; stack trace: ***

@ 0x7cfae6a google::(anonymous namespace)::FailureSignalHandler()

@ 0x7f6127cd1520 (unknown)

@ 0x85579c5 svb_decode_avx_simple

@ 0x8557d21 streamvbyte_decode

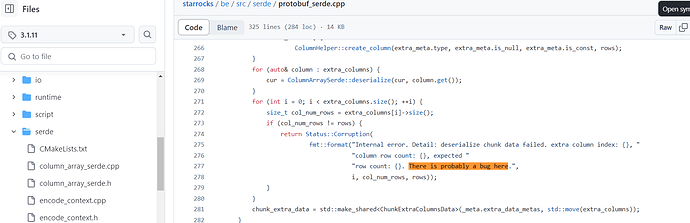

@ 0x55619ff starrocks::serde::(anonymous namespace)::decode_integers<>()

@ 0x5563a5b starrocks::ColumnVisitorMutableAdapter<>::visit()

@ 0x3d1001d starrocks::ColumnFactory<>::accept_mutable()

@ 0x55669ea starrocks::serde::ColumnArraySerde::deserialize()

@ 0x5566ccf starrocks::ColumnVisitorMutableAdapter<>::visit()

@ 0x3d638dd starrocks::ColumnFactory<>::accept_mutable()

@ 0x55669ea starrocks::serde::ColumnArraySerde::deserialize()

@ 0x5566c83 starrocks::ColumnVisitorMutableAdapter<>::visit()

@ 0x3d6561d starrocks::ColumnFactory<>::accept_mutable()

@ 0x55669ea starrocks::serde::ColumnArraySerde::deserialize()

@ 0x65620ba starrocks::serde::ProtobufChunkDeserializer::deserialize()

@ 0x662074b starrocks::DataStreamRecvr::SenderQueue::_deserialize_chunk()

@ 0x66249c7 starrocks::DataStreamRecvr::PipelineSenderQueue::get_chunk()

@ 0x66179d6 starrocks::DataStreamRecvr::get_chunk_for_pipeline()

@ 0x60a9493 starrocks::pipeline::ExchangeSourceOperator::pull_chunk()

@ 0x5c7e4d0 starrocks::pipeline::PipelineDriver::process()

@ 0x6508830 starrocks::pipeline::GlobalDriverExecutor::_worker_thread()

@ 0x68ac92c starrocks::ThreadPool::dispatch_thread()

@ 0x68a5d6a starrocks: :supervise_thread()

:supervise_thread()

@ 0x7f6127d23ac3 (unknown)

@ 0x7f6127db5850 (unknown)

@ 0x0 (unknown)

start time: Mon Jun 17 08:52:35 CST 2024

cn.INFO.xxx日志:

I0617 08:52:08.569945 365 pipeline_driver_executor.cpp:326] [Driver] Fail to report exec state due to query not found: fragment_instance_id=d286b8c1-2c43-11ef-a1b2-525400df7048

I0617 08:52:08.574666 368 tablet_sink.cpp:2008] Olap table sink statistics. load_id: d286b8c1-2c43-11ef-a1b2-525400df7046, txn_id: 9853481, add chunk time(ms)/wait lock time(ms)/num: {2649730:(0)(0)(1)} {2612629:(0)(0)(1)} {2612632:(0)(0)(1)}

I0617 08:52:08.576160 365 pipeline_driver_executor.cpp:326] [Driver] Fail to report exec state due to query not found: fragment_instance_id=d286b8c1-2c43-11ef-a1b2-525400df705d

I0617 08:52:08.576581 365 pipeline_driver_executor.cpp:326] [Driver] Fail to report exec state due to query not found: fragment_instance_id=d286b8c1-2c43-11ef-a1b2-525400df7059

W0617 08:52:08.586138 369 stack_util.cpp:350] 2024-06-17 08:52:08.586106, query_id=d286b8c1-2c43-11ef-a1b2-525400df7046, fragment_instance_id=d286b8c1-2c43-11ef-a1b2-525400df7060 throws exception: std::runtime_error, trace:

W0617 08:52:08.586223 369 pipeline_driver.cpp:308] pull_chunk returns not ok status Runtime error: Runtime error: encode size does not equal when decoding, encode size = 4294973428, but decode get size = 1989, raw size = 6120.

W0617 08:52:08.586256 369 pipeline_driver_executor.cpp:166] [Driver] Process error, query_id=d286b8c1-2c43-11ef-a1b2-525400df7046, instance_id=d286b8c1-2c43-11ef-a1b2-525400df7060, status=Runtime error: Runtime error: encode size does not equal when decoding, encode size = 4294973428, but decode get size = 1989, raw size = 6120.

W0617 08:52:08.599530 371 stack_util.cpp:350] 2024-06-17 08:52:08.599493, query_id=d286b8c1-2c43-11ef-a1b2-525400df7046, fragment_instance_id=d286b8c1-2c43-11ef-a1b2-525400df7057 throws exception: std::runtime_error, trace:

W0617 08:52:08.601045 371 pipeline_driver.cpp:308] pull_chunk returns not ok status Runtime error: Runtime error: encode size does not equal when decoding, encode size = 9178344165883248640, but decode get size = 11944910, raw size = 27852800.

W0617 08:52:08.601069 371 pipeline_driver_executor.cpp:166] [Driver] Process error, query_id=d286b8c1-2c43-11ef-a1b2-525400df7046, instance_id=d286b8c1-2c43-11ef-a1b2-525400df7057, status=Runtime error: Runtime error: encode size does not equal when decoding, encode size = 9178344165883248640, but decode get size = 11944910, raw size = 27852800.

I0617 08:52:08.601572 365 pipeline_driver_executor.cpp:326] [Driver] Fail to report exec state due to query not found: fragment_instance_id=d286b8c1-2c43-11ef-a1b2-525400df7057

I0617 08:52:35.489236 27 daemon.cpp:289] version 3.1.11-34f131b

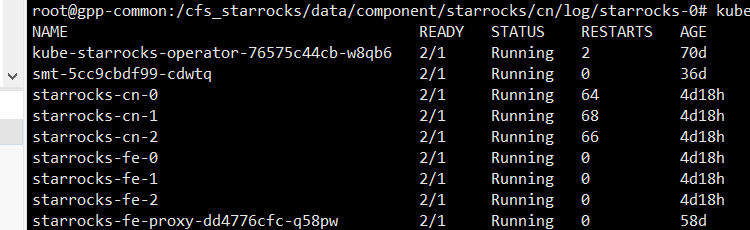

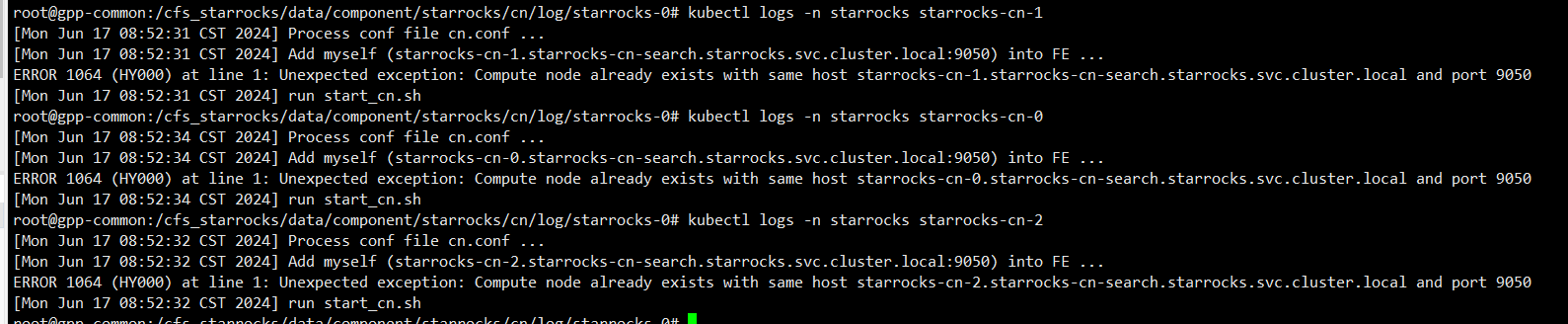

k8s cn pod日志: