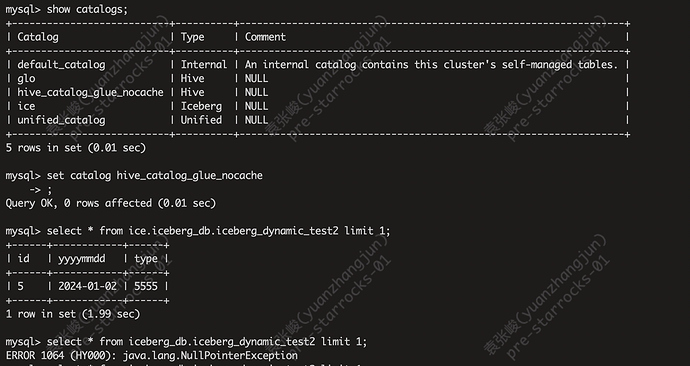

【Details】Querying an Iceberg table through a Hive catalog fails immediately with a NullPointerException: [CachingHiveMetastore.get():625] Error occurred when loading cache

com.google.common.util.concurrent.UncheckedExecutionException: java.lang.NullPointerException

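【Background】

【Business impact】

【Shared-data (storage-compute separation)】No

【StarRocks version】3.2.6

【Cluster size】e.g. 3 FE (1 follower + 2 observers) + 5 BE (FE and BE co-located)

【Machine info】CPU virtual cores / memory / NIC, e.g. 48C/64G/10GbE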

【Attachments】

Error log

2024-05-20 03:42:03.864Z ERROR (starrocks-mysql-nio-pool-212|60683) [CachingHiveMetastore.get():625] Error occurred when loading cache

com.google.common.util.concurrent.UncheckedExecutionException: java.lang.NullPointerException

at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2085) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache.get(LocalCache.java:4011) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache.getOrLoad(LocalCache.java:4034) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LocalLoadingCache.get(LocalCache.java:5010) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LocalLoadingCache.getUnchecked(LocalCache.java:5017) ~[spark-dpp-1.0.0.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.get(CachingHiveMetastore.java:623) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.getPartition(CachingHiveMetastore.java:304) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetastoreOperations.getPartition(HiveMetastoreOperations.java:238) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveStatisticsProvider.getEstimatedRowCount(HiveStatisticsProvider.java:148) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveStatisticsProvider.createUnpartitionedStats(HiveStatisticsProvider.java:130) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveStatisticsProvider.getTableStatistics(HiveStatisticsProvider.java:82) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetadata.getTableStatistics(HiveMetadata.java:268) ~[starrocks-fe.jar:?]

at com.starrocks.connector.CatalogConnectorMetadata.getTableStatistics(CatalogConnectorMetadata.java:150) ~[starrocks-fe.jar:?]

at com.starrocks.server.MetadataMgr.lambda$getTableStatistics$8(MetadataMgr.java:455) ~[starrocks-fe.jar:?]

at java.util.Optional.map(Optional.java:215) ~[?:1.8.0_191]

at com.starrocks.server.MetadataMgr.getTableStatistics(MetadataMgr.java:455) ~[starrocks-fe.jar:?]

at com.starrocks.server.MetadataMgr.getTableStatistics(MetadataMgr.java:469) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.statistics.StatisticsCalculator.computeHMSTableScanNode(StatisticsCalculator.java:487) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.statistics.StatisticsCalculator.visitLogicalHiveScan(StatisticsCalculator.java:465) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.statistics.StatisticsCalculator.visitLogicalHiveScan(StatisticsCalculator.java:168) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.operator.logical.LogicalHiveScanOperator.accept(LogicalHiveScanOperator.java:78) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.statistics.StatisticsCalculator.estimatorStats(StatisticsCalculator.java:184) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.task.DeriveStatsTask.execute(DeriveStatsTask.java:63) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.task.SeriallyTaskScheduler.executeTasks(SeriallyTaskScheduler.java:65) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.Optimizer.memoOptimize(Optimizer.java:719) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.Optimizer.optimizeByCost(Optimizer.java:251) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.Optimizer.optimize(Optimizer.java:174) ~[starrocks-fe.jar:?]

at com.starrocks.sql.StatementPlanner.createQueryPlan(StatementPlanner.java:203) ~[starrocks-fe.jar:?]

at com.starrocks.sql.StatementPlanner.plan(StatementPlanner.java:134) ~[starrocks-fe.jar:?]

at com.starrocks.sql.StatementPlanner.plan(StatementPlanner.java:91) ~[starrocks-fe.jar:?]

at com.starrocks.qe.StmtExecutor.execute(StmtExecutor.java:520) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.handleQuery(ConnectProcessor.java:413) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.dispatch(ConnectProcessor.java:607) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.processOnce(ConnectProcessor.java:901) ~[starrocks-fe.jar:?]

at com.starrocks.mysql.nio.ReadListener.lambda$handleEvent$0(ReadListener.java:69) ~[starrocks-fe.jar:?]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_191]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_191]

at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_191]

Caused by: java.lang.NullPointerException

at com.starrocks.connector.hive.HiveMetastoreApiConverter.toPartition(HiveMetastoreApiConverter.java:322) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetastore.getPartition(HiveMetastore.java:178) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.loadPartition(CachingHiveMetastore.java:308) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore$1.load(CachingHiveMetastore.java:137) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore$1.load(CachingHiveMetastore.java:134) ~[starrocks-fe.jar:?]

at com.google.common.cache.CacheLoader$1.load(CacheLoader.java:192) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LoadingValueReference.loadFuture(LocalCache.java:3570) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.loadSync(LocalCache.java:2312) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.lockedGetOrLoad(LocalCache.java:2189) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2079) ~[spark-dpp-1.0.0.jar:?]

... 37 more

2024-05-20 03:42:03.865Z WARN (starrocks-mysql-nio-pool-212|60683) [HiveMetadata.getTableStatistics():275] Failed to get table column statistics on [HiveTable{catalogName='hive_catalog_glue_nocache', hiveDbName='iceberg_db', hiveTableName='iceberg_dynamic_test2', resourceName='hive_catalog_glue_nocache', id=100002230, name='iceberg_dynamic_test2', type=HIVE, createTime=1709084883}]. error : com.google.common.util.concurrent.UncheckedExecutionException: java.lang.NullPointerException

2024-05-20 03:42:03.951Z ERROR (starrocks-mysql-nio-pool-212|60683) [CachingHiveMetastore.get():625] Error occurred when loading cache

com.google.common.util.concurrent.UncheckedExecutionException: java.lang.NullPointerException

at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2085) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache.get(LocalCache.java:4011) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache.getOrLoad(LocalCache.java:4034) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LocalLoadingCache.get(LocalCache.java:5010) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LocalLoadingCache.getUnchecked(LocalCache.java:5017) ~[spark-dpp-1.0.0.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.get(CachingHiveMetastore.java:623) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.getPartition(CachingHiveMetastore.java:304) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetastoreOperations.getPartition(HiveMetastoreOperations.java:238) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveStatisticsProvider.getEstimatedRowCount(HiveStatisticsProvider.java:148) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveStatisticsProvider.createUnknownStatistics(HiveStatisticsProvider.java:186) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetadata.getTableStatistics(HiveMetadata.java:281) ~[starrocks-fe.jar:?]

at com.starrocks.connector.CatalogConnectorMetadata.getTableStatistics(CatalogConnectorMetadata.java:150) ~[starrocks-fe.jar:?]

at com.starrocks.server.MetadataMgr.lambda$getTableStatistics$8(MetadataMgr.java:455) ~[starrocks-fe.jar:?]

at java.util.Optional.map(Optional.java:215) ~[?:1.8.0_191]

at com.starrocks.server.MetadataMgr.getTableStatistics(MetadataMgr.java:455) ~[starrocks-fe.jar:?]

at com.starrocks.server.MetadataMgr.getTableStatistics(MetadataMgr.java:469) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.statistics.StatisticsCalculator.computeHMSTableScanNode(StatisticsCalculator.java:487) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.statistics.StatisticsCalculator.visitLogicalHiveScan(StatisticsCalculator.java:465) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.statistics.StatisticsCalculator.visitLogicalHiveScan(StatisticsCalculator.java:168) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.operator.logical.LogicalHiveScanOperator.accept(LogicalHiveScanOperator.java:78) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.statistics.StatisticsCalculator.estimatorStats(StatisticsCalculator.java:184) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.task.DeriveStatsTask.execute(DeriveStatsTask.java:63) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.task.SeriallyTaskScheduler.executeTasks(SeriallyTaskScheduler.java:65) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.Optimizer.memoOptimize(Optimizer.java:719) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.Optimizer.optimizeByCost(Optimizer.java:251) ~[starrocks-fe.jar:?]

at com.starrocks.sql.optimizer.Optimizer.optimize(Optimizer.java:174) ~[starrocks-fe.jar:?]

at com.starrocks.sql.StatementPlanner.createQueryPlan(StatementPlanner.java:203) ~[starrocks-fe.jar:?]

at com.starrocks.sql.StatementPlanner.plan(StatementPlanner.java:134) ~[starrocks-fe.jar:?]

at com.starrocks.sql.StatementPlanner.plan(StatementPlanner.java:91) ~[starrocks-fe.jar:?]

at com.starrocks.qe.StmtExecutor.execute(StmtExecutor.java:520) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.handleQuery(ConnectProcessor.java:413) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.dispatch(ConnectProcessor.java:607) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.processOnce(ConnectProcessor.java:901) ~[starrocks-fe.jar:?]

at com.starrocks.mysql.nio.ReadListener.lambda$handleEvent$0(ReadListener.java:69) ~[starrocks-fe.jar:?]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_191]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_191]

at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_191]

Caused by: java.lang.NullPointerException

at com.starrocks.connector.hive.HiveMetastoreApiConverter.toPartition(HiveMetastoreApiConverter.java:322) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetastore.getPartition(HiveMetastore.java:178) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.loadPartition(CachingHiveMetastore.java:308) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore$1.load(CachingHiveMetastore.java:137) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore$1.load(CachingHiveMetastore.java:134) ~[starrocks-fe.jar:?]

at com.google.common.cache.CacheLoader$1.load(CacheLoader.java:192) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LoadingValueReference.loadFuture(LocalCache.java:3570) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.loadSync(LocalCache.java:2312) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.lockedGetOrLoad(LocalCache.java:2189) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2079) ~[spark-dpp-1.0.0.jar:?]

... 36 more