To help us locate your problem faster, please provide the following information. Thank you.

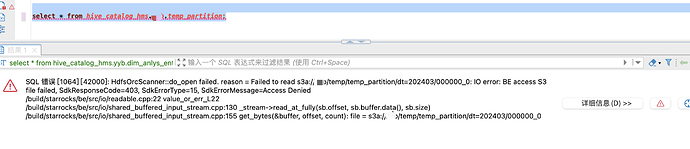

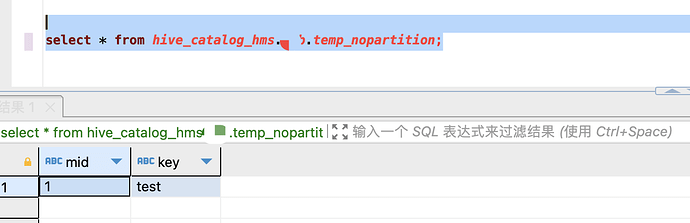

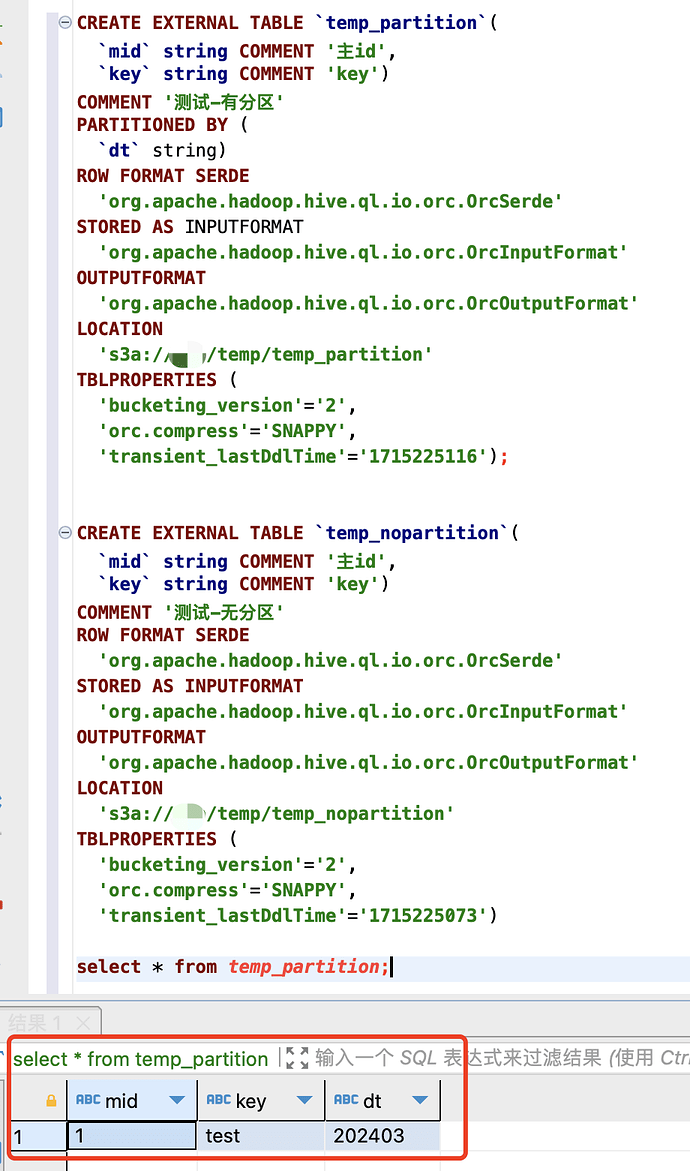

【Details】Queries and loads work normally when the s3a path contains no =, but they fail as soon as the path contains =, for example dt=20240301.

【Background】

SELECT * FROM FILES

(

"aws.s3.endpoint" = "…",

"path" = "s3a://bucket/datawarehouse/tables/dwd_financial_be_fee_mi/dt=202403/*",

"aws.s3.enable_ssl" = "false",

"aws.s3.access_key" = "…",

"aws.s3.secret_key" = "…",

"format" = "orc",

"aws.s3.use_aws_sdk_default_behavior" = "false",

"aws.s3.use_instance_profile" = "false",

"aws.s3.enable_path_style_access" = "true"

)

LIMIT 3

;

A packet capture shows that the HTTP interface is used. The request is: GET /bucket/?list-type=2&delimiter=%2F&max-keys=5000&prefix=datawarehouse%2Ftables%2Fdwd_financial_be_fee_mi%2Fdt%3D202403%2F&fetch-owner=false HTTP/1.1\r\n

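For an HTTP interface, = is presumably a special (reserved) character, so I suspect the = in the path is what makes the parsing go wrong.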
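To make the captured request easier to reason about, here is a minimal Python sketch that decodes its query string using only the standard library. The `request_target` string is copied from the capture above. The variable names are my own, and the script only applies standard percent-encoding rules; it does not reproduce what StarRocks or the S3-compatible server actually does internally.

```python
from urllib.parse import urlsplit, parse_qs, quote

# Request target copied verbatim from the packet capture.
request_target = (
    "/bucket/?list-type=2&delimiter=%2F&max-keys=5000"
    "&prefix=datawarehouse%2Ftables%2Fdwd_financial_be_fee_mi%2Fdt%3D202403%2F"
    "&fetch-owner=false"
)

# Split the query string into parameters and percent-decode the values.
params = parse_qs(urlsplit(request_target).query)
prefix = params["prefix"][0]
print("decoded prefix:", prefix)

# Re-encode the decoded prefix with every reserved character escaped,
# to compare against what was sent on the wire.
encoded = quote(prefix, safe="")
print("re-encoded prefix:", encoded)
```

In the capture the = inside the prefix is already sent as %3D, so under standard rules it decodes back to dt=202403/. If that holds, the client-side encoding looks normal, and it may be worth checking how the storage server decodes %3D in the prefix parameter. That is only a guess on my side.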

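【Business impact】Partitioned data cannot be loaded.

【Shared-data (storage-compute separation)?】No

【StarRocks version】e.g., 3.1.9

【Cluster size】e.g., 1 FE + 2 BE

【Machine specs】8C/32G/10 GbE

【Load or export method】files

【Contact】55309107@qq.com

【Attachments】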