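I see the same problem on a shared-data (storage-compute separated) cluster running 3.3.8. After I upgraded to 3.3.9, the problem did not appear on the day of the upgrade.

However, it started happening again the next day.

Data is written once per hour, loaded through a Hive catalog.

Table schema: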

CREATE TABLE `rtb_oks_conv_report` (

`__dt` datetime NULL COMMENT "",

`__time` bigint(20) NULL COMMENT "",

`tid` int(11) NULL COMMENT "",

`oks_cid` int(11) NULL COMMENT "",

`exchange_id` int(11) NULL COMMENT "",

`country` varchar(3) NULL COMMENT "",

`bundle` varchar(300) NULL COMMENT "",

`position` varchar(64) NULL COMMENT "",

`billing_type` int(11) NULL COMMENT "",

`interstitial` int(11) NULL COMMENT "",

`adm_tech` int(11) NULL COMMENT "",

`oks_conv` bigint(20) SUM NULL COMMENT "",

`oks_impr` bigint(20) SUM NULL COMMENT ""

) ENGINE=OLAP

AGGREGATE KEY(`__dt`, `__time`, `tid`, `oks_cid`, `exchange_id`, `country`, `bundle`, `position`, `billing_type`, `interstitial`, `adm_tech`)

COMMENT "OLAP"

PARTITION BY date_trunc('hour', __dt)

DISTRIBUTED BY HASH(`tid`, `oks_cid`) BUCKETS 16

PROPERTIES (

"compression" = "LZ4",

"datacache.enable" = "true",

"datacache.partition_duration" = "1 months",

"enable_async_write_back" = "false",

"partition_live_number" = "840",

"replication_num" = "1",

"storage_volume" = "volume_hdfs"

);

Once per hour, data is loaded from the Hive catalog with the following statements:

SET session query_timeout=3600;

REFRESH EXTERNAL TABLE cl_hive.dms.rtb_oks_conv_report;

INSERT OVERWRITE rtb.rtb_oks_conv_report PARTITION(__dt ='2025-03-10 01:00:00')

WITH LABEL `rtb_oks_conv_report_2025031009_1741574148750630090`

SELECT

'2025-03-10 01:00:00' AS __dt,

ts AS __time,

tid,

oks_cid,

exchange_id,

country,

bundle,

substr(`position`,0,64) as `position`,

billing_type,

interstitial,

adm_tech,

oks_conv,

oks_impr

FROM cl_hive.dms.rtb_oks_conv_report

WHERE d = '20250310' AND h = '09'
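
For anyone trying to reproduce this, the following is a minimal sketch of how the partition names and recent statistics jobs could be inspected right after an hourly overwrite. It assumes the standard StarRocks `SHOW PARTITIONS` and `SHOW ANALYZE STATUS` statements; the ordering and the limit of 5 are illustrative choices and not part of the original job.

```sql
-- List the most recent partitions of the target table, so their names
-- can be compared with any partition name reported in fe.warning.log.
SHOW PARTITIONS FROM rtb.rtb_oks_conv_report
ORDER BY PartitionName DESC
LIMIT 5;

-- List statistics collection jobs and their status.
SHOW ANALYZE STATUS;
```

Comparing the `PartitionName` column with the name in a warning shows whether the statistics job referred to a partition that no longer exists under that name.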

Each partition holds less than 2 MB of data.

Every hour, after the load job finishes, fe.warning.log prints the following warning:

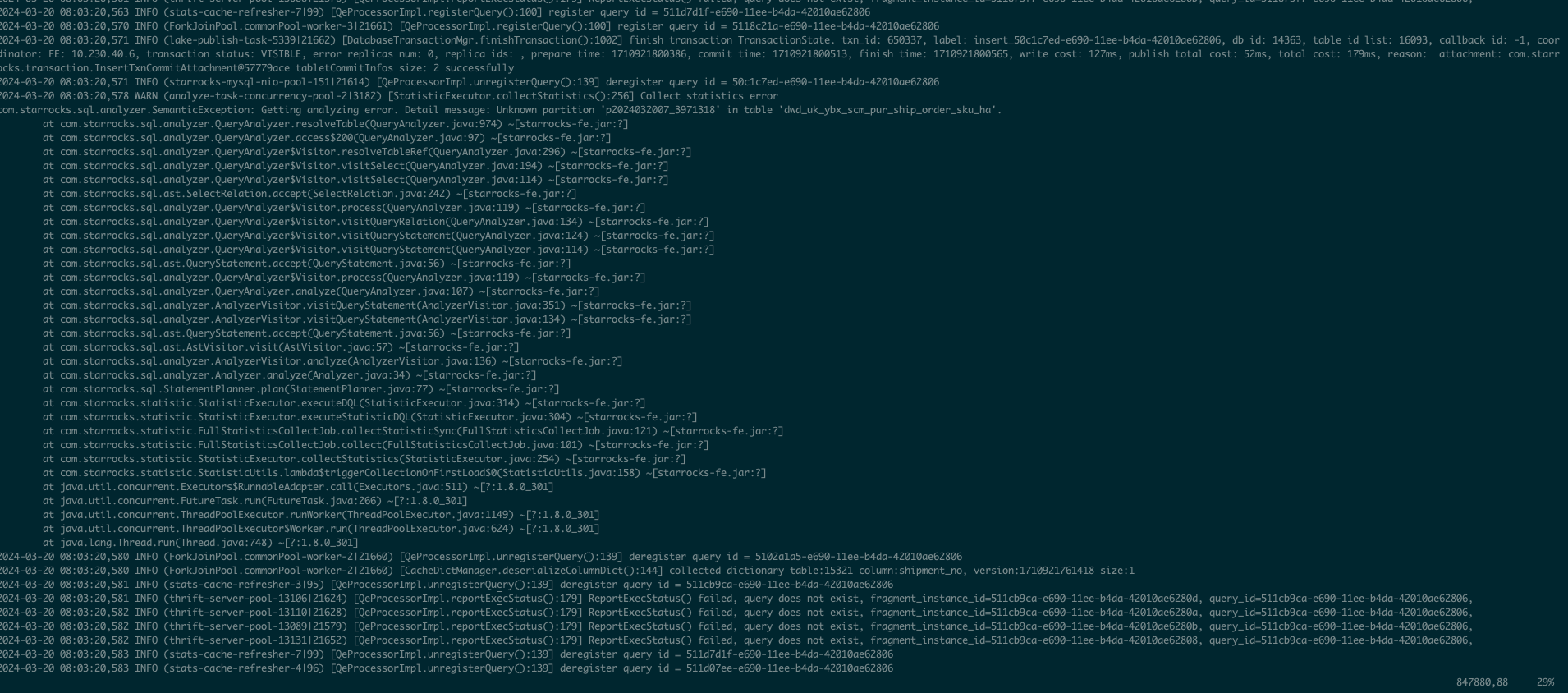

2025-03-10 01:24:00.976Z WARN (analyze-task-concurrency-pool-2|1362) [FullStatisticsCollectJob.collect():148] collect statistics task failed in job: FullStatisticsCollectJob{type=FULL, scheduleType=ONCE, db=com.starrocks.catalog.Database@2bb6, table=Table [id=545234, name=rtb_oks_conv_report, type=CLOUD_NATIVE], partitionIdList=[600084], columnNames=[__dt, __time, tid, oks_cid, exchange_id, country, bundle, position, billing_type, interstitial, adm_tech], properties={}}, SELECT cast(4 as INT), cast(600084 as BIGINT), '__dt', cast(COUNT(1) as BIGINT), cast(COUNT(1) * 8 as BIGINT), hex(hll_serialize(IFNULL(hll_raw(`column_key`), hll_empty()))), cast(COUNT(1) - COUNT(`column_key`) as BIGINT), IFNULL(MAX(`column_key`), ''), IFNULL(MIN(`column_key`), '') FROM (select `__dt` as column_key from `rtb`.`rtb_oks_conv_report` partition `p2025031000_600066`) tt

com.starrocks.sql.analyzer.SemanticException: Getting analyzing error. Detail message: Unknown partition 'p2025031000_600066' in table 'rtb_oks_conv_report'.

at com.starrocks.sql.analyzer.QueryAnalyzer.resolveTable(QueryAnalyzer.java:1419)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.resolveTableRef(QueryAnalyzer.java:482)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitSelect(QueryAnalyzer.java:363)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitSelect(QueryAnalyzer.java:283)

at com.starrocks.sql.ast.SelectRelation.accept(SelectRelation.java:232)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.process(QueryAnalyzer.java:288)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitQueryRelation(QueryAnalyzer.java:303)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitQueryStatement(QueryAnalyzer.java:293)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitQueryStatement(QueryAnalyzer.java:283)

at com.starrocks.sql.ast.QueryStatement.accept(QueryStatement.java:70)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.process(QueryAnalyzer.java:288)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitSubquery(QueryAnalyzer.java:907)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitSubquery(QueryAnalyzer.java:283)

at com.starrocks.sql.ast.SubqueryRelation.accept(SubqueryRelation.java:66)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.process(QueryAnalyzer.java:288)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitSelect(QueryAnalyzer.java:370)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitSelect(QueryAnalyzer.java:283)

at com.starrocks.sql.ast.SelectRelation.accept(SelectRelation.java:232)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.process(QueryAnalyzer.java:288)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitQueryRelation(QueryAnalyzer.java:303)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitQueryStatement(QueryAnalyzer.java:293)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitQueryStatement(QueryAnalyzer.java:283)

at com.starrocks.sql.ast.QueryStatement.accept(QueryStatement.java:70)

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.process(QueryAnalyzer.java:288)

at com.starrocks.sql.analyzer.QueryAnalyzer.analyze(QueryAnalyzer.java:121)

at com.starrocks.sql.analyzer.Analyzer$AnalyzerVisitor.visitQueryStatement(Analyzer.java:446)

at com.starrocks.sql.analyzer.Analyzer$AnalyzerVisitor.visitQueryStatement(Analyzer.java:176)

at com.starrocks.sql.ast.QueryStatement.accept(QueryStatement.java:70)

at com.starrocks.sql.ast.AstVisitor.visit(AstVisitor.java:80)

at com.starrocks.sql.analyzer.Analyzer.analyze(Analyzer.java:173)

at com.starrocks.sql.StatementPlanner.analyzeStatement(StatementPlanner.java:191)

at com.starrocks.sql.StatementPlanner.plan(StatementPlanner.java:113)

at com.starrocks.statistic.StatisticExecutor.executeDQL(StatisticExecutor.java:491)

at com.starrocks.statistic.StatisticExecutor.executeStatisticDQL(StatisticExecutor.java:448)

at com.starrocks.statistic.FullStatisticsCollectJob.collectStatisticSync(FullStatisticsCollectJob.java:192)

at com.starrocks.statistic.FullStatisticsCollectJob.collect(FullStatisticsCollectJob.java:145)

at com.starrocks.statistic.StatisticExecutor.collectStatistics(StatisticExecutor.java:351)

at com.starrocks.statistic.StatisticsCollectionTrigger.lambda$execute$0(StatisticsCollectionTrigger.java:191)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:515)

at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)

at java.base/java.lang.Thread.run(Thread.java:829)

2025-03-10 01:24:00.977Z WARN (analyze-task-concurrency-pool-2|1362) [StatisticExecutor.collectStatistics():354] execute statistics job failed: FullStatisticsCollectJob{type=FULL, scheduleType=ONCE, db=com.starrocks.catalog.Database@2bb6, table=Table [id=545234, name=rtb_oks_conv_report, type=CLOUD_NATIVE], partitionIdList=[600084], columnNames=[__dt, __time, tid, oks_cid, exchange_id, country, bundle, position, billing_type, interstitial, adm_tech], properties={}}

com.starrocks.sql.analyzer.SemanticException: Getting analyzing error. Detail message: Unknown partition 'p2025031000_600066' in table 'rtb_oks_conv_report'.

at com.starrocks.sql.analyzer.QueryAnalyzer.resolveTable(QueryAnalyzer.java:1419)