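Kerberos authentication for external catalogs currently reads the machine's kinit credentials. When the local TGT expires and we run kinit again, the service has to be restarted before it picks up the new TGT. Is there a better operational approach than restarting the service?

Answering my own question, conclusion first: when an external catalog uses Kerberos authentication, the service does not need to be restarted after the Kerberos TGT expires.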

StarRocks community edition 3.2.4, Hadoop 3.3.6

FE nodes

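At least one catalog with Ranger must exist, and it must use the same keytab as the metastore. If so, relogin happens automatically after the TGT expires, and FE does not need a restart after TGT expiry.

In the following two cases, relogin still fails after the TGT expires, even if kinit is run again:

- Only catalogs without Ranger have been created

- A catalog with Ranger has been created, but it uses a different keytab from the metastore

Method 1: explicitly set the metastore keytab path and principal (see the BE/CN configuration). Ranger's keytab and principal are already set in the FE configuration file (ranger_spnego_kerberos_principal, ranger_spnego_kerberos_keytab).

Method 2: add the startup parameter -Dhadoop.kerberos.keytab.login.autorenewal.enabled=true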

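BE/CN nodes

BE/CN nodes need no special configuration. Once kinit is run again, the retry logic in the Hadoop client or the TGT Renewer logs in again after the TGT expires, with no service restart. However, a crontab job must re-run kinit within the TGT lifetime (ticket_lifetime).

If you would rather not set up an extra crontab job, you can set environment variables to enable relogin: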

export KRB5KEYTAB=/tmp/xxx.keytab

export KRB5PRINCIPAL=xxx@xxxx

Investigating how Kerberos authentication works

BE/CN

After the TGT expires, BE/CN nodes attempt a relogin in the following two places:

// The first is the TGT Renewer

ts=2024-04-19 16:37:29;thread_name=TGT Renewer for alluxio@HADOOP.QIYI.COM;id=18;is_daemon=true;priority=5;TCCL=sun.misc.Launcher$AppClassLoader@764c12b6

@org.apache.hadoop.security.UserGroupInformation.reloginFromTicketCache()

at org.apache.hadoop.security.UserGroupInformation$TicketCacheRenewalRunnable.relogin(UserGroupInformation.java:1066)

at org.apache.hadoop.security.UserGroupInformation$AutoRenewalForUserCredsRunnable.run(UserGroupInformation.java:975)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

// The second is the Hadoop client's retry on failure, org.apache.hadoop.ipc.Client$Connection.handleSaslConnectionFailure

"main@1" prio=5 tid=0x1 nid=NA runnable

java.lang.Thread.State: RUNNABLE

at org.apache.hadoop.security.UserGroupInformation.unprotectedRelogin(UserGroupInformation.java:1340)

at org.apache.hadoop.security.UserGroupInformation.relogin(UserGroupInformation.java:1309)

- locked <0x1288> (a java.util.Collections$SynchronizedSet)

at org.apache.hadoop.security.UserGroupInformation.reloginFromTicketCache(UserGroupInformation.java:1298)

at org.apache.hadoop.ipc.Client$Connection$1.run(Client.java:706)

at java.security.AccessController.executePrivileged(AccessController.java:807)

at java.security.AccessController.doPrivileged(AccessController.java:712)

at javax.security.auth.Subject.doAs(Subject.java:439)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1899)

at org.apache.hadoop.ipc.Client$Connection.handleSaslConnectionFailure(Client.java:693)

- locked <0x1289> (a org.apache.hadoop.ipc.Client$Connection)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:796)

at org.apache.hadoop.ipc.Client$Connection.access$3800(Client.java:347)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1632)

at org.apache.hadoop.ipc.Client.call(Client.java:1457)

at org.apache.hadoop.ipc.Client.call(Client.java:1410)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:258)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:139)

at jdk.proxy2.$Proxy26.getBlockLocations(Unknown Source:-1)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getBlockLocations(ClientNamenodeProtocolTranslatorPB.java:334)

at jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(NativeMethodAccessorImpl.java:-1)

at jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

at jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:568)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:433)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:166)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:158)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:96)

- locked <0x128a> (a org.apache.hadoop.io.retry.RetryInvocationHandler$Call)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:362)

at jdk.proxy2.$Proxy27.getBlockLocations(Unknown Source:-1)

at org.apache.hadoop.hdfs.DFSClient.callGetBlockLocations(DFSClient.java:900)

at org.apache.hadoop.hdfs.DFSClient.getLocatedBlocks(DFSClient.java:889)

at org.apache.hadoop.hdfs.DFSClient.getLocatedBlocks(DFSClient.java:878)

at org.apache.hadoop.hdfs.DFSClient.open(DFSClient.java:1046)

at org.apache.hadoop.hdfs.DistributedFileSystem$4.doCall(DistributedFileSystem.java:343)

at org.apache.hadoop.hdfs.DistributedFileSystem$4.doCall(DistributedFileSystem.java:339)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.open(DistributedFileSystem.java:356)

at jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(NativeMethodAccessorImpl.java:-1)

at jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

at jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:568)

at com.iqiyi.bigdata.qbfs.proxy.AbstractFsProxy.invokeMethod(AbstractFsProxy.java:33)

at com.iqiyi.bigdata.qbfs.proxy.DefaultFsProxy.invoke(DefaultFsProxy.java:25)

at com.iqiyi.bigdata.qbfs.proxy.FsProxy.invokeSingle(FsProxy.java:13)

at com.iqiyi.bigdata.qbfs.AbstractQBFSFileSystem.open(AbstractQBFSFileSystem.java:63)

at com.iqiyi.bigdata.qbfs.QBFS.open(QBFS.java:171)

The AutoRenewalForUserCredsRunnable is created when the FileSystem is initialized:

"main@1" prio=5 tid=0x1 nid=NA runnable

java.lang.Thread.State: RUNNABLE

at org.apache.hadoop.security.UserGroupInformation.spawnAutoRenewalThreadForUserCreds(UserGroupInformation.java:886)

at org.apache.hadoop.security.UserGroupInformation.getLoginUser(UserGroupInformation.java:680)

at org.apache.hadoop.security.UserGroupInformation.getCurrentUser(UserGroupInformation.java:579)

at org.apache.hadoop.fs.viewfs.ViewFileSystem.<init>(ViewFileSystem.java:279)

at jdk.internal.reflect.NativeConstructorAccessorImpl.newInstance0(NativeConstructorAccessorImpl.java:-1)

at jdk.internal.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:77)

at jdk.internal.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstanceWithCaller(Constructor.java:499)

at java.lang.reflect.Constructor.newInstance(Constructor.java:480)

at java.util.ServiceLoader$ProviderImpl.newInstance(ServiceLoader.java:789)

at java.util.ServiceLoader$ProviderImpl.get(ServiceLoader.java:729)

at java.util.ServiceLoader$3.next(ServiceLoader.java:1403)

at org.apache.hadoop.fs.FileSystem.loadFileSystems(FileSystem.java:3647)

- locked <0x23a> (a java.lang.Class)

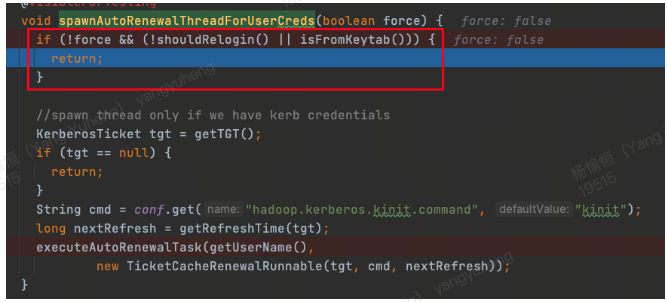

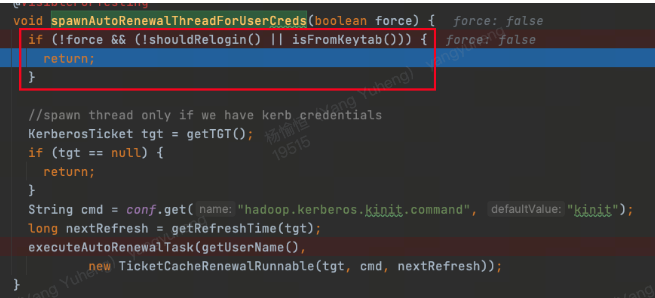

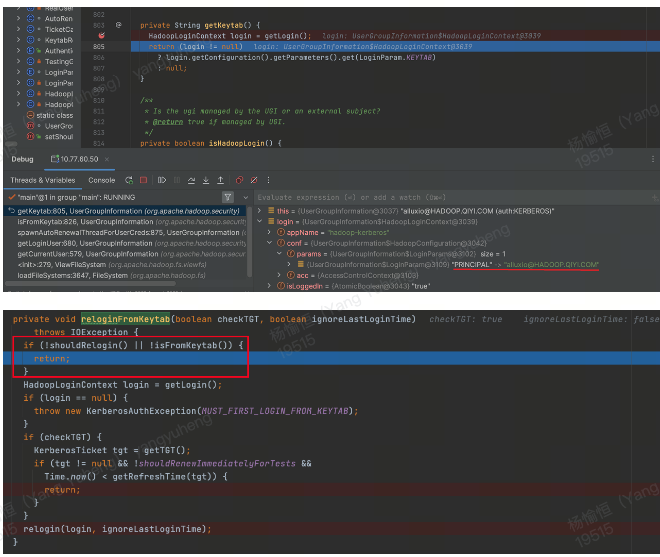

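The spawnAutoRenewalThreadForUserCreds(force) method starts a thread that automatically renews the Kerberos Ticket-Granting Ticket (TGT) for users who did not authenticate with a keytab file.

In org.apache.hadoop.ipc.Client.Connection#handleSaslConnectionFailure, the login path is chosen by whether the login is keytab-based, that is, whether a keytab path has been set: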
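The following minimal Java model is not Hadoop code. It makes the spawn condition and the renewal timing concrete, under an assumption about Hadoop 3.3.x. The assumption is that no thread is started for keytab logins unless forced, and none when there is no TGT. It also assumes the next refresh is scheduled at roughly 80% of the TGT lifetime, which corresponds to Hadoop's TICKET_RENEW_WINDOW. The class TgtRenewalModel and its parameters are hypothetical.

```java
import java.time.Duration;
import java.time.Instant;

/** Hypothetical, simplified model of the ticket-cache auto-renewal decision in UGI. */
final class TgtRenewalModel {
    // Assumption: mirrors Hadoop's TICKET_RENEW_WINDOW of 0.80.
    static final double RENEW_WINDOW = 0.80;

    private TgtRenewalModel() {}

    /**
     * @param force       corresponds to the "force" argument of spawnAutoRenewalThreadForUserCreds
     * @param canRelogin  hypothetical flag: Kerberos security is on and this UGI may relogin
     * @param fromKeytab  true when the login used a keytab (e.g. KRB5KEYTAB/KRB5PRINCIPAL are set)
     * @param hasTgt      true when a TGT is present in the login Subject
     */
    static boolean shouldSpawnRenewalThread(boolean force, boolean canRelogin,
                                            boolean fromKeytab, boolean hasTgt) {
        if (!force && (!canRelogin || fromKeytab)) {
            return false; // keytab logins are not renewed by this thread
        }
        return hasTgt; // without a TGT there is nothing to renew
    }

    /** Next refresh: RENEW_WINDOW of the way from the TGT start time to its end time. */
    static Instant refreshTime(Instant start, Instant end) {
        long lifetimeMillis = Duration.between(start, end).toMillis();
        return start.plusMillis((long) (lifetimeMillis * RENEW_WINDOW));
    }
}
```

The model matches the observation later in this thread. With KRB5KEYTAB/KRB5PRINCIPAL set, fromKeytab is true, so no AutoRenewalForUserCredsRunnable is created. In that case relogin relies on the IPC client's retry path instead.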

private synchronized void handleSaslConnectionFailure(

final int currRetries, final int maxRetries, final IOException ex,

final Random rand, final UserGroupInformation ugi) throws IOException,

InterruptedException {

ugi.doAs(new PrivilegedExceptionAction<Object>() {

@Override

public Object run() throws IOException, InterruptedException {

final short MAX_BACKOFF = 5000;

closeConnection();

disposeSasl();

if (shouldAuthenticateOverKrb()) {

if (currRetries < maxRetries) {

LOG.debug("Exception encountered while connecting to the server {}", remoteId, ex);

// try re-login

if (UserGroupInformation.isLoginKeytabBased()) {

UserGroupInformation.getLoginUser().reloginFromKeytab();

} else if (UserGroupInformation.isLoginTicketBased()) {

UserGroupInformation.getLoginUser().reloginFromTicketCache();

}

// have granularity of milliseconds
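The relogin branch in the excerpt above reduces to a small decision. The hypothetical Java model below, SaslReloginModel, is a sketch of that decision and does not reproduce the full Hadoop method. It only shows which relogin call the branch picks for a given combination of flags.

```java
/** Hypothetical model of the relogin branch in Client.Connection#handleSaslConnectionFailure. */
final class SaslReloginModel {
    enum ReloginPath { KEYTAB, TICKET_CACHE, NONE }

    private SaslReloginModel() {}

    static ReloginPath choose(boolean authOverKerberos, int currRetries, int maxRetries,
                              boolean keytabBased, boolean ticketBased) {
        if (!authOverKerberos || currRetries >= maxRetries) {
            return ReloginPath.NONE; // this model does not attempt a relogin here
        }
        if (keytabBased) {
            return ReloginPath.KEYTAB;       // corresponds to reloginFromKeytab()
        }
        if (ticketBased) {
            return ReloginPath.TICKET_CACHE; // corresponds to reloginFromTicketCache()
        }
        return ReloginPath.NONE;
    }
}
```

In the kinit-only setup, ticketBased is true, so the client goes through reloginFromTicketCache. That call only succeeds once kinit has written a fresh TGT into the ticket cache.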

Both reloginFromKeytab and reloginFromTicketCache end up in the login performed by org.apache.hadoop.security.UserGroupInformation.unprotectedRelogin.

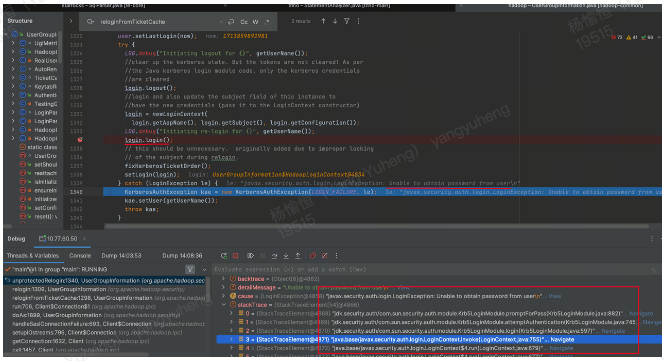

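If nothing is done after the TGT expires, relogin fails in both places. login.login() in org.apache.hadoop.security.UserGroupInformation#unprotectedRelogin throws javax.security.auth.login.LoginException: Unable to obtain password from user

When Krb5LoginModule attempts a login, it tries the following methods in the order given by its configuration:

- If a keytab file path and a principal (the Kerberos authentication subject) are both specified, Krb5LoginModule tries to authenticate with the keytab file.
- If no keytab file path is specified, Krb5LoginModule tries to read the TGT (Ticket-Granting Ticket) from the Kerberos ticket cache and authenticate with it.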
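The sketch below shows how the two options above look in a plain JDK JAAS configuration for com.sun.security.auth.module.Krb5LoginModule. The class Krb5JaasConfig is hypothetical. Hadoop builds its own equivalent entries internally, and their exact option set is not reproduced here. The option names useKeyTab, keyTab, principal, storeKey, useTicketCache and doNotPrompt are the JDK module's documented options.

```java
import java.util.HashMap;
import java.util.Map;
import javax.security.auth.login.AppConfigurationEntry;
import javax.security.auth.login.AppConfigurationEntry.LoginModuleControlFlag;
import javax.security.auth.login.Configuration;

/** Hypothetical JAAS configuration choosing keytab login or ticket-cache login. */
final class Krb5JaasConfig extends Configuration {
    static final String KRB5_MODULE = "com.sun.security.auth.module.Krb5LoginModule";

    private final String keytab;    // may be null: fall back to the ticket cache
    private final String principal; // may be null

    Krb5JaasConfig(String keytab, String principal) {
        this.keytab = keytab;
        this.principal = principal;
    }

    static Map<String, String> options(String keytab, String principal) {
        Map<String, String> opts = new HashMap<>();
        opts.put("doNotPrompt", "true"); // never prompt for a password
        if (keytab != null && principal != null) {
            opts.put("useKeyTab", "true");  // keytab login: works after the TGT expires
            opts.put("keyTab", keytab);
            opts.put("principal", principal);
            opts.put("storeKey", "true");
        } else {
            opts.put("useTicketCache", "true"); // reuse the TGT written by kinit
        }
        return opts;
    }

    @Override
    public AppConfigurationEntry[] getAppConfigurationEntry(String name) {
        return new AppConfigurationEntry[] {
            new AppConfigurationEntry(KRB5_MODULE, LoginModuleControlFlag.REQUIRED,
                    options(keytab, principal))
        };
    }
}
```

An instance could be passed to new LoginContext(name, null, null, new Krb5JaasConfig(...)). With doNotPrompt set and only an expired ticket in the cache, the ticket-cache variant is expected to fail with the same "Unable to obtain password from user" error quoted above.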

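At this point, running kinit manually and then logging in again succeeds. A scheduled kinit job is therefore all that is needed.

If you would rather not set up an extra crontab job, you can set environment variables to enable relogin. See the FE section for how this works.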
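The approach described here is a crontab job. The sketch below swaps in a different technique for readers who want the schedule inside a JVM process: a ScheduledExecutorService that re-runs "kinit -kt KEYTAB PRINCIPAL" at a fixed rate. PeriodicKinit is hypothetical and assumes an MIT-style kinit on the PATH. Running at half the ticket_lifetime is an arbitrary safety margin, not a recommended value. A crontab entry remains the simpler option.

```java
import java.io.IOException;
import java.time.Duration;
import java.util.List;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

/** Hypothetical in-process alternative to a crontab entry that re-runs kinit. */
final class PeriodicKinit {
    private PeriodicKinit() {}

    static List<String> kinitCommand(String keytab, String principal) {
        return List.of("kinit", "-kt", keytab, principal);
    }

    /** Re-run well inside ticket_lifetime; half the lifetime is an arbitrary margin. */
    static Duration period(Duration ticketLifetime) {
        return ticketLifetime.dividedBy(2);
    }

    /** Starts the schedule immediately; the caller should shutdown() the executor when done. */
    static ScheduledExecutorService start(String keytab, String principal, Duration ticketLifetime) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        scheduler.scheduleAtFixedRate(() -> runKinit(keytab, principal),
                0, period(ticketLifetime).toMillis(), TimeUnit.MILLISECONDS);
        return scheduler;
    }

    private static void runKinit(String keytab, String principal) {
        try {
            Process process = new ProcessBuilder(kinitCommand(keytab, principal)).inheritIO().start();
            int exit = process.waitFor();
            if (exit != 0) {
                System.err.println("kinit exited with status " + exit);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt for the executor
        } catch (IOException e) {
            System.err.println("could not run kinit: " + e.getMessage());
        }
    }
}
```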

export KRB5KEYTAB=/data1/alluxio.keytab

export KRB5PRINCIPAL=alluxio@HADOOP.QIYI.COM

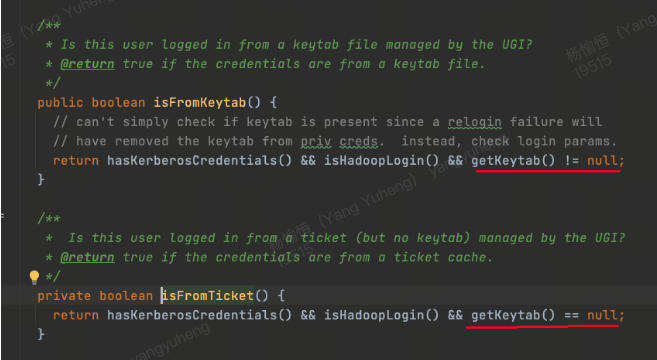

After the environment variables are set, AutoRenewalForUserCredsRunnable is no longer created because isFromKeytab is true. This does not affect relogin on BE/CN.

The following call chain catches javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: No valid credentials provided (Mechanism level: Failed to find any Kerberos tgt)] and attempts a relogin:

"Thread-12@4890" prio=5 tid=0x2f nid=NA runnable

java.lang.Thread.State: RUNNABLE

at org.apache.hadoop.security.UserGroupInformation.reloginFromKeytab(UserGroupInformation.java:1277)

at org.apache.hadoop.security.UserGroupInformation.reloginFromKeytab(UserGroupInformation.java:1258)

at org.apache.hadoop.security.UserGroupInformation.reloginFromKeytab(UserGroupInformation.java:1237)

at org.apache.hadoop.ipc.Client$Connection$1.run(Client.java:704)

at java.security.AccessController.executePrivileged(AccessController.java:807)

at java.security.AccessController.doPrivileged(AccessController.java:712)

at javax.security.auth.Subject.doAs(Subject.java:439)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1899)

at org.apache.hadoop.ipc.Client$Connection.handleSaslConnectionFailure(Client.java:693)

- locked <0x1332> (a org.apache.hadoop.ipc.Client$Connection)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:796)

at org.apache.hadoop.ipc.Client$Connection.access$3800(Client.java:347)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1632)

at org.apache.hadoop.ipc.Client.call(Client.java:1457)

at org.apache.hadoop.ipc.Client.call(Client.java:1410)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:258)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:139)

at jdk.proxy2.$Proxy26.getBlockLocations(Unknown Source:-1)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getBlockLocations(ClientNamenodeProtocolTranslatorPB.java:334)

at jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(NativeMethodAccessorImpl.java:-1)

at jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

at jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:568)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:433)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:166)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:158)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:96)

- locked <0x1333> (a org.apache.hadoop.io.retry.RetryInvocationHandler$Call)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:362)

at jdk.proxy2.$Proxy27.getBlockLocations(Unknown Source:-1)

at org.apache.hadoop.hdfs.DFSClient.callGetBlockLocations(DFSClient.java:900)

at org.apache.hadoop.hdfs.DFSClient.getLocatedBlocks(DFSClient.java:889)

at org.apache.hadoop.hdfs.DFSClient.getLocatedBlocks(DFSClient.java:878)

at org.apache.hadoop.hdfs.DFSClient.open(DFSClient.java:1046)

at org.apache.hadoop.hdfs.DistributedFileSystem$4.doCall(DistributedFileSystem.java:343)

at org.apache.hadoop.hdfs.DistributedFileSystem$4.doCall(DistributedFileSystem.java:339)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.open(DistributedFileSystem.java:356)

at jdk.internal.reflect.NativeMethodAccessorImpl.invoke0(NativeMethodAccessorImpl.java:-1)

at jdk.internal.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:77)

at jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:568)

at com.iqiyi.bigdata.qbfs.proxy.AbstractFsProxy.invokeMethod(AbstractFsProxy.java:33)

at com.iqiyi.bigdata.qbfs.proxy.DefaultFsProxy.invoke(DefaultFsProxy.java:25)

at com.iqiyi.bigdata.qbfs.proxy.FsProxy.invokeSingle(FsProxy.java:13)

at com.iqiyi.bigdata.qbfs.AbstractQBFSFileSystem.open(AbstractQBFSFileSystem.java:63)

at com.iqiyi.bigdata.qbfs.QBFS.open(QBFS.java:171)

FE nodes

HiveMetaStore

org.apache.hadoop.hive.metastore.RetryingMetaStoreClient attempts a relogin on its own:

ts=2024-04-19 16:16:27;thread_name=starrocks-mysql-nio-pool-0;id=be;is_daemon=true;priority=5;TCCL=jdk.internal.loader.ClassLoaders$AppClassLoader@5cb0d902

@org.apache.hadoop.security.UserGroupInformation.reloginFromKeytab()

at org.apache.hadoop.security.UserGroupInformation.checkTGTAndReloginFromKeytab(UserGroupInformation.java:1187)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.reloginExpiringKeytabUser(RetryingMetaStoreClient.java:332)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:174)

at jdk.proxy2.$Proxy50.getTable(null:-1)

at jdk.internal.reflect.GeneratedMethodAccessor76.invoke(null:-1)

at jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:568)

at com.starrocks.connector.hive.HiveMetaClient.callRPC(HiveMetaClient.java:161)

at com.starrocks.connector.hive.HiveMetaClient.callRPC(HiveMetaClient.java:150)

at com.starrocks.connector.hive.HiveMetaClient.getTable(HiveMetaClient.java:258)

at com.starrocks.connector.hive.HiveMetastore.getTableStatistics(HiveMetastore.java:223)

at com.starrocks.connector.hive.CachingHiveMetastore.loadTableStatistics(CachingHiveMetastore.java:375)

at com.google.common.cache.CacheLoader$FunctionToCacheLoader.load(CacheLoader.java:169)

at com.google.common.cache.CacheLoader$1.load(CacheLoader.java:192)

at com.google.common.cache.LocalCache$LoadingValueReference.loadFuture(LocalCache.java:3570)

at com.google.common.cache.LocalCache$Segment.loadSync(LocalCache.java:2312)

at com.google.common.cache.LocalCache$Segment.lockedGetOrLoad(LocalCache.java:2189)

at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2079)

at com.google.common.cache.LocalCache.get(LocalCache.java:4011)

at com.google.common.cache.LocalCache.getOrLoad(LocalCache.java:4034)

at com.google.common.cache.LocalCache$LocalLoadingCache.get(LocalCache.java:5010)

at com.google.common.cache.LocalCache$LocalLoadingCache.getUnchecked(LocalCache.java:5017)

at com.starrocks.connector.hive.CachingHiveMetastore.get(CachingHiveMetastore.java:623)

at com.starrocks.connector.hive.CachingHiveMetastore.getTableStatistics(CachingHiveMetastore.java:371)

at com.starrocks.connector.hive.HiveMetastoreOperations.getTableStatistics(HiveMetastoreOperations.java:271)

at com.starrocks.connector.hive.HiveStatisticsProvider.getTableStatistics(HiveStatisticsProvider.java:81)

at com.starrocks.connector.hive.HiveMetadata.getTableStatistics(HiveMetadata.java:268)

at com.starrocks.connector.CatalogConnectorMetadata.getTableStatistics(CatalogConnectorMetadata.java:150)

at com.starrocks.server.MetadataMgr.lambda$getTableStatistics$9(MetadataMgr.java:463)

at java.util.Optional.map(Optional.java:260)

at com.starrocks.server.MetadataMgr.getTableStatistics(MetadataMgr.java:463)

at com.starrocks.server.MetadataMgr.getTableStatistics(MetadataMgr.java:477)

at com.starrocks.sql.optimizer.statistics.StatisticsCalculator.computeHMSTableScanNode(StatisticsCalculator.java:453)

at com.starrocks.sql.optimizer.statistics.StatisticsCalculator.visitLogicalHiveScan(StatisticsCalculator.java:431)

at com.starrocks.sql.optimizer.statistics.StatisticsCalculator.visitLogicalHiveScan(StatisticsCalculator.java:167)

at com.starrocks.sql.optimizer.operator.logical.LogicalHiveScanOperator.accept(LogicalHiveScanOperator.java:78)

at com.starrocks.sql.optimizer.statistics.StatisticsCalculator.estimatorStats(StatisticsCalculator.java:183)

at com.starrocks.sql.optimizer.task.DeriveStatsTask.execute(DeriveStatsTask.java:57)

at com.starrocks.sql.optimizer.task.SeriallyTaskScheduler.executeTasks(SeriallyTaskScheduler.java:65)

at com.starrocks.sql.optimizer.Optimizer.memoOptimize(Optimizer.java:724)

at com.starrocks.sql.optimizer.Optimizer.optimizeByCost(Optimizer.java:223)

at com.starrocks.sql.optimizer.Optimizer.optimize(Optimizer.java:146)

at com.starrocks.sql.StatementPlanner.createQueryPlan(StatementPlanner.java:187)

at com.starrocks.sql.StatementPlanner.plan(StatementPlanner.java:130)

at com.starrocks.sql.StatementPlanner.plan(StatementPlanner.java:87)

at com.starrocks.qe.StmtExecutor.execute(StmtExecutor.java:520)

at com.starrocks.qe.ConnectProcessor.handleQuery(ConnectProcessor.java:395)

at com.starrocks.qe.ConnectProcessor.dispatch(ConnectProcessor.java:589)

at com.starrocks.qe.ConnectProcessor.processOnce(ConnectProcessor.java:883)

at com.starrocks.mysql.nio.ReadListener.lambda$handleEvent$0(ReadListener.java:69)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1136)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)

at java.lang.Thread.run(Thread.java:833)

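However, relogin keeps failing. FE created its TGT via kinit on the local machine, and no keytab path was set explicitly. isFromKeytab is therefore false, and relogin is always skipped. This is also why running kinit again does not help.

The next step is to pass the keytab path and principal without modifying any code: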
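The guard that causes this silent skip can be modeled in a few lines. KeytabReloginGuard is a hypothetical, simplified stand-in for the check at the top of checkTGTAndReloginFromKeytab(), not the Hadoop implementation. It assumes two things: non-keytab logins return immediately, and a keytab login only relogs in once the TGT refresh time has passed or no TGT is present.

```java
import java.time.Instant;

/** Hypothetical, simplified model of the guard in checkTGTAndReloginFromKeytab(). */
final class KeytabReloginGuard {
    private KeytabReloginGuard() {}

    /**
     * @param fromKeytab      false for a kinit (ticket cache) login, as on FE here
     * @param tgtRefreshTime  refresh time of the current TGT, or null if no TGT is present
     * @param now             current time
     */
    static boolean willRelogin(boolean fromKeytab, Instant tgtRefreshTime, Instant now) {
        if (!fromKeytab) {
            return false; // kinit-based login: skipped no matter how stale the TGT is
        }
        // No TGT, or the refresh time has been reached: relogin from the keytab.
        return tgtRefreshTime == null || !now.isBefore(tgtRefreshTime);
    }
}
```

Under this model, the only way to make RetryingMetaStoreClient's relogin effective is to make fromKeytab true, by supplying a keytab and principal.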

org.apache.hadoop.security.UserGroupInformation.LoginParams#getDefaults

static LoginParams getDefaults() {

LoginParams params = new LoginParams();

params.put(LoginParam.PRINCIPAL, System.getenv("KRB5PRINCIPAL"));

params.put(LoginParam.KEYTAB, System.getenv("KRB5KEYTAB"));

params.put(LoginParam.CCACHE, System.getenv("KRB5CCNAME"));

return params;

}
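The environment-variable lookup above can be mirrored in plain Java, with the environment injected so the lookup can be exercised without changing the real process environment. LoginDefaults is hypothetical. Unset variables are skipped in this sketch, and isKeytabLogin reflects the observation in this thread that setting KRB5KEYTAB and KRB5PRINCIPAL makes the login keytab-based. That helper is not an exact copy of Hadoop's decision logic.

```java
import java.util.EnumMap;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical mirror of LoginParams.getDefaults(): login parameters come from env vars. */
final class LoginDefaults {
    enum Param { PRINCIPAL, KEYTAB, CCACHE }

    private LoginDefaults() {}

    static Map<Param, String> fromEnv(Function<String, String> env) {
        Map<Param, String> params = new EnumMap<>(Param.class);
        putIfSet(params, Param.PRINCIPAL, env.apply("KRB5PRINCIPAL"));
        putIfSet(params, Param.KEYTAB, env.apply("KRB5KEYTAB"));
        putIfSet(params, Param.CCACHE, env.apply("KRB5CCNAME"));
        return params;
    }

    private static void putIfSet(Map<Param, String> params, Param key, String value) {
        if (value != null) {
            params.put(key, value); // unset variables leave the parameter absent
        }
    }

    /** Simplification: a keytab plus a principal is treated as a keytab-based login. */
    static boolean isKeytabLogin(Map<Param, String> params) {
        return params.containsKey(Param.KEYTAB) && params.containsKey(Param.PRINCIPAL);
    }
}
```

In a real process the lookup would be LoginDefaults.fromEnv(System::getenv). Because the values are read from the environment, the export lines below must be in effect in the shell that starts FE/BE/CN.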

Set the environment variables:

export KRB5KEYTAB=/data1/alluxio.keytab

export KRB5PRINCIPAL=alluxio@HADOOP.QIYI.COM

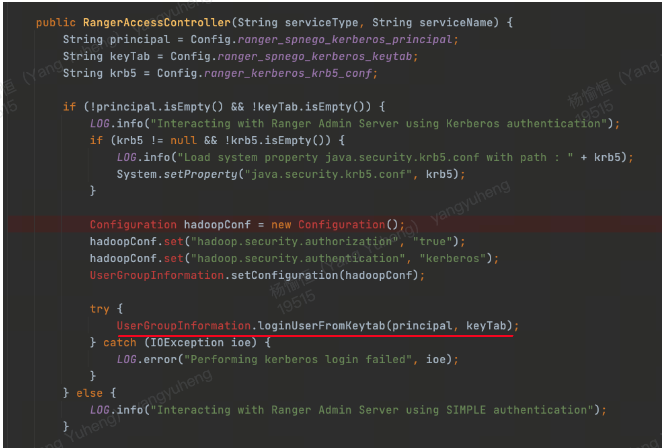

Another option is to create a catalog with Ranger that uses the same keytab as the metastore. This also lets relogin succeed, as detailed below.

Ranger

org.apache.ranger.admin.client.RangerAdminRESTClient handles renewal itself:

ts=2024-04-19 16:06:16;thread_name=PolicyRefresher(serviceName=bdxs-t1_hive_ranger)-192;id=c0;is_daemon=true;priority=5;TCCL=jdk.internal.loader.ClassLoaders$AppClassLoader@5cb0d902

@org.apache.hadoop.security.UserGroupInformation.reloginFromKeytab()

at org.apache.hadoop.security.UserGroupInformation.checkTGTAndReloginFromKeytab(UserGroupInformation.java:1187)

at org.apache.ranger.audit.provider.MiscUtil.getUGILoginUser(MiscUtil.java:529)

at org.apache.ranger.admin.client.RangerAdminRESTClient.getServicePoliciesIfUpdatedWithCred(RangerAdminRESTClient.java:840)

at org.apache.ranger.admin.client.RangerAdminRESTClient.getServicePoliciesIfUpdated(RangerAdminRESTClient.java:146)

at org.apache.ranger.plugin.util.PolicyRefresher.loadPolicyfromPolicyAdmin(PolicyRefresher.java:308)

at org.apache.ranger.plugin.util.PolicyRefresher.loadPolicy(PolicyRefresher.java:247)

at org.apache.ranger.plugin.util.PolicyRefresher.run(PolicyRefresher.java:209)

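If a catalog with Ranger is configured, its policies are refreshed every minute. Once the TGT expires, each refresh also attempts a keytab relogin.

Because Ranger's keytab path is set explicitly in the FE configuration file, reloginFromKeytab succeeds.

We also have a Hive catalog. Because we are on version 3.0.9, our only option was a crontab job that runs kinit periodically, refreshing the credentials every 6 hours. Version 3.1.10 already supports automatic renewal, and we have verified this in our test environment.

We are using StarRocks community edition 3.2.4 with Hadoop 3.3.6. From what we can see, FE, BE, and CN nodes can all renew automatically once configured, without restarting the cluster. This capability comes from org.apache.hadoop.security.UserGroupInformation in Hadoop 3.3.6. Is this the same mechanism as the automatic renewal in 3.1.10 that you mentioned? Thanks for clarifying.

It should be. 3.1.10 also uses the Hadoop 3.3 packages.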