【StarRocks版本】3.1.7

【集群规模】例如:1fe(1 follower)+1be(fe与be混部)

【机器信息】96C、128G、4TSSD

【详述】订单详情表的数据量较大(4亿五千万条),使用几万订单id数据去join的耗时较长,已参考官网join加速案例,将字符串id映射为int id

【执行语句】

SELECT LEFT

( purchase_date, 10 ) AS DATE,

sum( quantity ) AS amount,

price

from

order_product_int_tmp a

right join orders_int b ON a.order_id_int = b.order_id_int

where account = “PT” and order_channel = “” and sales_channel = “Amazon.com” and purchase_date >= “2018-02-12” and purchase_date < “2022-03-12” and order_status in (“Shipped”, “Pending”, “Unshipped”)

GROUP BY

LEFT ( purchase_date, 10 ),price;

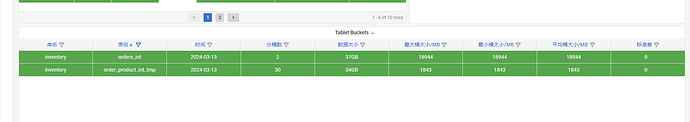

RightJoin 网络Shuffle左表的数据量太大了

order_id_int列是分桶列吗?

建表语句如下

CREATE TABLE order_product_int_tmp (

order_item_id varchar(65533) NOT NULL DEFAULT “” COMMENT “”,

order_id_int bigint(20) NOT NULL AUTO_INCREMENT COMMENT “”,

…

INDEX index1 (order_item_id) USING BITMAP,

INDEX index2 (amazon_order_id) USING BITMAP,

INDEX index3 (seller_sku) USING BITMAP

) ENGINE=OLAP

PRIMARY KEY(order_item_id)

DISTRIBUTED BY HASH(order_item_id) BUCKETS 30

PROPERTIES (

“replication_num” = “1”,

“bloom_filter_columns” = “order_item_id, seller_sku, amazon_order_id”,

“in_memory” = “false”,

“enable_persistent_index” = “true”,

“replicated_storage” = “true”,

“compression” = “LZ4”

);

CREATE TABLE orders_int (

account varchar(65533) NOT NULL DEFAULT “” COMMENT “”,

amazon_order_id varchar(65533) NOT NULL DEFAULT “” COMMENT “”,

order_id_int bigint(20) NOT NULL AUTO_INCREMENT COMMENT “”

…

) ENGINE=OLAP

PRIMARY KEY(account, amazon_order_id, order_id_int)

DISTRIBUTED BY HASH(account, amazon_order_id, order_id_int)

PROPERTIES (

“replication_num” = “1”,

“in_memory” = “false”,

“enable_persistent_index” = “true”,

“replicated_storage” = “true”,

“compression” = “LZ4”

);

对 order_product_int_tmp表进行过滤后的数据为12w和 orders_int过滤后为53w的数据join需要2秒多的时间,老师,请教下哪里还可以优化?

这是对应的profile:左表12w右表53w的profile.txt (100.0 KB)

order_id_int作为分桶列试试

先改下表结构,然后join后面加个[BUCKET]试试。不过你们只有一个be,卡在网络shuffle,也不合理。先这样试试把

好的,我这边尝试一下

有效果吗?

有一点效果,但是是稳定在1.5秒左右,但是效果还是达不到要求,现在对数据进行冷热分层,改为物化视图成大宽表的形式了,谢谢老师。

1.5s的profile,再发下?