To help locate your issue faster, please provide the following information. Thank you.

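【Details】I set up a StarRocks shared-data (storage-compute separated) cluster backed by HDFS, and creating a table fails with the following error: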

ERROR 1064 (HY000): Unexpected exception: fail to create tablet: 11113: [Internal error: starlet err Create hdfs root dir ‘/user/starrocks/8b554de3-6dac-4623-95d9-0bbaeee77123/db11296/14014/14013’ error: Unknown error 255: Unknown error 255]

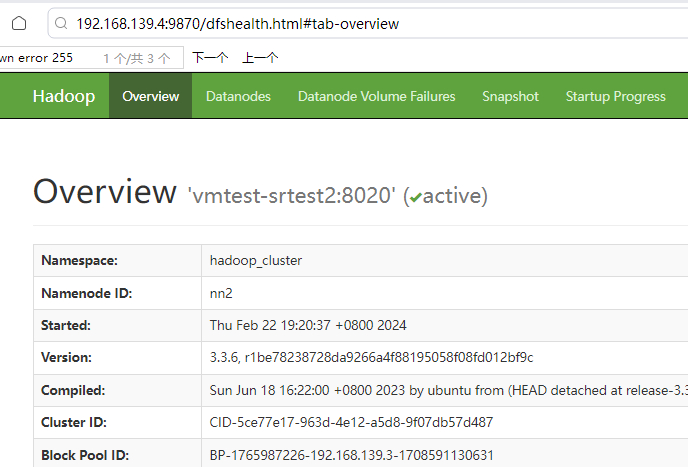

【Background】Hadoop version 3.3.6, with two NameNodes in a high-availability deployment (active: 192.168.139.4:8020, standby: 192.168.139.3:8020)

【Business impact】Test environment; no impact

【Shared-data (storage-compute separation)?】Yes

【StarRocks version】3.1.8

【Cluster size】1 FE + 3 BE (FE and BE co-located)

【Machine specs】8 cores / 16 GB RAM / gigabit network

【Contact】StarRocks Community Group 5 - 思变 [1412195108@qq.com]
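The Background field describes an HA pair in which only the active NameNode accepts writes. Below is a minimal Python sketch for checking which node currently holds the active role. It assumes each NameNode's web UI is reachable on the Hadoop 3.x default HTTP port 9870 (an assumption; check `dfs.namenode.http-address`) and exposes the standard `/jmx` servlet with the `NameNodeStatus` bean. `parse_namenode_state`, `fetch_namenode_state` and `report` are hypothetical helper names, not part of any StarRocks or Hadoop API.

```python
import json
import urllib.request

# Assumption: Hadoop 3.x default NameNode HTTP port; override if dfs.namenode.http-address differs.
DEFAULT_HTTP_PORT = 9870
JMX_QUERY = "/jmx?qry=Hadoop:service=NameNode,name=NameNodeStatus"


def parse_namenode_state(jmx_json: str) -> str:
    """Return the HA state (e.g. "active" or "standby") from a NameNodeStatus JMX response."""
    for bean in json.loads(jmx_json).get("beans", []):
        if "State" in bean:
            return bean["State"]
    raise ValueError("no bean with a 'State' attribute in the JMX response")


def fetch_namenode_state(host: str, http_port: int = DEFAULT_HTTP_PORT,
                         timeout: float = 5.0) -> str:
    """Query one NameNode's /jmx servlet over plain HTTP and return its HA state."""
    url = f"http://{host}:{http_port}{JMX_QUERY}"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_namenode_state(resp.read().decode("utf-8"))


def report(hosts) -> None:
    """Print the HA state of each host, or why it could not be determined."""
    for host in hosts:
        try:
            print(host, fetch_namenode_state(host))
        except (OSError, ValueError) as exc:  # unreachable, HTTP error, or unexpected JSON
            print(host, "unknown:", exc)
```

For example, `report(["192.168.139.4", "192.168.139.3"])` prints one line per NameNode. A node reporting `standby` will reject write operations sent directly to its address.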

【Attachments】

fe.conf configuration:

enable_load_volume_from_conf = true

run_mode = shared_data

cloud_native_meta_port = 6090

cloud_native_hdfs_url = hdfs://192.168.139.4:8020/user/starrocks/

cloud_native_storage_type = HDFS
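The fe.conf above points `cloud_native_hdfs_url` at a single NameNode by IP and port rather than at an HA nameservice ID. Below is a minimal sketch that parses those settings and reports the host, port and path the URL targets. It assumes fe.conf uses plain `key = value` lines. `parse_conf` and `hdfs_target` are hypothetical helpers written for illustration.

```python
from urllib.parse import urlparse

# The fe.conf lines from the post (blank lines dropped).
FE_CONF = """\
enable_load_volume_from_conf = true
run_mode = shared_data
cloud_native_meta_port = 6090
cloud_native_hdfs_url = hdfs://192.168.139.4:8020/user/starrocks/
cloud_native_storage_type = HDFS
"""


def parse_conf(text: str) -> dict:
    """Parse simple `key = value` lines, skipping blanks and '#' comments."""
    conf = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)  # split on the first '=' only
        conf[key.strip()] = value.strip()
    return conf


def hdfs_target(url: str) -> tuple:
    """Return (host, port, path) of an hdfs:// URL; port is None for a bare nameservice ID."""
    parsed = urlparse(url)
    if parsed.scheme != "hdfs":
        raise ValueError(f"not an hdfs:// URL: {url}")
    return parsed.hostname, parsed.port, parsed.path


conf = parse_conf(FE_CONF)
host, port, path = hdfs_target(conf["cloud_native_hdfs_url"])
print(host, port, path)
```

Run against the settings above, this prints the address listed as active in the Background field, `192.168.139.4 8020 /user/starrocks/`. A nameservice-style URL such as `hdfs://mycluster/user/starrocks/` (`mycluster` is a hypothetical ID) would instead yield a port of `None`.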

be.out:

org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.ipc.StandbyException): Operation category WRITE is not supported in state standby. Visit https://s.apache.org/sbnn-error

at org.apache.hadoop.hdfs.server.namenode.ha.StandbyState.checkOperation(StandbyState.java:108)

at org.apache.hadoop.hdfs.server.namenode.NameNode$NameNodeHAContext.checkOperation(NameNode.java:2107)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkOperation(FSNamesystem.java:1585)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:3433)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:1167)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:742)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine2.java:621)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine2.java:589)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine2.java:573)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1227)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:1094)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:1017)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1899)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:3048)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1567)

at org.apache.hadoop.ipc.Client.call(Client.java:1513)

at org.apache.hadoop.ipc.Client.call(Client.java:1410)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:258)

at org.apache.hadoop.ipc.ProtobufRpcEngine2$Invoker.invoke(ProtobufRpcEngine2.java:139)

at com.sun.proxy.$Proxy25.mkdirs(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.mkdirs(ClientNamenodeProtocolTranslatorPB.java:675)

at jdk.internal.reflect.GeneratedMethodAccessor1.invoke(Unknown Source)

at java.base/jdk.internal.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.base/java.lang.reflect.Method.invoke(Method.java:566)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:433)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:166)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:158)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:96)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:362)

at com.sun.proxy.$Proxy26.mkdirs(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.primitiveMkdir(DFSClient.java:2507)

at org.apache.hadoop.hdfs.DFSClient.mkdirs(DFSClient.java:2483)

at org.apache.hadoop.hdfs.DistributedFileSystem$27.doCall(DistributedFileSystem.java:1498)

at org.apache.hadoop.hdfs.DistributedFileSystem$27.doCall(DistributedFileSystem.java:1495)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirsInternal(DistributedFileSystem.java:1512)

at org.apache.hadoop.hdfs.DistributedFileSystem.mkdirs(DistributedFileSystem.java:1487)

at org.apache.hadoop.fs.FileSystem.mkdirs(FileSystem.java:2496)
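The first line of the be.out trace carries the server-side exception class wrapped in `RemoteException`. Here it is `StandbyException`, raised from `FSNamesystem.mkdirs`, meaning the NameNode that received the mkdir request was in standby state. Below is a small sketch, using only Python's `re` module, that pulls those class/message pairs out of a log. `find_remote_exceptions` is a hypothetical helper, and the pattern assumes the header format shown above.

```python
import re

# Matches Hadoop's "RemoteException(<server-side class>): <message>" header line.
REMOTE_EXC = re.compile(
    r"org\.apache\.hadoop\.ipc\.RemoteException\((?P<cls>[\w.$]+)\):\s*(?P<msg>.*)"
)


def find_remote_exceptions(log_text: str) -> list:
    """Return (exception class, message) pairs for every RemoteException header in the log."""
    return [(m.group("cls"), m.group("msg").strip()) for m in REMOTE_EXC.finditer(log_text)]


# The first two lines of the be.out excerpt above.
sample = (
    "org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.ipc.StandbyException): "
    "Operation category WRITE is not supported in state standby. "
    "Visit https://s.apache.org/sbnn-error\n"
    "at org.apache.hadoop.hdfs.server.namenode.ha.StandbyState.checkOperation(StandbyState.java:108)\n"
)
for cls, msg in find_remote_exceptions(sample):
    # Print only the short class name, e.g. "StandbyException".
    print(cls.rsplit(".", 1)[-1], "->", msg)
```

On a full log, a call such as `find_remote_exceptions(open("be.out", encoding="utf-8").read())` (file path assumed) lists every RemoteException header.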

be.INFO:

/build/starrocks/be/src/storage/protobuf_file.cpp:154 value_or_err_L154

/build/starrocks/be/src/storage/lake/tablet_manager.cpp:255 file.load(metadata.get(), fill_cache)

/build/starrocks/be/src/storage/lake/tablet_manager.cpp:273 value_or_err_L273

W0222 20:14:18.897930 22870 lake_service.cpp:530] Fail to get tablet metadata. tablet_id: 11785, version: 1, error: Internal error: starlet err Create hdfs root dir ‘/user/starrocks/8b554de3-6dac-4623-95d9-0bbaeee77123/db11001/11775/11774’ error: Unknown error 255: Unknown error 255

/build/starrocks/be/src/storage/protobuf_file.cpp:154 value_or_err_L154

/build/starrocks/be/src/storage/lake/tablet_manager.cpp:255 file.load(metadata.get(), fill_cache)

/build/starrocks/be/src/storage/lake/tablet_manager.cpp:273 value_or_err_L273

W0222 20:14:18.908003 22870 lake_service.cpp:530] Fail to get tablet metadata. tablet_id: 11786, version: 1, error: Internal error: starlet err Create hdfs root dir ‘/user/starrocks/8b554de3-6dac-4623-95d9-0bbaeee77123/db11001/11775/11774’ error: Unknown error 255: Unknown error 255

/build/starrocks/be/src/storage/protobuf_file.cpp:154 value_or_err_L154

/build/starrocks/be/src/storage/lake/tablet_manager.cpp:255 file.load(metadata.get(), fill_cache)

/build/starrocks/be/src/storage/lake/tablet_manager.cpp:273 value_or_err_L273

I0222 20:14:21.285480 19651 daemon.cpp:225] Current memory statistics: process(49999048), query_pool(0), load(0), metadata(0), compaction(0), schema_change(0), column_pool(0), page_cache(0), update(0), chunk_allocator(0), clone(0), consistency(0), datacache(0)
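Each warning in the be.INFO excerpt names a tablet and the HDFS root directory that starlet failed to create. Below is a sketch that groups tablet IDs by that directory. It assumes the glog line format and the curly quotes (U+2018/U+2019) around the path exactly as they appear above. `failing_root_dirs` is a hypothetical helper.

```python
import re
from collections import defaultdict

# Warning emitted by lake_service.cpp; the path is wrapped in curly (or straight) quotes.
TABLET_ERR = re.compile(
    r"Fail to get tablet metadata\. tablet_id: (?P<tablet>\d+), version: \d+, "
    r"error: .*?Create hdfs root dir [\u2018'](?P<path>[^\u2019']+)[\u2019']"
)


def failing_root_dirs(log_text: str) -> dict:
    """Group tablet IDs by the HDFS root dir that starlet failed to create."""
    by_path = defaultdict(list)
    for m in TABLET_ERR.finditer(log_text):
        by_path[m.group("path")].append(int(m.group("tablet")))
    return dict(by_path)


# Rebuild the two warning lines from the excerpt above.
ROOT = "/user/starrocks/8b554de3-6dac-4623-95d9-0bbaeee77123/db11001/11775/11774"
sample = "\n".join(
    f"W0222 20:14:18.{us} 22870 lake_service.cpp:530] Fail to get tablet metadata. "
    f"tablet_id: {tid}, version: 1, error: Internal error: starlet err "
    f"Create hdfs root dir \u2018{ROOT}\u2019 error: Unknown error 255: Unknown error 255"
    for us, tid in (("897930", 11785), ("908003", 11786))
)
print(failing_root_dirs(sample))
```

For the two warnings shown, both tablets (11785 and 11786) map to the same directory, `/user/starrocks/8b554de3-6dac-4623-95d9-0bbaeee77123/db11001/11775/11774`.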