Here is an earlier part of the log:

2024-02-14 04:40:00,029 ERROR (autovacuum-pool1-t1|175) [AutovacuumDaemon.vacuumPartitionImpl():194] failed to vacuum db_ods.amazon_order_info.p202312: A error occurred: errorCode=62 errorMessage:method request time out, please check ‘onceTalkTimeout’ property. current value is:3600000(MILLISECONDS) correlationId:12786253 timeout with bound channel =>[id: 0x5a138a24, L:/192.168.0.16:55522 - R:/192.168.0.14:8060]

2024-02-14 04:40:42,729 ERROR (autovacuum-pool1-t7|181) [AutovacuumDaemon.vacuumPartitionImpl():194] failed to vacuum db_ods.amazon_order_info.p202208: A error occurred: errorCode=62 errorMessage:method request time out, please check ‘onceTalkTimeout’ property. current value is:3600000(MILLISECONDS) correlationId:12786265 timeout with bound channel =>[id: 0xbe97fb1f, L:/192.168.0.16:54996 - R:/192.168.0.14:8060]

2024-02-14 04:41:42,229 ERROR (autovacuum-pool1-t4|178) [AutovacuumDaemon.vacuumPartitionImpl():194] failed to vacuum db_ods.amazon_order_info.p202209: A error occurred: errorCode=62 errorMessage:method request time out, please check ‘onceTalkTimeout’ property. current value is:3600000(MILLISECONDS) correlationId:12786274 timeout with bound channel =>[id: 0x2195a517, L:/192.168.0.16:54994 - R:/192.168.0.14:8060]

2024-02-14 04:42:15,129 ERROR (autovacuum-pool1-t5|179) [AutovacuumDaemon.vacuumPartitionImpl():194] failed to vacuum db_ods.amazon_order_info.p202211: A error occurred: errorCode=62 errorMessage:method request time out, please check ‘onceTalkTimeout’ property. current value is:3600000(MILLISECONDS) correlationId:12786277 timeout with bound channel =>[id: 0x8b91c37b, L:/192.168.0.16:54990 - R:/192.168.0.14:8060]

2024-02-14 04:43:53,629 ERROR (autovacuum-pool1-t2|176) [AutovacuumDaemon.vacuumPartitionImpl():194] failed to vacuum db_ods.amazon_order_info.p202310: A error occurred: errorCode=62 errorMessage:method request time out, please check ‘onceTalkTimeout’ property. current value is:3600000(MILLISECONDS) correlationId:12786280 timeout with bound channel =>[id: 0xe108f245, L:/192.168.0.16:55752 - R:/192.168.0.14:8060]

2024-02-14 04:51:40,129 ERROR (autovacuum-pool1-t8|182) [AutovacuumDaemon.vacuumPartitionImpl():194] failed to vacuum db_ods.amazon_order_info.p202307: A error occurred: errorCode=62 errorMessage:method request time out, please check ‘onceTalkTimeout’ property. current value is:3600000(MILLISECONDS) correlationId:12786283 timeout with bound channel =>[id: 0xbe97fb1f, L:/192.168.0.16:54996 - R:/192.168.0.14:8060]

2024-02-14 04:51:48,429 ERROR (autovacuum-pool1-t3|177) [AutovacuumDaemon.vacuumPartitionImpl():194] failed to vacuum db_ods.amazon_order_info.p202210: A error occurred: errorCode=62 errorMessage:method request time out, please check ‘onceTalkTimeout’ property. current value is:3600000(MILLISECONDS) correlationId:12786286 timeout with bound channel =>[id: 0x03f6fd94, L:/192.168.0.16:55754 - R:/192.168.0.14:8060]

2024-02-14 04:52:09,229 ERROR (autovacuum-pool1-t6|180) [AutovacuumDaemon.vacuumPartitionImpl():194] failed to vacuum db_ods.amazon_order_info.p202305: A error occurred: errorCode=62 errorMessage:method request time out, please check ‘onceTalkTimeout’ property. current value is:3600000(MILLISECONDS) correlationId:12786289 timeout with bound channel =>[id: 0x2bda0143, L:/192.168.0.16:54992 - R:/192.168.0.14:8060]
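All eight failures above have the same shape. Each autovacuum thread (t1 to t8) waits the full `onceTalkTimeout` (3600000 ms, i.e. one hour) on a request to 192.168.0.14:8060 and then gives up on one partition of `db_ods.amazon_order_info`. Below is a minimal Python sketch for pulling those fields out of a longer log. It assumes the line format is exactly as pasted here; the regex, its group names and the helper `summarize_vacuum_failures` are hypothetical, not part of StarRocks.

```python
import re
from collections import Counter

# Matches the AutovacuumDaemon timeout lines quoted above. The group names
# are illustrative labels, not StarRocks field names.
VACUUM_FAIL = re.compile(
    r"failed to vacuum (?P<partition>\S+): .*?"
    r"current value is:(?P<timeout_ms>\d+)\(MILLISECONDS\) "
    r"correlationId:(?P<corr>\d+) .*?"
    r"R:/(?P<remote>[\d.]+:\d+)\]"
)


def summarize_vacuum_failures(lines):
    """Return (failed partitions, count per remote endpoint, timeouts in seconds)."""
    partitions = []
    remotes = Counter()
    timeouts_s = set()
    for line in lines:
        m = VACUUM_FAIL.search(line)
        if not m:
            continue  # not an autovacuum timeout line
        partitions.append(m.group("partition"))
        remotes[m.group("remote")] += 1
        timeouts_s.add(int(m.group("timeout_ms")) // 1000)
    return partitions, remotes, timeouts_s
```

Passing an open log file (for example a hypothetical `fe.log` opened with `encoding="utf-8"`) works because the function only iterates over lines. On the excerpt above it reports a single remote endpoint and a single 3600 s timeout, which makes it easy to see that every failed request went to the same node.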

2024-02-14 05:02:48,993 WARN (grpc-default-executor-901|477503) [ShardManager.validateWorkerReportedReplicas():1100] shard [2045020, 2045023] not exist or have outdated info when update shard info from worker heartbeat, schedule remove from worker 3003.

2024-02-14 05:02:48,994 WARN (pool-27-thread-2|316) [ShardManager.updateShardReplicaInfoInternal():1070] shard [2045020, 2045023] not exist when update shard info from shard scheduler!

2024-02-14 05:02:49,389 WARN (grpc-default-executor-901|477503) [ShardManager.validateWorkerReportedReplicas():1100] shard [2045018, 2045021, 2045025, 2045030] not exist or have outdated info when update shard info from worker heartbeat, schedule remove from worker 3002.

2024-02-14 05:02:49,389 WARN (pool-27-thread-1|315) [ShardManager.updateShardReplicaInfoInternal():1070] shard [2045018, 2045021, 2045025, 2045030] not exist when update shard info from shard scheduler!

2024-02-14 05:02:52,495 WARN (grpc-default-executor-901|477503) [ShardManager.validateWorkerReportedReplicas():1100] shard [2045019, 2045022, 2045026, 2045028, 2045032, 2045033, 2045036, 2045040, 2045044, 2045047, 2045051, 2045054, 2045057, 2045061, 2045064, 2045068, 2045070, 2045076, 2045080, 2045083, 2045085, 2045091, 2045093] not exist or have outdated info when update shard info from worker heartbeat, schedule remove from worker 3001.

2024-02-14 05:02:52,496 WARN (pool-27-thread-2|316) [ShardManager.updateShardReplicaInfoInternal():1070] shard [2045019, 2045022, 2045026, 2045028, 2045032, 2045033, 2045036, 2045040, 2045044, 2045047, 2045051, 2045054, 2045057, 2045061, 2045064, 2045068, 2045070, 2045076, 2045080, 2045083, 2045085, 2045091, 2045093] not exist when update shard info from shard scheduler!

2024-02-14 05:02:59,010 WARN (grpc-default-executor-901|477503) [ShardManager.validateWorkerReportedReplicas():1100] shard [2045027, 2045029, 2045034, 2045037, 2045041, 2045043, 2045048, 2045049, 2045055, 2045058, 2045062, 2045065, 2045071, 2045072, 2045077, 2045078, 2045082, 2045086, 2045089, 2045092, 2045097, 2045100, 2045104, 2045107, 2045111, 2045114] not exist or have outdated info when update shard info from worker heartbeat, schedule remove from worker 3003.

2024-02-14 05:02:59,011 WARN (pool-27-thread-1|315) [ShardManager.updateShardReplicaInfoInternal():1070] shard [2045027, 2045029, 2045034, 2045037, 2045041, 2045043, 2045048, 2045049, 2045055, 2045058, 2045062, 2045065, 2045071, 2045072, 2045077, 2045078, 2045082, 2045086, 2045089, 2045092, 2045097, 2045100, 2045104, 2045107, 2045111, 2045114] not exist when update shard info from shard scheduler!

2024-02-14 05:02:59,405 WARN (grpc-default-executor-901|477503) [ShardManager.validateWorkerReportedReplicas():1100] shard [2045035, 2045039, 2045042, 2045046, 2045050, 2045053, 2045056, 2045060, 2045063, 2045067, 2045069, 2045075, 2045079, 2045081, 2045084, 2045088, 2045090, 2045095, 2045098, 2045102, 2045105, 2045109, 2045112] not exist or have outdated info when update shard info from worker heartbeat, schedule remove from worker 3002.

2024-02-14 05:02:59,406 WARN (pool-27-thread-2|316) [ShardManager.updateShardReplicaInfoInternal():1070] shard [2045035, 2045039, 2045042, 2045046, 2045050, 2045053, 2045056, 2045060, 2045063, 2045067, 2045069, 2045075, 2045079, 2045081, 2045084, 2045088, 2045090, 2045095, 2045098, 2045102, 2045105, 2045109, 2045112] not exist when update shard info from shard scheduler!

2024-02-14 05:03:02,511 WARN (grpc-default-executor-901|477503) [ShardManager.validateWorkerReportedReplicas():1100] shard [2045096, 2045099, 2045103, 2045106, 2045110, 2045113] not exist or have outdated info when update shard info from worker heartbeat, schedule remove from worker 3001.

2024-02-14 05:03:02,512 WARN (pool-27-thread-1|315) [ShardManager.updateShardReplicaInfoInternal():1070] shard [2045096, 2045099, 2045103, 2045106, 2045110, 2045113] not exist when update shard info from shard scheduler!
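Each `validateWorkerReportedReplicas` warning is immediately followed by an `updateShardReplicaInfoInternal` warning with the same shard ids. The first message says which worker (3001, 3002 or 3003) reported shards that the shard manager no longer knows about. A hedged Python sketch for collecting those ids per worker from a longer log, assuming the message text is exactly as above; `stale_shards_by_worker` is a hypothetical helper.

```python
import re
from collections import defaultdict

# Matches only the heartbeat-side warning, which names the worker at the end.
STALE_SHARDS = re.compile(
    r"validateWorkerReportedReplicas\(\):\d+\] shard \[(?P<ids>[\d, ]+)\] "
    r".*?schedule remove from worker (?P<worker>\d+)\."
)


def stale_shards_by_worker(lines):
    """Map worker id -> sorted shard ids scheduled for removal from that worker."""
    by_worker = defaultdict(set)
    for line in lines:
        m = STALE_SHARDS.search(line)
        if not m:
            continue  # skips the paired updateShardReplicaInfoInternal lines too
        # int() tolerates the leading space left by splitting "a, b, c" on ",".
        by_worker[int(m.group("worker"))].update(
            int(s) for s in m.group("ids").split(",")
        )
    return {w: sorted(ids) for w, ids in sorted(by_worker.items())}
```

Counting against the paired scheduler-side lines is deliberately avoided, since they repeat the same ids without naming a worker.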

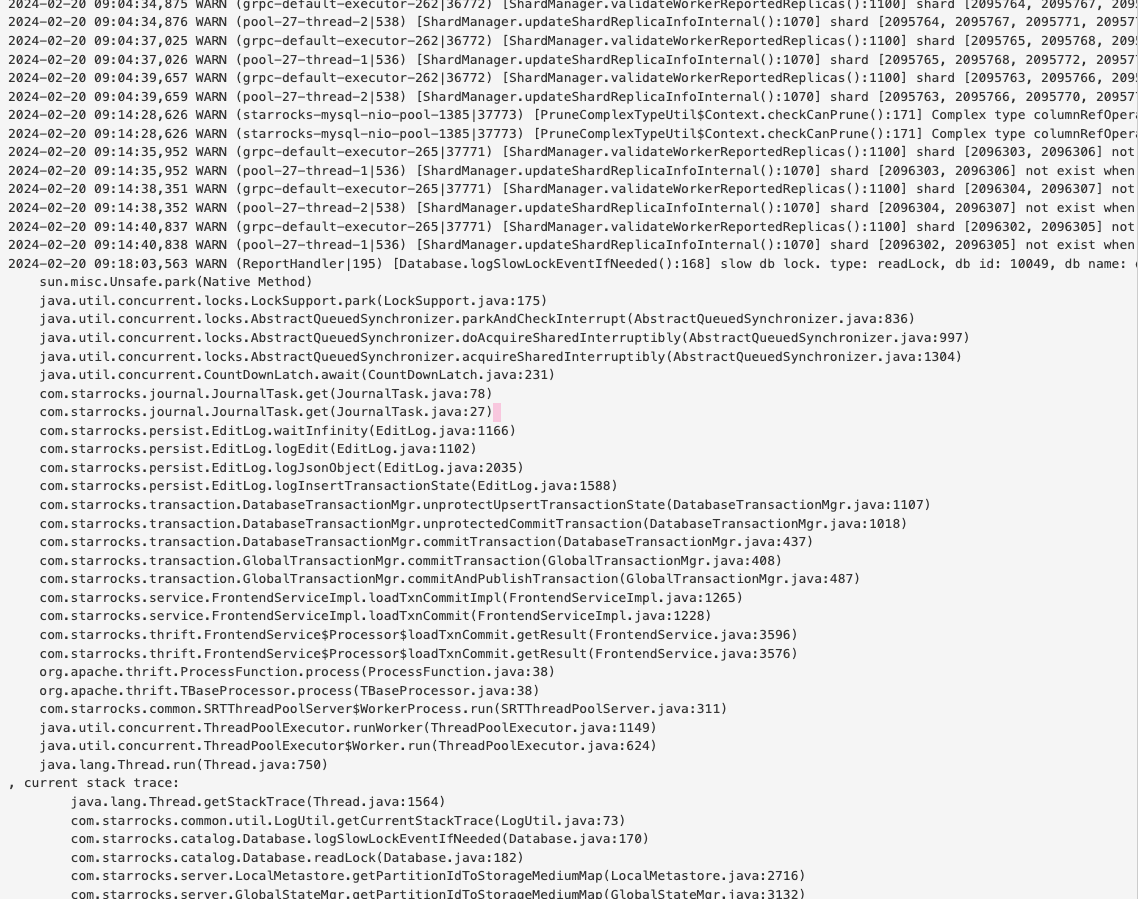

2024-02-14 05:05:16,384 WARN (starrocks-mysql-nio-pool-17817|483323) [Database.logSlowLockEventIfNeeded():168] slow db lock. type: tryWriteLock, db id: 11147, db name: db_dwd, wait time: 4036ms, former owner id: 483174, owner name: starrocks-mysql-nio-pool-17816, owner stack: dump thread: starrocks-mysql-nio-pool-17816, id: 483174

sun.misc.Unsafe.park(Native Method)

java.util.concurrent.locks.LockSupport.park(LockSupport.java:175)

java.util.concurrent.locks.AbstractQueuedSynchronizer.parkAndCheckInterrupt(AbstractQueuedSynchronizer.java:836)

java.util.concurrent.locks.AbstractQueuedSynchronizer.doAcquireSharedInterruptibly(AbstractQueuedSynchronizer.java:997)

java.util.concurrent.locks.AbstractQueuedSynchronizer.acquireSharedInterruptibly(AbstractQueuedSynchronizer.java:1304)

java.util.concurrent.CountDownLatch.await(CountDownLatch.java:231)

com.starrocks.journal.JournalTask.get(JournalTask.java:78)

com.starrocks.journal.JournalTask.get(JournalTask.java:27)

com.starrocks.persist.EditLog.waitInfinity(EditLog.java:1166)

com.starrocks.persist.EditLog.logEdit(EditLog.java:1102)

com.starrocks.persist.EditLog.logJsonObject(EditLog.java:2035)

com.starrocks.persist.EditLog.logInsertTransactionState(EditLog.java:1588)

com.starrocks.transaction.DatabaseTransactionMgr.unprotectUpsertTransactionState(DatabaseTransactionMgr.java:1107)

com.starrocks.transaction.DatabaseTransactionMgr.unprotectedCommitTransaction(DatabaseTransactionMgr.java:1018)

com.starrocks.transaction.DatabaseTransactionMgr.commitTransaction(DatabaseTransactionMgr.java:437)

com.starrocks.transaction.GlobalTransactionMgr.commitTransaction(GlobalTransactionMgr.java:408)

com.starrocks.transaction.GlobalTransactionMgr.commitAndPublishTransaction(GlobalTransactionMgr.java:487)

com.starrocks.qe.StmtExecutor.handleDMLStmt(StmtExecutor.java:1872)

com.starrocks.qe.StmtExecutor.handleDMLStmtWithProfile(StmtExecutor.java:1523)

com.starrocks.qe.StmtExecutor.execute(StmtExecutor.java:615)

com.starrocks.qe.ConnectProcessor.handleQuery(ConnectProcessor.java:363)

com.starrocks.qe.ConnectProcessor.dispatch(ConnectProcessor.java:477)

com.starrocks.qe.ConnectProcessor.processOnce(ConnectProcessor.java:753)

com.starrocks.mysql.nio.ReadListener.lambda$handleEvent$0(ReadListener.java:69)

com.starrocks.mysql.nio.ReadListener$$Lambda$929/1122474595.run(Unknown Source)

java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

java.lang.Thread.run(Thread.java:750)

, current stack trace:

java.lang.Thread.getStackTrace(Thread.java:1564)

com.starrocks.common.util.LogUtil.getCurrentStackTrace(LogUtil.java:73)

com.starrocks.catalog.Database.logSlowLockEventIfNeeded(Database.java:170)

com.starrocks.catalog.Database.tryWriteLock(Database.java:275)

com.starrocks.transaction.GlobalTransactionMgr.commitAndPublishTransaction(GlobalTransactionMgr.java:481)

com.starrocks.qe.StmtExecutor.handleDMLStmt(StmtExecutor.java:1872)

com.starrocks.qe.StmtExecutor.handleDMLStmtWithProfile(StmtExecutor.java:1523)

com.starrocks.qe.StmtExecutor.execute(StmtExecutor.java:615)

com.starrocks.qe.ConnectProcessor.handleQuery(ConnectProcessor.java:363)

com.starrocks.qe.ConnectProcessor.dispatch(ConnectProcessor.java:477)

com.starrocks.qe.ConnectProcessor.processOnce(ConnectProcessor.java:753)

com.starrocks.mysql.nio.ReadListener.lambda$handleEvent$0(ReadListener.java:69)

java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

java.lang.Thread.run(Thread.java:750)

2024-02-14 05:11:35,940 ERROR (JournalWriter|113) [BDBJEJournal.batchWriteCommit():422] failed to commit journal after retried 1 times! txn[] db[CloseSafeDatabase{db=32117065}]

com.sleepycat.je.rep.InsufficientAcksException: (JE 18.3.16) Transaction: -43035012 VLSN: 73,813,249, initiated at: 05:11:25. Insufficient acks for policy:SIMPLE_MAJORITY. Need replica acks: 1. Missing replica acks: 1. Timeout: 10000ms. FeederState=192.168.0.16_9010_1691045936256(1)[MASTER]
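The last exception is a BDB JE replication quorum failure. The master (`FeederState=192.168.0.16_9010_...[MASTER]`) wrote a journal transaction, but no replica acknowledged it within the 10000 ms timeout. Under the `SIMPLE_MAJORITY` ack policy, a commit needs a majority of the electable group, and the master counts toward that majority. The sketch below only does that arithmetic. It assumes the majority is `group_size // 2 + 1` including the master, and `simple_majority_replica_acks` is a hypothetical name, not JE's code.

```python
def simple_majority_replica_acks(electable_nodes: int) -> int:
    """Replica acks the master needs under a simple-majority policy.

    Assumes the master is one of the electable nodes and counts toward the
    majority, so it supplies one of the required acknowledgements itself.
    """
    if electable_nodes < 1:
        raise ValueError("group must contain at least the master")
    majority = electable_nodes // 2 + 1
    return majority - 1


for n in (1, 2, 3, 5):
    print(n, "electable nodes ->", simple_majority_replica_acks(n), "replica ack(s)")
```

Under this assumption, `Need replica acks: 1` is consistent with either a 2-node or a 3-node FE group. The log alone does not say which, but in both cases a single unresponsive follower is enough to make the commit wait for acks.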