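To help us locate your problem faster, please provide the following information. Thank you.

【Details】 I am using INSERT INTO ... SELECT to load data from Hive into a StarRocks (SR) cluster. The task is submitted as follows: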

SUBMIT /*+set_var(query_timeout=259200)*/ TASK task_tv_total_info_1201

AS

INSERT into `enlightent_daily`.`tv_total_info`

SELECT md5(concat(`name`, `channel`)) name_channel_md5,

`index`, `videoType`,`date`, `id`, `name`,

`channel`, `play_times`, `dayPlayTimes`,`up`, `dayUp`, `down`, `dayDown`,

`comment_count`, `totalComments`, `barrageCount`, `dayBarrageCount`,

`rating`, `fake`, `dayPlayTimesPredicted`, `playTimesPredicted`

FROM hive.mysql_prod_enlightent_daily.tv_total_info_oss_table

【Background】 The table holds about 1.5 TB of data.

【Business impact】

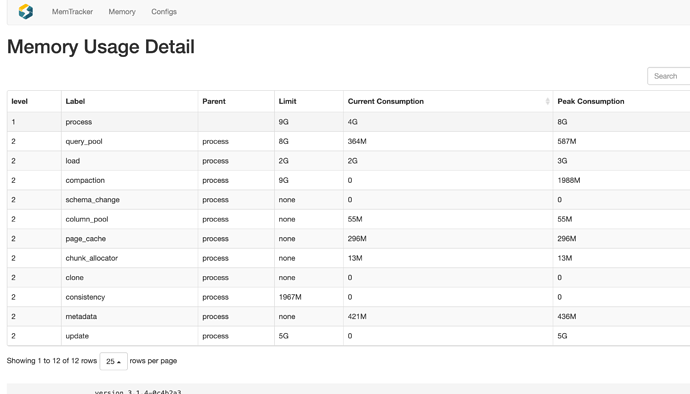

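【Shared-data (storage-compute separation)?】 Yes, the cluster runs in shared-data mode.

【StarRocks version】 e.g. 3.1.4

【Cluster size】 e.g. 1 FE + 2 BE (FE and BE co-located)

【Machine info】 Alibaba Cloud, 10 Gigabit network

【Contact】 Community group 17, 不知不觉

【Attachments】

- fe.log / be.INFO / relevant screenshots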

The FE warning log (fe.warn.log) contains no warning entries.

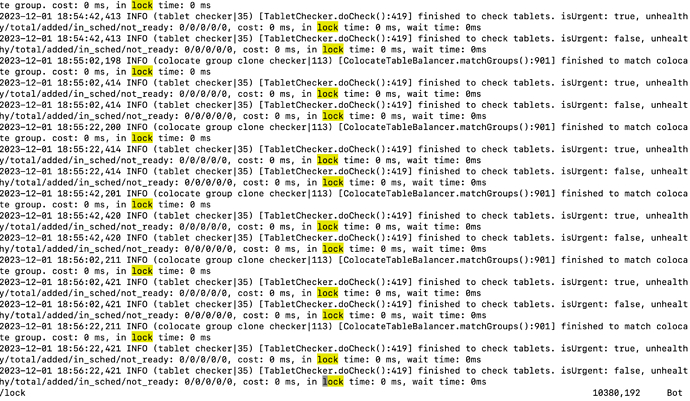

be.INFO reports the following warnings:

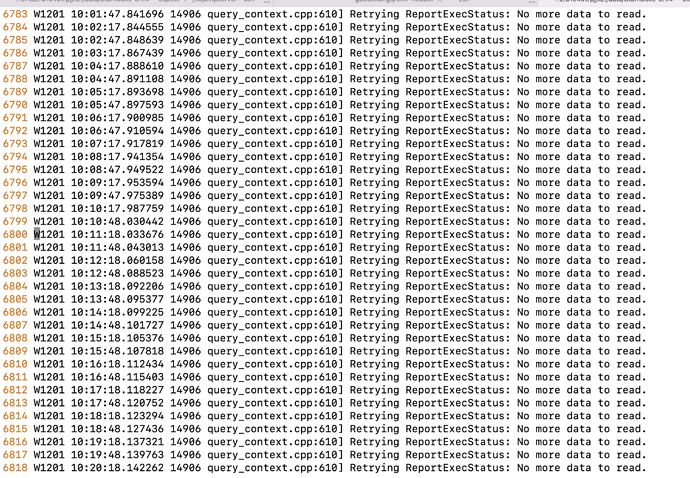

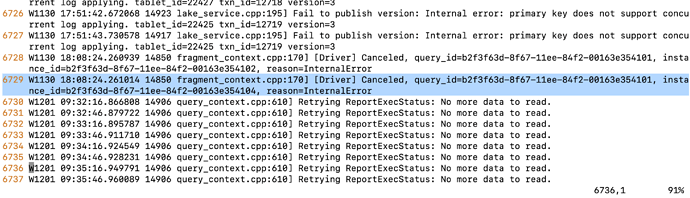

W1201 14:40:19.600549 14906 query_context.cpp:610] Retrying ReportExecStatus: No more data to read.

Task status information:

| Name | Value |
|---|---|
| JOB_ID | 22554 |
| LABEL | insert_5d0255e8-8fe9-11ee-84f2-00163e354101 |
| DATABASE_NAME | enlightent_daily |
| STATE | LOADING |
| PROGRESS | ETL:100%; LOAD:99% |
| TYPE | INSERT |
| PRIORITY | NORMAL |
| SCAN_ROWS | 0 |
| FILTERED_ROWS | 0 |
| UNSELECTED_ROWS | 0 |
| SINK_ROWS | 2906109860 |
| ETL_INFO | |
| TASK_INFO | resource:N/A; timeout(s):259200; max_filter_ratio:0.0 |
| CREATE_TIME | 2023-12-01 09:31:35 |
| ETL_START_TIME | 2023-12-01 09:31:37 |
| ETL_FINISH_TIME | 2023-12-01 09:31:37 |
| LOAD_START_TIME | 2023-12-01 09:31:37 |
| LOAD_FINISH_TIME | |
| JOB_DETAILS | {"All backends":{"5d0255e8-8fe9-11ee-84f2-00163e354101":[10034]},"FileNumber":0,"FileSize":0,"InternalTableLoadBytes":480064304611,"InternalTableLoadRows":2906109860,"ScanBytes":0,"ScanRows":0,"TaskNumber":1,"Unfinished backends":{"5d0255e8-8fe9-11ee-84f2-00163e354101":[10034]}} |
| ERROR_MSG | |
| TRACKING_URL | |
| TRACKING_SQL | |
| REJECTED_RECORD_PATH | |
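To make the JOB_DETAILS value in the status above easier to read, here is a small Python sketch that parses it and lists the backends that have not finished, together with the volume loaded so far. The JSON is copied from the status output with the curly quotes replaced by straight quotes. The helper name `summarize` is only illustrative and is not part of StarRocks.

```python
import json

# JOB_DETAILS copied from the task status above
# (curly quotes replaced with straight quotes so it parses as JSON).
job_details = json.loads(
    '{"All backends":{"5d0255e8-8fe9-11ee-84f2-00163e354101":[10034]},'
    '"FileNumber":0,"FileSize":0,'
    '"InternalTableLoadBytes":480064304611,"InternalTableLoadRows":2906109860,'
    '"ScanBytes":0,"ScanRows":0,"TaskNumber":1,'
    '"Unfinished backends":{"5d0255e8-8fe9-11ee-84f2-00163e354101":[10034]}}'
)


def summarize(details):
    """Return (unfinished backends per load id, GiB loaded, average bytes per row).

    Hypothetical helper for reading the output; it only reshapes the fields
    that already appear in JOB_DETAILS.
    """
    unfinished = {
        load_id: backends
        for load_id, backends in details["Unfinished backends"].items()
        if backends  # keep only load ids that still have pending backends
    }
    loaded_bytes = details["InternalTableLoadBytes"]
    loaded_rows = details["InternalTableLoadRows"]
    avg_row_bytes = loaded_bytes / loaded_rows if loaded_rows else 0.0
    return unfinished, loaded_bytes / 2**30, avg_row_bytes


unfinished, loaded_gib, avg_row_bytes = summarize(job_details)
print("Unfinished backends:", unfinished)
print(f"{loaded_gib:.1f} GiB loaded, {avg_row_bytes:.1f} bytes/row on average")
```

In this output the only backend listed under "All backends" (10034) also appears under "Unfinished backends", which matches the job staying in LOADING at LOAD:99%.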

:supervise_thread()
