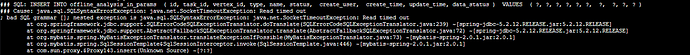

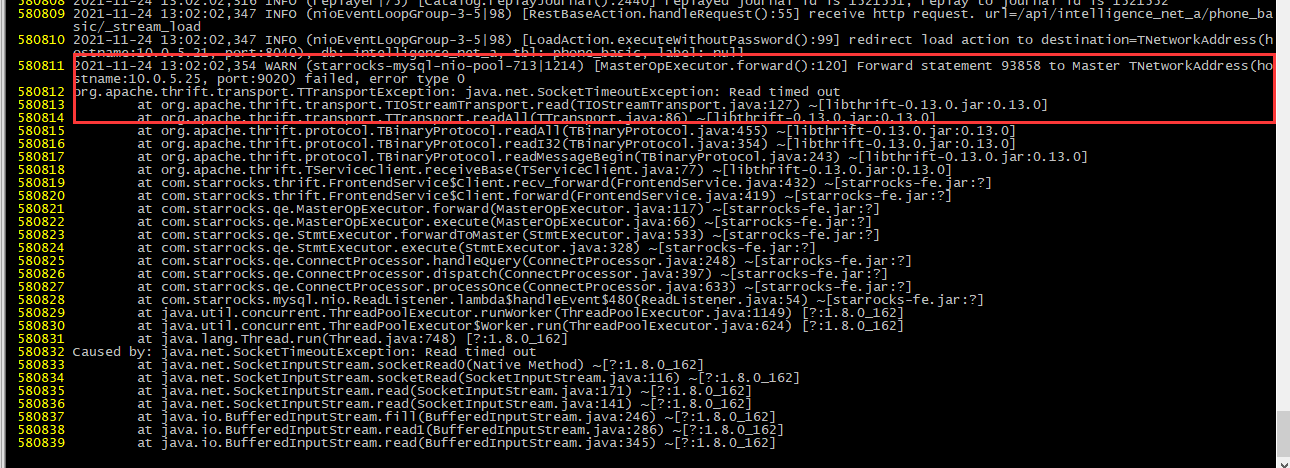

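[Details] Communication timeouts between FE nodes cause inserts to fail. When an INSERT is sent to an FE that is not the master, the task fails, and the logs show a communication timeout with the master FE.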

[Background] Data is inserted with `INSERT INTO ... VALUES`.

[Business impact] Data inserts fail.

[StarRocks version] 1.19.1

[Cluster size] 3 FE (1 follower + 2 observers) + 6 BE (FE and BE deployed on the same machines)

[Machine info] CPU virtual cores / memory / NIC: 48 cores / 256 GB / 10 GbE

[Attachments]

A broken pipe error also occurs when loading data from Spark with Stream Load:

```
org.apache.spark.api.python.PythonException: Traceback (most recent call last):
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/site-packages/urllib3/connectionpool.py", line 677, in urlopen
    chunked=chunked,
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/site-packages/urllib3/connectionpool.py", line 392, in _make_request
    conn.request(method, url, **httplib_request_kw)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/http/client.py", line 1239, in request
    self._send_request(method, url, body, headers, encode_chunked)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/http/client.py", line 1285, in _send_request
    self.endheaders(body, encode_chunked=encode_chunked)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/http/client.py", line 1234, in endheaders
    self._send_output(message_body, encode_chunked=encode_chunked)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/http/client.py", line 1065, in _send_output
    self.send(chunk)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/http/client.py", line 986, in send
    self.sock.sendall(data)
BrokenPipeError: [Errno 32] Broken pipe

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/site-packages/requests/adapters.py", line 449, in send
    timeout=timeout
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/site-packages/urllib3/connectionpool.py", line 727, in urlopen
    method, url, error=e, _pool=self, _stacktrace=sys.exc_info()[2]
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/site-packages/urllib3/util/retry.py", line 403, in increment
    raise six.reraise(type(error), error, _stacktrace)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/site-packages/urllib3/packages/six.py", line 734, in reraise
    raise value.with_traceback(tb)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/site-packages/urllib3/connectionpool.py", line 677, in urlopen
    chunked=chunked,
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/site-packages/urllib3/connectionpool.py", line 392, in _make_request
    conn.request(method, url, **httplib_request_kw)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/http/client.py", line 1239, in request
    self._send_request(method, url, body, headers, encode_chunked)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/http/client.py", line 1285, in _send_request
    self.endheaders(body, encode_chunked=encode_chunked)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/http/client.py", line 1234, in endheaders
    self._send_output(message_body, encode_chunked=encode_chunked)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/http/client.py", line 1065, in _send_output
    self.send(chunk)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/http/client.py", line 986, in send
    self.sock.sendall(data)
urllib3.exceptions.ProtocolError: ('Connection aborted.', BrokenPipeError(32, 'Broken pipe'))

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/pyspark.zip/pyspark/worker.py", line 377, in main
    process()
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/pyspark.zip/pyspark/worker.py", line 372, in process
    serializer.dump_stream(func(split_index, iterator), outfile)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000001/pyspark.zip/pyspark/rdd.py", line 2499, in pipeline_func
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000001/pyspark.zip/pyspark/rdd.py", line 2499, in pipeline_func
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000001/pyspark.zip/pyspark/rdd.py", line 2499, in pipeline_func
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000001/pyspark.zip/pyspark/rdd.py", line 352, in func
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000001/pyspark.zip/pyspark/rdd.py", line 801, in func
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000001/pyfiles/edge2doris.py", line 85, in
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000001/pyfiles/edge2doris.py", line 83, in inner
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/pyfiles/edge2doris.py", line 54, in put_data
    response = session.put(url=api, headers=headers, data=payload.encode())
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/site-packages/requests/sessions.py", line 590, in put
    return self.request('PUT', url, data=data, **kwargs)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/site-packages/requests/sessions.py", line 530, in request
    resp = self.send(prep, **send_kwargs)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/site-packages/requests/sessions.py", line 665, in send
    history = [resp for resp in gen]
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/site-packages/requests/sessions.py", line 665, in
    history = [resp for resp in gen]
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/site-packages/requests/sessions.py", line 245, in resolve_redirects
    **adapter_kwargs
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/site-packages/requests/sessions.py", line 643, in send
    r = adapter.send(request, **kwargs)
  File "/data1/hadoop/hd_space/tmp/nm-local-dir/usercache/root/appcache/application_1637315118106_0053/container_e07_1637315118106_0053_02_000011/python3.6/python3.6/lib/python3.6/site-packages/requests/adapters.py", line 498, in send
    raise ConnectionError(err, request=request)
requests.exceptions.ConnectionError: ('Connection aborted.', BrokenPipeError(32, 'Broken pipe'))

    at org.apache.spark.api.python.BasePythonRunner$ReaderIterator.handlePythonException(PythonRunner.scala:456)
    at org.apache.spark.api.python.PythonRunner$$anon$1.read(PythonRunner.scala:592)
    at org.apache.spark.api.python.PythonRunner$$anon$1.read(PythonRunner.scala:575)
    at org.apache.spark.api.python.BasePythonRunner$ReaderIterator.hasNext(PythonRunner.scala:410)
    at org.apache.spark.InterruptibleIterator.hasNext(InterruptibleIterator.scala:37)
    at scala.collection.Iterator$class.foreach(Iterator.scala:891)
    at org.apache.spark.InterruptibleIterator.foreach(InterruptibleIterator.scala:28)
    at scala.collection.generic.Growable$class.$plus$plus$eq(Growable.scala:59)
    at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:104)
    at scala.collection.mutable.ArrayBuffer.$plus$plus$eq(ArrayBuffer.scala:48)
    at scala.collection.TraversableOnce$class.to(TraversableOnce.scala:310)
    at org.apache.spark.InterruptibleIterator.to(InterruptibleIterator.scala:28)
    at scala.collection.TraversableOnce$class.toBuffer(TraversableOnce.scala:302)
    at org.apache.spark.InterruptibleIterator.toBuffer(InterruptibleIterator.scala:28)
    at scala.collection.TraversableOnce$class.toArray(TraversableOnce.scala:289)
    at org.apache.spark.InterruptibleIterator.toArray(InterruptibleIterator.scala:28)
    at org.apache.spark.rdd.RDD$$anonfun$collect$1$$anonfun$15.apply(RDD.scala:990)
    at org.apache.spark.rdd.RDD$$anonfun$collect$1$$anonfun$15.apply(RDD.scala:990)
    at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)
    at org.apache.spark.SparkContext$$anonfun$runJob$5.apply(SparkContext.scala:2101)
    at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
    at org.apache.spark.scheduler.Task.run(Task.scala:123)
    at org.apache.spark.executor.Executor$TaskRunner$$anonfun$10.apply(Executor.scala:408)
    at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1360)
    at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:414)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
```
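The failing call is the Stream Load HTTP PUT at `edge2doris.py` line 54 (`session.put(url=api, headers=headers, data=payload.encode())`), and the traceback shows the broken pipe being raised inside requests' `resolve_redirects`, that is, while the body is re-sent to the location the FE redirected to. For comparison while debugging, here is a minimal standard-library sketch of the same kind of request that follows the redirect explicitly. It assumes the Stream Load endpoint `http://<fe_host>:<http_port>/api/<db>/<table>/_stream_load`; the helper names `stream_load_url`, `basic_auth_header` and `put_with_trusted_redirect` are hypothetical, and this is not the original `edge2doris.py`. It keeps the `Authorization` header on every hop, similar to curl's `--location-trusted` used in the StarRocks Stream Load examples; requests, by contrast, drops `Authorization` when a redirect points to a different host.

```python
import base64
import http.client
import urllib.parse

# Redirect codes that keep the method (PUT) and the request body.
REDIRECT_STATUSES = (307, 308)


def stream_load_url(host: str, port: int, db: str, table: str) -> str:
    """Build a Stream Load URL (assumed endpoint layout; values are placeholders)."""
    return f"http://{host}:{port}/api/{db}/{table}/_stream_load"


def basic_auth_header(user: str, password: str) -> str:
    """Return an HTTP Basic auth header value for user:password."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return f"Basic {token}"


def put_with_trusted_redirect(url: str, body: bytes, headers: dict,
                              max_redirects: int = 3, timeout: float = 60.0):
    """PUT `body` to `url`, following 307/308 redirects by hand.

    The same headers (including Authorization) are sent on every hop.
    Returns (status, response_body, final_url).
    """
    for _ in range(max_redirects + 1):
        parts = urllib.parse.urlsplit(url)
        conn = http.client.HTTPConnection(parts.hostname, parts.port or 80,
                                          timeout=timeout)
        try:
            path = parts.path + (f"?{parts.query}" if parts.query else "")
            # Note: the full body is still sent to each hop, including the FE.
            conn.request("PUT", path, body=body, headers=headers)
            resp = conn.getresponse()
            data = resp.read()
        finally:
            conn.close()
        location = resp.getheader("Location")
        if resp.status in REDIRECT_STATUSES and location:
            # Resolve relative Location values against the current URL.
            url = urllib.parse.urljoin(url, location)
            continue
        return resp.status, data, url
    raise RuntimeError(f"too many redirects, last URL: {url}")
```

A call might look like `put_with_trusted_redirect(stream_load_url("fe_host", 8030, "example_db", "example_table"), b"1,a\n2,b\n", {"Authorization": basic_auth_header("root", ""), "label": "example_label_001", "column_separator": ","})`, where 8030 is assumed to be the FE `http_port` and every other value is a placeholder. Because the body is still sent on the first hop to the FE, this sketch does not by itself rule out a broken pipe on that connection; it only makes each hop visible and keeps credentials on the redirected request.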