java.lang.RuntimeException: Writing records to StarRocks failed.

at com.starrocks.connector.flink.manager.StarRocksSinkManager.checkFlushException(StarRocksSinkManager.java:291)

at com.starrocks.connector.flink.manager.StarRocksSinkManager.writeRecord(StarRocksSinkManager.java:164)

at com.starrocks.connector.flink.table.StarRocksDynamicSinkFunction.invoke(StarRocksDynamicSinkFunction.java:105)

at org.apache.flink.streaming.api.operators.StreamSink.processElement(StreamSink.java:54)

at org.apache.flink.streaming.runtime.tasks.CopyingChainingOutput.pushToOperator(CopyingChainingOutput.java:71)

at org.apache.flink.streaming.runtime.tasks.CopyingChainingOutput.collect(CopyingChainingOutput.java:46)

at org.apache.flink.streaming.runtime.tasks.CopyingChainingOutput.collect(CopyingChainingOutput.java:26)

at org.apache.flink.streaming.api.operators.CountingOutput.collect(CountingOutput.java:50)

at org.apache.flink.streaming.api.operators.CountingOutput.collect(CountingOutput.java:28)

at org.apache.flink.streaming.api.operators.StreamSourceContexts$ManualWatermarkContext.processAndCollectWithTimestamp(StreamSourceContexts.java:322)

at org.apache.flink.streaming.api.operators.StreamSourceContexts$WatermarkContext.collectWithTimestamp(StreamSourceContexts.java:426)

at org.apache.flink.streaming.connectors.kafka.internals.AbstractFetcher.emitRecordsWithTimestamps(AbstractFetcher.java:365)

at org.apache.flink.streaming.connectors.kafka.internals.KafkaFetcher.partitionConsumerRecordsHandler(KafkaFetcher.java:183)

at org.apache.flink.streaming.connectors.kafka.internals.KafkaFetcher.runFetchLoop(KafkaFetcher.java:142)

at org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumerBase.run(FlinkKafkaConsumerBase.java:826)

at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:110)

at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:66)

at org.apache.flink.streaming.runtime.tasks.SourceStreamTask$LegacySourceFunctionThread.run(SourceStreamTask.java:263)

Caused by: java.io.IOException: com.starrocks.connector.flink.manager.StarRocksStreamLoadFailedException: Failed to flush data to StarRocks, Error response:

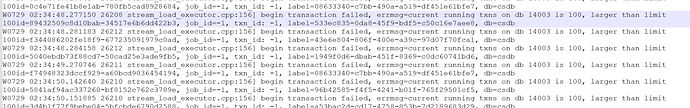

{"Status":"Fail","BeginTxnTimeMs":0,"Message":"current running txns on db 12003 is 100, larger than limit 100","NumberUnselectedRows":0,"CommitAndPublishTimeMs":0,"Label":"bfca24b7-e4b8-48cb-9571-ba77ecfd3948","LoadBytes":0,"StreamLoadPutTimeMs":0,"NumberTotalRows":0,"WriteDataTimeMs":0,"TxnId":-1,"LoadTimeMs":0,"ReadDataTimeMs":0,"NumberLoadedRows":0,"NumberFilteredRows":0}

at com.starrocks.connector.flink.manager.StarRocksSinkManager.asyncFlush(StarRocksSinkManager.java:273)

at com.starrocks.connector.flink.manager.StarRocksSinkManager.access$000(StarRocksSinkManager.java:52)

at com.starrocks.connector.flink.manager.StarRocksSinkManager$1.run(StarRocksSinkManager.java:121)

at java.lang.Thread.run(Thread.java:748)

Caused by: com.starrocks.connector.flink.manager.StarRocksStreamLoadFailedException: Failed to flush data to StarRocks, Error response:

{"Status":"Fail","BeginTxnTimeMs":0,"Message":"current running txns on db 12003 is 100, larger than limit 100","NumberUnselectedRows":0,"CommitAndPublishTimeMs":0,"Label":"bfca24b7-e4b8-48cb-9571-ba77ecfd3948","LoadBytes":0,"StreamLoadPutTimeMs":0,"NumberTotalRows":0,"WriteDataTimeMs":0,"TxnId":-1,"LoadTimeMs":0,"ReadDataTimeMs":0,"NumberLoadedRows":0,"NumberFilteredRows":0}

at com.starrocks.connector.flink.manager.StarRocksStreamLoadVisitor.doStreamLoad(StarRocksStreamLoadVisitor.java:87)

at com.starrocks.connector.flink.manager.StarRocksSinkManager.asyncFlush(StarRocksSinkManager.java:259)

Which parameter should I increase to resolve this error?