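【Details】Loading HBase data into StarRocks (SR) with DataX keeps failing, and the job never finishes.

【Background】Using DataX to load one large HBase table (about 500 million rows) into SR always fails.

【Business impact】

【StarRocks version】e.g.: 3.5.2

【Cluster size】e.g.: 3 FE (1 follower + 2 observers) + 3 BE (FE and BE co-located)

【Machine info】vCPUs/memory/NIC, e.g.: 16C/32G/1 GbE

【Contact】784830900@qq.com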

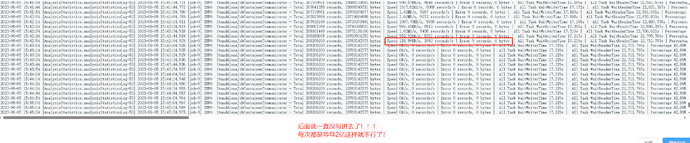

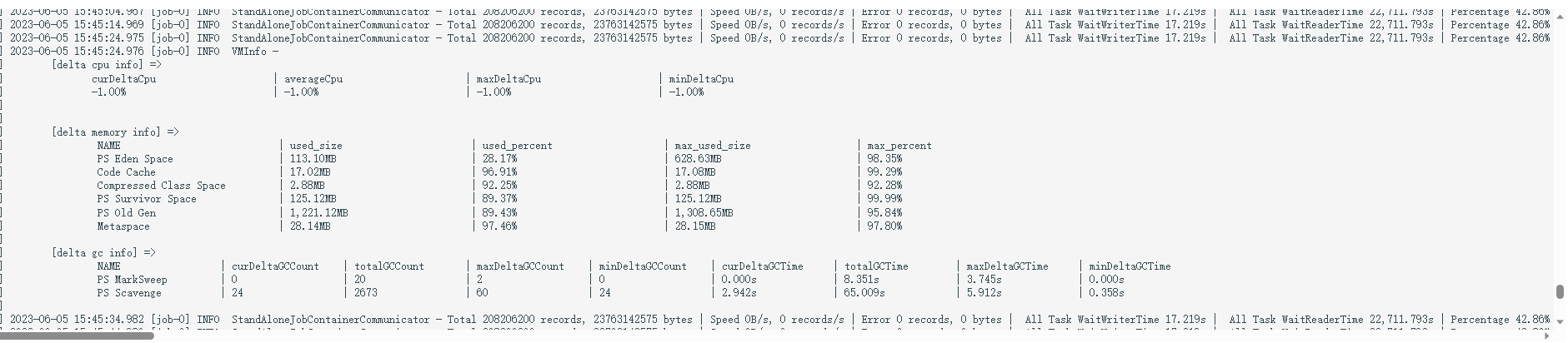

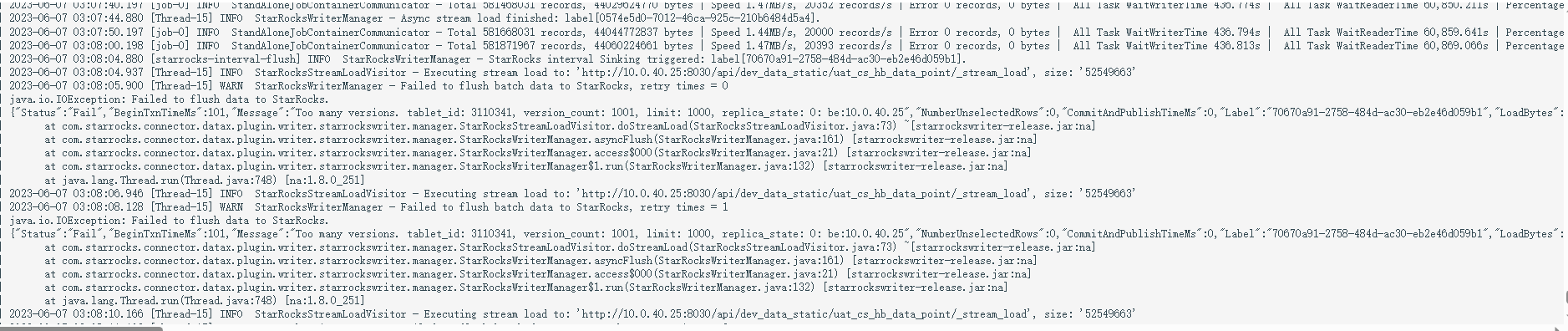

The load is not fast either: only about 5,000 rows/s. And every run fails somewhere between 50 million and 100 million rows.
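As a rough back-of-the-envelope check that uses only the figures quoted above (about 500 million rows, about 5,000 rows/s, failures between 50 and 100 million rows), this sketch estimates how long a full load would take and how far into the run the failures occur. The function and constant names are illustrative only.

```python
def hours_for(rows: int, rows_per_sec: float) -> float:
    """Hours needed to move `rows` rows at a steady `rows_per_sec`."""
    return rows / rows_per_sec / 3600


TOTAL_ROWS = 500_000_000  # approximate table size from the post
OBSERVED_RATE = 5_000     # approximate DataX throughput, rows/s

print(f"full load: {hours_for(TOTAL_ROWS, OBSERVED_RATE):.1f} h")
for failed_at in (50_000_000, 100_000_000):
    print(f"failure at {failed_at:,} rows: {hours_for(failed_at, OBSERVED_RATE):.1f} h in")
```

At this rate a full run would need more than a day even without errors, and each failure arrives roughly 3 to 6 hours into the job.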

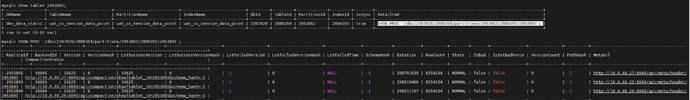

After the failure I checked the tablet again, and it looks fine:

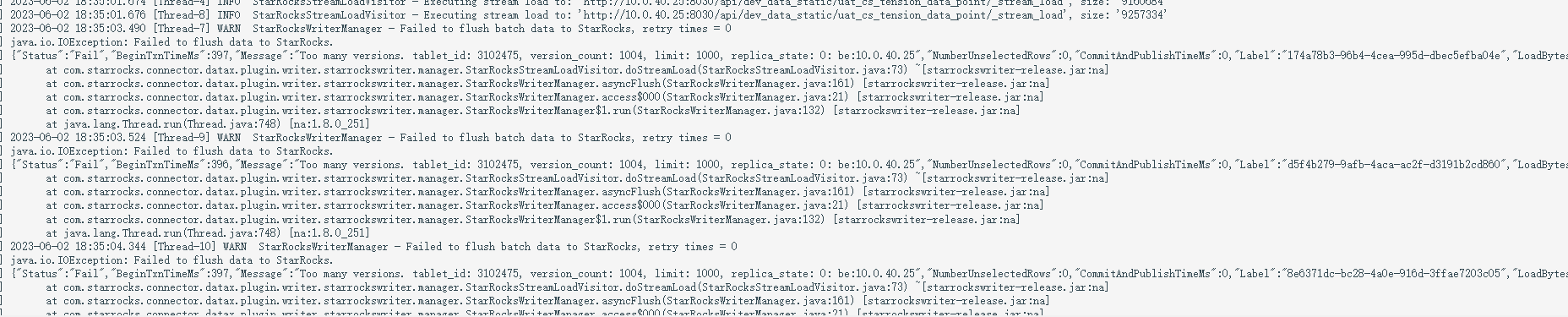

The error log:

```text
2023-06-01 22:59:16 [AnalysisStatistics.analysisStatisticsLog-53] 2023-06-01 22:59:16.319 [job-0] INFO StandAloneJobContainerCommunicator - Total 66536352 records, 7593211726 bytes | Speed 810.02KB/s, 7289 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 66,105.383s | All Task WaitReaderTime 11,600.075s | Percentage 0.00%
2023-06-01 22:59:26 [AnalysisStatistics.analysisStatisticsLog-53] 2023-06-01 22:59:26.320 [job-0] INFO StandAloneJobContainerCommunicator - Total 66592256 records, 7599613065 bytes | Speed 625.13KB/s, 5590 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 66,147.234s | All Task WaitReaderTime 11,610.298s | Percentage 0.00%
2023-06-01 22:59:46 [AnalysisStatistics.analysisStatisticsLog-53] 2023-06-01 22:59:46.321 [job-0] INFO StandAloneJobContainerCommunicator - Total 66711040 records, 7613188605 bytes | Speed 662.87KB/s, 5939 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 66,240.344s | All Task WaitReaderTime 11,631.891s | Percentage 0.00%
2023-06-01 22:59:56 [AnalysisStatistics.analysisStatisticsLog-53] 2023-06-01 22:59:56.321 [job-0] INFO StandAloneJobContainerCommunicator - Total 66776480 records, 7620634755 bytes | Speed 727.16KB/s, 6544 records/s | Error 0 records, 0 bytes | All Task WaitWriterTime 66,288.297s | All Task WaitReaderTime 11,642.315s | Percentage 0.00%
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] 2023-06-01 23:00:01.813 [0-0-2-writer] WARN CommonRdbmsWriter$Task - 回滚此次写入, 采用每次写入一行方式提交. 因为:Too many versions. tablet_id: 2953891, version_count: 1001, limit: 1000, replica_state: 0: be:10.0.40.27
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] 2023-06-01 23:00:01.815 [0-1-3-writer] ERROR StdoutPluginCollector -
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] com.mysql.jdbc.exceptions.jdbc4.MySQLSyntaxErrorException: Too many versions. tablet_id: 2953891, version_count: 1001, limit: 1000, replica_state: 0: be:10.0.40.27
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at sun.reflect.GeneratedConstructorAccessor16.newInstance(Unknown Source) ~[na:na]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[na:1.8.0_251]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[na:1.8.0_251]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.Util.handleNewInstance(Util.java:425) ~[mysql-connector-java-5.1.47.jar:5.1.47]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.Util.getInstance(Util.java:408) ~[mysql-connector-java-5.1.47.jar:5.1.47]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:944) ~[mysql-connector-java-5.1.47.jar:5.1.47]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3978) ~[mysql-connector-java-5.1.47.jar:5.1.47]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3914) ~[mysql-connector-java-5.1.47.jar:5.1.47]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.MysqlIO.sendCommand(MysqlIO.java:2530) ~[mysql-connector-java-5.1.47.jar:5.1.47]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.MysqlIO.sqlQueryDirect(MysqlIO.java:2683) ~[mysql-connector-java-5.1.47.jar:5.1.47]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.ConnectionImpl.execSQL(ConnectionImpl.java:2495) ~[mysql-connector-java-5.1.47.jar:5.1.47]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.PreparedStatement.executeInternal(PreparedStatement.java:1903) ~[mysql-connector-java-5.1.47.jar:5.1.47]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.mysql.jdbc.PreparedStatement.execute(PreparedStatement.java:1242) ~[mysql-connector-java-5.1.47.jar:5.1.47]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.writer.CommonRdbmsWriter$Task.doOneInsert(CommonRdbmsWriter.java:382) [plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.writer.CommonRdbmsWriter$Task.doBatchInsert(CommonRdbmsWriter.java:362) [plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.writer.CommonRdbmsWriter$Task.startWriteWithConnection(CommonRdbmsWriter.java:291) [plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.rdbms.writer.CommonRdbmsWriter$Task.startWrite(CommonRdbmsWriter.java:319) [plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.plugin.writer.mysqlwriter.MysqlWriter$Task.startWrite(MysqlWriter.java:78) [mysqlwriter-0.0.1-SNAPSHOT.jar:na]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at com.alibaba.datax.core.taskgroup.runner.WriterRunner.run(WriterRunner.java:56) [datax-core-0.0.1-SNAPSHOT.jar:na]
2023-06-01 23:00:01 [AnalysisStatistics.analysisStatisticsLog-53] at java.lang.Thread.run(Thread.java:748) [na:1.8.0_251]
```

The WARN line translates to: "Rolling back this write and committing one row at a time instead, because: Too many versions. tablet_id: 2953891, version_count: 1001, limit: 1000, ..."
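The log mixes two kinds of lines: DataX progress lines with a cumulative `Total N records` counter, and the StarRocks "Too many versions" error. A minimal sketch for pulling the useful numbers out of both, assuming the line formats stay exactly as shown above; the function names are hypothetical helpers, not part of DataX or StarRocks.

```python
import re
from typing import Optional

# StarRocks error text as it appears in the DataX log above.
VERSION_ERR = re.compile(
    r"Too many versions\. tablet_id: (?P<tablet_id>\d+), "
    r"version_count: (?P<version_count>\d+), limit: (?P<limit>\d+)"
    r".*?be:(?P<be>[\d.]+)"
)
# Cumulative record counter in a DataX progress line.
TOTAL_RECORDS = re.compile(r"Total (\d+) records")


def parse_version_error(line: str) -> Optional[dict]:
    """Extract tablet id, version count, limit and BE host, or None."""
    m = VERSION_ERR.search(line)
    if m is None:
        return None
    return {
        "tablet_id": int(m.group("tablet_id")),
        "version_count": int(m.group("version_count")),
        "limit": int(m.group("limit")),
        "be": m.group("be"),
    }


def total_records(line: str) -> int:
    """Cumulative record count from a DataX progress line."""
    m = TOTAL_RECORDS.search(line)
    if m is None:
        raise ValueError("not a DataX progress line")
    return int(m.group(1))


def rows_per_sec(earlier: str, later: str, seconds: float) -> float:
    """Average throughput between two progress lines `seconds` apart."""
    return (total_records(later) - total_records(earlier)) / seconds
```

Applied to the 22:59:16 and 22:59:56 progress lines (40 s apart), `rows_per_sec` gives about 6,003 rows/s, consistent with the per-line `records/s` figures; `parse_version_error` on the WARN line points at tablet 2953891 on BE 10.0.40.27.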

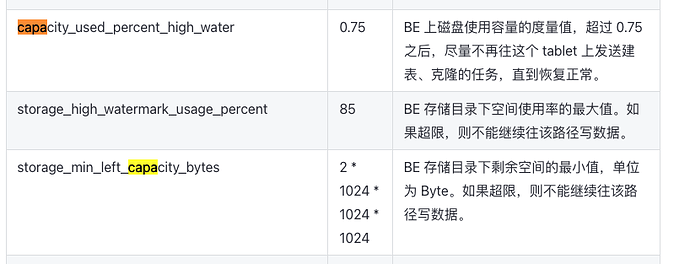
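My understanding, which is an assumption and not something these logs confirm, is that every committed load transaction (including each batched INSERT that mysqlwriter sends over JDBC) adds one new version to every tablet it writes to, and compaction must merge versions at least as fast as they arrive. The stack trace also shows that after the batch failed, DataX fell back to `doOneInsert`, i.e. one row per commit, which would make commits even smaller. The sketch below models the worst case where every commit touches the same tablet; `rows_per_commit` and `merged_per_sec` are hypothetical inputs, since the real DataX batch size and compaction rate are not shown in this post.

```python
def seconds_to_limit(limit: int, current: int, rows_per_sec: float,
                     rows_per_commit: int, merged_per_sec: float = 0.0) -> float:
    """Seconds until a tablet's version count reaches `limit`.

    Simplified model (assumption): each commit adds one version to the
    tablet, and compaction removes `merged_per_sec` versions per second
    on average. Returns infinity if compaction keeps up.
    """
    added_per_sec = rows_per_sec / rows_per_commit
    net_growth = added_per_sec - merged_per_sec
    if net_growth <= 0:
        return float("inf")
    return (limit - current) / net_growth
```

For example, with a hypothetical 2,000 rows per commit at 6,000 rows/s (3 versions/s), a tablet with no compaction reaches 1,000 versions in about 333 s; compaction merging 2 versions/s stretches that to 1,000 s. The takeaway is qualitative: small, frequent commits shrink the time budget, while fewer, larger loads stretch it.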

I have also changed be.conf as follows (the FE memory is set to 12 GB):

```properties
base_compaction_check_interval_seconds = 10
cumulative_compaction_num_threads_per_disk = 4
base_compaction_num_threads_per_disk = 2
cumulative_compaction_check_interval_seconds = 2
enable_new_load_on_memory_limit_exceeded = true
```
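With three BE nodes, it is easy for one be.conf to drift from the others. A minimal sketch, assuming each node's be.conf has been copied locally as plain text in `key = value` form, that parses the files and reports any keys whose values differ. `parse_conf` and `diff_confs` are hypothetical helpers, not StarRocks tools.

```python
from typing import Dict, Optional, Tuple


def parse_conf(text: str) -> Dict[str, str]:
    """Parse `key = value` lines, skipping blank lines and `#` comments."""
    conf: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a key = value line
        conf[key.strip()] = value.strip()
    return conf


def diff_confs(a: Dict[str, str],
               b: Dict[str, str]) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Keys whose values differ between two configs (missing keys appear as None)."""
    return {
        k: (a.get(k), b.get(k))
        for k in sorted(a.keys() | b.keys())
        if a.get(k) != b.get(k)
    }
```

Running `diff_confs` pairwise over the three BE files should return an empty dict if the settings above were applied everywhere.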

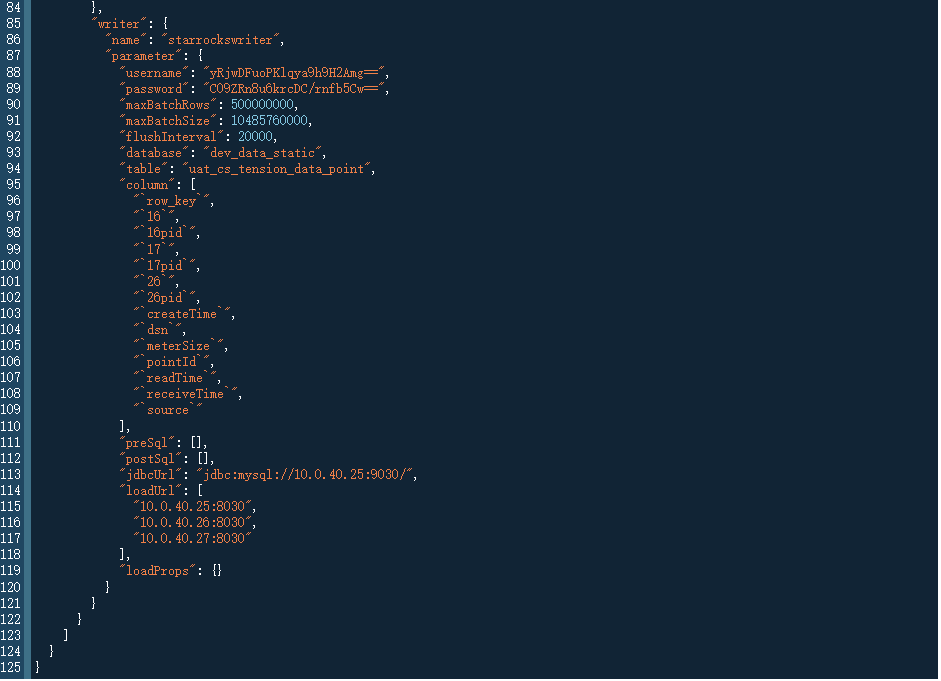

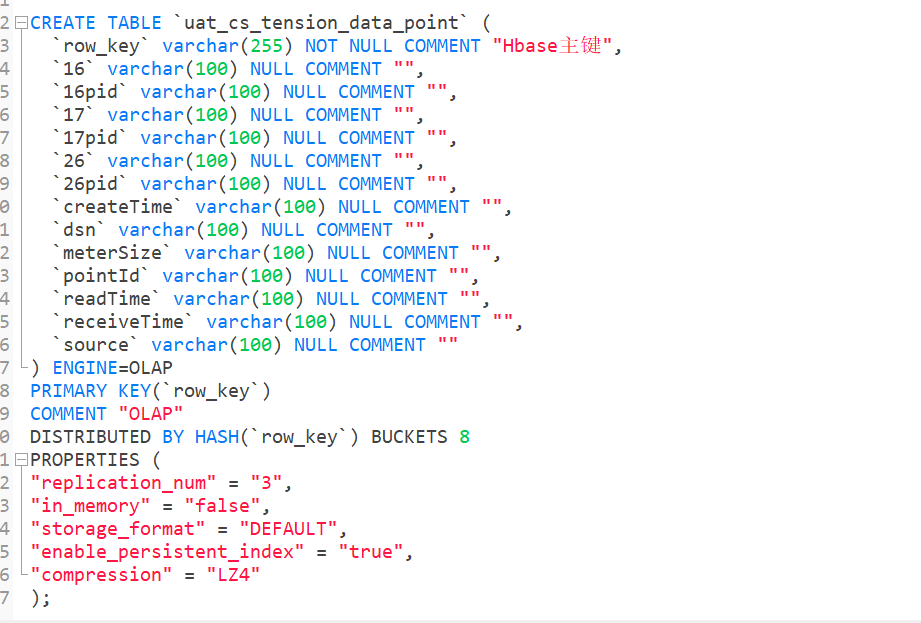

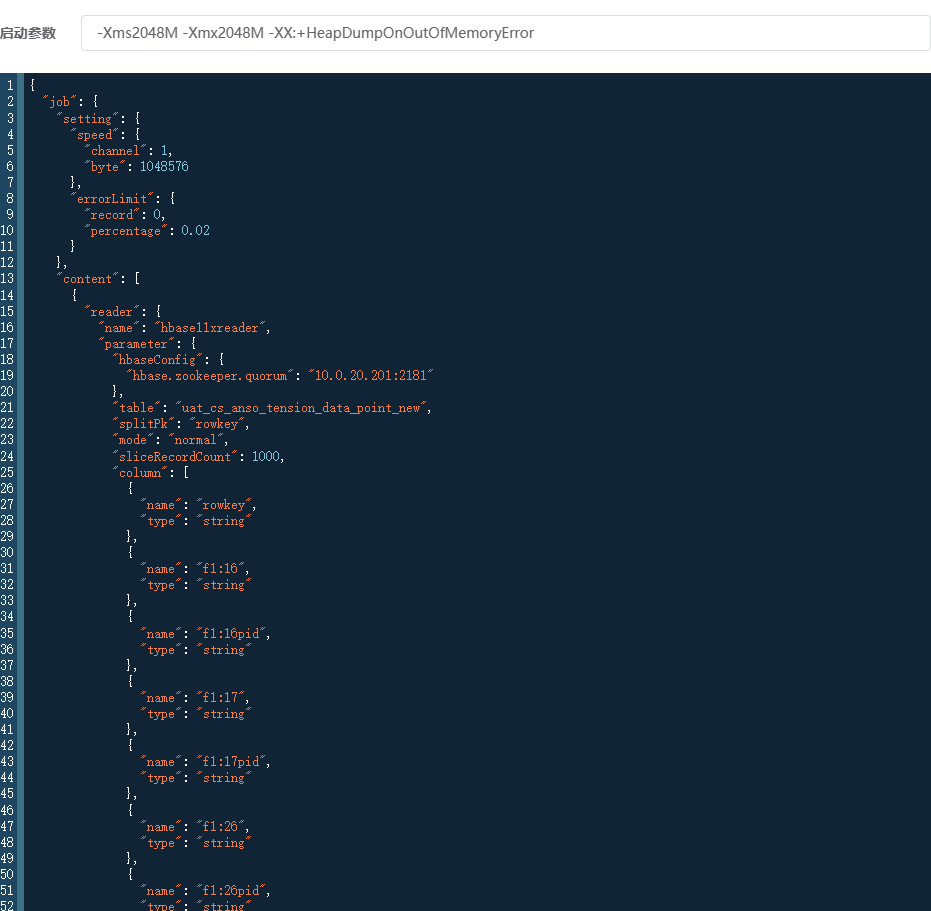

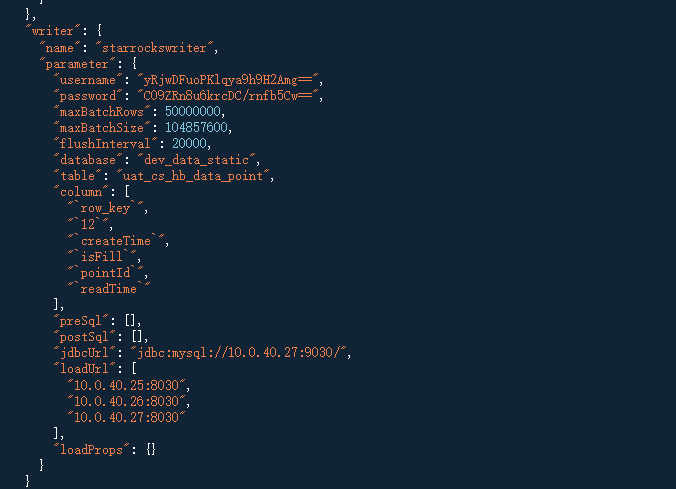

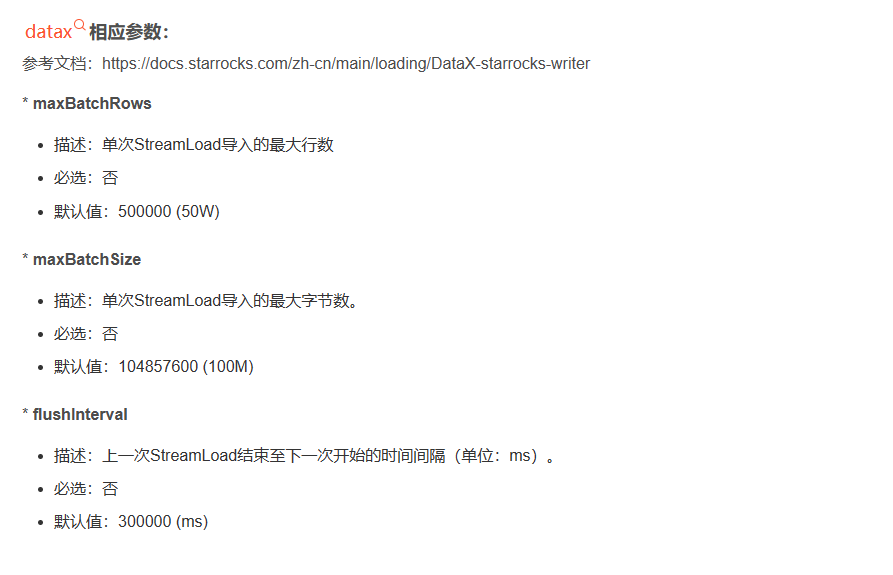

The DataX job JSON:

{

“job”: {

“setting”: {

“speed”: {

“channel”: 1,

“byte”: 1048576000

},

“errorLimit”: {

“record”: 0,

“percentage”: 0.02

}

},

“content”: [

{

“reader”: {

“name”: “hbase11xreader”,

“parameter”: {

“hbaseConfig”: {

“hbase.zookeeper.quorum”: “10.0.20.201:2181”

},

“table”: “uat_cs_anso_tension_data_point_new”,

“splitPk”: “rowkey”,

“mode”: “normal”,

“sliceRecordCount”: 20000,

“column”: [

{

“name”: “rowkey”,

“type”: “string”

},

{

“name”: “f1:16”,

“type”: “string”

},

{

“name”: “f1:16pid”,

“type”: “string”

},

{

“name”: “f1:17”,

“type”: “string”

},

{

“name”: “f1:17pid”,

“type”: “string”

},

{

“name”: “f1:26”,

“type”: “string”

},

{

“name”: “f1:26pid”,

“type”: “string”

},

{

“name”: “f1:createTime”,

“type”: “string”

},

{

“name”: “f1:dsn”,

“type”: “string”

},

{

“name”: “f1:meterSize”,

“type”: “string”

},

{

“name”: “f1:pointId”,

“type”: “string”

},

{

“name”: “f1:readTime”,

“type”: “string”

},

{

“name”: “f1:receiveTime”,

“type”: “string”

},

{

“name”: “f1:source”,

“type”: “string”

}

]

}

},

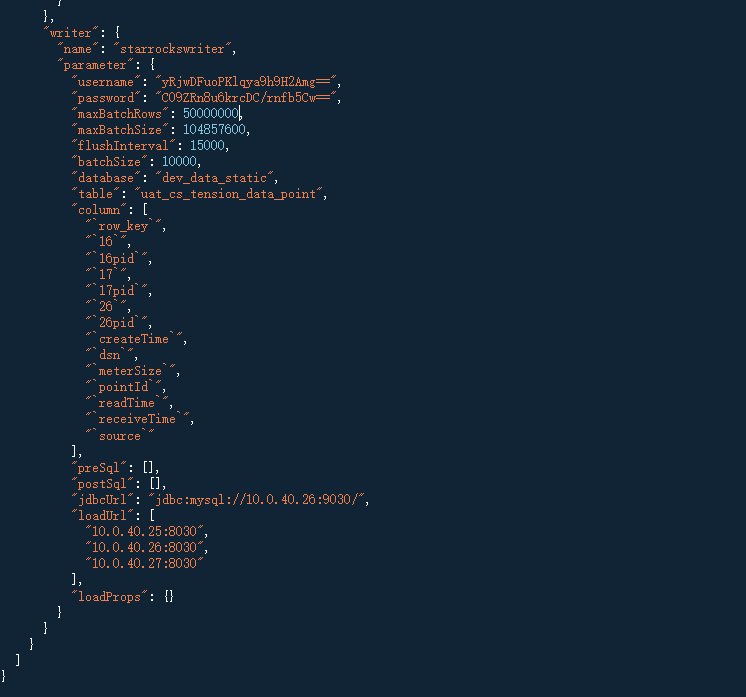

“writer”: {

“name”: “mysqlwriter”,

“parameter”: {

“username”: “yRjwDFuoPKlqya9h9H2Amg==”,

“password”: “C09ZRn8u6krcDC/rnfb5Cw==”,

“maxBatchRows”: 500000000,

“maxBatchSize”: 10485760000,

“flushInterval”: 20000,

“column”: [

“row_key”,

“16”,

“16pid”,

“17”,

“17pid”,

“26”,

“26pid”,

“createTime”,

“dsn”,

“meterSize”,

“pointId”,

“readTime”,

“receiveTime”,

“source”

],

“connection”: [

{

“table”: [

“uat_cs_tension_data_point”

],

“jdbcUrl”: “jdbc:mysql://10.0.40.25:9030/dev_data_static?sessionVariables=MAX_EXECUTION_TIME=68400000&useCompression=true&useCursorFetch=true”,

“loadUrl”: [

“10.0.40.25:8030”,

“10.0.40.26:8030”,

“10.0.40.27:8030”

]

}

]

}

}

}

]

}

}
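One thing worth double-checking in this job: the writer is `mysqlwriter`, but `loadUrl`, `maxBatchRows`, `maxBatchSize` and `flushInterval` are, as far as I know, options of the StarRocks DataX writer (`starrockswriter`, which loads through Stream Load) rather than of `mysqlwriter`, which sends batched INSERT statements over JDBC (the `CommonRdbmsWriter` frames in the stack trace show this path). If that is right, those settings are silently ignored. The sketch below flags such keys in a DataX job dict; the key set is my assumption, so please confirm it against the README of the writer plugin actually deployed.

```python
from typing import List

# Assumption: options documented for starrockswriter that mysqlwriter does not read.
STARROCKS_WRITER_KEYS = {"loadUrl", "loadProps", "maxBatchRows", "maxBatchSize", "flushInterval"}


def ignored_writer_keys(job: dict) -> List[str]:
    """Return starrockswriter-style keys found under any mysqlwriter block."""
    found = set()
    for item in job["job"]["content"]:
        writer = item.get("writer", {})
        if writer.get("name") != "mysqlwriter":
            continue
        params = writer.get("parameter", {})
        # Keys at the parameter level (maxBatchRows, flushInterval, ...).
        found |= params.keys() & STARROCKS_WRITER_KEYS
        # Keys nested inside each connection entry (loadUrl in this job).
        for conn in params.get("connection", []):
            found |= conn.keys() & STARROCKS_WRITER_KEYS
    return sorted(found)
```

Loading the job above with `json.load` and passing it in should report `flushInterval`, `loadUrl`, `maxBatchRows` and `maxBatchSize`.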