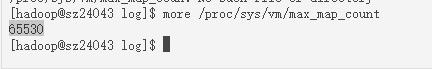

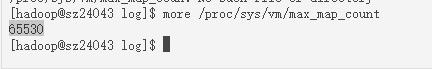

现在这个参数值是 65530 ,改成60000?

现在这个参数值是 65530 ,改成60000?

我把concat_ws改成concat,再运行一段时间观察看看

echo 200000 > /proc/sys/vm/max_map_count

好的,我试试调整一下

这个命令我没有权限执行,我执行了sudo echo “vm.max_map_count = 200000” >> /etc/sysctl.conf 应该是等效的吧?

只改文件没用,需要sysctl -p 才能生效

sysctl -p 这个也执行了,我观察看看

调整后今天上午10点半另外一台机器又宕机 ,另外数据量是昨天执行的两倍,

改了SQL,这段SQL是执行代码中的一小段:

truncate table ods.tmp_ent_port_tlns_frqc;

insert into ods.tmp_ent_port_tlns_frqc

SELECT

cast(substr(t.STRT_CD,4) as int) as dbct_cd,

cast(substr(t.DEST_CD,4) as int) as nxt_site_cd,

MAX(case when ONE_FRQC_ADJ_LST_SND_CAR_TM is not null then concat(ONE_FRQC_ADJ_LST_SND_CAR_TM,’:’,‘00’) end) one_frqc_snd_tm,

MAX(case when ONE_FRQC_FLD_TM is not null then concat(ONE_FRQC_FLD_TM,’:’,‘00’) end)one_frqc_oper_dur,

MAX(case when ONE_FRQC_TLNS_END_PT is not null then concat(ONE_FRQC_TLNS_END_PT,’:’,‘00’) end)one_frqc_tlns_end_pt,

MAX(case when TWO_FRQC_ADJ_LST_SND_CAR_TM is not null then concat(TWO_FRQC_ADJ_LST_SND_CAR_TM,’:’,‘00’) end) two_frqc_snd_tm,

MAX(case when TWO_FRQC_FLD_TM is not null then concat(TWO_FRQC_FLD_TM,’:’,‘00’) end) two_frqc_oper_dur,

MAX(case when TWO_FRQC_TLNS_END_PT is not null then concat(TWO_FRQC_TLNS_END_PT,’:’,‘00’) end) two_frqc_tlns_end_pt,

t.vld_tm,t.end_tm as ivld_tm

FROM dim.dim_dbct_lst_snd_car_schd t (–这个表是greenplum的外部表)

BE.OUT :

start time: Tue May 16 15:36:24 CST 2023

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/d/p2/app/StarRocks/be/lib/jni-packages/starrocks-jdbc-bridge-jar-with-dependencies.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/d/p2/app/StarRocks/be/lib/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

[warn] evbuffer_file_segment_materialize: mmap(4167, 0, 74) failed: No such device

2.5.5 RELEASE (build 24c1eca)

query_id:3c42d765-faa3-11ed-9712-ecebb89ecd60, fragment_instance:3c42d765-faa3-11ed-9712-ecebb89ecd61

tracker:process consumption: 158607687276

tracker:query_pool consumption: 67686453743

tracker:load consumption: 40336

tracker:metadata consumption: 5105087579

tracker:tablet_metadata consumption: 669229292

tracker:rowset_metadata consumption: 426347655

tracker:segment_metadata consumption: 1329814882

tracker:column_metadata consumption: 2679695750

tracker:tablet_schema consumption: 267884

tracker:segment_zonemap consumption: 1272609617

tracker:short_key_index consumption: 12517666

tracker:column_zonemap_index consumption: 2105348790

tracker:ordinal_index consumption: -866499616

tracker:bitmap_index consumption: 0

tracker:bloom_filter_index consumption: 0

tracker:compaction consumption: 0

tracker:schema_change consumption: 0

tracker:column_pool consumption: 6622752505

tracker:page_cache consumption: 52464753152

tracker:update consumption: 5610415267

tracker:chunk_allocator consumption: 1955993536

tracker:clone consumption: 0

tracker:consistency consumption: 0

*** Aborted at 1684981454 (unix time) try “date -d @1684981454” if you are using GNU date ***

PC: @ 0x2b407321d74c __memcpy_ssse3_back

*** SIGSEGV (@0x2b472f9ffff0) received by PID 35967 (TID 0x2b4237b05700) from PID 799014896; stack trace: ***

@ 0x58f9dc2 google::(anonymous namespace)::FailureSignalHandler()

@ 0x2b40720ca852 os::Linux::chained_handler()

@ 0x2b40720d1676 JVM_handle_linux_signal

@ 0x2b40720c7653 signalHandler()

@ 0x2b40727ad5d0 (unknown)

@ 0x2b407321d74c __memcpy_ssse3_back

@ 0x4e9d058 starrocks::stream_load::OlapTableSink::_print_varchar_error_msg()

@ 0x4e9fb09 starrocks::stream_load::OlapTableSink::_validate_data()

@ 0x4eac9d3 starrocks::stream_load::OlapTableSink::send_chunk()

@ 0x4f1fd99 starrocks::pipeline::OlapTableSinkOperator::push_chunk()

@ 0x2cf7836 starrocks::pipeline::PipelineDriver::process()

@ 0x4f2ef23 starrocks::pipeline::GlobalDriverExecutor::_worker_thread()

@ 0x4924bf2 starrocks::ThreadPool::dispatch_thread()

@ 0x491f6ea starrocks: :supervise_thread()

:supervise_thread()

@ 0x2b40727a5dd5 start_thread

@ 0x2b40731c5ead __clone

@ 0x0 (unknown)

start time: Thu May 25 10:57:06 CST 2023

这个SQL,是读外表,还是写外表?

读取外部表dim.dim_brch_cfm_rcv_maint 写入到starrocks中的表,我刚又调整了vm.max_map_count = 655360,再观察一下

那可能是其它原因,我们再查下。可以获取个Core文件吗。

这个怎么获取,命令发我

@U_1653974266322_1581

ulimit -c unlimited

/data/StarRocks/be/bin/stop_be.sh

/data/StarRocks/be/bin/start_be.sh --daemon

然后执行sql触发bug

再在执行 /data/StarRocks/be/bin/start_be.sh --daemon 这个命令的目录下找一下 core 文件

打包一下core文件

tar czvf core.xxx.tar.gz core.xxx

一般会比较大,最好能上传的 cos 或者云盘再发出来

调整vm.max_map_count 这个参数没有用,刚刚又挂了一台机器。sql放在shell里十分钟跑一次,每隔几个小时会挂一台机器

这个core文件我明天捕捉一下,这个sql不固定几个小时会导致be挂一次。

升级机器内存临时解决,但是这个问题并非是内存不足导致的,还剩很多内存,dmesg突然间就报oom,be就挂了,太不稳定了

链接: https://pan.baidu.com/s/1cCwQ9TQR9DxE-EDuldb2fg 提取码: 8ifg

core文件已经上传百度网盘

这次宕机是由另外一段sql导致的,和之前的宕机有共同点:都是从greenplum外部表查数据插入sr中:

truncate table ods.tmp_org_ful;

insert into ods.tmp_org_ful --starrocks明细表

select one_grd_brch,one_grd_dbct,org_cd,vld_tm,ivld_tm

from dim.dim_org_ful --greeplum外部表,数据量2.7w

where ivld_tm>now()-interval 25 day;

基本上可以确定是greeplum外部表的问题了,今天把外部表去掉后,跑了一天的程序,未再出现宕机情况。