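【Details】The cluster was originally on 1.19 and was later upgraded to 2.3.10. Checking the logs, we found three problems:

- BE crashes with std::length_error

- FE reports query failed: no global dict

- FE reports WARN cannot find task. type: PUBLISH_VERSION, backendId: 10003, signature: 51951650

Detailed logs for all three problems are in the attachment section. How should we resolve them? What are the consequences if problems 2 and 3 are left unresolved?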

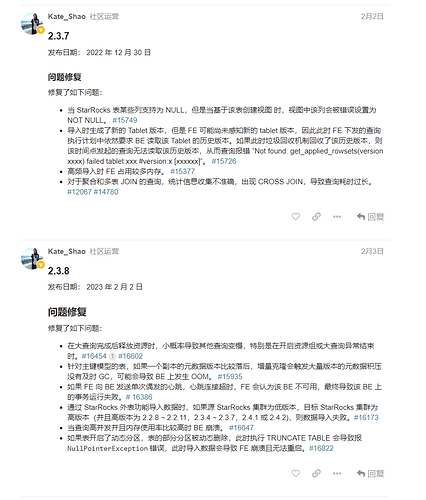

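For problem 1, I found a similar case: item 29 in 常见 Crash / BUG / 优化 查询 - StarRocks 用户问答 - StarRocks中文社区论坛 (mirrorship.cn). However, the error message there is not quite the same, and that issue was already fixed by a patch in 2.3.10.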


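【Background】There is a large volume of Flink stream load writes, plus about 600 INSERT OVERWRITE writes every 5 minutes. We only use duplicate key (detail) tables, aggregate tables, and unique key (update) tables; primary key tables are the only type not in use. Before upgrading to 2.3.10 there were about 20-odd unhealthy replicas and more than 2,000 inconsistent replicas; after the upgrade the numbers are about the same.

【Business impact】We have not received any feedback from the business side so far. When the BE crashed, we restarted it promptly.

【StarRocks version】2.3.10

【Cluster size】e.g. 3 FE (1 follower + 2 observers) + 5 BE

【Machine info】3 FE: 16C/32G; 5 BE: 32C/64G; the 5 BEs have 5 × 1 TB SSDs mounted

【Contact】Community group 8 - tempo

【Attachments】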

- Crash log:

terminate called recursively

terminate called after throwing an instance of 'std::length_error'

what(): basic_string::_M_create

query_id:00000000-0000-0000-0000-000000000000, fragment_instance:00000000-0000-0000-0000-000000000000

*** Aborted at 1682493788 (unix time) try "date -d @1682493788" if you are using GNU date ***

PC: @ 0x7fdd04c7a3d7 __GI_raise

*** SIGABRT (@0x4e96) received by PID 20118 (TID 0x7fdbdfd89700) from PID 20118; stack trace: ***

@ 0x41b9c62 google::(anonymous namespace)::FailureSignalHandler()

@ 0x7fdd05bb3630 (unknown)

@ 0x7fdd04c7a3d7 __GI_raise

@ 0x7fdd04c7bac8 __GI_abort

@ 0x1991adb _ZN9__gnu_cxx27__verbose_terminate_handlerEv.cold

@ 0x5ccd4c6 __cxxabiv1::__terminate()

@ 0x5d71599 __cxa_call_terminate

@ 0x5cccee1 __gxx_personality_v0

@ 0x5d781ee _Unwind_RaiseException_Phase2

@ 0x5d78ce6 _Unwind_Resume

@ 0x18a518c _ZN4brpc6policy17ProcessRpcRequestEPNS_16InputMessageBaseE.cold

@ 0x42e2427 brpc::ProcessInputMessage()

@ 0x42e32d3 brpc::InputMessenger::OnNewMessages()

@ 0x4389f9e brpc::Socket::ProcessEvent()

@ 0x4297f2f bthread::TaskGroup::task_runner()

@ 0x4420711 bthread_make_fcontext

- query failed: no global dict

2023-04-26 09:18:41,292 WARN (ForkJoinPool.commonPool-worker-1|15745) [Coordinator.getNext():916] get next fail, need cancel. status errorCode CANCELLED Cancelled BufferControlBlock::cancel, query id: 47834049-e3d0-11ed-8195-fa163e84adb1

2023-04-26 09:18:41,292 WARN (ForkJoinPool.commonPool-worker-1|15745) [Coordinator.getNext():937] query failed: no global dict

2023-04-26 09:18:41,292 WARN (ForkJoinPool.commonPool-worker-1|15745) [StatisticExecutor.executeStmt():391] com.starrocks.common.UserException: no global dict

2023-04-26 09:18:41,292 WARN (thrift-server-pool-6851|15644) [Coordinator.updateFragmentExecStatus():1682] one instance report fail errorCode CANCELLED Cancelled SenderQueue::get_chunk, query_id=47834049-e3d0-11ed-8195-fa163e84adb1 instance_id=47834049-e3d0-11ed-8195-fa163e84adb7

- cannot find task. type: PUBLISH_VERSION

FE and BE timeline

//fe

2023-04-26 14:08:57,166 INFO (thrift-server-pool-8671|17974) [DatabaseTransactionMgr.beginTransaction():301] begin transaction: txn_id: 51699033 with label 68c63cb4-85aa-4b9b-8179-7d288bd84c58 from coordinator BE: xxxx, listner id: -1

2023-04-26 14:08:57,166 INFO (thrift-server-pool-8666|17969) [FrontendServiceImpl.streamLoadPut():1114] receive stream load put request. db:ods, tbl: table, txn_id: 51699033, load id: 4548c857-a405-6beb-1424-330753d552a3, backend: xxxx

2023-04-26 14:08:57,170 INFO (thrift-server-pool-8666|17969) [StreamLoadPlanner.plan():222] load job id: TUniqueId(hi:4992460465679723499, lo:1451341086484419235) tx id 51699033 parallel 0 compress null

//to be

I0426 14:08:57.294407 26091 txn_manager.cpp:204] Commit txn successfully. tablet: 8849141, txn_id: 51699033, rowsetid: 0200000009832db547484b3519a758f867dec7c83415f4a6 #segment:1 #delfile:0

I0426 14:08:57.294926 26735 txn_manager.cpp:204] Commit txn successfully. tablet: 8849149, txn_id: 51699033, rowsetid: 0200000009832db647484b3519a758f867dec7c83415f4a6 #segment:1 #delfile:0

…

I0426 14:08:59.614212 21232 task_worker_pool.cpp:206] Submit task success. type=PUBLISH_VERSION, signature=51699033, task_count_in_queue=1

I0426 14:08:59.614226 26533 task_worker_pool.cpp:873] get publish version task, signature:51699033 txn_id: 51699033 priority queue size: 1

I0426 14:08:59.614496 26548 engine_publish_version_task.cpp:60] Publish txn success tablet:8849117 version:5072 partition:8849112 txn_id: 51699033 rowset:0200000009832db147484b3519a758f867dec7c83415f4a6

I0426 14:08:59.614691 26540 engine_publish_version_task.cpp:60] Publish txn success tablet:8849121 version:5072 partition:8849112 txn_id: 51699033 rowset:0200000009832db247484b3519a758f867dec7c83415f4a6

…

I0426 14:08:59.615902 26533 task_worker_pool.cpp:801] Publish version on partition. partition: 8849112, txn_id: 51699033, version: 5072

I0426 14:08:59.615912 26533 task_worker_pool.cpp:902] publish_version success. signature:51699033 txn_id: 51699033 related tablet num: 12 time: 1ms

//back to fe

2023-04-26 14:08:59,609 INFO (thrift-server-pool-7859|17045) [FrontendServiceImpl.loadTxnCommit():943] receive txn commit request. db: ods, tbl: table, txn_id: 51699033, backend: xxxx

2023-04-26 14:08:59,612 INFO (PUBLISH_VERSION|31) [PublishVersionDaemon.publishVersion():86] send publish tasks for txn_id: 51699033

2023-04-26 14:08:59,612 INFO (thrift-server-pool-7859|17045) [DatabaseTransactionMgr.commitTransaction():575] transaction:[TransactionState. txn_id: 51699033, label: 68c63cb4-85aa-4b9b-8179-7d288bd84c58, db id: 10045, table id list: , callback id: -1, coordinator: BE: 10.67.2.69, transaction status: COMMITTED, error replicas num: 0, replica ids: , prepare time: 1682489337166, commit time: 1682489339609, finish time: -1, publish cost: -1682489339610ms, reason: attachment: com.starrocks.load.loadv2.ManualLoadTxnCommitAttachment@325408c8] successfully committed

2023-04-26 14:09:00,120 INFO (PUBLISH_VERSION|31) [DatabaseTransactionMgr.finishTransaction():927] finish transaction TransactionState. txn_id: 51699033, label: 68c63cb4-85aa-4b9b-8179-7d288bd84c58, db id: 10045, table id list: , callback id: -1, coordinator: BE: xxxx, transaction status: VISIBLE, error replicas num: 12, replica ids: 8849191,8849158,8849124,8849171,8849138, prepare time: 1682489337166, commit time: 1682489339609, finish time: 1682489340116, publish cost: 507ms, reason: attachment: com.starrocks.load.loadv2.ManualLoadTxnCommitAttachment@325408c8 successfully

2023-04-26 14:09:01,880 WARN (thrift-server-pool-221|585) [MasterImpl.finishTask():194] cannot find task. type: PUBLISH_VERSION, backendId: 10004, signature: 5169903

I noticed error replicas num: 12 in the finishTransaction line.
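To cross-check the timeline, here is a rough Python sketch that pulls the numeric fields out of the two TransactionState lines and recomputes the publish cost. The two strings are abridged excerpts of the FE log lines for txn_id 51699033. Two things here are assumptions on my side and not confirmed by the source: that the cluster clock is UTC+8 (inferred because the converted epoch values line up with the FE log timestamps), and that publish cost is simply finish time minus commit time (it reproduces both logged values, including the large negative one printed while finish time is still -1). The helper names are made up for illustration.

```python
import re
from datetime import datetime, timedelta, timezone

# Assumption: cluster clock is UTC+8, inferred from the FE log timestamps.
LOCAL_TZ = timezone(timedelta(hours=8))

# Abridged excerpts of the two TransactionState lines for txn_id 51699033.
COMMITTED = ("transaction status: COMMITTED, error replicas num: 0, "
             "prepare time: 1682489337166, commit time: 1682489339609, "
             "finish time: -1")
VISIBLE = ("transaction status: VISIBLE, error replicas num: 12, "
           "prepare time: 1682489337166, commit time: 1682489339609, "
           "finish time: 1682489340116")

FIELD_NAMES = ("error replicas num", "prepare time", "commit time", "finish time")


def parse_fields(line):
    """Extract selected 'name: integer' fields from a TransactionState line."""
    fields = {}
    for name in FIELD_NAMES:
        match = re.search(re.escape(name) + r":\s*(-?\d+)", line)
        if match:
            fields[name] = int(match.group(1))
    return fields


def publish_cost_ms(fields):
    """Assumed formula: finish time minus commit time, in milliseconds."""
    return fields["finish time"] - fields["commit time"]


def to_local(epoch_ms):
    """Convert epoch milliseconds to a datetime in the assumed local zone."""
    seconds, millis = divmod(epoch_ms, 1000)
    return datetime.fromtimestamp(seconds, tz=LOCAL_TZ) + timedelta(milliseconds=millis)


for label, line in (("COMMITTED", COMMITTED), ("VISIBLE", VISIBLE)):
    fields = parse_fields(line)
    print(label,
          "commit at", to_local(fields["commit time"]).isoformat(timespec="milliseconds"),
          "publish cost", publish_cost_ms(fields), "ms",
          "error replicas", fields["error replicas num"])

# The BE crash log reports "Aborted at 1682493788 (unix time)", in seconds.
print("BE abort at", to_local(1682493788 * 1000).isoformat())
```

Under these assumptions the VISIBLE line gives a publish cost of 507 ms, matching the log, and the negative value in the COMMITTED line is just an artifact of finish time being -1 at that point, not an error in itself. The error replicas num of 12 is read straight from the log; the sketch does not explain why those replicas failed.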
