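- BE TabletSchemaMap deadlock

Backtrace of the blocked BE thread. It is waiting on the mutex inside `TabletSchemaMap::emplace` while a `make_snapshot` request builds a copy of the tablet meta: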

Thread 1331 (Thread 0x7f697b1fe700 (LWP 98875)):

#0 0x00007fe5a12794ed in __lll_lock_wait () from /lib64/libpthread.so.0

#1 0x00007fe5a1274dcb in _L_lock_883 () from /lib64/libpthread.so.0

#2 0x00007fe5a1274c98 in pthread_mutex_lock () from /lib64/libpthread.so.0

#3 0x00000000042f5efb in starrocks::TabletSchemaMap::emplace(starrocks::TabletSchemaPB const&) ()

#4 0x00000000042d673d in starrocks::TabletMeta::init_from_pb(starrocks::TabletMetaPB*) ()

#5 0x00000000042b4e42 in starrocks::Tablet::generate_tablet_meta_copy_unlocked(std::shared_ptr<starrocks::TabletMeta> const&) const ()

#6 0x000000000429d05e in starrocks::SnapshotManager::snapshot_full[abi:cxx11](std::shared_ptr<starrocks::Tablet> const&, long, long, bool) ()

#7 0x000000000429f223 in starrocks::SnapshotManager::make_snapshot(starrocks::TSnapshotRequest const&, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >*) ()

#8 0x0000000002caccd1 in starrocks::AgentServer::Impl::make_snapshot(starrocks::TAgentResult&, starrocks::TSnapshotRequest const&) ()

#9 0x0000000004c4f94d in starrocks::BackendServiceProcessor::process_make_snapshot(int, apache::thrift::protocol::TProtocol*, apache::thrift::protocol::TProtocol*, void*) ()

#10 0x0000000004c55c92 in starrocks::BackendServiceProcessor::dispatchCall(apache::thrift::protocol::TProtocol*, apache::thrift::protocol::TProtocol*, std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> > const&, int, void*) ()

#11 0x0000000004c579a2 in apache::thrift::TDispatchProcessor::process(std::shared_ptr<apache::thrift::protocol::TProtocol>, std::shared_ptr<apache::thrift::protocol::TProtocol>, void*) ()

#12 0x0000000005c081b8 in apache::thrift::server::TConnectedClient::run() ()

#13 0x0000000005c006b4 in apache::thrift::server::TThreadedServer::TConnectedClientRunner::run() ()

#14 0x0000000005c02ebd in apache::thrift::concurrency::Thread::threadMain(std::shared_ptr<apache::thrift::concurrency::Thread>) ()

#15 0x0000000005be8626 in std::thread::_State_impl<std::thread::_Invoker<std::tuple<void (*)(std::shared_ptr<apache::thrift::concurrency::Thread>), std::shared_ptr<apache::thrift::concurrency::Thread> > > >::_M_run() ()

#16 0x0000000008133fa0 in execute_native_thread_routine ()

#17 0x00007fe5a1272dd5 in start_thread () from /lib64/libpthread.so.0

#18 0x00007fe5a088dead in clone () from /lib64/libc.so.6

- GitHub issue:
- GitHub fix PR:
- Jira:

- Affected versions:
  - 2.5.0 to 2.5.18
  - 3.0.0 to 3.0.9
  - 3.1.0 to 3.1.8
  - 3.2.0 to 3.2.2

- Fixed versions:
  - 2.5.19+
  - 3.0.10+
  - 3.1.9+
  - 3.2.3+

-

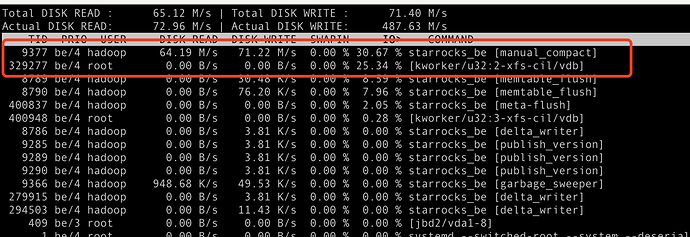

- Root cause:
- Workaround: