To help us locate your problem faster, please provide the following information. Thank you.

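[Details] After StarRocks has been running for a while, data can no longer be written and all queries time out.

Meanwhile, the StarRocks transaction count stays unchanged.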

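[Background]

[Business impact] StarRocks can be neither read nor written.

[Shared-data (storage-compute separated) deployment?]

[StarRocks version] e.g. 3.3.0

[Cluster size] e.g. 1 FE + 6 BE (FE and BE co-located)

[Machine info] CPU vCores / memory / NIC, e.g. 16C/64G/10GbE

[Contact] StarRocks community group 17-Golden

[Attachments]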

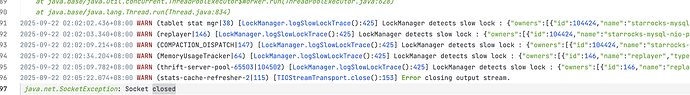

Starting at around 4:30 a.m., the FE began emitting a large volume of WARN logs. The FE log is flooded with entries like the following:

2025-06-06 04:36:12.837+08:00 INFO (thrift-server-pool-828754|887140) [ReportHandler.putToQueue():367] update be 11456393 report task, type: RESOURCE_USAGE_REPORT

2025-06-06 04:36:12.973+08:00 WARN (thrift-server-pool-828715|887101) [LockManager.logSlowLockTrace():398] LockManager detects slow lock : {"owners":[{"id":886160,"name":"starrocks-taskrun-pool-6065","heldFor":341334,"waitTime":0,"stack":["java.base@11.0.18/java.lang.Object.wait(Native Method)","app//com.starrocks.common.util.concurrent.lock.LockManager.lock(LockManager.java:153)","app//com.starrocks.common.util.concurrent.lock.Locker.lock(Locker.java:85)","app//com.starrocks.common.util.concurrent.lock.Locker.lockDatabase(Locker.java:112)","app//com.starrocks.sql.analyzer.AnalyzerUtils.getDBUdfFunction(AnalyzerUtils.java:207)","app//com.starrocks.sql.analyzer.AnalyzerUtils.getUdfFunction(AnalyzerUtils.java:249)","app//com.starrocks.sql.analyzer.DecimalV3FunctionAnalyzer.getDecimalV3Function(DecimalV3FunctionAnalyzer.java:353)","app//com.starrocks.sql.analyzer.ExpressionAnalyzer$Visitor.visitFunctionCall(ExpressionAnalyzer.java:1159)","app//com.starrocks.sql.analyzer.ExpressionAnalyzer$Visitor.visitFunctionCall(ExpressionAnalyzer.java:380)","app//com.starrocks.analysis.FunctionCallExpr.accept(FunctionCallExpr.java:483)","app//com.starrocks.sql.ast.AstVisitor.visit(AstVisitor.java:71)","app//com.starrocks.sql.analyzer.ExpressionAnalyzer.bottomUpAnalyze(ExpressionAnalyzer.java:376)","app//com.starrocks.sql.analyzer.ExpressionAnalyzer.analyze(ExpressionAnalyzer.java:144)","app//com.starrocks.sql.analyzer.ExpressionAnalyzer.analyzeExpression(ExpressionAnalyzer.java:2046)","app//com.starrocks.sql.analyzer.SelectAnalyzer.analyzeExpression(SelectAnalyzer.java:704)","app//com.starrocks.sql.analyzer.SelectAnalyzer.analyzeSelect(SelectAnalyzer.java:248)","app//com.starrocks.sql.analyzer.SelectAnalyzer.analyze(SelectAnalyzer.java:77)","app//com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitSelect(QueryAnalyzer.java:234)","app//com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitSelect(QueryAnalyzer.java:128)","app//com.starrocks.sql.ast.SelectRelation.accept(SelectRelation.java:242)"]}],"waiter":[{"id":887101,"name":"thrift-server-pool-828715","heldFor":"","waitTime":3001,"locker":"GlobalTransactionMgr.commitTransactionUnderDatabaseWLock():432"}]}

2025-06-06 04:36:13.061+08:00 INFO (thrift-server-pool-828755|887141) [ReportHandler.putToQueue():367] update be 10123 report task, type: RESOURCE_USAGE_REPORT

2025-06-06 04:36:13.097+08:00 INFO (thrift-server-pool-828105|886485) [ReportHandler.putToQueue():367] update be 11456394 report task, type: RESOURCE_USAGE_REPORT

2025-06-06 04:36:13.328+08:00 INFO (thrift-server-pool-828668|887053) [ReportHandler.putToQueue():367] update be 11456395 report task, type: TASK_REPORT

2025-06-06 04:36:13.342+08:00 INFO (leaderCheckpointer|111) [BDBJEJournal.getFinalizedJournalId():272] database names: 234802895

2025-06-06 04:36:13.342+08:00 INFO (leaderCheckpointer|111) [Checkpoint.runAfterCatalogReady():101] checkpoint imageVersion 234802894, logVersion 0

2025-06-06 04:36:13.352+08:00 INFO (thrift-server-pool-828756|887142) [ReportHandler.putToQueue():367] update be 10122 report task, type: RESOURCE_USAGE_REPORT

2025-06-06 04:36:13.546+08:00 INFO (thrift-server-pool-828736|887122) [ReportHandler.putToQueue():367] update be 11456395 report task, type: RESOURCE_USAGE_REPORT

2025-06-06 04:36:13.629+08:00 INFO (thrift-server-pool-828757|887143) [ReportHandler.putToQueue():367] update be 10005 report task, type: RESOURCE_USAGE_REPORT

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.checkTimeout():835] kill query timeout, remote: 192.168.0.137:35762, query timeout: 300

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.kill():784] kill query, 192.168.0.137:35762, kill connection: false

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.checkTimeout():835] kill query timeout, remote: 192.168.0.137:35906, query timeout: 300

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.kill():784] kill query, 192.168.0.137:35906, kill connection: false

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.checkTimeout():835] kill query timeout, remote: 192.168.0.137:43388, query timeout: 300

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.kill():784] kill query, 192.168.0.137:43388, kill connection: false

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.checkTimeout():835] kill query timeout, remote: 192.168.0.123:34742, query timeout: 300

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.kill():784] kill query, 192.168.0.123:34742, kill connection: false

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.checkTimeout():835] kill query timeout, remote: 192.168.0.123:34744, query timeout: 300

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.kill():784] kill query, 192.168.0.123:34744, kill connection: false

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.checkTimeout():835] kill query timeout, remote: 192.168.0.123:35126, query timeout: 300

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.kill():784] kill query, 192.168.0.123:35126, kill connection: false

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.checkTimeout():835] kill query timeout, remote: 192.168.0.137:35698, query timeout: 300

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.kill():784] kill query, 192.168.0.137:35698, kill connection: false

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.checkTimeout():835] kill query timeout, remote: 192.168.0.137:35700, query timeout: 300

2025-06-06 04:36:13.684+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.kill():784] kill query, 192.168.0.137:35700, kill connection: false

2025-06-06 04:36:13.685+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.checkTimeout():835] kill query timeout, remote: 192.168.0.137:35948, query timeout: 300

2025-06-06 04:36:13.685+08:00 WARN (Connect-Scheduler-Check-Timer-0|21) [ConnectContext.kill():784] kill query, 192.168.0.137:35948, kill connection: false
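For anyone who wants to summarize the slow-lock entries instead of reading them by eye, here is a minimal Python sketch. It assumes each `LockManager detects slow lock` entry sits on a single line of the FE log and ends with the JSON payload, as in the excerpt above. The function name `parse_slow_lock` and the output keys are my own, not part of StarRocks, and the unit of `heldFor`/`waitTime` is not stated in the log (it is presumably milliseconds), so the values are passed through unchanged.

```python
import json
import re

# Marker text copied from the FE log excerpt; the JSON payload follows it.
SLOW_LOCK_RE = re.compile(r"LockManager detects slow lock : (\{.*\})\s*$")


def parse_slow_lock(line):
    """Summarize one slow-lock WARN line; return None if it does not match.

    Hypothetical helper: field names come from the log excerpt, and the
    time unit of heldFor / waitTime is an assumption (not converted here).
    """
    match = SLOW_LOCK_RE.search(line)
    if match is None:
        return None
    try:
        payload = json.loads(match.group(1))
    except json.JSONDecodeError:
        # Line was truncated or wrapped, so the payload is not valid JSON.
        return None

    owners = [
        {
            "name": owner.get("name"),
            "held_for": owner.get("heldFor"),
            # Keep only the innermost frames; they show where the owner is stuck.
            "top_frames": owner.get("stack", [])[:5],
        }
        for owner in payload.get("owners", [])
    ]
    waiters = [
        {
            "name": waiter.get("name"),
            "wait_time": waiter.get("waitTime"),
            "locker": waiter.get("locker"),
        }
        for waiter in payload.get("waiter", [])
    ]
    return {"owners": owners, "waiters": waiters}
```

Running this over every line of `fe.log` and printing the owner name together with its top frames should make it easier to see whether the same thread keeps holding the lock across entries.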

At the same time, the BE log is also flooded:

ING_FINISH, operator-chain: [olap_scan_2_0x7f2ac351e010(X) -> chunk_accumulate_2_0x7f293ef0d910(X) -> exchange_sink_3_0x7f23b8a2a110(X)]

W0606 14:47:23.625468 96288 storage_engine.cpp:1014] failed to perform update compaction. res=Internal error: illegal state: another compaction is running, tablet=166348020.136553271.ed4b9f4d9c488fb8-c22d6f7ccf76ebb1

W0606 14:47:23.625401 96052 pipeline_driver.cpp:552] begin to cancel operators for query_id=a6b99f1e-4243-11f0-8ed0-fefcfe67552d fragment_id=a6b99f1e-4243-11f0-8ed0-fefcfe6755ff driver=driver_2_5, status=PENDING_FINISH, operator-chain: [olap_scan_2_0x7f2aa2b1fc10(X) -> chunk_accumulate_2_0x7f293ef0de10(X) -> exchange_sink_3_0x7f23b8a2a610(X)]

W0606 14:47:23.625540 96287 storage_engine.cpp:1014] failed to perform update compaction. res=Internal error: illegal state: another compaction is running, tablet=166348020.136553271.ed4b9f4d9c488fb8-c22d6f7ccf76ebb1

W0606 14:47:23.625543 96052 pipeline_driver.cpp:552] begin to cancel operators for query_id=a6b99f1e-4243-11f0-8ed0-fefcfe67552d fragment_id=a6b99f1e-4243-11f0-8ed0-fefcfe6755ff driver=driver_2_1, status=PENDING_FINISH, operator-chain: [olap_scan_2_0x7f2adb0fdc10(X) -> chunk_accumulate_2_0x7f293ef0c790(X) -> exchange_sink_3_0x7f256c8bac10(X)]

W0606 14:47:23.625571 96052 pipeline_driver.cpp:552] begin to cancel operators for query_id=a6b99f1e-4243-11f0-8ed0-fefcfe67552d fragment_id=a6b99f1e-4243-11f0-8ed0-fefcfe6755ff driver=driver_2_11, status=PENDING_FINISH, operator-chain: [olap_scan_2_0x7f2b3b0e4410(X) -> chunk_accumulate_2_0x7f293fe82790(X) -> exchange_sink_3_0x7f2940905410(X)]

W0606 14:47:23.625582 96052 pipeline_driver.cpp:552] begin to cancel operators for query_id=a6b99f1e-4243-11f0-8ed0-fefcfe67552d fragment_id=a6b99f1e-4243-11f0-8ed0-fefcfe6755ff driver=driver_2_10, status=PENDING_FINISH, operator-chain: [olap_scan_2_0x7f2b3b0e4010(X) -> chunk_accumulate_2_0x7f293fe82290(X) -> exchange_sink_3_0x7f2940904f10(X)]

W0606 14:47:23.625591 96052 pipeline_driver.cpp:552] begin to cancel operators for query_id=a6b99f1e-4243-11f0-8ed0-fefcfe67552d fragment_id=a6b99f1e-4243-11f0-8ed0-fefcfe6755ff driver=driver_2_6, status=PENDING_FINISH, operator-chain: [olap_scan_2_0x7f2b3b0bc010(X) -> chunk_accumulate_2_0x7f293ef0ef90(X) -> exchange_sink_3_0x7f23b8a2ab10(X)]

W0606 14:47:23.625612 96052 pipeline_driver.cpp:552] begin to cancel operators for query_id=a6b99f1e-4243-11f0-8ed0-fefcfe67552d fragment_id=a6b99f1e-4243-11f0-8ed0-fefcfe6755ff driver=driver_2_7, status=PENDING_FINISH, operator-chain: [olap_scan_2_0x7f2b3b0bc410(X) -> chunk_accumulate_2_0x7f293ef0f490(X) -> exchange_sink_3_0x7f2940904010(X)]

W0606 14:47:23.625619 96052 pipeline_driver.cpp:552] begin to cancel operators for query_id=a6b99f1e-4243-11f0-8ed0-fefcfe67552d fragment_id=a6b99f1e-4243-11f0-8ed0-fefcfe6755ff driver=driver_2_2, status=PENDING_FINISH, operator-chain: [olap_scan_2_0x7f2ac552cc10(X) -> chunk_accumulate_2_0x7f293ef0cf10(X) -> exchange_sink_3_0x7f23b8a28d10(X)]

W0606 14:47:23.625628 96052 pipeline_driver.cpp:552] begin to cancel operators for query_id=a6b99f1e-4243-11f0-8ed0-fefcfe67552d fragment_id=a6b99f1e-4243-11f0-8ed0-fefcfe6755ff driver=driver_2_8, status=PENDING_FINISH, operator-chain: [olap_scan_2_0x7f2b3b0bc810(X) -> chunk_accumulate_2_0x7f293ef0f990(X) -> exchange_sink_3_0x7f2940904510(X)]

W0606 14:47:23.625638 96052 pipeline_driver.cpp:552] begin to cancel operators for query_id=de3c05a7-4150-11f0-8ed0-fefcfe67552d fragment_id=de3c05a7-4150-11f0-8ed0-fefcfe675671 driver=driver_72_34, status=PENDING_FINISH, operator-chain: [local_exchange_source_72_0x7f2a84f0b490(X) -> spillable_hash_join_probe_72_0x7f27531fc410(X)(HashJoiner=0x7f297bc5e210) -> chunk_accumulate_72_0x7f2750b52790(X) -> project_73_0x7f2a02ee4e10(X) -> exchange_sink_74_0x7f27531fc910(X)]

W0606 14:47:23.625656 96052 pipeline_driver.cpp:552] begin to cancel operators for query_id=de3c05a7-4150-11f0-8ed0-fefcfe67552d fragment_id=de3c05a7-4150-11f0-8ed0-fefcfe675671 driver=driver_72_28, status=PENDING_FINISH, operator-chain: [local_exchange_source_72_0x7f2a84f09f90(X) -> spillable_hash_join_probe_72_0x7f28ea897d10(X)(HashJoiner=0x7f297bc5b510) -> chunk_accumulate_72_0x7f2807644d90(X) -> project_73_0x7f2a02732a10(X) -> exchange_sink_74_0x7f28ea898210(X)]

W0606 14:47:23.625672 96052 pipeline_driver.cpp:552] begin to cancel operators for query_id=de3c05a7-4150-11f0-8ed0-fefcfe67552d fragment_id=de3c05a7-4150-11f0-8ed0-fefcfe675671 driver=driver_72_30, status=PENDING_FINISH, operator-chain: [local_exchange_source_72_0x7f2a84f0a690(X) -> spillable_hash_join_probe_72_0x7f28ea899110(X)(HashJoiner=0x7f297bc5c410) -> chunk_accumulate_72_0x7f274e236790(X) -> project_73_0x7f2a02739610(X) -> exchange_sink_74_0x7f28ecdea710(X)]

W0606 14:47:23.625684 96052 pipeline_driver.cpp:552] begin to cancel operators for query_id=de3c05a7-4150-11f0-8ed0-fefcfe67552d fragment_id=de3c05a7-4150-11f0-8ed0-fefcfe675671 driver=driver_72_31, status=PENDING_FINISH, operator-chain: [local_exchange_source_72_0x7f2a84f0aa10(X) -> spillable_hash_join_probe_72_0x7f28ecdeb110(X)(HashJoiner=0x7f297bc5cb90) -> chunk_accumulate_72_0x7f274e237690(X) -> project_73_0x7f2a02edac10(X) -> exchange_sink_74_0x7f28ecdeb610(X)]
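Since the BE log is dominated by repeated `begin to cancel operators` lines, a small Python sketch like the one below can show how many drivers are being cancelled per query. It only assumes the `query_id=<uuid>` format visible in the excerpt above; the function name `count_cancelled_drivers` is hypothetical.

```python
import re
from collections import Counter

# Pattern taken from the pipeline_driver.cpp warning lines in the excerpt.
CANCEL_RE = re.compile(r"begin to cancel operators for query_id=([0-9a-f-]+)")


def count_cancelled_drivers(lines):
    """Count 'begin to cancel operators' warnings per query_id."""
    counts = Counter()
    for line in lines:
        match = CANCEL_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts
```

Feeding it the lines of the BE warning log and calling `most_common()` on the result lists the queries with the most cancelled drivers first.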

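The BE logs from the moment the problem started have already been rotated out and cannot be recovered. At present the BE log is flooded with the entries above.

This problem has now occurred 3 times. Each time, roughly 2-3 days after the StarRocks cluster is restarted, data becomes unwritable again.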

, how about trying a larger value for it?
