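【Details】A Hive catalog was created and queries against Hive tables worked normally. After a power outage on the StarRocks cluster machines, all nodes restarted, and queries against Hive tables now fail.

【Background】The StarRocks cluster restarted after a power outage, and the errors appeared after the restart.

【Business impact】Data in Hive cannot be queried through the Hive catalog.

【Shared-data (storage-compute separation)】Yes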

【StarRocks version】3.2.6

【Cluster size】3 FE (2 followers + 1 observer) + 3 BE (FE and BE co-located)

【Machine specs】16 cores / 64 GB RAM / Gigabit Ethernet

【Contact】xiaxiong2010@163.com

【Attachments】

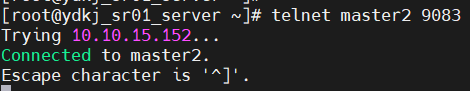

Connectivity test from the machine to Hive port 9083
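A minimal sketch of the same port check in Python, in case it helps to repeat it from each FE node. The helper name `can_connect` and the 3-second timeout are assumptions for illustration; the host `master2` and port 9083 come from `hive.metastore.uris` in this post. Note that the fe.log error in this post is `Read timed out`, which suggests the connection was opened but no reply arrived, so an open port alone does not show that the metastore is answering Thrift requests.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        # create_connection resolves the host name and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name-resolution failures.
        return False


# Example call for the metastore address used in this post:
# can_connect("master2", 9083)
```

This only checks the TCP layer; it does not send any metastore request.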

CREATE EXTERNAL CATALOG hive_catalog
COMMENT "hive catalog"
PROPERTIES (
"type" = "hive",
"hive.metastore.type" = "hive",
"hive.metastore.uris" = "thrift://master2:9083",
"enable_metastore_cache" = "true",
"metastore_cache_refresh_interval_sec" = "30"
);

SHOW DATABASES FROM hive_catalog;

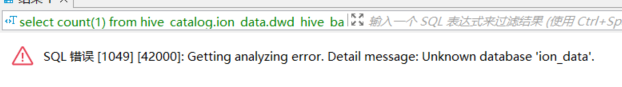

select count(1) from hive_catalog.ion_data.dwd_hive_battery_exchange;

- fe.log / be.INFO / relevant screenshots

2024-04-29 17:36:33.116+08:00 WARN (heartbeat mgr|23) [HeartbeatMgr.runAfterCatalogReady():166] get bad heartbeat response: type: BROKER, status: BAD, msg: java.net.ConnectException: Connection refused (Connection refused), name: hdfs_broker, host: 10.10.15.193, port: 8000

2024-04-29 17:36:33.393+08:00 INFO (tablet stat mgr|38) [TabletStatMgr.updateLocalTabletStat():173] finished to get local tablet stat of all backends. cost: 74 ms

2024-04-29 17:36:33.396+08:00 INFO (tablet stat mgr|38) [TabletStatMgr.runAfterCatalogReady():138] finished to update index row num of all databases. cost: 3 ms

2024-04-29 17:36:38.120+08:00 WARN (heartbeat mgr|23) [HeartbeatMgr.runAfterCatalogReady():166] get bad heartbeat response: type: BROKER, status: BAD, msg: java.net.ConnectException: Connection refused (Connection refused), name: hdfs_broker, host: 10.10.15.193, port: 8000

2024-04-29 17:36:38.327+08:00 ERROR (starrocks-mysql-nio-pool-1302|1558644) [HiveMetaClient.callRPC():163] Failed to get database ion_data

java.lang.reflect.InvocationTargetException: null

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_333]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_333]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_333]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_333]

at com.starrocks.connector.hive.HiveMetaClient.callRPC(HiveMetaClient.java:161) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetaClient.callRPC(HiveMetaClient.java:150) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetaClient.getDb(HiveMetaClient.java:252) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetastore.getDb(HiveMetastore.java:94) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.loadDb(CachingHiveMetastore.java:288) ~[starrocks-fe.jar:?]

at com.google.common.cache.CacheLoader$FunctionToCacheLoader.load(CacheLoader.java:169) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.CacheLoader$1.load(CacheLoader.java:192) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LoadingValueReference.loadFuture(LocalCache.java:3570) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.loadSync(LocalCache.java:2312) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.lockedGetOrLoad(LocalCache.java:2189) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2079) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache.get(LocalCache.java:4011) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache.getOrLoad(LocalCache.java:4034) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LocalLoadingCache.get(LocalCache.java:5010) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LocalLoadingCache.getUnchecked(LocalCache.java:5017) ~[spark-dpp-1.0.0.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.get(CachingHiveMetastore.java:623) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.getDb(CachingHiveMetastore.java:284) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.loadDb(CachingHiveMetastore.java:288) ~[starrocks-fe.jar:?]

at com.google.common.cache.CacheLoader$FunctionToCacheLoader.load(CacheLoader.java:169) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.CacheLoader$1.load(CacheLoader.java:192) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LoadingValueReference.loadFuture(LocalCache.java:3570) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.loadSync(LocalCache.java:2312) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.lockedGetOrLoad(LocalCache.java:2189) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2079) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache.get(LocalCache.java:4011) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache.getOrLoad(LocalCache.java:4034) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LocalLoadingCache.get(LocalCache.java:5010) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LocalLoadingCache.getUnchecked(LocalCache.java:5017) ~[spark-dpp-1.0.0.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.get(CachingHiveMetastore.java:623) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.getDb(CachingHiveMetastore.java:284) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetastoreOperations.getDb(HiveMetastoreOperations.java:145) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetadata.getDb(HiveMetadata.java:126) ~[starrocks-fe.jar:?]

at com.starrocks.connector.CatalogConnectorMetadata.getDb(CatalogConnectorMetadata.java:192) ~[starrocks-fe.jar:?]

at com.starrocks.server.MetadataMgr.lambda$getDb$1(MetadataMgr.java:221) ~[starrocks-fe.jar:?]

at java.util.Optional.map(Optional.java:215) ~[?:1.8.0_333]

at com.starrocks.server.MetadataMgr.getDb(MetadataMgr.java:221) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer.resolveTable(QueryAnalyzer.java:970) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer.access$200(QueryAnalyzer.java:97) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.resolveTableRef(QueryAnalyzer.java:296) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitSelect(QueryAnalyzer.java:194) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitSelect(QueryAnalyzer.java:114) ~[starrocks-fe.jar:?]

at com.starrocks.sql.ast.SelectRelation.accept(SelectRelation.java:242) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.process(QueryAnalyzer.java:119) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitQueryRelation(QueryAnalyzer.java:134) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitQueryStatement(QueryAnalyzer.java:124) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitQueryStatement(QueryAnalyzer.java:114) ~[starrocks-fe.jar:?]

at com.starrocks.sql.ast.QueryStatement.accept(QueryStatement.java:56) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.process(QueryAnalyzer.java:119) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer.analyze(QueryAnalyzer.java:107) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.AnalyzerVisitor.visitQueryStatement(AnalyzerVisitor.java:365) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.AnalyzerVisitor.visitQueryStatement(AnalyzerVisitor.java:142) ~[starrocks-fe.jar:?]

at com.starrocks.sql.ast.QueryStatement.accept(QueryStatement.java:56) ~[starrocks-fe.jar:?]

at com.starrocks.sql.ast.AstVisitor.visit(AstVisitor.java:68) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.AnalyzerVisitor.analyze(AnalyzerVisitor.java:144) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.Analyzer.analyze(Analyzer.java:34) ~[starrocks-fe.jar:?]

at com.starrocks.sql.StatementPlanner.plan(StatementPlanner.java:113) ~[starrocks-fe.jar:?]

at com.starrocks.sql.StatementPlanner.plan(StatementPlanner.java:91) ~[starrocks-fe.jar:?]

at com.starrocks.qe.StmtExecutor.execute(StmtExecutor.java:520) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.handleQuery(ConnectProcessor.java:413) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.dispatch(ConnectProcessor.java:607) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.processOnce(ConnectProcessor.java:901) ~[starrocks-fe.jar:?]

at com.starrocks.mysql.nio.ReadListener.lambda$handleEvent$0(ReadListener.java:69) ~[starrocks-fe.jar:?]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_333]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_333]

at java.lang.Thread.run(Thread.java:750) ~[?:1.8.0_333]

Caused by: org.apache.thrift.transport.TTransportException: java.net.SocketTimeoutException: Read timed out

at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:127) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.transport.TTransport.readAll(TTransport.java:86) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.protocol.TBinaryProtocol.readAll(TBinaryProtocol.java:455) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.protocol.TBinaryProtocol.readI32(TBinaryProtocol.java:354) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.protocol.TBinaryProtocol.readMessageBegin(TBinaryProtocol.java:243) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:77) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.recv_get_database(ThriftHiveMetastore.java:1151) ~[hive-apache-3.1.2-13.jar:?]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.get_database(ThriftHiveMetastore.java:1138) ~[hive-apache-3.1.2-13.jar:?]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.getDatabase(HiveMetaStoreClient.java:1068) ~[starrocks-fe.jar:?]

at sun.reflect.GeneratedMethodAccessor6.invoke(Unknown Source) ~[?:?]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_333]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_333]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:208) ~[hive-apache-3.1.2-13.jar:?]

at com.sun.proxy.$Proxy44.getDatabase(Unknown Source) ~[?:?]

... 69 more

Caused by: java.net.SocketTimeoutException: Read timed out

at java.net.SocketInputStream.socketRead0(Native Method) ~[?:1.8.0_333]

at java.net.SocketInputStream.socketRead(SocketInputStream.java:116) ~[?:1.8.0_333]

at java.net.SocketInputStream.read(SocketInputStream.java:171) ~[?:1.8.0_333]

at java.net.SocketInputStream.read(SocketInputStream.java:141) ~[?:1.8.0_333]

at java.io.BufferedInputStream.fill(BufferedInputStream.java:246) ~[?:1.8.0_333]

at java.io.BufferedInputStream.read1(BufferedInputStream.java:286) ~[?:1.8.0_333]

at java.io.BufferedInputStream.read(BufferedInputStream.java:345) ~[?:1.8.0_333]

at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:125) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.transport.TTransport.readAll(TTransport.java:86) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.protocol.TBinaryProtocol.readAll(TBinaryProtocol.java:455) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.protocol.TBinaryProtocol.readI32(TBinaryProtocol.java:354) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.protocol.TBinaryProtocol.readMessageBegin(TBinaryProtocol.java:243) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:77) ~[libthrift-0.13.0.jar:0.13.0]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.recv_get_database(ThriftHiveMetastore.java:1151) ~[hive-apache-3.1.2-13.jar:?]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.get_database(ThriftHiveMetastore.java:1138) ~[hive-apache-3.1.2-13.jar:?]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.getDatabase(HiveMetaStoreClient.java:1068) ~[starrocks-fe.jar:?]

at sun.reflect.GeneratedMethodAccessor6.invoke(Unknown Source) ~[?:?]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_333]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_333]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:208) ~[hive-apache-3.1.2-13.jar:?]

at com.sun.proxy.$Proxy44.getDatabase(Unknown Source) ~[?:?]

... 69 more

2024-04-29 17:36:38.332+08:00 ERROR (starrocks-mysql-nio-pool-1302|1558644) [HiveMetaClient.callRPC():170] An exception occurred when using the current long link to access metastore. msg: Failed to get database ion_data

2024-04-29 17:36:38.333+08:00 INFO (starrocks-mysql-nio-pool-1302|1558644) [HiveMetaStoreClient.close():624] Closed a connection to metastore, current connections: 0

2024-04-29 17:36:38.333+08:00 ERROR (starrocks-mysql-nio-pool-1302|1558644) [CachingHiveMetastore.get():625] Error occurred when loading cache

com.google.common.util.concurrent.UncheckedExecutionException: com.starrocks.connector.exception.StarRocksConnectorException: Failed to get database ion_data, msg: null

at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2085) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache.get(LocalCache.java:4011) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache.getOrLoad(LocalCache.java:4034) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LocalLoadingCache.get(LocalCache.java:5010) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LocalLoadingCache.getUnchecked(LocalCache.java:5017) ~[spark-dpp-1.0.0.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.get(CachingHiveMetastore.java:623) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.getDb(CachingHiveMetastore.java:284) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.loadDb(CachingHiveMetastore.java:288) ~[starrocks-fe.jar:?]

at com.google.common.cache.CacheLoader$FunctionToCacheLoader.load(CacheLoader.java:169) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.CacheLoader$1.load(CacheLoader.java:192) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LoadingValueReference.loadFuture(LocalCache.java:3570) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.loadSync(LocalCache.java:2312) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.lockedGetOrLoad(LocalCache.java:2189) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2079) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache.get(LocalCache.java:4011) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache.getOrLoad(LocalCache.java:4034) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LocalLoadingCache.get(LocalCache.java:5010) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LocalLoadingCache.getUnchecked(LocalCache.java:5017) ~[spark-dpp-1.0.0.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.get(CachingHiveMetastore.java:623) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.getDb(CachingHiveMetastore.java:284) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetastoreOperations.getDb(HiveMetastoreOperations.java:145) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetadata.getDb(HiveMetadata.java:126) ~[starrocks-fe.jar:?]

at com.starrocks.connector.CatalogConnectorMetadata.getDb(CatalogConnectorMetadata.java:192) ~[starrocks-fe.jar:?]

at com.starrocks.server.MetadataMgr.lambda$getDb$1(MetadataMgr.java:221) ~[starrocks-fe.jar:?]

at java.util.Optional.map(Optional.java:215) ~[?:1.8.0_333]

at com.starrocks.server.MetadataMgr.getDb(MetadataMgr.java:221) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer.resolveTable(QueryAnalyzer.java:970) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer.access$200(QueryAnalyzer.java:97) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.resolveTableRef(QueryAnalyzer.java:296) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitSelect(QueryAnalyzer.java:194) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitSelect(QueryAnalyzer.java:114) ~[starrocks-fe.jar:?]

at com.starrocks.sql.ast.SelectRelation.accept(SelectRelation.java:242) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.process(QueryAnalyzer.java:119) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitQueryRelation(QueryAnalyzer.java:134) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitQueryStatement(QueryAnalyzer.java:124) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.visitQueryStatement(QueryAnalyzer.java:114) ~[starrocks-fe.jar:?]

at com.starrocks.sql.ast.QueryStatement.accept(QueryStatement.java:56) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer$Visitor.process(QueryAnalyzer.java:119) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.QueryAnalyzer.analyze(QueryAnalyzer.java:107) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.AnalyzerVisitor.visitQueryStatement(AnalyzerVisitor.java:365) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.AnalyzerVisitor.visitQueryStatement(AnalyzerVisitor.java:142) ~[starrocks-fe.jar:?]

at com.starrocks.sql.ast.QueryStatement.accept(QueryStatement.java:56) ~[starrocks-fe.jar:?]

at com.starrocks.sql.ast.AstVisitor.visit(AstVisitor.java:68) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.AnalyzerVisitor.analyze(AnalyzerVisitor.java:144) ~[starrocks-fe.jar:?]

at com.starrocks.sql.analyzer.Analyzer.analyze(Analyzer.java:34) ~[starrocks-fe.jar:?]

at com.starrocks.sql.StatementPlanner.plan(StatementPlanner.java:113) ~[starrocks-fe.jar:?]

at com.starrocks.sql.StatementPlanner.plan(StatementPlanner.java:91) ~[starrocks-fe.jar:?]

at com.starrocks.qe.StmtExecutor.execute(StmtExecutor.java:520) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.handleQuery(ConnectProcessor.java:413) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.dispatch(ConnectProcessor.java:607) ~[starrocks-fe.jar:?]

at com.starrocks.qe.ConnectProcessor.processOnce(ConnectProcessor.java:901) ~[starrocks-fe.jar:?]

at com.starrocks.mysql.nio.ReadListener.lambda$handleEvent$0(ReadListener.java:69) ~[starrocks-fe.jar:?]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_333]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_333]

at java.lang.Thread.run(Thread.java:750) ~[?:1.8.0_333]

Caused by: com.starrocks.connector.exception.StarRocksConnectorException: Failed to get database ion_data, msg: null

at com.starrocks.connector.hive.HiveMetaClient.callRPC(HiveMetaClient.java:164) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetaClient.callRPC(HiveMetaClient.java:150) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetaClient.getDb(HiveMetaClient.java:252) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.HiveMetastore.getDb(HiveMetastore.java:94) ~[starrocks-fe.jar:?]

at com.starrocks.connector.hive.CachingHiveMetastore.loadDb(CachingHiveMetastore.java:288) ~[starrocks-fe.jar:?]

at com.google.common.cache.CacheLoader$FunctionToCacheLoader.load(CacheLoader.java:169) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.CacheLoader$1.load(CacheLoader.java:192) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$LoadingValueReference.loadFuture(LocalCache.java:3570) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.loadSync(LocalCache.java:2312) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.lockedGetOrLoad(LocalCache.java:2189) ~[spark-dpp-1.0.0.jar:?]

at com.google.common.cache.LocalCache$Segment.get(LocalCache.java:2079) ~[spark-dpp-1.0.0.jar:?]

... 54 more
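The traces above are long, so a small sketch for pulling out the `Caused by:` chain may help when reading them. The function name `cause_chain` is hypothetical, and the sample is a shortened copy of the first trace in this post. It only collects the `Caused by:` lines in order; the last one is the innermost cause.

```python
import re
from typing import List

# Matches lines such as "Caused by: java.net.SocketTimeoutException: Read timed out".
CAUSED_BY = re.compile(r"^\s*Caused by:\s*(.+)$")


def cause_chain(log_text: str) -> List[str]:
    """Return the 'Caused by:' messages in the order they appear in the log text."""
    chain = []
    for line in log_text.splitlines():
        match = CAUSED_BY.match(line)
        if match:
            chain.append(match.group(1).strip())
    return chain


# Shortened excerpt of the first stack trace from fe.log in this post.
sample = """\
java.lang.reflect.InvocationTargetException: null
at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_333]
Caused by: org.apache.thrift.transport.TTransportException: java.net.SocketTimeoutException: Read timed out
at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:127) ~[libthrift-0.13.0.jar:0.13.0]
Caused by: java.net.SocketTimeoutException: Read timed out
at java.net.SocketInputStream.socketRead0(Native Method) ~[?:1.8.0_333]
"""

chain = cause_chain(sample)
print(chain[-1])  # prints "java.net.SocketTimeoutException: Read timed out"
```

For the first trace, the innermost cause is the socket read timeout while waiting for the metastore's reply to `get_database`.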